A low-resolution display can produce super-resolution images using AI-designed structured materials

In augmented and virtual reality (AR/VR), holographic image displays, which use coherent illumination to mimic 3D optical waves, are among the most promising technologies. Because they could simplify the optical setup of wearable displays, holographic displays may enable more compact and lightweight designs.

However, a truly immersive AR/VR experience requires relatively high-resolution images displayed over a sufficiently wide field of view, matching the resolution and viewing angle of the human eye. In existing holographic image projection systems, this is limited by the number of independently controllable pixels in the image projector.

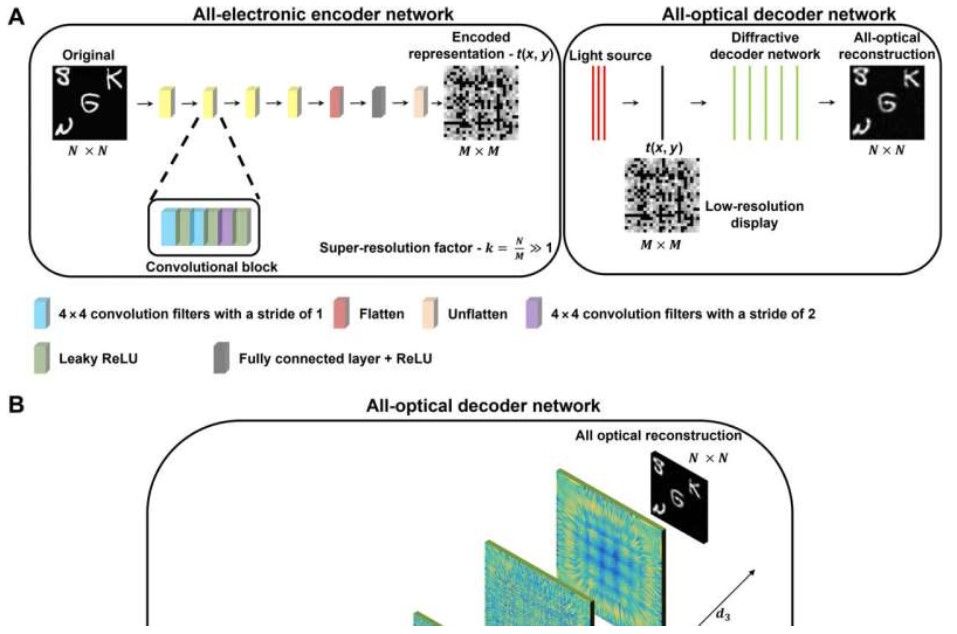

In a paper titled "Super-resolution image display using diffractive decoders," published in Science Advances, UCLA researchers led by Professor Aydogan Ozcan describe a transmissive material, designed with deep learning, that projects super-resolved images from low-resolution displays. The team used deep learning to spatially engineer transmissive diffractive layers at the wavelength scale, creating a material-based physical image decoder that performs super-resolution image projection as light passes through its layers.

Imagine a stream of high-resolution images, stored in the cloud or on your local computer, waiting to be sent to your wearable display. Rather than transmitting these images directly, the new approach first runs them through a digital neural network (the encoder), which compresses them into lower-resolution images.
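To illustrate only the change in pixel count, the sketch below uses block averaging as a stand-in for the encoder. This is an assumption: the encoder in the study is a trained digital neural network, not average pooling, and `encode_stand_in` and the 64 × 64 frame size are hypothetical.

```python
import numpy as np

def encode_stand_in(image: np.ndarray, factor: int = 4) -> np.ndarray:
    """Hypothetical stand-in for the neural-network encoder: block-average downsampling.

    It only reproduces the pixel-count reduction, not what a trained encoder learns.
    """
    h, w = image.shape
    if h % factor or w % factor:
        raise ValueError("image dimensions must be divisible by factor")
    # Group pixels into factor x factor blocks and average each block.
    blocks = image.reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

high_res = np.random.default_rng(0).random((64, 64))  # hypothetical 64x64 frame
low_res = encode_stand_in(high_res)
print(high_res.shape, "->", low_res.shape)  # (64, 64) -> (16, 16)
print(high_res.size // low_res.size)        # 16
```

A trained encoder would instead choose the low-resolution pixel values so that the optical decoder reconstructs the original frame, which simple averaging cannot do.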

Unlike other digital image compression methods, this one does not rely on a computer to decode or decompress the images. Instead, light from the low-resolution display passes through the thin layers of a diffractive decoder, which optically decompresses it and projects the high-resolution image. Because the transparent diffractive decoder is thin and the decompression relies solely on light diffraction through a passive structure, the process is extremely fast.
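The decoding step described above can be sketched as a generic scalar-diffraction forward model. This sketch assumes NumPy, the angular spectrum method for free-space propagation between layers, phase-only layers, and display pixel values treated as field amplitudes. The function names, layer count, spacing and sampling are hypothetical, and the layers are random rather than trained, so the output is not a super-resolved image; in the published work the layer patterns are optimized with deep learning.

```python
import numpy as np

def angular_spectrum(field, wavelength, dx, distance):
    """Free-space propagation of a square complex field (angular spectrum method)."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=dx)                    # spatial frequencies
    FX, FY = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))  # axial wavenumber
    # Transfer function; evanescent components (arg <= 0) are discarded.
    H = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * H)

def diffractive_decoder(low_res, phases, upsample, wavelength, dx, distance):
    """Hypothetical forward model: display -> [propagate -> phase layer]* -> propagate."""
    # Each display pixel fills an upsample x upsample patch of the finer simulation grid.
    field = np.kron(low_res, np.ones((upsample, upsample))).astype(complex)
    for phase in phases:
        field = angular_spectrum(field, wavelength, dx, distance)
        field = field * np.exp(1j * phase)          # passive, phase-only layer
    field = angular_spectrum(field, wavelength, dx, distance)
    return np.abs(field) ** 2                       # detected intensity

# Hypothetical parameters in units of the wavelength; layers are random, not trained.
rng = np.random.default_rng(0)
low_res = rng.random((16, 16))
phases = [rng.uniform(0, 2 * np.pi, (64, 64)) for _ in range(3)]
out = diffractive_decoder(low_res, phases, upsample=4,
                          wavelength=1.0, dx=0.5, distance=40.0)
print(out.shape)  # (64, 64)
```

No electronics appear in the loop: each layer only multiplies the field by a fixed phase pattern. Optimizing `phases` (for example, by gradient descent through a differentiable version of this model) is what would turn it into a working decoder; that training step is outside this sketch.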

Besides being fast, this diffractive image decoding scheme is energy-efficient, since the only energy consumed during decompression is the illumination light.

The UCLA researchers demonstrated that this diffractive decoder achieves a super-resolution factor of 4 in each lateral direction, a 16-fold increase in the number of useful pixels.
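Written out, for a display with $N_x \times N_y$ independently controllable pixels (symbols introduced here for illustration), a factor of 4 in each lateral direction multiplies the pixel count as:

$$
N_{\text{out}} = (4N_x)(4N_y) = 4^2 \, N_x N_y = 16 \, N_x N_y
$$

For example, a hypothetical 100 × 100-pixel display (10,000 pixels) would yield a 400 × 400 image with 160,000 useful pixels.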

Beyond improving the resolution of projected images, this diffractive image display significantly reduces data transfer and storage requirements. Because high-resolution images are encoded into compact optical representations with fewer pixels, much less information needs to be transmitted to the wearable display.
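A rough sense of the savings comes from comparing uncompressed data rates. The sketch below assumes raw 8-bit RGB frames at 60 frames per second and a hypothetical 1600 × 1600 target image encoded into 400 × 400 display pixels; it ignores conventional video codecs and any differences in how the encoded display values are stored, so the figures are illustrative only.

```python
def raw_bandwidth_bytes_per_s(width, height, channels, bytes_per_channel, fps):
    """Uncompressed data rate of a video stream, in bytes per second."""
    return width * height * channels * bytes_per_channel * fps

# Hypothetical stream: 8-bit RGB (3 channels x 1 byte) at 60 frames per second.
high = raw_bandwidth_bytes_per_s(1600, 1600, 3, 1, 60)  # full-resolution frames
low = raw_bandwidth_bytes_per_s(400, 400, 3, 1, 60)     # 4x fewer pixels per side
print(high / 1e6, "MB/s vs", low / 1e6, "MB/s")
print(high // low)  # 16
```

Under these assumptions the transmitted data rate drops by the same factor of 16 as the pixel count.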

The researchers experimentally demonstrated their diffractive super-resolution image display using 3D-printed diffractive decoders operating in the terahertz part of the electromagnetic spectrum, which is also used by airport security image scanners.

The researchers also reported that their diffractive decoders can display color images using red, green, and blue wavelengths.

Source: UCLA Engineering Institute for Technology Advancement
