Accuracy and Precision Improvements in YOLOv10

YOLOv10, the latest iteration in the YOLO (You Only Look Once) family, pushes the boundaries of object detection. It offers six size options ranging from N to X. This caters to diverse computational needs without compromising on accuracy.

Its NMS-free training approach and efficiency-driven design yield models that need up to 57% fewer parameters and 38% fewer calculations than comparable YOLOv8 models, while improving Average Precision (AP) by up to 1.4%. In short, YOLOv10 does more with less.

When compared to its predecessors and competitors, YOLOv10 shines. The YOLOv10-S variant, for instance, is 1.8 times faster than RT-DETR-R18 on COCO at similar AP, with about 2.8 times fewer parameters and FLOPs. Such advancements pave the way for more efficient and accurate real-time object detection across various applications.

Key Takeaways

- YOLOv10 offers six size options to suit different computational needs

- NMS-free training significantly reduces parameters and calculations

- YOLOv10-S outperforms competitors in speed and efficiency

- Higher mean Average Precision scores achieved across benchmarks

- Supports both single-stage and multi-stage architectures for flexibility

- Efficient utilization of modern hardware resources like GPUs and TPUs

- Fine-tuning on custom datasets yields improved accuracy with less computation

Introduction to YOLOv10 and Its Evolution

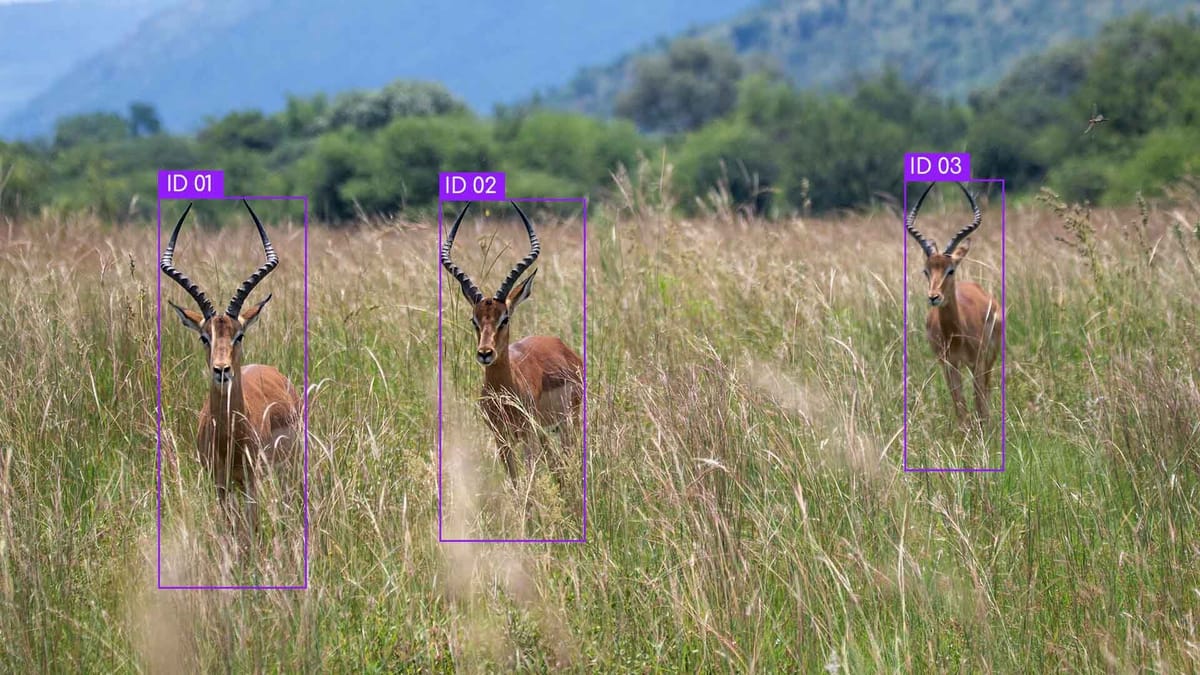

The YOLO evolution has revolutionized real-time object detection. YOLOv10, the latest version, offers significant improvements in object detection. It builds on previous versions' strengths, introducing new features for better efficiency and accuracy.

Brief history of YOLO models

Since their start, YOLO models have evolved significantly. Each version has expanded real-time detection capabilities. The journey from YOLOv1 to YOLOv10 highlights a consistent focus on improving object detection technology.

Key advancements in YOLOv10

YOLOv10 brings two major enhancements: Consistent Dual Assignments for NMS-free Training and an Efficiency-Accuracy Driven Model Design. These upgrades aim to improve performance while cutting computational cost. The model design changes include:

- Lightweight classification head

- Spatial-channel decoupled downsampling

- Rank-guided block design

- Large kernel convolutions

Significance in real-time object detection

YOLOv10's impact on real-time detection is significant. It achieves faster inference speeds without compromising accuracy. For example, YOLOv10-S is 1.8 times faster than RT-DETR-R18 at similar AP, and YOLOv10-B reduces latency by 46% compared to YOLOv9-C while maintaining equivalent performance.

| Model | Speed Improvement | Performance |
|---|---|---|
| YOLOv10-S | 1.8× faster than RT-DETR-R18 | Similar accuracy |
| YOLOv10-B | 46% latency reduction vs YOLOv9-C | Equivalent performance |

These advancements in YOLOv10 represent a major leap in object detection technology. They promise enhanced efficiency and accuracy for various real-world applications.

Understanding the YOLOv10 Architecture

Released in May 2024, YOLOv10 represents a major advancement in real-time object detection. It builds upon the success of earlier YOLO models, introducing new features to enhance performance and efficiency.

The YOLOv10 structure is composed of three essential parts:

- Backbone for feature extraction

- Neck for feature fusion

- Head for object detection

The backbone employs an advanced CNN design with spatial pyramid pooling blocks. This boosts its ability to handle visual data across various scales. The neck features a Path Aggregation Network module with extra up-sampling layers, enhancing feature fusion. On top of this layout, YOLOv10 adds the following design changes:

- Lightweight classification head

- Spatial-channel decoupled downsampling

- Rank-guided block design

- Large-kernel convolutions

- Partial self-attention module

These improvements aim to optimize performance while reducing computational costs. The feature extraction process is streamlined through spatial-channel decoupled downsampling. This reduces parameters and computational needs.

YOLOv10 comes in six model sizes, from Nano (2.3 million parameters) to Extra Large (29.5 million parameters). This range caters to different use cases. The smallest model has a reported latency of 1.84 ms per image, which corresponds to roughly 540 images per second and makes it well suited to edge devices.

With its efficient design and advanced techniques, YOLOv10 outperforms its predecessors in both performance and latency. It solidifies its status as a leading-edge solution for real-time object detection tasks.

NMS-Free Training: A Revolutionary Approach

YOLOv10 introduces a groundbreaking NMS-free training approach, transforming real-time object detection. This innovative technique addresses limitations of traditional Non-Maximum Suppression (NMS) methods. It paves the way for enhanced performance in edge computing applications.

Limitations of Traditional NMS Methods

Traditional NMS methods often struggle with overlapping objects and complex scenes. These challenges can lead to missed detections and reduced accuracy, especially in high-speed scenarios. YOLOv10's NMS-free training tackles these issues head-on, offering a more robust solution for real-time detection tasks.
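To make the limitation concrete, here is a minimal sketch of classic greedy NMS in plain Python. It is not YOLOv10 code; the box coordinates, scores, and the 0.5 IoU threshold are illustrative assumptions. Note how the second box is discarded purely because it overlaps a higher-scoring box, which is exactly what goes wrong when two real objects overlap heavily.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: List[Box], scores: List[float],
               iou_threshold: float = 0.5) -> List[int]:
    """Return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Hypothetical detections: boxes 0 and 1 overlap heavily, box 2 is far away.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = greedy_nms(boxes, scores)
print(kept)  # box 1 is suppressed even if it was a distinct object
```

The pairwise IoU checks also make the cost grow with the number of candidate boxes, which is the post-processing overhead an NMS-free design aims to avoid.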

How NMS-Free Training Works in YOLOv10

YOLOv10 employs a consistent dual assignments strategy for NMS-free training. During training, a one-to-many head assigns several positive predictions to each object, while a one-to-one head assigns a single best-matching prediction. During inference, only the one-to-one head is used, so each object yields one prediction with its own confidence score and no NMS pass is required. This streamlined process reduces inference time without compromising accuracy.
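As a rough sketch of what NMS-free post-processing can look like: if the detection head already emits at most one confident prediction per object, the remaining work is a confidence filter and a cap on the number of detections. The function name `select_detections`, the 0.25 threshold, and the `max_det` value are illustrative assumptions, not YOLOv10's actual API.

```python
from typing import List, Tuple

# (box as (x1, y1, x2, y2), confidence score, class id)
Detection = Tuple[Tuple[float, float, float, float], float, int]


def select_detections(dets: List[Detection],
                      score_threshold: float = 0.25,
                      max_det: int = 300) -> List[Detection]:
    """Keep confident predictions, best first. No pairwise IoU checks."""
    confident = [d for d in dets if d[1] >= score_threshold]
    confident.sort(key=lambda d: d[1], reverse=True)
    return confident[:max_det]


# Hypothetical raw head output: one low-confidence prediction to discard.
raw = [
    ((0.0, 0.0, 10.0, 10.0), 0.91, 0),
    ((1.0, 1.0, 11.0, 11.0), 0.04, 0),
    ((20.0, 20.0, 30.0, 30.0), 0.78, 2),
]
final = select_detections(raw)
print([d[2] for d in final])
```

Unlike greedy NMS, this step never compares boxes against each other, so its cost is dominated by a single sort.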

Benefits for Edge Deployment

The NMS-free training approach offers significant advantages for edge computing applications:

- Reduced computational overhead

- Faster inference speeds

- Improved real-time performance

- Enhanced object detection efficiency in resource-constrained environments

YOLOv10's NMS-free training has led to impressive results. The YOLOv10-S model achieves an AP of 46.3 with a latency of just 2.49 ms. This makes it 1.8 times faster than RT-DETR-R18 with similar performance. This breakthrough in inference speed and edge computing efficiency positions YOLOv10 as a game-changer in real-time object detection.

Consistent Dual Assignments Strategy

YOLOv10 introduces a groundbreaking approach to object detection with its consistent dual assignments strategy. This innovative technique combines one-to-many and one-to-one label assignments. It revolutionizes the way models are supervised during training.

The dual assignments strategy incorporates a new one-to-one head that mirrors the structure of the one-to-many branch. This design provides rich supervision and enables efficient, end-to-end inference. By aligning both branches through a consistent matching metric, YOLOv10 enhances model supervision and boosts overall performance.
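The idea of a consistent matching metric can be sketched in a few lines. The sketch below assumes the metric form described in the YOLOv10 paper, m = s · p^α · IoU^β, where s is a spatial prior, p the classification score, and IoU the overlap with the ground-truth box. The candidate values and the α, β settings are illustrative assumptions. When the one-to-one exponents are a common multiple r of the one-to-many exponents, both branches rank candidates identically, so the one-to-one pick is also the best one-to-many positive.

```python
from typing import List, Tuple

Candidate = Tuple[float, float, float]  # (spatial prior, class score, IoU)


def matching_metric(prior: float, cls_score: float, iou: float,
                    alpha: float, beta: float) -> float:
    """m = s * p^alpha * IoU^beta for one prediction and one ground truth."""
    return prior * (cls_score ** alpha) * (iou ** beta)


def rank_candidates(cands: List[Candidate], alpha: float, beta: float) -> List[int]:
    """Candidate indices sorted by matching metric, best first."""
    scores = [matching_metric(s, p, u, alpha, beta) for s, p, u in cands]
    return sorted(range(len(cands)), key=lambda i: scores[i], reverse=True)


# Hypothetical predictions competing for a single ground-truth object.
candidates = [
    (1.0, 0.90, 0.80),
    (1.0, 0.60, 0.85),
    (1.0, 0.85, 0.60),
    (0.0, 0.95, 0.10),  # anchor point outside the object, so prior is 0
    (1.0, 0.40, 0.50),
]

ALPHA_O2M, BETA_O2M = 0.5, 6.0  # illustrative values, not confirmed defaults

# One-to-many branch: the top-k candidates become positives (k = 3 here).
o2m_positives = rank_candidates(candidates, ALPHA_O2M, BETA_O2M)[:3]

# One-to-one branch with consistent exponents (scaled by a common factor r).
consistent_picks = [
    rank_candidates(candidates, r * ALPHA_O2M, r * BETA_O2M)[0]
    for r in (0.5, 1.0, 2.0)
]

# An inconsistent choice of exponents can select a different prediction.
inconsistent_pick = rank_candidates(candidates, 4.0, 1.0)[0]

print(o2m_positives, consistent_picks, inconsistent_pick)
```

With consistent exponents the one-to-one head is supervised toward the same prediction the one-to-many head considers best, which is the alignment the strategy relies on.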

One of the key benefits of this approach is the reduced reliance on non-maximum suppression (NMS) for post-processing. This results in lower computational overhead and improved efficiency. The consistent dual assignments strategy allows YOLOv10 to achieve remarkable improvements in both speed and accuracy:

- YOLOv10-S is 1.8 times faster than RT-DETR-R18 with similar accuracy

- YOLOv10-B shows 46% less latency and 25% fewer parameters than YOLOv9-C

- YOLOv10-L achieves 53.4 mAP val 50-95 with just 7.28 ms latency

The consistent dual assignments strategy exemplifies YOLOv10's commitment to balancing efficiency and accuracy in real-time object detection. By optimizing label assignment and model supervision, YOLOv10 sets a new standard for performance in applications ranging from autonomous vehicles to industrial automation.

| Model | mAP val 50-95 | Latency (ms) | Parameters |
|---|---|---|---|
| YOLOv10-S | 46.3% | 2.49 | 7.2M |
| YOLOv10-B | 52.5% | 5.74 | 19.1M |
| YOLOv10-L | 53.4% | 7.28 | 24.4M |
| YOLOv10-X | 54.4% | 10.70 | 29.5M |

Improvements in YOLOv10

YOLOv10 marks a significant leap in object detection technology. It boasts enhanced performance, improved detection accuracy, and increased model efficiency. These advancements are seen across different scales and applications.

Enhanced Accuracy Metrics

YOLOv10 showcases remarkable accuracy improvements. Compared with their YOLOv8 counterparts, the N/S/M/L/X variants gain 0.3% to 1.4% AP while using 28% to 57% fewer parameters and 23% to 38% fewer calculations.

Precision Advancements

YOLOv10's precision has seen notable enhancements. For example, YOLOv10-N and YOLOv10-S outperform YOLOv6-3.0-N and YOLOv6-3.0-S by 1.5 and 2.0 AP, respectively. These advancements are crucial for applications requiring high-precision object detection.

Performance Comparisons

YOLOv10 outshines previous versions and competitors in efficiency and performance:

- YOLOv10-L outperforms Gold-YOLO-L with 32% less latency, 1.4% AP improvement, and 68% fewer parameters.

- YOLOv10-S is 1.8 times faster than RT-DETR-R18 with similar AP on COCO, using 2.8 times fewer parameters and FLOPs.

- YOLOv10-B shows a 46% reduction in latency and 25% fewer parameters compared to YOLOv9-C, maintaining equivalent performance.

| Model | AP Improvement | Latency Reduction | Parameter Reduction |
|---|---|---|---|
| YOLOv10-L vs Gold-YOLO-L | 1.4% | 32% | 68% |
| YOLOv10-S vs RT-DETR-R18 | Similar | 44% (1.8× faster) | 64% (2.8× fewer) |
| YOLOv10-B vs YOLOv9-C | Equivalent | 46% | 25% |

These advancements highlight YOLOv10's superior model efficiency and detection accuracy. It emerges as a powerful tool for real-time object detection across various industries and applications.
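The comparison mixes "times faster" factors with percentage reductions. The two are different views of the same ratio, and the short sketch below converts between them. The helper names are made up for illustration.

```python
def reduction_from_factor(factor: float) -> float:
    """Percent reduction implied by 'factor times faster' or 'factor times fewer'."""
    return (1.0 - 1.0 / factor) * 100.0


def factor_from_reduction(percent: float) -> float:
    """Speedup (or shrink) factor implied by a percent reduction."""
    return 1.0 / (1.0 - percent / 100.0)


# 1.8x faster means latency drops to 1/1.8 of the baseline.
latency_cut = reduction_from_factor(1.8)
# 2.8x fewer parameters means the model keeps 1/2.8 of them.
param_cut = reduction_from_factor(2.8)
# A 46% latency reduction expressed as a speedup factor.
speedup_b = factor_from_reduction(46.0)

print(round(latency_cut), round(param_cut), round(speedup_b, 2))
```

This is why a 1.8× speedup appears as a 44% latency reduction, and why a 46% reduction is slightly better than 1.8× when expressed as a factor.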

Core Algorithms and Techniques in YOLOv10

YOLOv10 algorithms introduce significant advancements in model optimization and feature extraction. This latest iteration employs cutting-edge techniques. These enhance both efficiency and performance.

Lightweight Classification Head

YOLOv10 uses a lightweight classification head, powered by depthwise separable convolutions. This method greatly reduces computational demands. It maintains accuracy, making it perfect for real-time applications.
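A quick parameter count shows why depthwise separable convolutions are cheaper than standard ones. The channel width (256) and kernel size (3) below are illustrative assumptions rather than YOLOv10's actual head configuration, and biases and normalization layers are ignored.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (no bias)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


standard = standard_conv_params(256, 256, 3)
separable = depthwise_separable_params(256, 256, 3)
print(standard, separable, round(standard / separable, 2))
```

For these assumed sizes the separable version needs roughly one ninth of the weights, which is where the reduced computational demand comes from.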

Spatial-Channel Decoupled Downsampling

The spatial-channel decoupled downsampling technique in YOLOv10 balances image detail preservation with computational efficiency. It enables more effective feature extraction without compromising speed.
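The saving can be estimated with simple arithmetic. The sketch below assumes the scheme described in the YOLOv10 paper: a 1×1 pointwise convolution first changes the channel count, then a 3×3 depthwise convolution with stride 2 halves the resolution. It is compared against a single 3×3 stride-2 standard convolution that does both at once. The feature map size and channel counts are illustrative, and biases and normalization are ignored.

```python
from typing import Tuple


def standard_downsample(c_in: int, c_out: int, h: int, w: int,
                        k: int = 3) -> Tuple[int, int]:
    """(params, multiply-accumulates) of one k x k stride-2 standard conv."""
    params = k * k * c_in * c_out
    macs = (h // 2) * (w // 2) * params  # evaluated at the output resolution
    return params, macs


def decoupled_downsample(c_in: int, c_out: int, h: int, w: int,
                         k: int = 3) -> Tuple[int, int]:
    """(params, MACs) of a 1 x 1 pointwise conv, then a k x k stride-2 depthwise conv."""
    pw_params = c_in * c_out
    pw_macs = h * w * pw_params              # channel change at full resolution
    dw_params = k * k * c_out
    dw_macs = (h // 2) * (w // 2) * dw_params  # spatial reduction per channel
    return pw_params + dw_params, pw_macs + dw_macs


# Hypothetical stage: 80 x 80 feature map, 128 -> 256 channels.
std_params, std_macs = standard_downsample(128, 256, 80, 80)
dec_params, dec_macs = decoupled_downsample(128, 256, 80, 80)
print(std_params, dec_params)
print(std_macs, dec_macs)
```

Under these assumptions the decoupled version uses about 8 times fewer weights and less than half the multiply-accumulates.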

Rank-Guided Block Design

YOLOv10's rank-guided block design strategically allocates blocks across the network. This optimization technique ensures peak performance. It places computational resources where they're most needed.

Large-Kernel Convolution Implementation

In deeper network stages, YOLOv10 selectively implements large-kernel convolutions. This boosts complex feature detection. It maintains inference efficiency, crucial for real-time object detection.

| YOLOv10 Variant | Performance Improvement | Latency Reduction |
|---|---|---|
| YOLOv10-S | Faster than RT-DETR-R18 | 4.63 ms reduction |
| YOLOv10-B | Similar to YOLOv9-C | 46% reduction |
| YOLOv10-M | Matches YOLOv9-M/MS | 0.65 ms reduction |

These core techniques synergize to create a model that excels in speed, accuracy, and efficiency. YOLOv10 surpasses its predecessors in both velocity and precision. It sets new benchmarks in object detection tasks.

YOLOv10 Performance Benchmarks

YOLOv10 redefines object detection, offering models for different needs. These models balance speed and accuracy, fitting various applications.

The YOLOv10 benchmarks show major leaps over earlier versions. Here are the performance metrics for different YOLOv10 models:

| Model | Parameters | FLOPs | mAP val 50-95 | Latency (ms) |
|---|---|---|---|---|
| YOLOv10-N | 2.3M | 6.7G | 39.5 | 1.84 |
| YOLOv10-S | 7.2M | 21.6G | 46.8 | 2.49 |
| YOLOv10-M | 15.4M | 59.1G | 51.3 | 4.74 |
| YOLOv10-L | 24.4M | 120.3G | 53.4 | 7.28 |

These benchmarks show YOLOv10's high performance and low resource usage. The YOLOv10-N model, with 2.3M parameters, reaches a mAP val 50-95 of 39.5 at 1.84 ms latency. It is well suited to real-time detection on devices with limited resources.

The YOLOv10-L model excels in accuracy, achieving a mAP val 50-95 of 53.4 at 7.28 ms latency. This versatility lets users pick the best model for their needs, balancing speed and accuracy.
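Latency figures translate directly into an upper bound on throughput. The sketch below converts the per-image latencies quoted for YOLOv10-N and YOLOv10-S into frames per second. It assumes one image at a time and ignores image loading, pre-processing, and batching, so real pipelines will differ.

```python
def frames_per_second(latency_ms: float) -> float:
    """Ideal single-stream throughput for a given per-image latency."""
    return 1000.0 / latency_ms


fps_n = frames_per_second(1.84)  # YOLOv10-N latency from the table
fps_s = frames_per_second(2.49)  # YOLOv10-S latency from the table
print(round(fps_n), round(fps_s))
```

A camera running at 30 FPS leaves about 33 ms per frame, so both latencies leave considerable headroom for the rest of the pipeline.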

YOLOv10's better performance comes from its advanced architecture. It includes sequential convolution layers and a mix of grouped and pointwise convolutions. These improvements reduce parameters and enhance feature extraction, leading to more accurate detection in various scenarios.

Real-world Applications and Use Cases

YOLOv10 has revolutionized computer vision, offering significant advancements across industries. Its enhanced accuracy and speed in real-time detection applications mark a significant leap forward. This model is transforming various sectors with its cutting-edge capabilities.

Autonomous Vehicles

In the field of self-driving cars, YOLOv10 stands out. It boasts a 27% improvement in detecting small objects compared to YOLOv8. This means vehicles can identify pedestrians, other cars, and obstacles more accurately. Such precision is vital for safer roads and smoother navigation in dense urban settings.

Surveillance Systems

Security operations see substantial benefits from YOLOv10's efficiency. It can handle multiple video streams at 120 FPS, enabling real-time monitoring of vast areas. Its 32% lower false positive rate in crowded scenes also reduces false alarms, enhancing threat detection reliability.

Industrial Automation

YOLOv10 is transforming factory and warehouse operations. Its high accuracy and low latency make it perfect for quality control, inventory management, and guiding robots. The model's 30% lower memory consumption allows for seamless deployment on edge devices. This brings smart automation to production lines and logistics centers.

FAQ

What is the key innovation in YOLOv10 that sets it apart from previous versions?

YOLOv10 introduces an NMS-free training method built on a consistent dual assignments strategy. A one-to-many head provides rich supervision during training, while a one-to-one head produces a single prediction per object, each with its own confidence score, at inference. This eliminates the need for traditional Non-Maximum Suppression (NMS) post-processing.

How does the NMS-free training approach benefit edge computing applications?

The NMS-free training method brings several advantages to edge computing. It reduces computational overhead and speeds up inference. It also improves real-time performance and enhances object detection efficiency in resource-constrained environments.

What is the consistent dual assignments strategy in YOLOv10, and how does it work?

YOLOv10's consistent dual assignments strategy combines one-to-many and one-to-one label assignments. It includes a new one-to-one head, mirroring the structure and optimization of the one-to-many branch. This strategy provides rich supervision during training and enables efficient, end-to-end inference. It minimizes reliance on NMS for post-processing.

How does YOLOv10 compare to its predecessors and rivals in terms of performance?

YOLOv10 shows significant improvements over its predecessors and rivals. For instance, YOLOv10-L outperforms Gold-YOLO-L with 32% less latency, a 1.4% AP improvement, and 68% fewer parameters. YOLOv10-S is 1.8 times faster than RT-DETR-R18 with similar AP on COCO, using 2.8 times fewer parameters and FLOPs.

What are the core techniques introduced in YOLOv10 to enhance its efficiency and performance?

YOLOv10 introduces several key techniques. These include a lightweight classification head, spatial-channel decoupled downsampling, rank-guided block design, and large-kernel convolutions. These techniques work together to produce a model that excels in speed, accuracy, and efficiency. It surpasses its predecessors in both velocity and precision.

What are some real-world applications and use cases for YOLOv10?

YOLOv10's improved performance and efficiency make it ideal for various real-world applications. It is suitable for autonomous vehicles for enhanced safety and navigation. It is also beneficial for surveillance systems for threat detection and monitoring. Additionally, it is useful in industrial automation for quality control, inventory management, and robotic guidance.
