Adaptive Prompting: Dynamic Instructions Based on Model Feedback

Adaptive prompting adjusts the instructions given to a large language model based on the model's own outputs and on feedback, instead of relying on a single fixed prompt. This matters most for tasks that need multi-step reasoning, where a fixed prompt often gives inconsistent results. Developing and integrating adaptive methods helps AI models perform reliably across NLP tasks of varying complexity.

Key Takeaways

- Adaptive prompts improve the performance of AI models across NLP tasks.

- Dynamic instructions adjust an AI model's behavior based on feedback.

- Adaptive prompts overcome the limitations of existing chain-of-thought methods.

- Self-adaptive mechanisms improve AI model responses.

What is Adaptive Prompting?

Adaptive prompts are prompts that are generated or modified based on context analysis and previous interactions. Unlike static prompts, they can be adjusted in real time as new context or feedback arrives.
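The contrast with a static prompt can be shown in a few lines. This is a minimal sketch, not a reference implementation: the `Conversation` class, the `build_adaptive_prompt` function, and the prompt wording are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical container for the context an adaptive prompt draws on."""
    history: list[str] = field(default_factory=list)   # earlier user messages
    feedback: list[str] = field(default_factory=list)  # corrections from the user


# A static prompt is the same string for every request.
STATIC_PROMPT = "Answer the user's question."


def build_adaptive_prompt(question: str, conv: Conversation) -> str:
    """Assemble a prompt from the base instruction plus accumulated context."""
    parts = [STATIC_PROMPT]
    if conv.history:
        # Only the most recent turns are kept so the prompt stays short.
        parts.append("Earlier messages: " + " | ".join(conv.history[-3:]))
    if conv.feedback:
        parts.append("Follow this feedback: " + "; ".join(conv.feedback))
    parts.append("Question: " + question)
    return "\n".join(parts)
```

With an empty `Conversation` the result is just the static instruction plus the question. As history and feedback accumulate, the same call produces a longer, more specific prompt.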

Benefits of adaptive prompts

- Contextual accuracy. Prompts adapt to the specific context and needs of the user, making answers relevant.

- User experience. Users get the information they need at the moment they need it.

- Process automation. The system automatically suggests suitable options.

- Error reduction. Timely and relevant recommendations increase the accuracy of results.

Areas of use

- Chatbots and virtual assistants that change prompts depending on the topic of the dialogue and the user's queries. This ensures that the answers will accurately match the queries.

- In educational platforms, adaptive prompts customize educational content for a specific student.

- In text editors, adaptive suggestions help you formulate sentences or suggest corrections based on your writing style or tone.

- In software development environments (IDEs), adaptive suggestions offer code auto-completion.

- In e-commerce, adaptive suggestions help with personalized recommendations. Systems analyze previous purchases or product reviews and suggest relevant options.

Understanding Zero-Shot and Few-Shot Prompting

Zero-shot prompting is an approach in which an AI model solves a task, including one with new categories, without being given any examples of that task.

Few-shot prompting is an approach in which an AI model performs a task based on a few examples supplied in the prompt.

Basics of Zero-Shot and Few-Shot Learning

In zero-shot prompting, the AI model relies on general knowledge or semantic relationships between classes to make predictions.

For example, this method can be used to translate text into a language for which the model has been given no examples.

In few-shot prompting, the AI model uses a few examples to understand new data. For example, it can be used to analyze a new product based on a handful of reviews.
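At the prompt level, the two approaches differ only in whether worked examples come before the query. The sketch below shows this with two hypothetical helpers, `zero_shot_prompt` and `few_shot_prompt`. The `Input:`/`Output:` layout is an assumption made for illustration, not a required format.

```python
def zero_shot_prompt(task: str, text: str) -> str:
    """Instruction only: the model gets no worked examples."""
    return f"{task}\n\nInput: {text}\nOutput:"


def few_shot_prompt(task: str, examples: list[tuple[str, str]], text: str) -> str:
    """Instruction plus a handful of input/output pairs placed before the query."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{task}\n\n{shots}\n\nInput: {text}\nOutput:"


# Illustrative data: two labeled reviews guide the classification of a third.
reviews = [
    ("Battery died after a week.", "negative"),
    ("Setup took two minutes and it just works.", "positive"),
]
zero = zero_shot_prompt("Classify the review sentiment.", "Screen is too dim.")
few = few_shot_prompt("Classify the review sentiment.", reviews, "Screen is too dim.")
```

Both prompts end with an open `Output:` slot for the model to fill. The few-shot version gives the model the label vocabulary and answer format it is expected to follow.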

Challenges with Static Prompting

- Static prompts are fixed templates for an AI model that do not change depending on the context or situation.

- Static prompts lack guidance for complex tasks, leading to incorrect results.

- Static prompts do not change according to the context, which makes them unsuitable for dynamic situations.

- Such prompts do not allow the AI model to draw on new data or adapt properly to evolving conditions.

- Static prompts can be created based on outdated data, which leads to inaccurate answers or decisions.

Methods for implementing adaptive prompts in modern LLMs

- Contextual adaptation. The AI model analyzes the user's previous requests and adjusts the response to fit the context.

- Prompt generation. The AI model automatically creates prompts based on the user's answers and reactions.

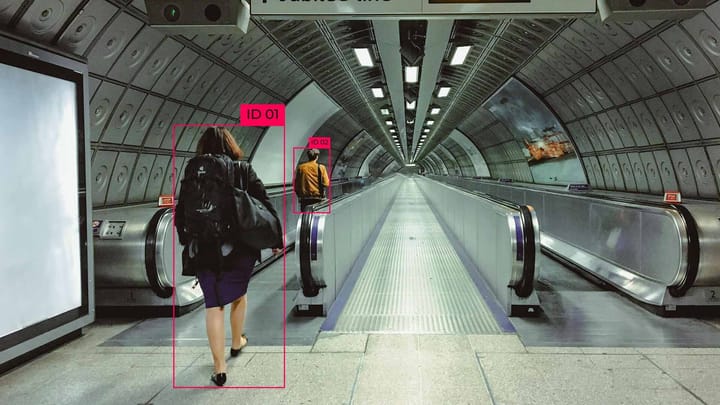

- Multimodal adaptive prompts. Prompts are created based on analyzing text and images in scenarios with multimodal data.

- Feedback-based machine learning. The AI model uses user feedback to make its answers more accurate.
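The feedback-driven methods in this list share one loop: send a prompt, inspect the response, and fold any feedback into the next prompt. The sketch below is one possible shape for that loop. `adaptive_loop`, `toy_model`, and `toy_check` are hypothetical names, and `toy_model` stands in for a real LLM call.

```python
from typing import Callable, Optional


def adaptive_loop(
    task: str,
    model: Callable[[str], str],
    check: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> tuple[str, list[str]]:
    """Re-prompt until `check` returns no feedback or the round budget runs out.

    `model` maps a prompt to a response; `check` returns None when the response
    is acceptable, or a feedback string describing what to fix.
    Returns the last response and the list of prompts that were sent.
    """
    prompt = task
    prompts_sent: list[str] = []
    response = ""
    for _ in range(max_rounds):
        prompts_sent.append(prompt)
        response = model(prompt)
        feedback = check(response)
        if feedback is None:
            break
        # Fold the feedback into the next prompt instead of reusing a fixed template.
        prompt = f"{task}\nPrevious answer: {response}\nFeedback: {feedback}"
    return response, prompts_sent


def toy_model(prompt: str) -> str:
    # Stand-in for an LLM call: it answers tersely only once feedback asks for it.
    return "42" if "Feedback:" in prompt else "The answer is probably around 42, I think."


def toy_check(response: str) -> Optional[str]:
    # Accept a bare number; otherwise say what to change.
    return None if response.isdigit() else "Reply with the number only."


answer, prompts = adaptive_loop("What is 6 * 7?", toy_model, toy_check)
```

In a real system the `check` step could be a user rating, a validator, or a second model. The `max_rounds` cap keeps the loop from running indefinitely when feedback never converges.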

Case Studies Using Adaptive Methods

Feedback and iterative improvement have improved the accuracy of machine-learning tasks.

Studies have shown the benefits of self-adaptive prompts. In arithmetic tasks, the accuracy of AI models increased by 15% compared to traditional methods. The approach also improved the logical consistency and correctness of task completion by 10%.

These studies show that the self-adaptive prompting method can overcome performance gaps in various tasks.
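The article does not say how self-adaptive prompts are built, so the following is only a sketch under a stated assumption: the model answers each unlabeled question several times, and the questions whose answers agree most often are reused as in-context examples. The function `select_pseudo_demos` and the `sample_answers` callback are hypothetical. In practice the callback would sample an LLM several times per question.

```python
from collections import Counter
from typing import Callable


def select_pseudo_demos(
    questions: list[str],
    sample_answers: Callable[[str], list[str]],
    k: int = 2,
) -> list[tuple[str, str]]:
    """Pick the k questions whose sampled answers agree the most.

    For each question, the majority answer and the fraction of samples that
    agree with it are computed; higher agreement is treated as higher confidence.
    Assumes `sample_answers` returns at least one answer per question.
    """
    scored = []
    for q in questions:
        answers = sample_answers(q)
        top_answer, votes = Counter(answers).most_common(1)[0]
        scored.append((votes / len(answers), q, top_answer))
    # Stable sort by agreement, highest first; ties keep input order.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(q, a) for _, q, a in scored[:k]]


# Illustrative, hand-written samples standing in for repeated model outputs.
samples = {
    "2 + 2": ["4", "4", "4"],
    "17 * 3": ["51", "41", "51"],
    "9 * 9": ["81", "72", "18"],
}
demos = select_pseudo_demos(list(samples), samples.__getitem__, k=2)
```

The selected question/answer pairs can then be placed before a new question in the same way as few-shot examples, with no human-labeled data involved.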

Integrating universal self-adaptive prompts has changed how AI models process and respond to complex tasks. This approach has increased accuracy and optimized resource utilization.

The future of self-adaptive prompts

Future development will aim at full contextual integration, so that models process not only the current text but also the user's entire accumulated context.

AI models will learn in real time and improve their results based on live data.

Models can create personalized language profiles of users to adapt responses to their usual communication style. This will give users relevant advice based on their experience and preferences.

AI models will take into account the user's emotional state and change the tone and form of the conversation. This can help support users in stressful situations and build trust.

FAQ

What is adaptive prompting?

Adaptive prompting changes prompts for large language models (LLMs) based on context or user queries.

How does zero-shot prompting work, and what are its limitations?

Zero-shot prompting is a method where AI models are asked to perform a task without being given any examples of it. It is versatile, but the lack of examples makes its answers less reliable.

How does summarization benefit from adaptive prompting techniques?

Adaptive prompting techniques tailor the prompt to the context and needs of the user, which helps keep the model's summaries correct and relevant.

How does adaptive prompting improve question-answering tasks?

By dynamically refining prompts based on context and feedback, adaptive prompting enhances the relevance and accuracy of answers, especially in complex or ambiguous queries.

What is the difference between few-shot and zero-shot prompting in terms of performance?

Few-shot prompting typically outperforms zero-shot prompting due to the availability of examples, which better guide the model. However, both methods aim to enable the model to handle unseen tasks.

How does adaptive prompting contribute to the overall performance of large language models?

Adaptive prompting enhances model performance by allowing dynamic adjustments to instructions, improving accuracy, and enabling better handling of diverse and complex tasks.
