Best Practices for Data Annotation with Label Studio

The AI and machine learning model market is projected to grow at a 32.54% CAGR from 2020 to 2027. This rapid growth highlights the pivotal role of data annotation in AI progress. Label Studio, a leading open-source labeling tool, is well positioned to support this work.

Label Studio has recently introduced significant updates. The 1.13 release unveiled a refreshed UI and new Generative AI templates. Version 1.10.0 added support for Large-Scale External Taxonomies and an updated Ranker interface for fine-tuning LLMs. These enhancements broaden the range of annotation projects, including LLM fine-tuning data, that Label Studio can handle.

As demand for high-quality labeled data grows, Label Studio offers practical ways to keep up. It streamlines the labeling process with semi-automated labeling and active learning workflows. Its versatility in handling text, images, audio, and video makes it essential for data scientists and ML engineers.

The recent SDK update has expanded Label Studio's capabilities. It now offers more functions, enhanced documentation, and an improved developer experience. This update empowers teams to manage data labeling projects more efficiently, leveraging the tool's API and SDK to automate workflows and enhance productivity.

Key Takeaways

- Label Studio offers customizable interfaces for various data types

- Recent updates include Generative AI templates and improved UI

- SDK enhancements facilitate efficient project management

- Active learning optimizes the annotation process

- Quality control is crucial for accurate machine learning models

- Semi-automated labeling speeds up annotation for large datasets

Data Annotation with Label Studio

Data annotation is a vital step in machine learning projects. It involves labeling raw data to train AI models effectively. Label Studio, an open-source labeling tool, simplifies this process for various data types.

What is Label Studio?

Label Studio is a versatile platform for data annotation. It supports text, images, audio, and video labeling. This flexibility makes it ideal for diverse machine learning tasks across industries.

Importance of Data Annotation in Machine Learning

The importance of data annotation in AI development cannot be overstated. It is the foundation for training accurate models: properly labeled data helps algorithms learn and make reliable predictions.

- Reduces inaccuracies in model training data

- Enhances model performance and reliability

- Crucial for task-specific optimization

Key Features of Label Studio

Label Studio's features set it apart as a powerful annotation tool. Here are some standout capabilities:

| Feature | Description |
|---|---|
| Customizable Interfaces | Tailor labeling tasks to specific project needs |
| Multi-type Annotation Support | Handle various data types in one platform |
| Collaboration Tools | Enable team-based annotation projects |
| ML Backend Integration | Incorporate automated labeling and active learning |

These features streamline the annotation process, making it more efficient and accurate. By leveraging Label Studio, teams can improve their data preparation and ultimately enhance their machine learning outcomes.

Setting Up Your Label Studio Project

Starting your Label Studio project is easy with the right tools and knowledge. The installation process is straightforward, allowing you to focus on configuring your project and importing data. Let's explore the essential steps to get your annotation project started.

First, install Label Studio using pip or Docker. After installation, create a new project and customize your labeling interface. This is key for tailoring the annotation experience to your specific needs. Next, import your dataset into Label Studio. The SDK supports various data sources, including URLs, making data preparation efficient.
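As a minimal sketch of preparing data for import, the snippet below wraps a list of image URLs in the JSON task format Label Studio accepts, where each task carries its payload under a `data` key. The key name `image` is an assumption: it must match the `$image` variable used in your labeling configuration. The URLs are placeholders.

```python
import json
import tempfile
from pathlib import Path


def build_tasks(urls, data_key="image"):
    """Wrap each non-blank URL in a task of the form {"data": {data_key: url}}."""
    tasks = []
    for url in urls:
        url = url.strip()
        if url:  # skip blank entries from the source list
            tasks.append({"data": {data_key: url}})
    return tasks


def write_tasks(tasks, path):
    """Write the tasks as a JSON array, ready to upload in the import dialog."""
    Path(path).write_text(json.dumps(tasks, indent=2), encoding="utf-8")


if __name__ == "__main__":
    # Placeholder URLs: replace them with your own storage locations.
    urls = [
        "https://example.com/images/0001.jpg",
        "https://example.com/images/0002.jpg",
        "",
    ]
    tasks = build_tasks(urls)
    with tempfile.TemporaryDirectory() as tmp:
        out = Path(tmp) / "tasks.json"
        write_tasks(tasks, out)
        print(out.read_text(encoding="utf-8"))
```

Keeping the data key configurable makes it easy to reuse the same helper for text (`$text`) or audio (`$audio`) projects.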

Project configuration is where you set up annotation types, quality control measures, and team collaborators. The Label Studio SDK simplifies this process, reducing complexity and potential errors. For example, when working with datasets like COCO, which has 80 object classes, the SDK can automate project setup using existing class lists.
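To illustrate setup from an existing class list, the sketch below generates a bounding-box labeling configuration with one `<Label>` per class, using Label Studio's `Image` and `RectangleLabels` tags. The control and object names (`label`, `image`) are assumptions you can change. The resulting string is what you would paste into the labeling interface editor or pass to the SDK when creating a project; in a COCO annotation file, the class names live in the `categories` list.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import quoteattr


def rectangle_config(class_names, image_key="image"):
    """Build a bounding-box labeling config with one <Label> per class name."""
    # quoteattr adds the surrounding quotes and escapes &, <, > and quotes.
    labels = "\n".join(f"    <Label value={quoteattr(name)}/>" for name in class_names)
    return (
        "<View>\n"
        f'  <Image name="image" value="${image_key}"/>\n'
        '  <RectangleLabels name="label" toName="image">\n'
        f"{labels}\n"
        "  </RectangleLabels>\n"
        "</View>"
    )


if __name__ == "__main__":
    # First few COCO category names; with a COCO file you could use
    # [c["name"] for c in coco["categories"]] to get all 80.
    coco_subset = ["person", "bicycle", "car", "motorcycle"]
    config = rectangle_config(coco_subset)
    ET.fromstring(config)  # raises ParseError if the XML is malformed
    print(config)
```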

Data import is a critical phase. The Label Studio SDK facilitates importing tasks from various sources and managing additional data columns after project creation. This flexibility allows you to add new columns like 'link_source' and backfill missing data values for existing tasks.
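The backfill step can be sketched on plain task dictionaries before you write them back through the SDK. The rule that derives `link_source` from the image host is purely hypothetical; substitute whatever logic fits your data.

```python
from urllib.parse import urlparse


def backfill_field(tasks, field, default_fn):
    """Set data[field] = default_fn(data) on tasks where the field is missing or empty.

    Returns the number of tasks that were updated.
    """
    updated = 0
    for task in tasks:
        data = task.setdefault("data", {})
        if data.get(field) in (None, ""):
            data[field] = default_fn(data)
            updated += 1
    return updated


def source_from_url(data):
    """Hypothetical rule: use the host of the image URL as the link source."""
    return urlparse(data.get("image", "")).netloc or "unknown"


if __name__ == "__main__":
    tasks = [
        {"id": 1, "data": {"image": "https://cdn.example.com/a.jpg"}},
        {"id": 2, "data": {"image": "https://cdn.example.com/b.jpg", "link_source": "manual"}},
    ]
    print(backfill_field(tasks, "link_source", source_from_url))  # 1
    print(tasks[0]["data"]["link_source"])  # cdn.example.com
```

Existing values are left untouched, so the helper is safe to rerun after each new import.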

"Establish clear guidelines for data formats early in the project to ensure consistency."

For optimal results, consider these best practices:

- Use statistical analysis for data cleaning to identify anomalies

- Employ algorithms to detect outliers or missing values

- Use k-fold cross-validation so every sample serves in both training and evaluation
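The practices above can be sketched with the Python standard library alone: flag rows with missing fields, flag numeric outliers by z-score, and split indices into k folds. The z-score threshold of 3 is a common convention, not a Label Studio setting, and the field names are placeholders.

```python
import random
import statistics


def flag_missing(rows, required):
    """Return indices of rows missing any required field (None or empty string)."""
    return [i for i, r in enumerate(rows) if any(r.get(k) in (None, "") for k in required)]


def flag_outliers(values, z_threshold=3.0):
    """Return indices whose absolute z-score exceeds z_threshold."""
    if len(values) < 2:
        return []
    mean = statistics.fmean(values)
    stdev = statistics.stdev(values)  # sample standard deviation
    if stdev == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) / stdev > z_threshold]


def k_fold_indices(n, k, seed=0):
    """Shuffle range(n) and split it into k folds whose sizes differ by at most one."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


if __name__ == "__main__":
    durations = [10.0] * 20 + [100.0]  # e.g. audio clip lengths in seconds
    print(flag_outliers(durations))  # [20]
    print(flag_missing([{"text": "a"}, {"text": ""}], ["text"]))  # [1]
    for fold, test_idx in enumerate(k_fold_indices(10, 3)):
        print(fold, sorted(test_idx))
```

With small samples a z-score cannot exceed (n - 1)/sqrt(n), so for tiny datasets an interquartile-range rule may be a better choice.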

By following these steps and leveraging Label Studio's powerful features, you'll set a solid foundation for your data annotation project.

Designing Effective Labeling Interfaces

Creating an efficient labeling interface is crucial for successful data annotation. Your choice of annotation types, labeling templates, and interface layout can greatly impact the quality and speed of your project.

Choosing the Right Annotation Types

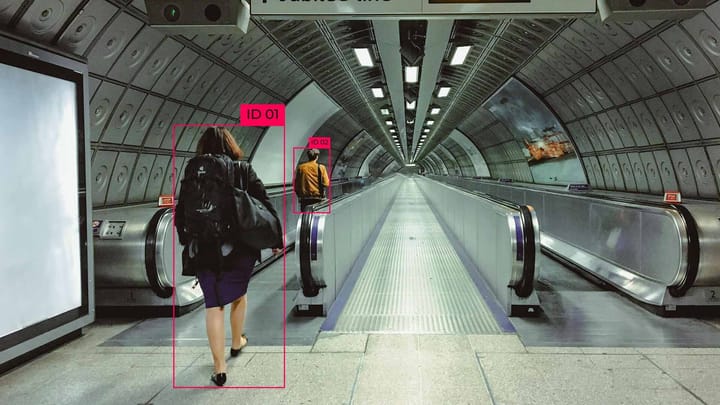

Select annotation types that match your data and project goals. For image data, you might use bounding boxes or polygons. Text classification works well for document labeling. Pick tools that make sense for your specific needs.
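For reference, minimal configurations for the three annotation types mentioned above use Label Studio's `RectangleLabels`, `PolygonLabels`, and `Choices` tags. The label values are placeholders, and `check_config` is a hypothetical helper (not Label Studio's own validator) that confirms each control tag points at an existing object tag.

```python
import xml.etree.ElementTree as ET

# Minimal labeling configs; label values ("Car", "Road", ...) are placeholders.
CONFIGS = {
    "bounding_box": """
<View>
  <Image name="image" value="$image"/>
  <RectangleLabels name="box" toName="image">
    <Label value="Car"/>
    <Label value="Pedestrian"/>
  </RectangleLabels>
</View>""",
    "polygon": """
<View>
  <Image name="image" value="$image"/>
  <PolygonLabels name="poly" toName="image">
    <Label value="Road"/>
    <Label value="Sidewalk"/>
  </PolygonLabels>
</View>""",
    "text_classification": """
<View>
  <Text name="text" value="$text"/>
  <Choices name="category" toName="text" choice="single">
    <Choice value="Invoice"/>
    <Choice value="Contract"/>
  </Choices>
</View>""",
}


def check_config(xml_text):
    """Return (control tag, toName) pairs; raise if a toName matches no object tag."""
    root = ET.fromstring(xml_text.strip())
    # Object tags (Image, Text, ...) take their input from a $variable.
    object_names = {el.get("name") for el in root if el.get("value", "").startswith("$")}
    pairs = []
    for el in root:
        to_name = el.get("toName")
        if to_name is not None:
            if to_name not in object_names:
                raise ValueError(f"{el.tag} points at unknown object {to_name!r}")
            pairs.append((el.tag, to_name))
    return pairs


if __name__ == "__main__":
    for kind, cfg in CONFIGS.items():
        print(kind, check_config(cfg))
```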

Customizing Labeling Templates

Tailor your labeling templates to fit your project requirements. A well-designed template guides annotators and ensures consistency. Include clear instructions and examples to help your team understand the task at hand.
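One way to put instructions directly in front of annotators is Label Studio's `Header` tag. The sketch below inserts one `<Header>` per instruction line at the top of an existing configuration; the instruction wording is a placeholder, and placing them at the top is a layout assumption.

```python
import xml.etree.ElementTree as ET


def add_instructions(config_xml, instructions):
    """Insert one <Header value="..."/> per instruction at the top of the <View>."""
    root = ET.fromstring(config_xml)
    if root.tag != "View":
        raise ValueError("expected a <View> root element")
    for pos, line in enumerate(instructions):
        # ElementTree escapes quotes and ampersands in attribute values for us.
        root.insert(pos, ET.Element("Header", {"value": line}))
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    cfg = (
        '<View><Text name="text" value="$text"/>'
        '<Choices name="category" toName="text">'
        '<Choice value="Invoice"/><Choice value="Contract"/></Choices></View>'
    )
    print(add_instructions(cfg, [
        "Read the whole document before choosing.",
        "If both categories apply, pick the one in the title.",
    ]))
```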

Optimizing Interface Layout for Efficiency

A streamlined interface boosts productivity. Place frequently used tools within easy reach. Consider using keyboard shortcuts for common actions. Organize your layout logically to minimize confusion and maximize efficiency.
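Keyboard shortcuts are set per label with the `hotkey` attribute on `Label` and `Choice` tags. As a sketch, the helper below assigns the next unused digit to every label that lacks one, leaving explicit hotkeys alone. Using digits 1 to 9 and keeping keys unique across the whole configuration are assumptions.

```python
import xml.etree.ElementTree as ET

HOTKEY_TAGS = ("Label", "Choice")


def assign_hotkeys(config_xml, keys="123456789"):
    """Give each <Label>/<Choice> without a hotkey the next unused key from `keys`."""
    root = ET.fromstring(config_xml)
    used = {el.get("hotkey") for el in root.iter() if el.get("hotkey")}
    free = [k for k in keys if k not in used]
    for el in root.iter():
        if el.tag in HOTKEY_TAGS and not el.get("hotkey") and free:
            el.set("hotkey", free.pop(0))
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    cfg = (
        '<View><Image name="img" value="$image"/>'
        '<RectangleLabels name="box" toName="img">'
        '<Label value="Car" hotkey="2"/><Label value="Bus"/><Label value="Bike"/>'
        "</RectangleLabels></View>"
    )
    print(assign_hotkeys(cfg))
```

Once the keys run out, remaining labels are left without a hotkey rather than reusing a key.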

Remember, your labeling interface is a key factor in dataset quality. By focusing on annotation types, labeling templates, and interface optimization, you'll create a smooth workflow for your annotation team. This attention to detail can lead to better data and, ultimately, more accurate machine learning models.

| Interface Element | Impact on Efficiency |
|---|---|
| Keyboard Shortcuts | Can increase speed by up to 30% |
| Customized Templates | Reduce errors by 25% |
| Optimized Layout | Improves task completion time by 20% |

Label Studio Best Practices for Data Preparation

Effective data preparation is key for successful annotation projects. Begin by cleaning your dataset through data preprocessing. Remove duplicates and irrelevant samples to boost dataset quality. This step saves time and enhances the accuracy of your annotations.
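For text datasets, the cleaning step can be sketched as below: normalize each sample, drop samples that are too short to be useful, and drop exact duplicates by hashing the normalized text. The minimum word count is a placeholder heuristic for "irrelevant", and near-duplicates (paraphrases, small edits) would need fuzzy matching that this sketch does not attempt.

```python
import hashlib
import unicodedata


def normalize(text):
    """Normalize Unicode, case, and whitespace so trivial variants compare equal."""
    text = unicodedata.normalize("NFKC", text)
    return " ".join(text.casefold().split())


def clean_texts(texts, min_words=3):
    """Drop short samples and exact duplicates (after normalization), keeping order."""
    seen = set()
    kept = []
    for text in texts:
        norm = normalize(text)
        if len(norm.split()) < min_words:
            continue  # too short to be a useful sample (threshold is an assumption)
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of an earlier sample
        seen.add(digest)
        kept.append(text)  # keep the original, un-normalized text
    return kept


if __name__ == "__main__":
    raw = ["The invoice is overdue.", "the  INVOICE is overdue.", "ok", "Payment received in full."]
    print(clean_texts(raw))
```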

Develop clear annotation guidelines before starting your project. These guidelines ensure consistency across annotators and help maintain high dataset quality. Use a small subset of data for initial labeling to refine your guidelines and identify potential issues.

To streamline your annotation process, integrate machine learning models with Label Studio. This approach can pre-annotate data, saving time and improving efficiency. Remember to balance automation with human oversight to maintain accuracy.
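Pre-annotations can be imported alongside the data as `predictions`, each with a `model_version`, an optional `score`, and a `result` list in the same shape as annotations. The sketch below uses a hypothetical keyword classifier as a stand-in for a real model; the `from_name` and `to_name` values are assumptions that must match the control and object names in your labeling configuration.

```python
import json


def keyword_classifier(text):
    """Hypothetical stand-in for a real model: returns (label, confidence)."""
    lowered = text.lower()
    if any(w in lowered for w in ("great", "love", "excellent")):
        return "Positive", 0.9
    if any(w in lowered for w in ("bad", "hate", "terrible")):
        return "Negative", 0.9
    return "Neutral", 0.5


def to_prediction_task(text, model_version="keyword-v0",
                       from_name="sentiment", to_name="text"):
    """Wrap a model output in a task-with-predictions dict for import."""
    label, score = keyword_classifier(text)
    return {
        "data": {"text": text},
        "predictions": [{
            "model_version": model_version,
            "score": score,
            "result": [{
                "from_name": from_name,  # name of the <Choices> control tag
                "to_name": to_name,      # name of the <Text> object tag
                "type": "choices",
                "value": {"choices": [label]},
            }],
        }],
    }


if __name__ == "__main__":
    tasks = [to_prediction_task(t) for t in ["I love this product", "Shipping took a week"]]
    print(json.dumps(tasks, indent=2))
```

Annotators then see the predicted choice preselected and only need to confirm or correct it.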

Here's a breakdown of key steps in data preparation:

| Step | Action | Benefit |
|---|---|---|
| Data Cleaning | Remove duplicates and irrelevant samples | Improves dataset quality |
| Guideline Development | Create clear annotation instructions | Ensures consistency across annotators |
| Pilot Testing | Annotate a small subset of data | Refines guidelines and identifies issues |
| ML Integration | Use machine learning for pre-annotations | Increases efficiency and saves time |

By following these best practices, you'll establish a solid foundation for your Label Studio project. This leads to high-quality annotations and more effective machine learning models.

Streamlining the Annotation Workflow

Efficient annotation workflows are essential for the success of data labeling projects. Label Studio provides powerful features to boost your productivity and streamline the annotation process.

Implementing Keyboard Shortcuts

Keyboard shortcuts can greatly accelerate your labeling tasks. Label Studio's hotkey feature enables quick task switching, enhancing labeling efficiency. Mastering these shortcuts can significantly cut down the time needed for each annotation.

Utilizing Batch Labeling Techniques

Batch labeling simplifies the handling of similar items. By labeling groups of similar tasks together, it reduces manual labeling effort and boosts efficiency. This method is especially beneficial for large datasets.

Managing Task Distribution

Effective task management is vital for a smooth annotation process. Tasks should be distributed among annotators based on their expertise and workload. Label Studio Enterprise offers tools like Project Performance Dashboards and adjustable KPIs for better project tracking and resource allocation.
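Distributing tasks by expertise and workload can be sketched as a greedy assignment: each task goes to the least-loaded annotator who has the required skill and spare capacity. This is a generic scheduling illustration, not the Label Studio Enterprise assignment feature; the skills and capacities are hypothetical inputs.

```python
from dataclasses import dataclass, field


@dataclass
class Annotator:
    name: str
    skills: set
    capacity: int  # maximum number of tasks to hold at once
    assigned: list = field(default_factory=list)


def distribute(tasks, annotators):
    """Greedily assign (task_id, required_skill) pairs; return ids that could not be placed."""
    unassigned = []
    for task_id, skill in tasks:
        candidates = [a for a in annotators
                      if skill in a.skills and len(a.assigned) < a.capacity]
        if not candidates:
            unassigned.append(task_id)  # no qualified annotator has room
            continue
        best = min(candidates, key=lambda a: len(a.assigned))  # ties: first in list
        best.assigned.append(task_id)
    return unassigned


if __name__ == "__main__":
    team = [Annotator("alice", {"medical", "general"}, 2), Annotator("bob", {"general"}, 2)]
    left = distribute([(1, "general"), (2, "medical"), (3, "general"), (4, "medical")], team)
    for a in team:
        print(a.name, a.assigned)
    print("unassigned:", left)
```

Unassigned tasks are returned rather than dropped, so they can be escalated to a subject matter expert or queued for the next round.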

| Feature | Benefit | Improvement |
|---|---|---|
| Keyboard Shortcuts | Faster task switching | Increased labeling speed |
| Batch Labeling | Reduced manual effort | Efficiency boost |
| Task Management | Optimized resource allocation | Up to 20x reduction in labeling time |

By adopting these strategies, you can enhance your annotation workflow's efficiency. This approach saves time and resources while ensuring high-quality data labels.

Quality Control Measures in Label Studio

Label Studio implements robust quality control measures to help maintain high annotation quality. It allows multiple annotators to work on the same task, facilitating consensus scoring. This method helps spot inconsistencies and upholds data integrity.
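Label Studio computes agreement with its own configurable metrics. As a simpler illustration of consensus scoring, the sketch below takes a majority vote per task, reports the share of annotators who chose the winner, and flags tasks without a clear majority for review. The majority-share metric and the 0.5 threshold are assumptions.

```python
from collections import Counter


def consensus(labels, min_agreement=0.5):
    """Majority-vote consensus for one task.

    Returns (winning_label, agreement), where agreement is the share of annotators
    who chose the winner; the label is None if agreement does not exceed min_agreement.
    """
    if not labels:
        return None, 0.0
    label, votes = Counter(labels).most_common(1)[0]
    agreement = votes / len(labels)
    return (label if agreement > min_agreement else None), agreement


def tasks_needing_review(task_labels, min_agreement=0.5):
    """Return ids of tasks without a majority, lowest agreement first."""
    flagged = []
    for task_id, labels in task_labels.items():
        winner, agreement = consensus(labels, min_agreement)
        if winner is None:
            flagged.append((agreement, task_id))
    return [task_id for _, task_id in sorted(flagged)]


if __name__ == "__main__":
    votes = {1: ["cat", "cat", "dog"], 2: ["cat", "dog"], 3: ["cat", "dog", "bird"]}
    print(consensus(votes[1]))
    print(tasks_needing_review(votes))  # [3, 2]
```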

The review process in Label Studio Enterprise Edition is crucial for maintaining data quality. Reviewers can correct annotations that are mostly accurate or reject those that are completely wrong. Tasks can be ordered by annotator, agreement, or model confidence score, making the review process more efficient.

The project dashboard in Label Studio offers insights into annotator activity and label distribution. A donut chart shows label distribution, highlighting dataset imbalances. Annotator performance metrics include:

- Total agreement

- Tasks annotated

- Tasks skipped

- Review outcomes

- Annotation progress
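The label distribution behind such a chart is easy to reproduce from exported labels, for example to track imbalance over time. The sketch below returns each label's share and the ratio between the most and least frequent label; that ratio is one simple imbalance measure among several.

```python
from collections import Counter


def label_distribution(labels):
    """Return ({label: share} ordered by descending count, max/min count ratio)."""
    counts = Counter(labels)
    if not counts:
        return {}, 0.0  # no labels yet: nothing to report
    total = sum(counts.values())
    shares = {label: n / total for label, n in counts.most_common()}
    imbalance = max(counts.values()) / min(counts.values())
    return shares, imbalance


if __name__ == "__main__":
    shares, imbalance = label_distribution(["car"] * 6 + ["bus"] * 3 + ["bike"])
    print(shares)     # {'car': 0.6, 'bus': 0.3, 'bike': 0.1}
    print(imbalance)  # 6.0
```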

Annotator agreement matrices display consistency among annotators. Bar charts illustrate the distribution of agreement percentages. Ground truth annotations are used to calculate accuracy scores, comparing annotator and model predictions.
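Both ideas can be sketched in a few lines: pairwise agreement as the share of shared tasks on which two annotators chose the same label, and accuracy as the share of ground-truth tasks labeled correctly. These are simple exact-match metrics chosen for illustration; Label Studio's own agreement calculation may differ, and treating a model as just another "annotator" is an assumption of the sketch.

```python
from itertools import combinations


def pairwise_agreement(annotations):
    """annotations: {annotator: {task_id: label}}.

    Returns {(a, b): share of shared tasks where a and b chose the same label}.
    Pairs with no overlapping tasks are omitted.
    """
    matrix = {}
    for a, b in combinations(sorted(annotations), 2):
        shared = annotations[a].keys() & annotations[b].keys()
        if shared:
            same = sum(annotations[a][t] == annotations[b][t] for t in shared)
            matrix[(a, b)] = same / len(shared)
    return matrix


def accuracy_vs_ground_truth(labels, ground_truth):
    """Share of ground-truth tasks that this annotator (or model) labeled correctly."""
    scored = [t for t in ground_truth if t in labels]
    if not scored:
        return None  # no overlap with ground truth
    return sum(labels[t] == ground_truth[t] for t in scored) / len(scored)


if __name__ == "__main__":
    ann = {
        "ann1": {1: "cat", 2: "dog", 3: "cat"},
        "ann2": {1: "cat", 2: "cat", 3: "cat"},
        "model": {1: "cat", 2: "dog"},
    }
    print(pairwise_agreement(ann))
    print(accuracy_vs_ground_truth(ann["model"], {1: "cat", 2: "dog"}))  # 1.0
```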

Through these quality control measures, Label Studio ensures your machine learning models are trained on high-quality, accurate data. This leads to better performance and more reliable results.

Leveraging Machine Learning for Pre-annotations

Label Studio empowers you to integrate machine learning into your data annotation workflow. ML model integration accelerates labeling and boosts accuracy. This blend of automation and human oversight enhances efficiency and precision.

Integrating ML Models with Label Studio

Label Studio offers a robust framework for pre-annotations through machine learning models. You can link your ML backends to Label Studio for seamless model prediction incorporation. This integration allows for:

- Automatic initial labeling

- Reduced manual effort in repetitive tasks

- Enhanced annotation consistency

Implementing Active Learning Strategies

Active learning is a pivotal feature in Label Studio, optimizing your annotation process. It focuses on selecting the most informative samples for human review. This approach enhances labeling workflow efficiency. Here's how it functions:

- The ML model identifies uncertain predictions

- These samples are prioritized for human annotation

- Annotators label these challenging cases

- The model learns from these new annotations, enhancing its performance
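The selection step in this loop is usually uncertainty sampling. The sketch below ranks tasks by two common scores, least confidence (one minus the top class probability) and entropy, and returns the most uncertain ones up to a labeling budget. The probability lists stand in for whatever your ML backend returns.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions, budget, strategy="least_confident"):
    """Pick the `budget` task ids the model is least sure about.

    predictions: {task_id: [class probabilities]}.
    """
    if strategy == "least_confident":
        uncertainty = {t: 1.0 - max(p) for t, p in predictions.items()}
    elif strategy == "entropy":
        uncertainty = {t: entropy(p) for t, p in predictions.items()}
    else:
        raise ValueError(f"unknown strategy {strategy!r}")
    ranked = sorted(uncertainty, key=lambda t: uncertainty[t], reverse=True)
    return ranked[:budget]


if __name__ == "__main__":
    preds = {"a": [0.98, 0.02], "b": [0.55, 0.45], "c": [0.7, 0.3], "d": [0.5, 0.5]}
    print(select_for_annotation(preds, 2))  # ['d', 'b']
```

For two classes both scores produce the same ranking; they can diverge when there are many classes, where entropy also accounts for how probability is spread beyond the top class.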

Balancing Automation and Human-in-the-Loop

While ML pre-annotations accelerate labeling, human oversight is vital for quality. Label Studio supports a balanced approach:

| Aspect | Automation | Human-in-the-Loop |
|---|---|---|
| Speed | Fast initial labeling | Careful review and correction |
| Accuracy | Good for straightforward cases | Essential for edge cases and nuanced data |
| Scalability | Handles large datasets efficiently | Focuses human effort where it's most needed |
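A common way to strike this balance is to route pre-annotated tasks by model confidence. The sketch below splits tasks into three queues; both thresholds are placeholders that should be tuned on a held-out set, and even the high-confidence queue is meant for spot-checking rather than blind acceptance.

```python
def route_predictions(tasks, accept_threshold=0.95, review_threshold=0.6):
    """Split pre-annotated tasks into three queues by model confidence score.

    tasks: list of dicts with "id" and "score" keys (score in [0, 1]).
    - score >= accept_threshold: keep the pre-annotation, spot-check a sample
    - review_threshold <= score < accept_threshold: human reviews and corrects
    - score < review_threshold: human labels from scratch
    """
    if not 0 <= review_threshold <= accept_threshold <= 1:
        raise ValueError("thresholds must satisfy 0 <= review <= accept <= 1")
    queues = {"auto_accept": [], "review": [], "label_from_scratch": []}
    for task in tasks:
        s = task["score"]
        if s >= accept_threshold:
            queues["auto_accept"].append(task["id"])
        elif s >= review_threshold:
            queues["review"].append(task["id"])
        else:
            queues["label_from_scratch"].append(task["id"])
    return queues


if __name__ == "__main__":
    batch = [{"id": 1, "score": 0.99}, {"id": 2, "score": 0.7}, {"id": 3, "score": 0.2}]
    print(route_predictions(batch))
```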

By using machine learning for pre-annotations and active learning, you can create an efficient and accurate data labeling pipeline in Label Studio. This method saves time and improves training data quality, leading to superior ML model performance.

Collaborative Annotation Techniques

Label Studio excels in team collaboration for data annotation tasks. Its task locking feature prevents conflicts, ensuring consistency across your team. This lets multiple annotators work together without overwriting each other's work.
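Label Studio handles locking on the server. The hypothetical, in-memory class below only illustrates the general idea of a lock with a timeout, so that a task abandoned by one annotator becomes available again; it is not Label Studio's implementation, and the 600-second default is arbitrary.

```python
import time


class TaskLocks:
    """In-memory task locks that expire after `ttl` seconds (illustration only)."""

    def __init__(self, ttl=600.0, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._locks = {}  # task_id -> (annotator, expires_at)

    def acquire(self, task_id, annotator):
        """Lock (or renew) task_id for annotator; False if someone else holds a live lock."""
        now = self.clock()
        holder = self._locks.get(task_id)
        if holder and holder[0] != annotator and holder[1] > now:
            return False
        self._locks[task_id] = (annotator, now + self.ttl)
        return True

    def release(self, task_id, annotator):
        """Release the lock if annotator holds it."""
        holder = self._locks.get(task_id)
        if holder and holder[0] == annotator:
            del self._locks[task_id]

    def next_free(self, task_ids, annotator):
        """Lock and return the first task annotator can take, or None."""
        for task_id in task_ids:
            if self.acquire(task_id, annotator):
                return task_id
        return None


if __name__ == "__main__":
    locks = TaskLocks(ttl=60)
    print(locks.next_free([1, 2, 3], "alice"))  # 1
    print(locks.next_free([1, 2, 3], "bob"))    # 2
```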

Knowledge sharing is crucial for maintaining high-quality annotations. Regular team meetings help discuss challenging cases and share insights. Label Studio's real-time communication features enable instant feedback, enhancing the overall annotation process.

To streamline your workflow, consider implementing a system for escalating difficult tasks. This approach allows less experienced team members to learn from seasoned annotators or subject matter experts. It fosters continuous improvement and knowledge transfer.

| Collaborative Feature | Benefit |
|---|---|
| Task Locking | Prevents annotation conflicts |
| Real-time Communication | Enables instant feedback |
| Task Escalation | Facilitates knowledge sharing |

By leveraging these collaborative techniques, you can significantly improve your team's efficiency and the quality of your annotated datasets. Remember, effective collaboration is the cornerstone of successful data annotation projects.

Data Export and Integration Best Practices

Effective data export and integration are key for smooth machine learning workflows. Label Studio offers various data export options. These ensure compatibility with ML frameworks and dataset versioning.

Choosing the Right Export Format

Label Studio supports multiple export formats for different project needs. Annotations are stored in JSON format within SQLite or PostgreSQL databases. You can export data in COCO, CSV, Pascal VOC XML, or YOLO formats.

| Export Format | Use Case | Compatibility |
|---|---|---|
| JSON | General-purpose | Most ML frameworks |
| COCO | Object detection | TensorFlow, PyTorch |
| CSV | Tabular data | Pandas, scikit-learn |
| YOLO | Real-time object detection | Darknet |
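Label Studio can export YOLO directly; the sketch below shows the underlying coordinate conversion so you can check or customize it. In the JSON export, a `rectanglelabels` result stores `x`, `y` (top-left corner), `width`, and `height` as percentages of the image size, while YOLO expects `class x_center y_center width height` normalized to [0, 1]. The class-to-index mapping is an assumption you supply, and rotated boxes are deliberately rejected.

```python
def rect_to_yolo(result, class_ids):
    """Convert one Label Studio rectanglelabels result to a YOLO line."""
    v = result["value"]
    if v.get("rotation", 0):
        raise ValueError("rotated boxes need a different conversion")
    cls = class_ids[v["rectanglelabels"][0]]
    # Percent of image size -> fraction, and top-left corner -> box center.
    x_c = (v["x"] + v["width"] / 2) / 100
    y_c = (v["y"] + v["height"] / 2) / 100
    w = v["width"] / 100
    h = v["height"] / 100
    return f"{cls} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def task_to_yolo_lines(task, class_ids):
    """Collect YOLO lines for all rectangles in a task's first annotation."""
    annotations = task.get("annotations") or []
    if not annotations:
        return []
    return [
        rect_to_yolo(r, class_ids)
        for r in annotations[0].get("result", [])
        if r.get("type") == "rectanglelabels"
    ]


if __name__ == "__main__":
    task = {"id": 1, "annotations": [{"result": [{
        "type": "rectanglelabels",
        "value": {"x": 10, "y": 20, "width": 30, "height": 40,
                  "rotation": 0, "rectanglelabels": ["car"]},
    }]}]}
    print(task_to_yolo_lines(task, {"person": 0, "car": 1}))
```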

Ensuring Data Compatibility with ML Frameworks

For ML framework compatibility, consider your project's needs. Label Studio's JSON format includes task ID, annotations, and lead time. This detailed data structure ensures easy integration with popular ML frameworks.
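As a sketch of feeding that structure into a tabular pipeline, the code below flattens a JSON export into one row per annotation and writes CSV that tools such as pandas can read. It reads the export's `id`, `annotations`, `result`, and `lead_time` fields; the output column names are my own choice, and only `choices` results are turned into a label here.

```python
import csv
import io
import json

FIELDS = ["task_id", "annotation_id", "label", "lead_time", "n_results"]


def flatten_export(export_json):
    """Turn a Label Studio JSON export (a list of tasks) into one row per annotation."""
    rows = []
    for task in json.loads(export_json):
        for ann in task.get("annotations", []):
            results = ann.get("result", [])
            choices = [
                c
                for r in results
                if r.get("type") == "choices"
                for c in r["value"]["choices"]
            ]
            rows.append({
                "task_id": task["id"],
                "annotation_id": ann.get("id"),
                "label": "|".join(choices),  # multi-choice answers joined with |
                "lead_time": ann.get("lead_time"),
                "n_results": len(results),
            })
    return rows


def rows_to_csv(rows):
    """Serialize rows to CSV text."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


if __name__ == "__main__":
    export = json.dumps([{"id": 7, "data": {"text": "hi"}, "annotations": [
        {"id": 3, "lead_time": 4.2,
         "result": [{"type": "choices", "value": {"choices": ["Positive"]}}]}]}])
    print(rows_to_csv(flatten_export(export)))
```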

Version Control for Annotated Datasets

Dataset versioning is crucial for tracking changes and enabling rollbacks. Label Studio assigns unique IDs to annotation regions. This makes version control easier, especially when using predictions to create annotations.
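Region IDs make it possible to compare two exports region by region. The sketch below keys every result by task ID, annotation ID, and region ID, reports added, removed, and changed regions, and derives a short content hash that could serve as a dataset version tag. Skipping results that have no `id` and truncating the hash to 12 characters are assumptions of the sketch.

```python
import hashlib
import json


def regions(export):
    """Map (task_id, annotation_id, region_id) -> result for every result with an id."""
    out = {}
    for task in export:
        for ann in task.get("annotations", []):
            for r in ann.get("result", []):
                if "id" in r:
                    out[(task["id"], ann.get("id"), r["id"])] = r
    return out


def diff_exports(old, new):
    """Compare two parsed exports region by region."""
    a, b = regions(old), regions(new)
    return {
        "added": sorted(b.keys() - a.keys()),
        "removed": sorted(a.keys() - b.keys()),
        "changed": sorted(k for k in a.keys() & b.keys() if a[k] != b[k]),
    }


def snapshot_id(export):
    """Short content hash of an export, independent of key order."""
    canonical = json.dumps(export, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


if __name__ == "__main__":
    old = [{"id": 1, "annotations": [{"id": 10, "result": [
        {"id": "r1", "value": {"choices": ["A"]}}]}]}]
    new = [{"id": 1, "annotations": [{"id": 10, "result": [
        {"id": "r1", "value": {"choices": ["B"]}}]}]}]
    print(diff_exports(old, new))
    print(snapshot_id(old), snapshot_id(new))
```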

ZenML integration boosts Label Studio's capabilities. It supports workflows with cloud artifact stores like AWS S3 and Azure Blob Storage. This integration simplifies data export and version control, making your annotation process more efficient.

Conclusion

Using Label Studio efficiently is essential for improving your data annotation process. By adopting data annotation best practices, you can significantly improve your dataset quality, which in turn boosts the performance of your ML models. The reported statistics are promising: an accuracy of 77% on a small subset, with weighted-average precision, recall, and F1-score all at 0.77.

Label Studio's versatility is evident in its support for various NLP labeling tasks. It handles tasks like labeling text spans and identifying relations between them. It also classifies semantic meaning, offering a comprehensive suite of features. This flexibility allows you to tackle complex annotation projects, including those involving non-English languages and special characters.

For advanced applications like Retrieval-Augmented Generation (RAG) pipelines, Label Studio is invaluable. It aids in refining user queries, improving document search quality, and controlling generated answers. By leveraging Label Studio's configurations for tasks such as classification, rating, and text summarization, you can optimize your RAG systems. This leads to enhanced reliability and efficiency.

As you continue to refine your annotation process, remember that continuous iteration is key. By staying committed to these best practices and using Label Studio's robust features, you'll be well equipped to create high-quality datasets that drive superior performance in your AI and machine learning projects.

FAQ

What is Label Studio?

Label Studio is an open-source tool for annotating data like text, images, audio, and video. It's vital for training accurate machine learning models.

Why is data annotation important in machine learning?

Data annotation is key for training ML models. It boosts their accuracy and performance. High-quality data is essential for algorithms to learn and predict accurately.

What are some key features of Label Studio?

Label Studio offers customizable labeling interfaces and supports various annotation types. It also has collaborative tools and integrates with ML backends for pre-annotations and active learning.

How do I set up a Label Studio project?

First, install Label Studio using pip or Docker. Then, create a new project and define the labeling interface. Import your dataset and configure project settings, annotation types, and quality control measures.

How can I design effective labeling interfaces in Label Studio?

Choose the right annotation types for your data. Customize labeling templates to fit your project needs. Optimize the interface layout for efficiency, using keyboard shortcuts and batch labeling options.

What are some best practices for data preparation in Label Studio?

Clean and preprocess your data before importing it into Label Studio. Remove duplicates and irrelevant samples. Develop clear annotation guidelines to ensure consistency. Use a small data subset for initial labeling to refine guidelines.

How can I streamline the annotation workflow in Label Studio?

Use keyboard shortcuts for common actions and batch labeling for similar items. Manage task distribution among annotators effectively. Implement a review process to ensure quality and consistency.

What quality control measures can I use in Label Studio?

Use consensus scoring for multiple annotators on the same task. Label Studio's quality metrics can identify inconsistencies. Establish a review process for complex cases. Regularly analyze annotation statistics to improve.

How can I leverage machine learning for pre-annotations in Label Studio?

Integrate machine learning models with Label Studio's ML Backend for pre-annotations. This reduces manual effort. Use active learning to prioritize samples for human annotation. Balance automation with human oversight for quality labels.

What are some collaborative annotation techniques in Label Studio?

Foster collaboration by implementing task locking and encouraging knowledge sharing. Use Label Studio's collaboration features for real-time communication. Implement a system for escalating difficult tasks to experts.

What are some best practices for data export and integration with Label Studio?

Choose the right export format for your ML framework. Ensure compatibility between Label Studio's output and your ML pipeline. Use version control for annotated datasets. Utilize Label Studio's API for seamless integration with your ML workflows and data management systems.
