Beyond Simple Cutouts: Advanced Segmentation Techniques with Segment Anything

In the swiftly advancing field of computer vision, image segmentation stands out as a vital challenge. It extends past visual comprehension into applications like medical imaging, autonomous vehicles, and more. Thanks to convolutional neural networks (CNNs) and the growth of deep learning, image segmentation has seen significant innovation.

Segment Anything (SAM), released by Meta AI, marks a new chapter in image segmentation methodology. It radically simplifies object isolation in images: users segment an object by supplying prompts such as points or bounding boxes. Architecturally, SAM pairs a Vision Transformer (ViT) image encoder with a prompt encoder and a lightweight mask decoder, so one image embedding can be reused across many prompts.

SAM produces class-agnostic masks: it outlines objects but does not name them. Paired with a classifier or detector, it can support semantic segmentation, instance segmentation, and object detection pipelines. Exploring advanced prompting with SAM opens up a wide range of computer vision workflows.

Key Takeaways

- SAM changes the game in image segmentation, allowing users to precisely segment objects by designating points and boxes.

- SAM's design combines a Vision Transformer image encoder, a prompt encoder, and a lightweight mask decoder, so a single image embedding serves many prompts.

- Image segmentation plays a vital role in diverse fields, from medicine to autonomous vehicles.

- Deep learning, and specifically CNNs, have pushed image segmentation forward significantly.

- SAM's class-agnostic masks can feed semantic segmentation, instance segmentation, and object detection pipelines when combined with a model that supplies labels or boxes.

Understanding the Power of Segment Anything for Advanced Image Segmentation

The Segment Anything Model (SAM) has transformed image segmentation. Its image encoder is a Vision Transformer (ViT) whose self-attention layers capture spatial relationships across the whole image. A prompt encoder embeds the user's points and boxes, and a lightweight mask decoder combines both to predict masks.

SAM works at the pixel level, but its output is a binary mask per prompt rather than a category per pixel. Because each prompt yields its own mask, SAM can separate similar objects within the same class. This is critical when analyzing images that contain many similar objects.

The transformer blocks in SAM's encoder use residual connections, which keep gradients flowing through a deep network. In the mask decoder, attention runs in both directions between the prompt tokens and the image embedding, so the prompted region is prioritized during segmentation.

Beyond design, SAM is known for its adaptability. It handles various segmentation tasks through different prompting methods, including point prompts, box prompts, and coarse mask prompts. The SAM paper also explores free-text prompts as a proof of concept, but the publicly released model does not accept text. Users can customize how SAM segments images, guiding it towards their specific needs.

| Model | Key Features | Applications |
|---|---|---|
| Convolutional Neural Networks (CNNs) | Backbone of modern computer vision systems, capturing local and global features in images | Object detection, image classification, semantic segmentation |
| Generative Adversarial Networks (GANs) | Advancements in image synthesis, data augmentation, and style transfer | Image generation, augmented reality, creative applications |
| Transfer Learning Models (ResNet, VGG, EfficientNet) | Utilization of pre-trained models to boost computer vision applications | Fine-tuning for specific tasks, domain adaptation |
| Segment Anything Model (SAM) | Promptable segmentation with point, box, and mask prompts; fast mask decoding once the image is embedded | Interactive segmentation, instance mask generation, data annotation |

The rise of SAM echoes the trend of foundation models across deep learning. In fields like natural language processing (NLP), models such as GPT-4 have paved the way. They have shown remarkable prowess in understanding and generating human-like language. Similarly, SAM represents a pivotal advancement in computer vision. It places a special emphasis on image segmentation.

With its groundbreaking capabilities, SAM is leading the charge in advanced image segmentation. It can manage sophisticated datasets and tailor results to various prompts. These features make SAM invaluable from medical imaging to augmented reality. SAM signifies a bright future for image segmentation's role in computer vision and deep learning.

Exploring the Capabilities of the SAM Model

The Segment Anything Model (SAM) is a major advance in image segmentation and computer vision. It handles complex segmentation tasks with a transformer-based architecture trained on SA-1B, a dataset of more than one billion masks. Its abilities have been explored in tasks like tumor detection, organ segmentation, and retinal vessel segmentation.

The SAM model's main strength lies in deciding, for each pixel, whether it belongs to the prompted object. This pixel-level decision allows for the accurate outlining of objects and areas within images. It also supports instance segmentation, delineating individual objects even when they share a class.

Pixel-Level Classification and Instance Segmentation

The SAM model processes images with a Vision Transformer encoder rather than a recurrent network. CNNs remain pivotal in modern computer vision, but SAM relies mainly on attention to relate distant image regions. Its mask decoder then makes a per-pixel decision: inside or outside the prompted object.

SAM also stands out in instance segmentation. It excels at identifying and outlining individual objects that look similar to one another. This skill is critical wherever multiple instances of an object must be told apart, as in medical imaging or autonomous driving.

Semantic Segmentation and Object Detection

SAM can also contribute to semantic segmentation and object detection, with one caveat: it does not predict class labels. Semantic segmentation labels each pixel with a category, like "person" or "car," offering a deeper understanding of the image. SAM supplies the mask boundaries, and a separate classifier or detector supplies the category.

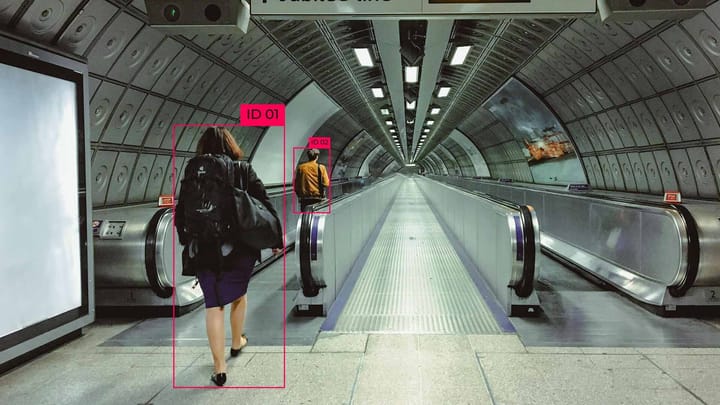

Object detection finds and categorizes items in images with bounding boxes. SAM does not do this itself, but a detector's boxes can be passed to SAM as box prompts to turn each detection into a pixel-accurate mask. This pairing is useful for applications including autonomous vehicles, surveillance, and robotics.

| Segmentation Task | Description | Applications |
|---|---|---|
| Pixel-Level Classification | Assigning each pixel to a specific class or category | Fine-grained segmentation, precise object delineation |
| Instance Segmentation | Identifying and segmenting individual objects within an image | Distinguishing multiple instances of the same object |
| Semantic Segmentation | Assigning each pixel to a predefined class | Understanding scene composition, high-level image analysis |
| Object Detection | Locating and classifying objects within an image | Autonomous vehicles, surveillance systems, robotics |

The SAM model is highly versatile and effective in image segmentation across industries. It's making a big impact in medical imaging, autonomous driving, agriculture, and robotics. SAM is changing the computer vision field, bringing new opportunities for intelligent image analysis.

Setting Up the Segment Anything Environment

Preparing the Segment Anything Model (SAM) for challenging image segmentation requires setting up a suitable environment. This includes installing the necessary dependencies and libraries, then downloading and initializing the SAM model. With this setup in place, SAM can run as intended across different segmentation tasks.

Installing Dependencies and Required Libraries

Before diving deep into SAM's image segmentation features, ensure your system has all essential packages. The official implementation is built on PyTorch and torchvision, so install those first. NumPy handles array data, OpenCV handles image loading and processing, and matplotlib handles plotting. pandas is optional and only useful for tabulating results.

To ease setup, employ package managers like pip or conda. These tools handle library management and help you get compatible versions quickly. The SAM package itself can be installed from its repository with `pip install git+https://github.com/facebookresearch/segment-anything.git`.

Downloading and Loading the SAM Model

The next vital step is acquiring the SAM model. You can find it on GitHub in the facebookresearch/segment-anything repository, which links to pre-trained weights in three sizes (ViT-B, ViT-L, and ViT-H). These weights encode what the model learned during training, enabling high-quality image segmentation.

After obtaining the model, it’s time to integrate it into your working space. This calls for importing the needed modules, like PyTorch. Then you initialize the SAM model with the downloaded weights and move it to the target device, such as a GPU. Once loaded, the model is ready for your segmentation tasks.

| Step | Description |
|---|---|
| Install Dependencies | Use package managers like pip or conda to install the required libraries, such as PyTorch, torchvision, NumPy, OpenCV, and matplotlib. |
| Clone SAM Repository | Clone the Segment Anything Model repository from GitHub to access the model code and the links to pre-trained weights. |
| Download Model Weights | Download the pre-trained SAM model weights, which represent the learned knowledge and patterns for image segmentation tasks. |
| Import Libraries | Import the required libraries, such as PyTorch and the `segment_anything` package. |
| Instantiate SAM Model | Create an instance of the SAM model using the downloaded weights, allowing it to be utilized for image segmentation tasks. |
| Move Model to Device | Move the instantiated SAM model to the appropriate device, such as a GPU, for accelerated computations and improved performance. |
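The loading steps in the table can be sketched roughly as follows. This is a minimal sketch, assuming the official `segment_anything` package and PyTorch are installed and that a checkpoint file has already been downloaded. The helper name `load_sam_predictor` and its `checkpoint_dir` parameter are illustrative and not part of the library; the checkpoint filenames are the ones listed in the official README.

```python
import os

# Checkpoint filenames as published in the official segment-anything README.
CHECKPOINTS = {
    "vit_h": "sam_vit_h_4b8939.pth",
    "vit_l": "sam_vit_l_0b3195.pth",
    "vit_b": "sam_vit_b_01ec64.pth",
}


def load_sam_predictor(model_type="vit_b", checkpoint_dir=".", device=None):
    """Build a SamPredictor from a locally downloaded checkpoint (hypothetical helper)."""
    if model_type not in CHECKPOINTS:
        raise ValueError(f"unknown model type: {model_type!r}")

    # Imported lazily so the heavy dependencies are only needed when loading.
    import torch
    from segment_anything import SamPredictor, sam_model_registry

    if device is None:
        device = "cuda" if torch.cuda.is_available() else "cpu"

    checkpoint = os.path.join(checkpoint_dir, CHECKPOINTS[model_type])
    sam = sam_model_registry[model_type](checkpoint=checkpoint)  # instantiate
    sam.to(device=device)                                        # move to device
    return SamPredictor(sam)
```

After loading, calling `predictor.set_image(image)` on an RGB image computes the image embedding once, and every later prompt reuses it.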

Correctly setting up your Segment Anything environment opens doors to advanced image segmentation. With SAM, you're equipped to take on complex segmentation challenges. It's an opportunity to innovate within the realms of computer vision and deep learning-based image analysis.

Mastering Point Prompts for Precise Segmentation

The Segment Anything Model (SAM) brings an innovative tool: point prompts. This feature allows for precise image segmentation. Users place points strategically on images, achieving detailed segmentation results.

Specifying Foreground and Background Points

Using point prompts in SAM helps distinguish foreground from background. Place foreground points on the object of interest and background points elsewhere. This action gives context, leading SAM to create accurate, focused masks. It excludes irrelevant areas.

For a person against a busy background, add foreground points on the person and background points on the clutter around them. This helps SAM precisely separate the person from their surroundings.
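As an illustration, the official predictor takes point prompts as an array of (x, y) pixel coordinates plus a parallel array of labels, where 1 marks foreground and 0 marks background. The sketch below only builds those arrays; the helper name `make_point_prompts` and the example coordinates are made up for illustration.

```python
import numpy as np


def make_point_prompts(foreground, background=()):
    """Return (point_coords, point_labels) arrays for the given (x, y) points."""
    fg = list(foreground)
    bg = list(background)
    if not fg and not bg:
        raise ValueError("at least one point is required")
    coords = np.asarray(fg + bg, dtype=np.float32).reshape(-1, 2)
    # Label 1 = foreground (include), label 0 = background (exclude).
    labels = np.asarray([1] * len(fg) + [0] * len(bg), dtype=np.int32)
    return coords, labels


# Two points on the person, one on the busy background (illustrative values).
coords, labels = make_point_prompts(
    foreground=[(320, 240), (300, 400)],
    background=[(50, 60)],
)
# With a SamPredictor whose image has been set, these would be passed as:
#   masks, scores, logits = predictor.predict(
#       point_coords=coords, point_labels=labels, multimask_output=True)
```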

Leveraging Multiple Point Prompts for Enhanced Accuracy

Multiple point prompts elevate accuracy significantly. By placing both foreground and background points cleverly, you improve scene comprehension.

Consider situations such as:

- Dealing with intricate objects? Use more foreground points to capture details better.

- Handling occlusions? Place points strategically to resolve them accurately.

- Need fine-grained segmentation? Place points on each instance to guide SAM meticulously.

SAM also allows iterative refinement, refining masks stage by stage. This method is key for complex tasks or subtle adjustments.

| Prompt Type | Description | Benefits |
|---|---|---|
| Foreground Points | Points placed on the object of interest | Guides SAM to focus on the desired object and generate accurate masks |
| Background Points | Points placed on the surrounding regions | Helps SAM exclude irrelevant areas from the segmentation masks |
| Multiple Point Prompts | A combination of foreground and background points | Provides a more comprehensive understanding of the scene, enhancing segmentation accuracy |
| Iterative Refinement | Using the output from one stage as input for the next | Allows for progressive improvement of segmentation masks, fine-tuning results to achieve desired accuracy |

Mastering point prompts unleashes SAM's power in precise, detailed image segmentation. This tool is valuable for varied tasks, from object detection to detailed analysis, by providing flexibility and control.

Utilizing Box Prompts for Region-Based Segmentation

The Segment Anything Model (SAM) brings a new dimension to segmentation with box prompts. This tool allows users to precisely segment an image by selecting specific regions. By highlighting the areas of focus, SAM enhances segmentation accuracy and detail for objects within these regions.

A bounding box is placed around objects to guide SAM’s segmentation process. This method is ideal for images with complex scenes or multiple focal points. It refines the area of interest, leading to more detailed segmentation results within the specified boundaries.

The process is direct when utilizing box prompts for segmentation. SAM takes in the bounding box coordinates, which can come from manual tagging or automated detection. With these inputs, SAM applies its advanced segmentation technologies to process the selected region.
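A common practical detail is the box format. The official predictor expects a length-4 array in XYXY order (left, top, right, bottom), while many annotation tools and detectors emit XYWH (left, top, width, height). The sketch below converts between the two and clips to the image bounds; the function name `xywh_to_xyxy` is illustrative.

```python
import numpy as np


def xywh_to_xyxy(box_xywh, image_width, image_height):
    """Convert (x, y, width, height) to a clipped XYXY float array."""
    x, y, w, h = box_xywh
    if w <= 0 or h <= 0:
        raise ValueError("box width and height must be positive")
    x0 = min(max(x, 0), image_width - 1)
    y0 = min(max(y, 0), image_height - 1)
    x1 = min(max(x + w, 0), image_width - 1)
    y1 = min(max(y + h, 0), image_height - 1)
    return np.array([x0, y0, x1, y1], dtype=np.float32)


box = xywh_to_xyxy((10, 20, 30, 40), image_width=100, image_height=100)
# With a SamPredictor whose image has been set, the box would be passed as:
#   masks, scores, logits = predictor.predict(box=box, multimask_output=False)
```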

Box prompts improve accuracy mainly by reducing ambiguity: the box tells SAM which object is meant, so fewer corrective points are needed. They do not shrink the image-encoding cost, because SAM embeds the full image once regardless of the prompt. Once that embedding exists, each additional box is decoded quickly, which suits images containing many objects.

Furthermore, box prompts enhance segmentation flexibility and interactivity. Users can adjust the bounding boxes to refine results. This iterative process enables precise tailoring of segmentation, meeting specific project needs.

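| Segmentation Approach | Key Features | Typical Use Cases |
|---|---|---|
| Point Prompts | Foreground and background points placed on the image | Objects with irregular shapes or fine details |
| Box Prompts | Bounding box around the object, from manual tagging or automated detection | Complex scenes or images with multiple focal points |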

Box prompts revolutionize region-based segmentation with their focus and efficiency. For tasks like object detection and analysis, they significantly boost the segmentation process. SAM's support for box prompts marks a step forward in segmenting images swiftly and accurately.

Used well, box prompts let SAM deliver intricate and precise segmentation in specific image areas.

Combining Point and Box Prompts for Optimal Results

The Segment Anything Model (SAM) shines when you blend point and box prompts. Doing so enhances image segmentation significantly. It allows SAM to precisely delineate objects, even within crowded and intricate scenes.

Strategies for Effective Prompt Combination

To maximize the utility of point and box prompts, employ these key tactics:

- Analyze the image content and identify the objects of interest that require segmentation.

- Place point prompts on the foreground objects to specify the desired regions for segmentation.

- Use box prompts to define the bounding regions around the objects, providing SAM with additional context.

- Experiment with different combinations of point and box prompts to find the optimal balance for each specific image.

Strategic use of points and boxes ensures SAM delivers segmentation masks that are both exact and thorough.

Handling Complex Scenes with Multiple Objects

Confronting scenes replete with diverse objects requires careful prompt selection. In this case, the approach involves:

- Identifying the specific objects that demand segmentation.

- Accurately delineating the foreground of each object using point prompts.

- Outlining these objects with bounding boxes, thus providing explicit boundary guidelines to SAM.

- Using the precision of point prompts to further refine object boundaries within bounding boxes.

This method allows SAM to differentiate and accurately segment objects within complex and crowded images.
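The per-object procedure above can be sketched as a loop that issues one combined box-and-points prompt per object. This sketch assumes `predictor` is an already initialized `SamPredictor` with an image set (or any object with a compatible `predict` method). The `segment_objects` helper and the dictionary layout of `objects` are hypothetical conventions chosen for this example.

```python
import numpy as np


def segment_objects(predictor, objects):
    """Run one box-plus-points prediction per object.

    Each entry in `objects` is a dict with a "name", an XYXY "box", and
    optional "points" / "labels" (1 = foreground, 0 = background).
    """
    results = []
    for obj in objects:
        points = obj.get("points")
        labels = obj.get("labels")
        masks, scores, _ = predictor.predict(
            point_coords=None if points is None
            else np.asarray(points, dtype=np.float32).reshape(-1, 2),
            point_labels=None if labels is None
            else np.asarray(labels, dtype=np.int32),
            box=np.asarray(obj["box"], dtype=np.float32),
            multimask_output=False,  # the box removes most ambiguity
        )
        results.append(
            {"name": obj["name"], "mask": masks[0], "score": float(scores[0])}
        )
    return results
```

Keeping one prompt set per object, rather than mixing points from several objects in one call, is what lets each returned mask correspond to exactly one object.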

| Prompting Technique | Advantages | Ideal Use Cases |
|---|---|---|
| Point Prompts | Fine-grained control, precise segmentation | Objects with irregular shapes or fine details |
| Box Prompts | Region-based segmentation, object localization | Objects with well-defined boundaries or rectangular shapes |
| Combination of Point and Box Prompts | Optimal segmentation results, handling complex scenes | Images with multiple objects or intricate compositions |

Both point and box prompts offer indispensable advantages. By using them together, you guide SAM to address specific areas while imparting the precision needed for accurate segmentation across different scenarios.

Combining point and box prompts is key to mastering the Segment Anything Model, delivering precise segmentation in complex scenes.

Advanced Segmentation Techniques with SAM

The Segment Anything Model (SAM) introduces advanced segmentation methods. These techniques empower users to precisely segment objects in images. Leveraging deep learning and attention mechanisms, SAM ensures fine-tuned segmentation for accurate object boundaries.

Fine-Tuning Segmentation with Negative Point Prompts

Negative point prompts are a key feature of SAM for refining boundaries. They help exclude certain areas from the segmentation, enhancing precision. Placing negative points strategically directs SAM, focusing it on areas of interest.

This feature is invaluable in complex scenes or with objects featuring detailed boundaries. It overcomes challenges like overlapping or background clutter. By providing exclusion prompts, users refine SAM's focus, attaining segmentation that mirrors actual object boundaries closely.

Iterative Refinement for Precise Object Boundaries

SAM's iterative refinement process incrementally enhances segmentation accuracy. It involves feeding the previous mask prediction, along with any corrective points, back into the model for the next pass. This progressive approach captures intricate details, resulting in highly precise object boundaries.

The technique sequentially sharpens the segmentation, refining boundaries with each step. It’s highly effective for objects with complex shapes or fine details. SAM adapts to the object’s specific features through these iterative refinements, improving its segmentation accuracy significantly.
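One way to realize this loop with the official predictor is to pass the low-resolution logits returned by one call as the `mask_input` of the next, together with the accumulated points. The sketch below shows a single refinement step under that assumption. `refine_mask` is a hypothetical helper, and `predictor` is assumed to be a `SamPredictor` with an image already set.

```python
import numpy as np


def refine_mask(predictor, coords, labels, extra_coords, extra_labels):
    """Two-pass prediction: pick the best first mask, then refine it."""
    coords = np.asarray(coords, dtype=np.float32).reshape(-1, 2)
    labels = np.asarray(labels, dtype=np.int32)

    # Pass 1: request several candidate masks and keep the best-scoring one.
    masks, scores, logits = predictor.predict(
        point_coords=coords, point_labels=labels, multimask_output=True
    )
    best = int(np.argmax(scores))

    # Pass 2: add corrective points (label 0 excludes, 1 includes) and feed
    # back the low-resolution logits of the chosen mask as a 1xHxW array.
    extra_coords = np.asarray(extra_coords, dtype=np.float32).reshape(-1, 2)
    extra_labels = np.asarray(extra_labels, dtype=np.int32)
    refined, refined_scores, _ = predictor.predict(
        point_coords=np.concatenate([coords, extra_coords]),
        point_labels=np.concatenate([labels, extra_labels]),
        mask_input=logits[best][None, :, :],
        multimask_output=False,
    )
    return refined[0], float(refined_scores[0])
```

The same function can be called repeatedly, adding a negative point wherever the mask leaks and a positive point wherever it misses part of the object.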

| Technique | Description | Benefits |
|---|---|---|
| Negative Point Prompts | Indicate areas to be excluded from segmentation | Fine-tune segmentation results and achieve precise object boundaries |
| Iterative Refinement | Progressive improvement of segmentation masks | Capture fine details and produce highly accurate segmentation results |

Combining negative point prompts and iterative refinement, SAM equips users for complex segmentation tasks. These tools are essential for challenging scenarios and objects with intricate boundaries. With SAM’s precision and adaptability, users can tailor segmentation to meet their application's unique needs, ensuring the creation of accurate object masks.

Integrating SAM with Other Computer Vision Techniques

The Segment Anything Model (SAM) enhances image segmentation, and combining it with other computer vision tools extends its reach. Such combinations make more complex tasks possible, pushing the boundaries of image analysis.

Combining SAM with Inpainting for Object Removal

Using SAM with inpainting eases object removal in images. Inpainting techniques fill removed sections with plausible data, ensuring visual coherence. When guided by SAM's precise segmentation, removing desired objects becomes straightforward.

Following object isolation, inpainting models come into play. These include sophisticated systems like Generative Adversarial Networks (GANs), which fill the masked region with realistic content. Together, SAM and an inpainting model can produce clean object removal results.
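Before a mask is handed to an inpainting step, it is often grown by a few pixels so that soft edges and shadows of the removed object are covered too. The sketch below shows that preparation in plain NumPy, producing the 8-bit mask layout (255 = fill, 0 = keep) that many inpainting routines accept. The helper name `to_inpaint_mask`, the square dilation, and the default radius are illustrative choices rather than anything SAM prescribes.

```python
import numpy as np


def to_inpaint_mask(mask, radius=2):
    """Dilate a boolean mask with a square window and return uint8 0/255."""
    mask = np.asarray(mask, dtype=bool)
    if mask.ndim != 2:
        raise ValueError("mask must be a 2-D array")
    h, w = mask.shape
    # Pad with False so the dilation does not wrap around the image border.
    padded = np.pad(mask, radius, mode="constant", constant_values=False)
    grown = np.zeros_like(mask)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            grown |= padded[dy:dy + h, dx:dx + w]
    return grown.astype(np.uint8) * 255
```

The returned array can then be passed, with the original image, to whichever inpainting method is in use.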

Enhancing SAM Segmentation with Deep Learning Architectures

Pairing SAM with other deep learning networks, such as Convolutional Neural Networks (CNNs), extends what its masks can be used for. CNN-based classifiers and detectors excel in semantic understanding. Combined with SAM, they supply the class labels and candidate boxes that SAM itself does not produce.

Transformer architectures are already central to SAM: its image encoder is a Vision Transformer (ViT). Transformers' ability to capture global image context underpins SAM's segmentation quality, and choosing among the released ViT-B, ViT-L, and ViT-H backbones trades speed against accuracy.

| Technique | Benefit |
|---|---|
| Inpainting | Seamless object removal and filling with plausible content |
| Convolutional Neural Networks (CNNs) | Rich feature extraction and semantic understanding |
| Transformer-based Architectures | Capturing long-range dependencies and global context |

Integration with deep learning models amplifies SAM's potential. This combination allows tackling intricate segmentation challenges with precision and detail. It fosters advancements in diverse fields like medicine, self-driving technology, and special effects.

Real-World Applications of Advanced Segmentation with SAM

The Segment Anything Model (SAM) has changed the game in computer vision. Its cutting-edge segmentation abilities are making a mark in areas like medical imaging and autonomous driving. SAM is altering how we process and interpret visual data across different fields.

In the medical imaging world, SAM stands out with its precise segmentation. It helps spot tumors, outline organs, and map out retinal vessels. With SAM's help, healthcare experts get clearer views for diagnosing diseases and planning treatments. This leads to better care for patients.

Autonomous driving also benefits from SAM's skills. SAM segments road scenes into regions such as vehicles, pedestrians, and road markings, with labels supplied by a detector or an annotator. This is especially useful for building labeled training data; on-vehicle use depends on meeting real-time compute budgets.

But SAM doesn't stop there. It's also making waves in satellite imagery analysis, aiding land cover classification and environmental monitoring. By outlining land features and structures, SAM supports sustainable planning and climate change studies.

The Segment Anything Model (SAM) is proving its worth in many areas. Its complex segmenting features are ushering in new eras in healthcare, transport, and environmental protection. This marks a significant step forward for society.

SAM is built for tackling tricky scenes and tailoring to different needs effortlessly. Its unique design makes it a star in a range of segmentation tasks. Be it spotting objects for cars or detailing medical images, SAM is always on point.

| Application Domain | SAM's Role | Key Benefits |
|---|---|---|
| Medical Imaging | Tumor detection, organ segmentation, retinal vessel segmentation | Improved disease diagnosis and treatment planning |
| Autonomous Driving | Segmenting road scenes, identifying vehicles, pedestrians, and road markings | Enhanced safety and reliable navigation for self-driving vehicles |
| Satellite Imagery Analysis | Land cover classification, urban planning, environmental monitoring | Insights for sustainable development, resource management, and climate change studies |

The potential for SAM's segmentation tech is vast and growing. As experts push its boundaries, new, game-changing applications will emerge. SAM's role is vital in numerous fields, promising a future full of advanced visual data analysis and innovation.

Best Practices and Tips for Effective Segmentation with SAM

To get the best results from the Segment Anything Model (SAM), it's key to adopt best practices and tips. Integrating these methods will allow you to unlock SAM's full power. This leads to obtaining segmentation masks that are not only very accurate but also detailed.

Preprocessing Images for Optimal Segmentation Results

Image preprocessing plays a crucial role in achieving top-notch segmentation outcomes with SAM. The official predictor resizes each image and normalizes its pixel values internally, so for inference the main job is to supply a clean RGB image in the expected layout. Making images a uniform size, normalizing them manually, and adding data augmentation matter mostly when fine-tuning SAM or training a companion model.

Outlined below are some preprocessing techniques to consider:

- For inference, pass an 8-bit RGB array in height x width x channel order; images loaded with OpenCV are BGR and need converting first.

- When training a companion model, resize images to a consistent size, like 512x512, for overall dataset uniformity, and normalize pixel values to [0, 1] or [-1, 1] to ease training convergence.

- Employ data augmentation methods, like random crop, flip, and rotation, for a wider variety in training.
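For the inference case, a small normalizing helper can absorb the most common input mismatches. The sketch below assumes the predictor wants an H x W x 3 `uint8` RGB array, and that any floating-point input is scaled to [0, 1]. The name `to_sam_input` and its `channel_order` parameter are made up for this example.

```python
import numpy as np


def to_sam_input(image, channel_order="RGB"):
    """Return an HxWx3 uint8 RGB array from a grayscale, float, or BGR image."""
    arr = np.asarray(image)
    if arr.ndim == 2:
        # Grayscale: repeat the single channel three times.
        arr = np.stack([arr] * 3, axis=-1)
    if arr.ndim != 3 or arr.shape[2] != 3:
        raise ValueError("expected an HxW or HxWx3 image")
    if np.issubdtype(arr.dtype, np.floating):
        # Assumption: float images are scaled to [0, 1].
        arr = np.round(np.clip(arr, 0.0, 1.0) * 255.0)
    arr = arr.astype(np.uint8)
    if channel_order.upper() == "BGR":
        arr = arr[..., ::-1]  # reverse channels: BGR -> RGB
    return np.ascontiguousarray(arr)
```

An image read with OpenCV would go through `to_sam_input(image, channel_order="BGR")` before being handed to the predictor.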

Handling Challenging Scenarios and Edge Cases

Using SAM will eventually bring you to difficult scenes that need special handling. Examples include many overlapping objects, objects with complex edges, and images with variations in lighting and contrast.

For such challenges, the following strategies might prove helpful:

- Mixing point and box prompts can help direct SAM towards the key areas. Correctly using these prompts can make SAM focus better on objects of interest, reducing confusion in complex scenes.

- Distribute the prompts smartly based on image information. For fine details, place them closer to the object's edge. In cases of overlap or occlusion, prompt placement must clearly separate each object.

- Iteration in refining the segmentation can lead to sharper and more accurate object boundaries. Using each segmentation stage's output as the next stage's input allows for a step-by-step enhancement of results.

| Technique | Description | Benefits |
|---|---|---|
| Point Prompts | Set foreground and background points for segmentation guidance | Gives better control and enhances accuracy |
| Box Prompts | Place a bounding box around the object you want to focus on | Makes SAM concentrate on specific regions for improved segmentation |
| Iterative Refinement | Take one stage's output to refine object boundaries in the next stage | Improves the sharpness of the object's edges and captures more details |

By adhering to these best practices and tips, you can efficiently use SAM for meticulous and accurate segmentation in various scenarios. Always experiment with new approaches, tailor your strategies to your project's needs, and consistently assess and improve your segmentation process for the best results.

Evaluating and Visualizing Segmentation Results

Evaluating the Segment Anything Model (SAM) is key to understanding its efficiency. It helps us pinpoint areas for better image segmentation. By diving into SAM's segmentation outcomes, we extract valuable clues. These show not just its strong points but also where it can do better. Thus, we can enhance its performance.

Quantitative Metrics for Segmentation Performance

Quantitative metrics are essential in the assessment of segmentation tasks. They offer clear, objective insights into the quality and precision of SAM's results. Key metrics used in this evaluation include:

- Intersection over Union (IoU): the number of pixels shared by the predicted mask and the ground-truth mask, divided by the number of pixels in either. It ranges from 0 (no overlap) to 1 (perfect match) and shows how accurately the model outlines object edges.

- Dice coefficient: twice the number of shared pixels divided by the total number of pixels in the two masks. It considers both overlap and region size.

- Pixel accuracy: the fraction of pixels correctly identified as foreground or background. It's a direct accuracy indicator, but it can look high when the object is small relative to the background.

By using these metrics, you can compare SAM's segmentation against ground-truth masks. You can also compare it with other recent segmentation approaches. This shows where SAM is strong and where it might need enhancement.
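For binary masks, all three metrics reduce to a few array operations. The sketch below is a minimal NumPy version. Returning 1.0 when both masks are empty is a convention chosen here, not a universal standard, and the function name `segmentation_metrics` is illustrative.

```python
import numpy as np


def segmentation_metrics(pred, target):
    """Compute IoU, Dice, and pixel accuracy for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    if pred.shape != target.shape:
        raise ValueError("masks must have the same shape")

    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    total = pred.sum() + target.sum()

    # Convention: two empty masks count as a perfect match.
    iou = intersection / union if union else 1.0
    dice = 2 * intersection / total if total else 1.0
    accuracy = (pred == target).mean()
    return {"iou": float(iou), "dice": float(dice), "pixel_accuracy": float(accuracy)}


# Tiny worked example: the prediction has one extra foreground pixel.
metrics = segmentation_metrics([[1, 1], [0, 0]], [[1, 0], [0, 0]])
```

In this example the masks share 1 pixel and cover 2 pixels in total, so IoU is 1/2, Dice is 2/3, and 3 of the 4 pixels agree.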

Visualizing and Interpreting Segmentation Masks

Visual inspection of segmentation masks is crucial in evaluation. Overlaying these masks on the original images lets you see the segmented areas clearly. This gives you a feel for how well the model performs.

Use of various visualization methods enhances result interpretation:

| Visualization Technique | Description |
|---|---|
| Color-coding | It assigns unique colors to each segmented area. This aids in distinguishing between different objects or classes. |
| Alpha blending | This approach makes the segmentation transparent over the original image. It provides context and lets you compare it to the true segmentation. |
| Contour drawing | Drawing outlines around segmented objects highlights their boundaries. It makes it easier to understand their shapes. |

Using these visualization methods offers deep insights into the model. You can identify strong and weak points clearly. This helps in making solid choices for enhancing and perfecting SAM's performance in segmentation tasks.
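Alpha blending, for instance, needs nothing beyond NumPy. The sketch below tints the masked pixels of an RGB image with a chosen color and leaves the rest untouched. The function name `overlay_mask`, the default color, and the default opacity are arbitrary choices for illustration.

```python
import numpy as np


def overlay_mask(image, mask, color=(255, 0, 0), alpha=0.5):
    """Blend `color` into the masked pixels of an HxWx3 uint8 image."""
    image = np.asarray(image, dtype=np.float32)
    mask = np.asarray(mask, dtype=bool)
    if image.shape[:2] != mask.shape:
        raise ValueError("image and mask sizes differ")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")

    out = image.copy()
    tint = np.asarray(color, dtype=np.float32)
    # Weighted average of the original pixel and the tint color.
    out[mask] = (1.0 - alpha) * image[mask] + alpha * tint
    return np.clip(out, 0, 255).astype(np.uint8)
```

Calling it once per mask with a different color gives a simple form of color-coding, and the result can be displayed with matplotlib's `imshow`.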

Future Directions and Potential Enhancements for SAM

As an active research topic, Segment Anything (SAM) has considerable room to grow. One direction is combining SAM with complementary deep learning models, such as graph networks or additional transformer modules, on top of its existing ViT encoder. Such a hybrid approach could refine SAM's segmentation abilities by modeling the scene's context and dependencies more explicitly.

Another prospective area of growth lies in extending SAM towards video segmentation. Using temporal information across frames could make segmentation more consistent and accurate in dynamic scenes. It would open up applications in video and action analysis and strengthen object tracking.

Refining SAM for efficient, real-time segmentation on modest hardware is another goal. Streamlining SAM's architecture would improve its practical utility and broaden its range of applications. This focus on efficiency may well position SAM for more widespread usage.

An exciting possibility is extending SAM to handle a broader array of objects, even rare ones. Techniques like few-shot learning could substantially improve SAM's adaptability to such cases. From medical imaging to autonomous cars, SAM would find new and valuable roles.

| Potential Enhancement | Description | Impact |
|---|---|---|
| Integration with advanced architectures | Combine SAM with transformer-based models or graph convolutional networks | Improved contextual understanding and segmentation accuracy |
| Video segmentation | Leverage temporal information across frames | Consistent and accurate segmentation in dynamic scenes |
| Efficient and lightweight versions | Optimize architecture and reduce computational complexity | Real-time segmentation on resource-constrained devices |
| Handling rare or novel objects | Utilize few-shot learning or unsupervised domain adaptation | Enhanced generalization capabilities and applicability to various domains |

Advancements in image segmentation and deep learning promise ongoing evolution. They will drive SAM and similar models to redefine the segmentation field. SAM's influence could, indeed, reshape our engagement and understanding of visual data in myriad sectors.

Summary

The rise of advanced segmentation through the Segment Anything (SAM) model is changing image segmentation and computer vision. SAM combines deep learning, attention mechanisms, and adaptable prompting to segment images precisely and efficiently. It lowers labeling costs through interactive prompts such as point selection and bounding box drawing. Annotation tools built around SAM can then convert the resulting masks into editable polygons.

Its usefulness and efficiency span healthcare, autonomous driving, and agriculture. In healthcare, it helps detect tumors and analyze medical scans, especially where data labels are scarce. For autonomous driving, SAM is vital in real-time object identification, which boosts safety on the road. In agriculture, it aids in monitoring crop health and efficiently managing resources through detailed segmentation.

Advancements are continually improving the SAM model and its applications. Combining SAM with graph networks or additional transformer modules may capture more context. Another goal is to make SAM lighter for easier use on devices with few resources. SAM's progress signifies a significant step in the evolution of advanced image segmentation techniques.

FAQ

What is Segment Anything (SAM), and how does it enhance image segmentation?

Segment Anything (SAM) is a promptable segmentation model from Meta AI. It combines a Vision Transformer image encoder, a prompt encoder, and a lightweight mask decoder to achieve precise image segmentation. It lets users specify points and boxes for segmentation, which makes selecting an object about as simple as using a selection tool in image editing software like Adobe Photoshop.

What are the key capabilities of the SAM model?

The SAM model is highly effective at promptable segmentation. It produces a mask for each prompt, separates individual object instances, and can feed semantic segmentation when paired with a model that supplies class labels. On its own, SAM outlines objects but does not name them.

How do point prompts work in SAM, and what are their benefits?

Point prompts mark foreground and background locations to guide segmentation. Foreground points tell the system which object to include, and background points help exclude unwanted areas. Using several point prompts can greatly improve segmentation accuracy.

What are box prompts, and how do they contribute to region-based segmentation in SAM?

Box prompts let you draw a bounding box around an object of interest. This directs SAM to focus on that specific region. By using a box prompt, SAM generates more detailed masks for the object. They are useful when the image has multiple objects, or you need to focus on a specific part.

How can combining point and box prompts lead to optimal segmentation results?

Combining point and box prompts in SAM can optimize segmentation. Skillfully placing these prompts based on the image content enhances results. SAM can then accurately segment all objects, even in complex settings. This approach maximizes segmentation quality.

What advanced segmentation techniques can be applied with SAM?

SAM allows for progressive segmentation refinements, involving strategies like negative point prompts and iterative refinement. Negative point prompts refine object boundaries for more precise segmentation. Iterative refinement uses segmentation iterations to improve details, enhancing mask quality.

How can SAM be integrated with other computer vision techniques?

Integrating SAM with other computer vision methods enhances its capabilities. For example, merging SAM with inpainting enables seamless object removal. Deep learning models such as CNN-based detectors and classifiers can supply boxes and class labels, adding semantic understanding to SAM's masks.

What are some real-world applications of advanced segmentation with SAM?

SAM finds applications in various fields. In medicine, it aids in tumor and organ segmentation, crucial for diagnosis. In autonomous cars, it precisely identifies key elements in road scenes. For satellite images, it supports classifications in urban planning and environmental monitoring.

What are some best practices and tips for achieving optimal segmentation results with SAM?

To get the best from SAM, supply clean RGB images; the predictor handles resizing and normalization for inference. Resize, normalize, and augment data yourself when fine-tuning or training a companion model. For tricky scenarios, use point and box prompts creatively. Adjust prompt placement and consider iterative refinement for the best results.

How can the performance of SAM segmentation be evaluated and visualized?

Quantitative measures like IoU, Dice coefficient, and pixel accuracy assess SAM's segmentation. They compare results with known standards for objective evaluation. Visualizing results with color coding and overlay techniques enhances the understanding of segmentation quality.
