Building Weak Labeling Frameworks: Faster Annotation with Imperfect Signals

Weak labeling frameworks combine many imperfect labeling signals to build models faster, even when the supervision signals are noisy. This approach speeds up both annotation and training, and it is in line with the trend in machine learning toward improving data quality rather than increasing model complexity.

Working with large unstructured datasets allows probabilistic labels to be generated at scale, reducing the time to market for AI projects. It also makes it easier to train machine learning models on diverse data, which tends to produce more reliable results.

Key Takeaways

- Weak labeling frameworks can significantly reduce the time required for annotation.

- Combining multiple labeling signals can speed up model development even when individual labels are inaccurate.

- Training on large unstructured datasets reduces the time required for AI projects to reach the market.

- A data-driven approach improves the stability and generalization ability of the model.

Foundations of Weak Labeling: Concepts and Techniques

Weak labeling is an approach in machine learning that uses noisy signals to speed up the annotation process. By understanding and using these signals effectively, organizations can significantly reduce the time and cost of data labeling.

Understanding Noisy Supervision Signals

Noisy supervision signals are imperfect or indirect annotations derived from heuristics, such as regex patterns or existing models. These signals are often less accurate than manual labels but can be generated at scale. For instance, in a spam detection task, a labeling function might flag emails containing the word "free" as spam, even though this rule may not always be accurate.
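The spam rule described above can be sketched as a small labeling function. This is a minimal illustration, not a specific framework's API: the names `lf_contains_free`, `SPAM`, `HAM`, and `ABSTAIN` are assumptions made for the example, and the sample emails are invented.

```python
import re

# Label values are an assumed convention for this sketch.
SPAM, HAM, ABSTAIN = 1, 0, -1

# Match "free" as a whole word, ignoring case.
FREE_PATTERN = re.compile(r"\bfree\b", re.IGNORECASE)


def lf_contains_free(email_text: str) -> int:
    """Vote SPAM if the word 'free' appears; otherwise abstain."""
    return SPAM if FREE_PATTERN.search(email_text) else ABSTAIN


emails = [
    "Claim your FREE prize now!",
    "Are you free for lunch on Friday?",  # the rule fires here too: a noisy vote
    "Quarterly report attached.",
]
votes = [lf_contains_free(text) for text in emails]
```

The second email shows why such a rule is only a weak signal: it fires on a harmless message. Abstaining instead of voting `HAM` when the pattern is absent keeps the function from asserting more than it knows.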

These signals are combined using probabilistic methods, such as Bayesian approaches, to produce more accurate labels. This process is particularly useful when large amounts of unlabeled data are available, as it enables the rapid generation of training datasets.
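One simple way to combine votes probabilistically is a naive Bayes style aggregation. The sketch below assumes binary labels, that each labeling function errs independently, and that a rough accuracy estimate is available for each function; the function name `posterior_positive` and the accuracy values are hypothetical. Production frameworks typically estimate these accuracies from the data instead of taking them as inputs.

```python
import math

ABSTAIN = -1  # assumed convention: a labeling function that does not vote


def posterior_positive(votes, accuracies, prior=0.5):
    """Return P(label = 1 | votes), treating the votes as independent.

    votes:      one vote per labeling function (1, 0, or ABSTAIN)
    accuracies: assumed probability that each function's vote is correct
    prior:      assumed prior probability of the positive class
    """
    log_odds = math.log(prior / (1 - prior))
    for vote, acc in zip(votes, accuracies):
        if vote == ABSTAIN:
            continue  # an abstention carries no evidence
        weight = math.log(acc / (1 - acc))
        log_odds += weight if vote == 1 else -weight
    return 1 / (1 + math.exp(-log_odds))


# Two functions disagree and one abstains; the more accurate one dominates.
p = posterior_positive([1, 0, ABSTAIN], [0.7, 0.9, 0.6])
```

With these assumed accuracies the result leans toward the negative class, because the function that voted 0 is trusted more than the one that voted 1.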

Pros and Cons of Weak Supervision Techniques

Weak labeling frameworks are a data annotation method that uses imprecise or incomplete signals to label data, for example text spans or sentiment. Weak signals are signals that are not accurate enough on their own for complete annotation. This approach allows you to start training a model without a fully hand-labeled dataset.

Advantages:

- Fast data annotation. Automatic or semi-automatic labeling speeds up the data preparation process.

- Scalability. It allows you to work with large volumes.

- Cost reduction. Reduces time and resources for annotation.

- Flexibility. Uses various sources of weak labels.

Cons:

- Low label accuracy. Because the labels are noisy, the model can learn from erroneous data.

- Difficulty in assessing quality. It is difficult to determine the correctness of the label without additional validation.

- The need for further training. Models trained on weak labels often require additional training or fine-tuning on verified data.

Designing an Efficient Annotation Process

Creating an efficient annotation process involves a systematic approach that emphasizes iterative development and continuous refinement. This helps the production of training data scale with the needs of machine learning models.

Iterative Labeling Function Development

Iterative development of labeling functions focuses on automating the data annotation process and reducing reliance on manual labeling. Start by defining the goals of your labeling functions and testing the first versions on a small sample of data. Add a small set of expert-verified labels so that you can check whether your annotated data is correct, then compare your automated labels with the manually verified ones.

Implement feedback loops. Expert feedback during the annotation process will improve the quality of your training data.
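The comparison against manually verified labels can be sketched as two numbers per labeling function: coverage (how often it votes) and accuracy (how often its votes match the expert labels). The helper `evaluate_lf` and the sample data are assumptions made for illustration.

```python
ABSTAIN = -1  # assumed convention: the labeling function did not vote


def evaluate_lf(votes, gold):
    """Return (coverage, accuracy) of one labeling function on a verified sample.

    coverage: fraction of examples where the function voted
    accuracy: fraction of its votes that match the expert label,
              or None if it never voted
    """
    fired = [(v, g) for v, g in zip(votes, gold) if v != ABSTAIN]
    coverage = len(fired) / len(votes) if votes else 0.0
    accuracy = sum(v == g for v, g in fired) / len(fired) if fired else None
    return coverage, accuracy


votes = [1, 1, ABSTAIN, 0, ABSTAIN]  # output of a labeling function
gold = [1, 0, 0, 0, 1]               # expert-verified labels for the same items
coverage, accuracy = evaluate_lf(votes, gold)
```

Tracking both numbers across iterations makes the feedback loop concrete: a function with high coverage but low accuracy is a candidate for tightening, while one with high accuracy and low coverage may be worth generalizing.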

Combining Heuristic Rules with Machine Learning

Weak labeling combines heuristics with machine learning models. Heuristics are simplified rules used to make decisions, and here they automate the creation of labels. On their own they are noisy, but they produce more accurate labels when combined with probabilistic methods such as Bayesian approaches, which use Bayes' theorem to update the probability of an event as new data arrives. This integration helps when working with large amounts of unlabeled data.

Probabilistic labeling methods assign probability scores to labels. Because these methods take uncertainty into account, they tend to produce more robust models. In text classification tasks, for example, probabilistic labels capture the ambiguity between categories. With well-developed heuristics, a few hours of expert time can produce high-quality training data.
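To show how a probabilistic label can enter training, the sketch below computes cross-entropy against a soft target instead of a hard 0/1 label. The function name `soft_cross_entropy` and the example distributions are assumptions for illustration; most training libraries offer an equivalent loss.

```python
import math


def soft_cross_entropy(predicted, target, eps=1e-12):
    """Cross-entropy between a predicted distribution and a probabilistic label.

    predicted: model probabilities per class (should sum to 1)
    target:    probabilistic label per class (should sum to 1)
    eps:       floor that avoids log(0)
    """
    return -sum(t * math.log(max(p, eps)) for p, t in zip(predicted, target))


# A weak label that says "70% spam, 30% not spam".
soft_label = [0.7, 0.3]

matched = soft_cross_entropy([0.7, 0.3], soft_label)          # mirrors the label
overconfident = soft_cross_entropy([0.99, 0.01], soft_label)  # near-certain spam
```

Under a soft target, the overconfident prediction incurs a larger loss than the one that mirrors the label's uncertainty, so the model is not pushed to be certain about examples the labeling functions disagree on.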

Scaling Annotation: Tools and Techniques

Scaling annotation is the process of accurately annotating large amounts of data. It is important for large machine-learning projects that require working with millions of examples of text, images, or other information. Tools for scaling annotation include automated tools, crowdsourcing platforms, and open-source tools. Some of the popular ones are:

- spaCy is an NLP library that offers automatic text annotation, such as part-of-speech tagging and named entity recognition.

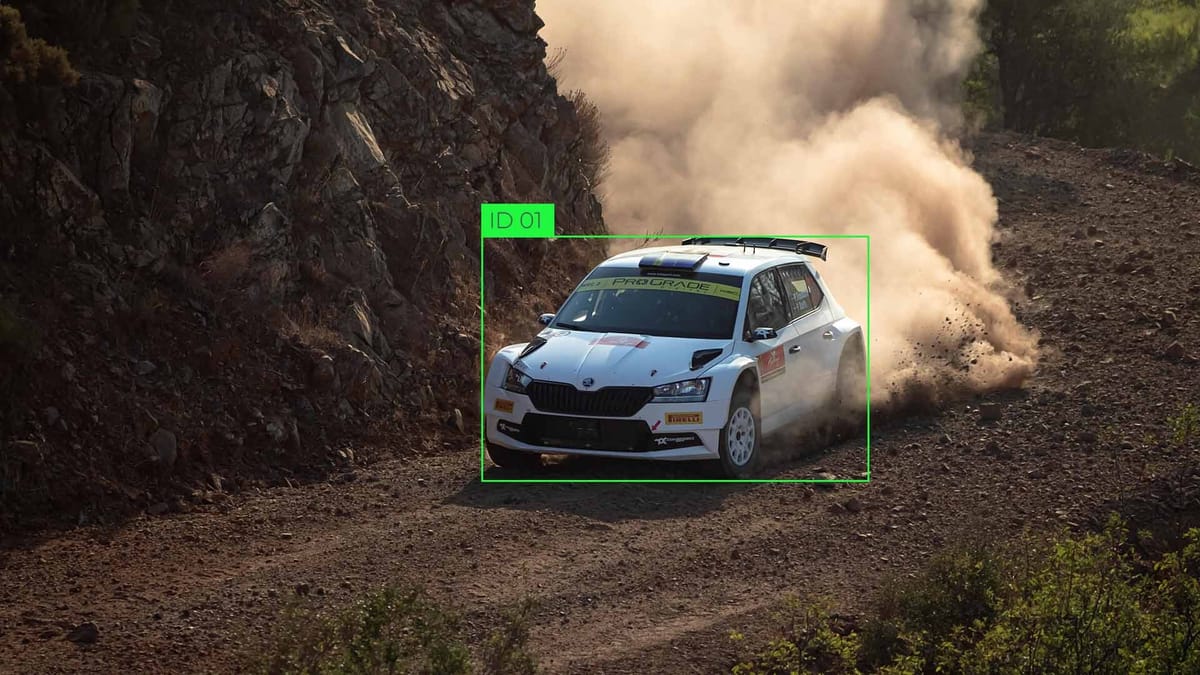

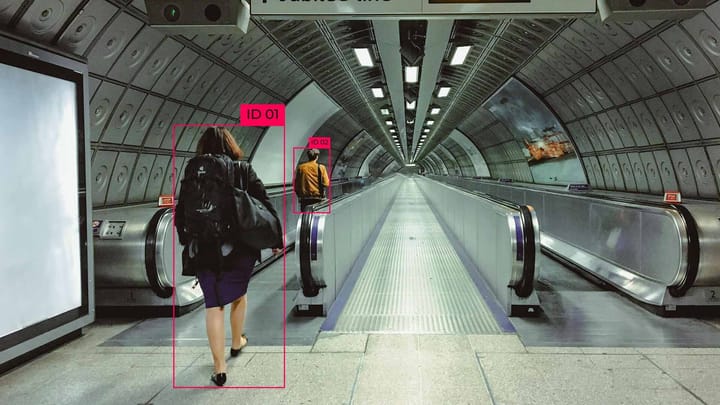

- Keylabs is a platform for creating annotations for images and videos. It offers semi-automated annotation tools.

- Amazon Mechanical Turk (MTurk) is a platform for performing small tasks, such as text and image annotation, with the help of humans.

Scaling annotation methods:

- Model-assisted pre-annotation. Preliminary labels are created using pre-trained models, after which human annotators check or correct them.

- Active learning. Models select uncertain examples for annotation, allowing humans to focus on complex data.

- Weak supervision techniques. Using "weak signals" or incomplete labels to speed up annotation.
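The active learning step in the list above can be sketched as uncertainty sampling for a binary classifier: the examples whose predicted probability is closest to 0.5 are sent to human annotators first. The function name `select_most_uncertain` and the probabilities are hypothetical.

```python
def select_most_uncertain(probabilities, k):
    """Return indices of the k examples whose P(positive) is closest to 0.5."""
    ranked = sorted(
        range(len(probabilities)),
        key=lambda i: abs(probabilities[i] - 0.5),
    )
    return ranked[:k]


# Assumed model outputs for five unlabeled examples.
probs = [0.98, 0.52, 0.10, 0.45, 0.70]
to_review = select_most_uncertain(probs, k=2)
```

Here the second and fourth examples are selected, since the model is least sure about them. Distance from 0.5 is only one possible uncertainty measure; entropy or margin-based scores are common alternatives for multi-class problems.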

Conclusion

Weak labeling frameworks are a strong alternative to traditional annotation methods. Using noisy supervision signals reduces the need for manual labeling and allows large, high-quality datasets to be created with minimal annotator intervention.

One advantage of weak labeling is the ability to generate labeled data at scale. Classifiers need such data for training, and generating it from many sources makes it possible to build diverse datasets.

Iterative refinement of labeling rules improves model performance. Weak supervision methods produce more accurate labels by combining heuristic rules with probabilistic methods, resulting in more reliable classifiers. This approach is especially useful in fields such as law and medicine, where expert annotation is expensive.

Weak supervision strategies provide a solid foundation for the future of data annotation. They are already changing how artificial intelligence projects are built, making them a valuable tool for organizations that work with large datasets.

FAQ

What is weak supervision, and how does it differ from traditional supervision in machine learning?

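Weak supervision trains models with imperfect or noisy labels, often derived from heuristics or pre-existing patterns. Traditional supervision, by contrast, relies on manually verified labels for every training example.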

How can weak labeling frameworks improve the efficiency of data annotation processes?

Weak labeling frameworks leverage heuristic rules and machine learning to automate the labeling process, reducing the need for manual annotation.

What are the key challenges when implementing weak supervision techniques?

The primary challenges include managing label noise, ensuring model robustness, and balancing the trade-off between annotation speed and accuracy.

How does probabilistic labeling enhance model performance in weak supervision scenarios?

Probabilistic labeling assigns confidence scores to data labels and allows models to learn from uncertain data.

How does active learning complement weak supervision in machine learning workflows?

Active learning selects the most informative samples for human annotation, reducing the need for extensive labeled datasets. When combined with weak supervision, it helps balance automation and precision.
