Comparing YOLOv10 with Other Object Detection Models

In the rapidly evolving field of computer vision, object detection models are critical for real-world applications. YOLOv10, introduced in May 2024, has set a new benchmark for efficiency and performance. It improves on its predecessors by substantially reducing computational overhead and latency; its smallest variant, YOLOv10-N, cuts latency by about 70% compared with YOLOv8-N. This advancement places YOLOv10 at the forefront of the field, making it a compelling subject for comparison.

While YOLOv8 and earlier versions have shown impressive accuracy, YOLOv10 outperforms them in mean Average Precision (mAP) on the MS COCO dataset, and its architectural innovations are key to that success. This article compares YOLOv10 with SSD, Faster R-CNN, and RetinaNet, and also examines its accuracy gains and parameter reductions relative to YOLOv8 and YOLOv9.

Key Takeaways

- YOLOv10's launch marks a substantial leap in object detection performance, with considerable reduction in latency and computational requirements.

- YOLOv10 sets new benchmarks for both accuracy and speed, surpassing YOLOv8 and YOLOv9 and making it well suited to real-time applications.

- The NMS-free training approach and optimization of YOLOv10 propel it ahead in model comparisons with SSD, Faster R-CNN, and RetinaNet.

- YOLOv10's variants, from Nano to Extra Large, offer different speed-accuracy trade-offs to match specific computational needs and constraints.

- YOLOv10 sets a new industry standard for object detection models, forging a path for future innovations in the field.

Understanding YOLOv10's Breakthrough in Object Detection

YOLOv10 represents a major advancement in object detection, introducing a novel NMS-free approach and architectural innovations. These enhancements significantly boost its performance. This model excels in real-time object detection, expanding its use and setting new standards for speed and efficiency in computer vision.

YOLOv10's NMS-Free Approach and Real-Time Detection

YOLOv10 adopts an NMS-free approach built on consistent dual assignments during training: a one-to-many head provides rich supervision while training, and a one-to-one head learns to output a single prediction per object. At inference, only the one-to-one head is used, which removes the need for non-maximum suppression (NMS), a post-processing step that adds latency and complicates end-to-end deployment. This streamlining yields significant latency reductions, making YOLOv10 well suited to real-time object detection.
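For readers unfamiliar with the step YOLOv10 removes, the sketch below shows classic greedy NMS in plain Python: keep the highest-scoring box, discard any remaining box that overlaps it beyond an IoU threshold, and repeat. It is a generic textbook version written for illustration, not code from YOLOv10 or any earlier YOLO release; the `(x1, y1, x2, y2)` box format, the class-agnostic treatment, and the 0.5 threshold are assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: Sequence[Box], scores: Sequence[float], iou_thresh: float = 0.5) -> List[int]:
    """Return indices of kept boxes, highest score first (class-agnostic)."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining candidate that overlaps the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep


if __name__ == "__main__":
    boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
    scores = [0.9, 0.8, 0.7]
    print(greedy_nms(boxes, scores))  # [0, 2]: box 1 is a near-duplicate of box 0
```

The data-dependent loop over pairwise IoU checks is the part an NMS-free detector avoids at inference time.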

Enhanced Architectural Innovations in YOLOv10

YOLOv10's architectural innovations extend beyond its NMS-free training. It features an upgraded CSPNet backbone, significantly improving feature extraction. It also integrates a PAN neck for efficient multi-scale feature fusion and employs separate heads for training and inference. These enhancements ensure YOLOv10 excels in real-time detection, robustness, and accuracy, efficiently addressing diverse object detection demands.
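The two heads are trained with what the YOLOv10 paper calls consistent dual assignments: both rank candidate predictions for a ground-truth object with the same matching metric, m = s * p^alpha * IoU^beta, where s is a spatial prior indicating whether the prediction's anchor point lies inside the object, p is the classification score, and IoU is the box overlap. The one-to-many head takes the top-k candidates as positives and the one-to-one head only the top-1. The simplified single-object sketch below follows that description; the defaults alpha = 0.5, beta = 6, and k = 10 are borrowed from the task-aligned assigner used in earlier YOLO versions and should be treated as assumptions, and a real assigner also resolves conflicts between multiple objects.

```python
from typing import List, Sequence, Tuple


def matching_metric(spatial_prior: Sequence[float], cls_score: Sequence[float],
                    iou: Sequence[float], alpha: float = 0.5, beta: float = 6.0) -> List[float]:
    """m = s * p**alpha * IoU**beta for every candidate prediction of one ground-truth object."""
    return [s * (p ** alpha) * (u ** beta) for s, p, u in zip(spatial_prior, cls_score, iou)]


def dual_assign(metric: Sequence[float], topk: int = 10) -> Tuple[List[int], List[int]]:
    """Return (one-to-many positives, one-to-one positive), both ranked by the shared metric."""
    ranked = sorted(range(len(metric)), key=lambda i: metric[i], reverse=True)
    candidates = [i for i in ranked if metric[i] > 0]  # s = 0 rules a prediction out
    return candidates[:topk], candidates[:1]


if __name__ == "__main__":
    # Four hypothetical predictions for one object; the last anchor lies outside it (s = 0).
    m = matching_metric([1, 1, 1, 0], [0.9, 0.6, 0.8, 0.95], [0.8, 0.9, 0.5, 0.9])
    o2m, o2o = dual_assign(m, topk=2)
    print(o2m, o2o)  # [1, 0] [1]
```

Because both heads share one ranking, the one-to-one positive is always among the one-to-many positives, so the two heads receive aligned supervision. Note that prediction 1 wins despite a lower class score than prediction 0, because the high IoU exponent rewards its tighter box.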

Each YOLOv10 configuration, from Nano to Extra Large, is designed to balance speed and accuracy for different application needs. Larger variants can handle high-resolution surveillance feeds, while the smallest ones suit embedded systems. By reducing parameters and computational overhead, YOLOv10 marks an important step in the evolution of object detection technology.

With its strategic design choices, YOLOv10 achieves state-of-the-art performance across benchmarks. It showcases how architectural innovations can transform real-time object detection, making it more accessible and effective for a broader range of applications.

An Overview of Object Detection Models Evolution

The evolution of computer vision is a story of technological leaps, and object detection models illustrate it well. From basic feature detection to advanced deep learning frameworks like YOLOv10, this evolution marks key milestones in AI and shows how dramatically machines' ability to interpret visual data has changed.

At first, object detection relied on handcrafted features and classifiers. The Viola-Jones detector (2001) was a major advance, using Haar-like features for real-time face detection. In 2005, the HOG detector improved on this for pedestrian detection, using histograms of oriented gradients computed over a dense grid of image cells. These early methods, though groundbreaking, were limited in versatility and accuracy.

The advent of deep learning brought a new era. R-CNN's success in 2014 was the turning point, shifting object detection from handcrafted features to convolutional neural networks (CNNs). Fast R-CNN and Faster R-CNN, both published in 2015, then brought significant speed improvements through architectural and processing enhancements. Around the same time, the YOLO series introduced a single-stage approach that predicts boxes and classes in one pass, and it has since evolved through many versions into YOLOv10.

Here's a closer look at how these models have evolved over time:

| Year | Model | Technological Advancements |
|---|---|---|
| 2001 | Viola-Jones Detectors | Real-time face detection using Haar-like features |
| 2005 | HOG Detector | Pedestrian detection using histograms of oriented gradients over a dense grid of cells |
| 2008 | Deformable Part-based Model (DPM) | Part decomposition and multi-instance learning for enhanced accuracy |
| 2014 onward | R-CNN & subsequent models | Introduction of CNNs, revolutionizing object detection through deep learning |
| 2017 | Feature Pyramid Networks (FPN) | Improved small object detection with a structured top-down architecture |

The YOLO series played a significant role in this timeline, simplifying and speeding up object detection. YOLOv10 represents the pinnacle of these efforts, combining advanced neural network enhancements for real-time, precise detections. This evolution is not just about speed and efficiency; it's also about making object detection more sensitive and accurate across various applications.

To explore how deep learning has transformed object detection, visit viso.ai. It provides an in-depth analysis of the significant milestones and current trends in the field.

Looking ahead, the evolution of computer vision will continue to advance with new algorithmic strategies and increased computational power. This will set new standards for how machines understand and interact with their surroundings. The ongoing development of models like YOLOv10 within the YOLO series highlights the dynamic and evolving nature of object detection technologies.

The Significance of Accuracy and Speed in Object Detection Models

The quest for better object detection models has led to the development of YOLOv10, which excels in both detection accuracy and inference speed. These qualities are vital in today's tech world, where real-time analysis and reliability are key.

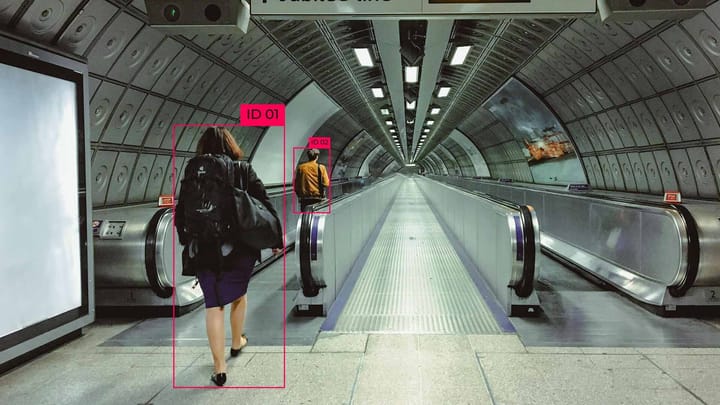

YOLOv10 achieves a remarkable balance between accuracy and speed. Reported throughput reaches up to 202 frames per second (FPS) while maintaining high detection accuracy, although FPS figures depend heavily on the hardware, input resolution, and model variant. This makes it valuable in industries like autonomous driving and real-time surveillance.

Comparing Inference Speed of YOLOv10 and Competitors

When it comes to inference speed, YOLOv10 outshines many competitors. Traditional two-stage models like Faster R-CNN offer high accuracy at the expense of speed, which makes them less suitable for real-time data processing. YOLOv10, by contrast, delivers much higher speed with competitive accuracy, which is essential for real-time applications.

Impact of Model Accuracy on Practical Applications

High model accuracy is vital for reducing false positives and improving reliability. YOLOv10's enhanced detection accuracy is critical in environments where precision is essential. This includes pedestrian detection for autonomous vehicles and obstacle detection for UAVs. YOLOv10's advanced features and optimizations deliver detailed and dependable detection capabilities, tailored for modern technology applications.

Using YOLOv10 in your systems means adopting a model that excels in both speed and accuracy, providing a strong foundation for robust object detection pipelines. Whether for vehicle detection, traffic monitoring, or advanced surveillance, YOLOv10 offers the capabilities needed to handle complex object detection tasks efficiently.

YOLOv10 vs SSD: Bridging the Gap in Performance

In the evolving landscape of object detection models, the performance gap between YOLOv10 and SSD is striking. YOLOv10 introduces cutting-edge advancements that boost accuracy and shorten detection times, setting a new benchmark in the field.

YOLOv10's refined architecture eliminates the need for Non-Maximum Suppression (NMS), streamlining the processing pipeline. This fundamental enhancement allows YOLOv10 to outperform SSD, particularly in real-time processing scenarios.
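With NMS out of the pipeline, post-processing reduces to ranking the one-to-one head's predictions by confidence and keeping the best few. The sketch below is a minimal illustration under that assumption; `max_det=300` and `conf_thresh=0.25` are illustrative values, not settings taken from the YOLOv10 release, and the `(x1, y1, x2, y2)` box format is assumed.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def nms_free_postprocess(scores: Sequence[float], boxes: Sequence[Box],
                         max_det: int = 300, conf_thresh: float = 0.25) -> List[Tuple[float, Box]]:
    """Keep the top-scoring predictions of a one-to-one head; no box-to-box IoU checks."""
    ranked = sorted(zip(scores, boxes), key=lambda sb: sb[0], reverse=True)
    return [(s, b) for s, b in ranked[:max_det] if s >= conf_thresh]


if __name__ == "__main__":
    dets = nms_free_postprocess([0.3, 0.9, 0.1, 0.6],
                                [(0, 0, 1, 1), (1, 1, 2, 2), (2, 2, 3, 3), (3, 3, 4, 4)],
                                max_det=2)
    print(dets)  # [(0.9, (1, 1, 2, 2)), (0.6, (3, 3, 4, 4))]
```

This shortcut is only safe for a detector trained to emit one prediction per object. Applied to SSD's raw outputs, which are not trained that way, it would leave many duplicate boxes; that is why SSD still needs NMS.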

Let's dive into the numbers to grasp the practical implications:

| Feature | YOLOv10 | SSD |
|---|---|---|
| Accuracy (mAP@50) | Significantly higher | Lower |
| Speed (FPS on embedded devices) | 24.6 FPS | Varies; generally slower |
| Architectural Efficiency | High (no NMS required) | Moderate (relies on NMS) |
| Application Suitability | Real-time detection | Real-time capable, but less accurate |
| Computational Load | Lower | Higher |

YOLOv10 shows a clear advantage in real-time applications. Its ability to maintain high precision while running efficiently on embedded systems is essential for applications like autonomous driving and real-time surveillance.

SSD, while effective, has higher latency and computational cost than YOLOv10, which limits its performance in time-sensitive tasks. YOLOv10's lead across these comparisons makes it a strong long-term choice for fields requiring swift and accurate detection.

Understanding the differences between YOLOv10 and SSD is key when selecting an object detection model, especially in environments where both speed and accuracy matter. It helps you make informed decisions and get the most value from your technology investment.

YOLOv10 vs Faster R-CNN: Which Is Better for Your Use Case?

When comparing object detection models, YOLOv10 and Faster R-CNN stand out. Both are top-tier detectors, yet their effectiveness differs across scenarios, so it is essential to understand their strengths and how they fit various use cases.

YOLOv10 is known for its speed, outperforming many rivals in detection rates. Building on earlier YOLO versions, it can process images at up to 155 FPS, though the exact figure depends on hardware and model size. This is a game-changer for real-time applications such as autonomous vehicles and surveillance systems.

Faster R-CNN, on the other hand, is valued for precision, a key factor where accuracy is essential. Although slower than YOLOv10, this two-stage detector is often preferred for small or complex objects, which makes it a common choice for medical imaging or quality control, where precision matters more than speed.

| Feature | YOLOv10 | Faster R-CNN |
|---|---|---|
| Use Case Optimization | Suited for real-time processing and applications that need a rapid response. | Preferred where detail and high accuracy are key. |
| Speed (FPS) | Up to 155 FPS | Lower than YOLOv10; suitable for non-time-critical tasks. |
| Accuracy | High, with strong real-time performance. | Very high; excels in complex detection tasks. |
| Deployment | Ideal for edge devices and mobile apps due to lower computational needs. | Requires more powerful hardware; less suited to edge devices. |

The choice between YOLOv10 and Faster R-CNN hinges on your use case. Both models excel in their domains but cater to different needs, and your priority (speed or precision) will determine the best model for your object detection needs.

YOLOv10 vs RetinaNet: A Comparative Analysis

In the competitive landscape of object detection models, comparing YOLOv10 with RetinaNet reveals critical insights into model efficiency and detection accuracy. YOLOv10, known for its speed and accuracy across application scenarios, stands out against RetinaNet, especially in demanding real-time environments.

Recent research reports that YOLOv10 performs well across multiple domains, including industrial automation, smart farming, and wildlife monitoring, making it a strong choice for sectors requiring rapid and accurate object detection.

On the technical side, RetinaNet remains a robust model: in one controlled study on pill identification it reached a mean average precision of 82.89%. However, its frame rate lags well behind newer models like YOLOv10, a critical drawback in real-time applications where speed matters as much as accuracy.

In practical settings, such as surgical guidance in healthcare or quality control in industry, YOLOv10's faster processing and lower latency provide substantial benefits. Combined with high accuracy, this speed enables more effective monitoring and intervention, which are vital in such high-stakes environments.

Smart farming applications also benefit from YOLOv10's advances, as highlighted in analyses by researchers at the University of Huddersfield. Its ability to accurately detect crop-health issues or pests supports farm management and timely intervention, in line with the needs of modern precision farming.

In summary, the YOLOv10 vs RetinaNet comparison points clearly to YOLOv10 for scenarios that demand high detection accuracy together with real-time efficiency. Prospective adopters and developers should weigh these factors when selecting a model for their specific use cases.

Metric-Based Evaluation of YOLOv10 Among Object Detection Models

In object detection, metric-based evaluation is key to measuring model performance. YOLOv10 stands out for its advanced features and streamlined architecture, as an analysis of its parameter counts and computational overhead shows. This section explores how these elements boost YOLOv10's performance and how dataset variety affects its results.
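Most comparisons in this article are expressed in mean Average Precision (mAP). For a single class, AP summarizes the precision-recall curve obtained by walking down the detections in order of confidence; mAP averages AP over classes. The sketch below computes all-point interpolated AP, assuming detections have already been matched to ground truth at a fixed IoU threshold (0.5 for mAP@50). COCO-style mAP differs in detail: it also averages over IoU thresholds from 0.50 to 0.95 and uses 101-point interpolation.

```python
from typing import List, Sequence


def average_precision(is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class at a fixed IoU threshold.

    is_tp: detections sorted by descending confidence; True if the detection was
    matched to a previously unmatched ground-truth box, False otherwise.
    num_gt: number of ground-truth boxes for the class.
    """
    if num_gt <= 0:
        return 0.0
    precisions: List[float] = []
    recalls: List[float] = []
    tp = fp = 0
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Precision envelope: each point takes the best precision at this or any later rank.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the stepwise curve: precision times each recall increment.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap: Sequence[float]) -> float:
    """mAP: unweighted mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap) if per_class_ap else 0.0


if __name__ == "__main__":
    # Five ranked detections for a class with four ground-truth boxes.
    print(average_precision([True, False, True, True, False], num_gt=4))  # 0.625
```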

Analysis of Model Parameters and Computational Overheads

YOLOv10 significantly reduces model parameters while improving efficiency. For example, YOLOv10-S requires 21.6 billion FLOPs (21.6 GFLOPs) and runs with a latency of just 2.49 ms, as reported by its authors on an NVIDIA T4 GPU with TensorRT FP16. This is a clear leap over its predecessors, and the lower computational cost makes YOLOv10 well suited to real-time applications.
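Latency and FPS figures are related by simple arithmetic. The helpers below are a back-of-the-envelope sketch assuming one image processed at a time with no pipelining; real systems add decoding, resizing, and data transfer, so measured end-to-end FPS is usually lower than this bound.

```python
def latency_ms_to_fps(latency_ms: float) -> float:
    """Upper-bound throughput implied by a per-image latency (batch size 1, no overlap)."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return 1000.0 / latency_ms


def throughput_gain(latency_reduction: float) -> float:
    """Cutting latency by a fraction r multiplies throughput by 1 / (1 - r)."""
    if not 0 <= latency_reduction < 1:
        raise ValueError("latency_reduction must be in [0, 1)")
    return 1.0 / (1.0 - latency_reduction)


if __name__ == "__main__":
    # YOLOv10-S model latency of 2.49 ms -> roughly 401.6 images per second.
    print(round(latency_ms_to_fps(2.49), 1))  # 401.6
    # A 70% latency cut (the YOLOv10-N figure from the introduction) -> about 3.3x throughput.
    print(round(throughput_gain(0.70), 2))  # 3.33
```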

YOLOv10 strikes a balance between precision and speed, enabling faster decisions without sacrificing accuracy, which is critical in environments that need rapid data processing.

Compared with RT-DETR-R101, which reaches 54.3 mAP at higher latency and FLOPs, YOLOv10-X achieves a slightly higher 54.4 mAP at lower computational cost. Its parameter optimization and leaner model profile are key, and these advantages are vital in scenarios like autonomous driving and healthcare, where quick and reliable object detection is essential.

Understanding the Impact of Dataset Variety on Model Performance

Datasets pose different challenges depending on their complexity and variety. YOLOv10 is designed to handle varied datasets efficiently, and its accuracy scales predictably with model size, from YOLOv10-S to YOLOv10-X. YOLOv10-X, the largest and most computationally demanding variant, achieves 54.4 mAP on the COCO benchmark.

YOLOv10's robustness shows in its performance across different environments, from complex urban scenes to unpredictable natural settings. This reliability comes from its architectural innovations, including spatial-channel decoupled downsampling, and from its end-to-end training and detection framework.
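Spatial-channel decoupled downsampling is one of the efficiency-oriented design choices described in the YOLOv10 paper: instead of a single 3x3 stride-2 convolution that both halves the resolution and changes the channel count, it uses a pointwise (1x1) convolution to change channels followed by a 3x3 stride-2 depthwise convolution to downsample. The sketch below counts parameters and multiply-accumulate operations (MACs) for both options; the C to 2C channel doubling, even input sizes, and the omission of bias and normalization terms are simplifying assumptions.

```python
from typing import Tuple


def standard_downsample_cost(h: int, w: int, c: int) -> Tuple[int, int]:
    """3x3 stride-2 conv, C -> 2C channels. Returns (parameters, MACs); bias/BN ignored."""
    params = 3 * 3 * c * (2 * c)
    macs = (h // 2) * (w // 2) * params  # every weight is used once per output position
    return params, macs


def decoupled_downsample_cost(h: int, w: int, c: int) -> Tuple[int, int]:
    """1x1 pointwise conv C -> 2C at full resolution, then 3x3 stride-2 depthwise conv."""
    pw_params = c * (2 * c)
    dw_params = 3 * 3 * (2 * c)  # one 3x3 filter per channel
    macs = h * w * pw_params + (h // 2) * (w // 2) * dw_params
    return pw_params + dw_params, macs


if __name__ == "__main__":
    std = standard_downsample_cost(80, 80, 128)
    dec = decoupled_downsample_cost(80, 80, 128)
    print(std, dec)  # (294912, 471859200) (35072, 213401600)
    print(f"params: {dec[0] / std[0]:.1%}, MACs: {dec[1] / std[1]:.1%}")
```

For an 80 x 80 x 128 input (an arbitrary example), the decoupled version needs about 12% of the parameters and about 45% of the MACs. The compute saving is smaller than the parameter saving because the pointwise convolution runs at full resolution.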

So, the metric-based evaluation of YOLOv10 highlights its excellence in model parameters and computational efficiency. It also showcases its adaptability across diverse scenarios, making it a top choice for advanced object detection tasks.

Detecting Rare Objects: Model Performance on Imbalanced Datasets

Imbalanced datasets are a significant challenge in object detection, with rare object detection being a major hurdle. The YOLOv10 model, known for its efficiency in identifying common objects, faces unique challenges with rare objects. It requires advanced strategies to maintain high accuracy across different scenarios.

Understanding the dataset distribution and YOLOv10's performance is essential. In imbalanced datasets, common objects like 'person' or 'car' dominate, while rare objects like 'truck' have minimal representation. This imbalance poses a significant challenge.

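The table below reports metrics for a logistic regression classifier on an imbalanced dataset. These are not YOLOv10 results, but they illustrate a pitfall that applies equally to detectors such as YOLOv10: high overall accuracy can hide much weaker performance on the minority class (Class 1).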

| Metric | Value |
|---|---|
| Accuracy | 98.20% |
| Precision (Class 1) | 81.25% |
| Recall (Class 1) | 75.58% |
| F1-Score | 78.31% |
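The metrics in the table follow directly from a confusion matrix, as the sketch below shows. The original confusion matrix is not given, so the counts used here (TP = 65, FP = 15, FN = 21, TN = 1,899) are hypothetical, chosen only because they reproduce the table's rounded figures. A degenerate classifier that always predicts the majority class is included for contrast.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Overall accuracy plus precision, recall, and F1 for the minority class (class 1)."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / total, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical counts, chosen only because they reproduce the table's rounded figures.
    clf = binary_metrics(tp=65, fp=15, fn=21, tn=1899)
    # Degenerate baseline: always predicts the majority class (class 0).
    baseline = binary_metrics(tp=0, fp=0, fn=86, tn=1914)
    print({k: round(v, 4) for k, v in clf.items()})
    print({k: round(v, 4) for k, v in baseline.items()})
```

With these hypothetical counts, the do-nothing baseline still reaches 95.7% accuracy while finding none of the minority-class examples, which is why precision, recall, and F1 on the rare class are the numbers to watch.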

The gap between 98.20% overall accuracy and 75.58% minority-class recall shows why YOLOv10 needs explicit handling of imbalanced data. Techniques such as class-aware sampling, repeat factor sampling, and loss weighting rebalance what the model sees, or how much each error costs, during training, and can improve YOLOv10's performance on rare classes.
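Repeat factor sampling, introduced with the LVIS long-tail dataset, oversamples images that contain rare categories. Each category c gets a factor r_c = max(1, sqrt(t / f_c)), where f_c is the fraction of training images containing c and t is a threshold hyperparameter; each image is repeated according to the largest factor among its categories, with the fractional part rounded stochastically every epoch. The sketch below is a minimal, framework-independent illustration (Detectron2 ships a comparable `RepeatFactorTrainingSampler`); whether a given YOLOv10 training pipeline supports it out of the box is not assumed. The toy labels and the large threshold t = 1.0 are chosen only to make the effect visible on four images.

```python
import math
import random
from collections import Counter
from typing import List, Sequence, Set


def repeat_factors(image_labels: Sequence[Set[str]], thresh: float) -> List[float]:
    """Per-image repeat factors: r_i = max over c in image i of max(1, sqrt(thresh / f_c))."""
    n = len(image_labels)
    # f_c: fraction of images containing category c (each image counted once per category).
    image_counts = Counter(c for labels in image_labels for c in set(labels))
    cat_factor = {c: max(1.0, math.sqrt(thresh / (k / n))) for c, k in image_counts.items()}
    # Images with no labels keep a factor of 1.0.
    return [max((cat_factor[c] for c in labels), default=1.0) for labels in image_labels]


def epoch_indices(factors: Sequence[float], rng: random.Random) -> List[int]:
    """Expand image indices for one epoch, rounding fractional factors stochastically."""
    indices: List[int] = []
    for i, r in enumerate(factors):
        whole = math.floor(r)
        extra = 1 if rng.random() < r - whole else 0
        indices.extend([i] * (whole + extra))
    return indices


if __name__ == "__main__":
    images = [{"person", "car"}, {"person"}, {"car"}, {"person", "truck"}]
    factors = repeat_factors(images, thresh=1.0)
    print([round(f, 3) for f in factors])  # [1.414, 1.155, 1.414, 2.0]
    print(epoch_indices(factors, random.Random(0)))
```

The image containing the rare "truck" label is always sampled twice per epoch, while images with only common labels are repeated at most occasionally.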

In practice, undersampling (discarding majority-class examples) often hurts performance because it throws away useful data, while oversampling and data augmentation can improve YOLOv10's rare-object detection. These methods emphasize the minority classes so that rare objects are well represented during training, which is essential for robust model performance.

In conclusion, optimizing YOLOv10 for rare object detection in imbalanced datasets requires careful adjustments. These include balancing training samples, refining loss calculations, and improving input data representation. Such tailored strategies ensure YOLOv10 excels in both common and rare object detection, critical in specialized fields.
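Loss weighting is the complementary approach: rather than showing rare examples more often, it makes mistakes on them cost more. A common heuristic weights each class by the inverse of its frequency, optionally softened with an exponent. The sketch below computes such weights and applies them to a per-class binary cross-entropy; the exponent, the mean-one normalization, and the toy counts are assumptions for illustration, not settings from YOLOv10, and wiring the weights into a real detector's classification loss depends on the training framework.

```python
import math
from typing import List, Sequence


def inverse_frequency_weights(class_counts: Sequence[int], power: float = 1.0) -> List[float]:
    """Weight class k by (total / count_k) ** power, then rescale so the weights average 1."""
    total = sum(class_counts)
    raw = [(total / n) ** power for n in class_counts]
    mean = sum(raw) / len(raw)
    return [w / mean for w in raw]


def weighted_bce(probs: Sequence[float], targets: Sequence[int], weights: Sequence[float]) -> float:
    """Sum over classes of weight_k * binary cross-entropy(prob_k, target_k)."""
    eps = 1e-12  # keeps log() away from 0
    loss = 0.0
    for p, y, w in zip(probs, targets, weights):
        p = min(max(p, eps), 1.0 - eps)
        loss += -w * (y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return loss


if __name__ == "__main__":
    # Hypothetical counts for three classes: common, uncommon, rare.
    weights = inverse_frequency_weights([900, 90, 10])
    print([round(w, 3) for w in weights])
    # The same under-confident prediction costs far more on the rare class.
    print(weighted_bce([0.2, 0.0, 0.0], [1, 0, 0], weights))
    print(weighted_bce([0.0, 0.0, 0.2], [0, 0, 1], weights))
```

Setting `power` below 1 (for example 0.5) is a gentler variant that avoids extreme weights for ultra-rare classes.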

Summary

YOLOv10 stands at the forefront of object detection and marks a significant step forward in model efficiency, with strong performance in real-world scenarios. It builds on a long line of YOLO models: YOLOv3's success on the COCO dataset, for instance, paved the way for YOLOv10's advancements, and YOLO's use in traffic management systems demonstrates its reliability even in difficult conditions.

Comparative resources such as Detectron2's Model Zoo and published EfficientDet-D7 results highlight ongoing efforts toward better precision and efficiency, underscoring the relentless pursuit of excellence in object detection.

Published surveys reveal the ongoing challenges in object detection: localization, classification, recognition, and accurate view estimation. While some solutions address specific issues, YOLOv10 integrates historical knowledge with modern technology. It tackles these challenges head-on, significantly improving upon previous models.

Your exploration of AI solutions has brought you to a critical juncture. Now, you can see YOLOv10's suitability for your needs. By embracing YOLOv10 object detection models, you're preparing for a future where object detection seamlessly fits into various industries and applications.

FAQ

How does YOLOv10 compare to SSD in object detection?

YOLOv10 outperforms SSD in both precision and speed, making it the better choice for real-time object detection. It achieves this by eliminating non-maximum suppression (NMS), which, along with its architectural enhancements, reduces latency and improves computational efficiency.

What are the advantages of YOLOv10 over Faster R-CNN?

YOLOv10 excels in real-time detection, combining high accuracy with low latency. It's perfect for scenarios requiring immediate analysis. In contrast, Faster R-CNN might be more suitable for tasks needing extreme precision, even if it's slower.

Is YOLOv10 more efficient than RetinaNet?

Yes, YOLOv10 offers better efficiency and accuracy than RetinaNet. Its optimized architecture and NMS-free inference process reduce latency and save computational resources.

What breakthroughs does YOLOv10 introduce in object detection?

YOLOv10 introduces NMS-free training based on consistent dual assignments, which speeds up real-time detection while maintaining high accuracy. It also features an enhanced CSPNet backbone and a PAN neck for stronger feature extraction and multi-scale fusion.

Can YOLOv10 detect rare objects effectively in imbalanced datasets?

YOLOv10 is designed to maintain consistent accuracy across diverse datasets, including rare objects. Yet, its performance on rare objects can be affected by dataset imbalances. Continuous refinement in training processes is essential to enhance its detection of rare objects.

What impact does the evolution of the YOLO series have on object detection models?

The evolution of the YOLO series, culminating in YOLOv10, has significantly advanced object detection models. It addresses key challenges like NMS reliance and architectural inefficiencies. This evolution has led to more efficient and accurate models for real-time object detection.

How significant are the accuracy and speed of YOLOv10 in practical applications?

The accuracy and speed of YOLOv10 are critical for practical applications. They directly impact the ability to analyze and respond in real-time. YOLOv10's enhanced features enable precise object detection with lower latency, essential for applications like autonomous driving, surveillance, and real-time analytics.

What model parameters and computational overheads does YOLOv10 reduce?

YOLOv10 reduces model parameters and computational overhead by optimizing components and streamlining gradient flow. This minimizes redundancy, resulting in a more efficient model that offers fast and accurate detection at a lower computational cost.

How does dataset variety affect the performance of YOLOv10?

Dataset variety significantly impacts YOLOv10's performance. Models must be robust to accurately detect objects across different contexts. YOLOv10 has shown resilience by maintaining accuracy across diverse datasets, including those with imbalances or a wide variety of object categories.
