Core Algorithms and Techniques in YOLOv10

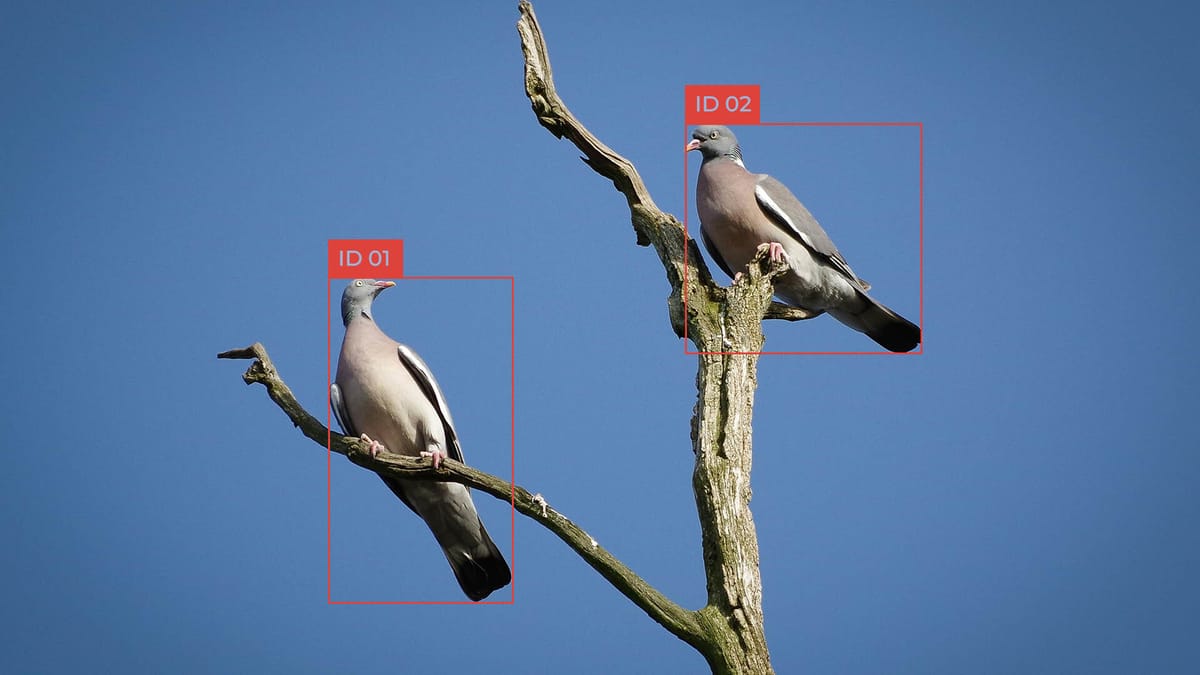

YOLOv10 is a major step forward in real-time object detection. It builds on its predecessors with training and architectural changes that raise accuracy while cutting inference cost.

YOLOv10 is trained with consistent dual assignments, which lets it run without non-maximum suppression (NMS) at inference. These changes deliver strong accuracy while minimizing computational overhead. The model comes in six sizes (N/S/M/B/L/X) to match the computational budgets of different scenarios.

Exploring YOLOv10's core algorithms reveals its prowess in real-time object detection. This model exemplifies the evolution of deep learning in computer vision. It delivers enhanced accuracy and efficiency, making it ideal for edge deployment.

Key Takeaways

- YOLOv10 is the latest in the YOLO family for real-time object detection

- Introduces NMS-free training and consistent dual assignments

- Achieves state-of-the-art performance with reduced computational needs

- Available in six sizes to suit various application scenarios

- Enhances deep learning capabilities for improved object detection

- Pushes boundaries in performance efficiency and accuracy

Introduction to YOLOv10 and Its Evolution

The YOLO family has transformed real-time object detection since its beginning. YOLOv10, the latest addition, builds on its predecessors for better performance and efficiency. This groundbreaking model brings significant advancements, expanding real-time object detection capabilities.

The YOLO Family: A Brief History

The YOLO journey started with a straightforward yet impactful concept: viewing object detection as a single regression task. Each version has introduced enhancements, with YOLOv9 adding new techniques like Programmable Gradient Information and Generalized Efficient Layer Aggregation Network just before YOLOv10's launch.

YOLOv10's Position in Real-Time Object Detection

YOLOv10 excels in real-time object detection by striking a balance between efficiency and precision. It surpasses its predecessors and rivals. For example, YOLOv10-L outperforms Gold-YOLO-L with 32% lower latency, a 1.4% AP improvement, and 68% fewer parameters.

Key Advancements in YOLOv10

YOLOv10 boasts groundbreaking features that distinguish it:

- NMS-free training strategy

- Consistent matching metric

- Lightweight classification head

- Spatial channel decoupled downsampling

- Rank-guided block design

- Large kernel convolutions

- Partial self-attention (PSA)

Compared with the corresponding YOLOv8 models, these innovations yield 28% to 57% fewer parameters and 23% to 38% fewer calculations, along with AP gains of 0.3% to 1.4% depending on model size. This makes YOLOv10 well suited to real-time applications in autonomous vehicles, surveillance systems, healthcare, retail, and robotics.

Understanding the YOLOv10 Architecture

The YOLOv10 architecture represents a major advancement in real-time object detection. Introduced in May 2024, it brings forth significant improvements in convolutional neural networks and feature extraction methods. The YOLOv10 architecture is structured around three primary elements: a backbone for extracting features, a neck for fusing features, and a head for detecting objects.

At its essence, YOLOv10 employs a CSPNet backbone, refined with multiple spatial pyramid pooling blocks. This setup amplifies the model's capacity to handle and process visual data across diverse scales. The neck incorporates a Path Aggregation Network module, along with additional up-sampling layers, to enhance feature fusion and information dissemination across the network.

A key efficiency measure in YOLOv10 is its lightweight classification head, which removes computational redundancy from the detection head. The model also introduces spatial-channel decoupled downsampling, which streamlines feature extraction by reducing computational cost and parameter count.

| Component | Function | Innovation |
|---|---|---|
| Backbone | Feature Extraction | CSPNet with SPP blocks |
| Neck | Feature Fusion | PAN with up-sampling layers |
| Head | Object Detection | Lightweight classification |

YOLOv10's architecture features a rank-guided block design, ensuring efficiency by pinpointing and reducing redundancy. This method, combined with large-kernel convolution, boosts the model's ability to capture features without incurring excessive computational overhead. Consequently, YOLOv10 delivers a more efficient and effective system for object detection.

NMS-Free Training: A Game-Changing Approach

YOLOv10 introduces an NMS-free training approach that raises the bar for object detection efficiency. It targets a known limitation of traditional Non-Maximum Suppression (NMS): as a post-processing step, NMS adds latency to every inference.

Limitations of Traditional NMS

Traditional NMS methods have long been a bottleneck in real-time object detection. They require additional computational resources, hindering performance on edge devices. This limitation becomes particularly evident in applications requiring rapid processing, such as autonomous driving or real-time surveillance systems.
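To make the cost concrete, here is a minimal sketch of the classic greedy NMS step that conventional detectors run after every forward pass. The function names, the `(x1, y1, x2, y2)` box format, and the 0.5 threshold are illustrative assumptions, not code from any YOLO release.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Return the indices of the boxes kept by greedy NMS."""
    # Visit boxes from the highest score to the lowest.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap a higher-scoring kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two near-duplicate boxes on one object plus one box on a second object.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
kept = greedy_nms(boxes, scores)  # the duplicate at index 1 is suppressed
```

Each candidate is compared against every box already kept, so the work grows with the number of overlapping predictions. That is the overhead an NMS-free detector avoids.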

How NMS-Free Training Works in YOLOv10

YOLOv10's NMS-free training relies on a consistent dual assignments strategy. During training, a one-to-many head, which assigns several positive predictions to each object, is optimized jointly with a one-to-one head, which assigns exactly one. At inference, only the one-to-one head is used, so each object yields a single confident box and no NMS pass is needed to remove duplicates. This cuts inference time without compromising accuracy.
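With NMS out of the picture, post-processing can shrink to a confidence filter. The sketch below shows that idea under stated assumptions: `select_detections`, the 0.25 threshold, and the `max_det` cap are hypothetical illustration choices, not the exact routine of any YOLOv10 implementation.

```python
def select_detections(boxes, scores, score_threshold=0.25, max_det=300):
    """Keep confident predictions, highest score first, with no NMS step."""
    ranked = sorted(zip(scores, boxes), key=lambda pair: pair[0], reverse=True)
    confident = [(box, score) for score, box in ranked if score >= score_threshold]
    return confident[:max_det]


# Hypothetical head output: one confident box per object plus background noise.
boxes = [(10, 10, 50, 50), (100, 100, 140, 140), (200, 200, 220, 220)]
scores = [0.9, 0.7, 0.1]
detections = select_detections(boxes, scores)
```

There are no pairwise IoU comparisons here, so the cost is a sort and a filter regardless of how crowded the scene is.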

Benefits of NMS-Free Training for Edge Deployment

The NMS-free training approach offers significant advantages for edge computing applications:

- Reduced computational overhead

- Faster inference speeds

- Improved real-time performance

- Enhanced object detection efficiency in resource-constrained environments

By eliminating the need for post-processing NMS, YOLOv10 achieves remarkable speed improvements while maintaining high accuracy. This breakthrough makes it an ideal choice for edge deployment scenarios where computational resources are limited but real-time object detection is crucial.

"NMS-free training in YOLOv10 marks a significant leap forward in object detection technology, paving the way for more efficient and responsive edge computing applications."

Consistent Dual Assignments Strategy

YOLOv10 introduces a consistent dual assignments strategy that combines one-to-many and one-to-one label assignments. Alongside the usual one-to-many branch, the model adds a one-to-one head that mirrors its structure and optimization objectives.

This dual label assignment provides rich supervision during training and enables efficient, end-to-end inference. The consistent matching metric aligns the two branches: both rank candidate predictions in the same way, so the sample the one-to-one head picks is also the best sample of the one-to-many head. This harmonizes the supervision of the two heads and boosts model performance.
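The alignment can be sketched in a few lines. The YOLOv10 paper describes a matching metric of the form m = s · p^α · IoU^β, where p is the classification score, IoU is the overlap with the ground-truth box, and s indicates whether the anchor point lies inside the object. The α and β values, the candidate numbers, and the function names below are illustrative assumptions.

```python
def matching_metric(in_box, cls_score, iou, alpha, beta):
    """m = s * p**alpha * IoU**beta, with s = 1 when the anchor lies in the object."""
    s = 1.0 if in_box else 0.0
    return s * (cls_score ** alpha) * (iou ** beta)


def assign(candidates, alpha, beta, top_k):
    """Return indices of the top_k candidates for one object, best first."""
    scored = [
        (matching_metric(c["in_box"], c["p"], c["iou"], alpha, beta), i)
        for i, c in enumerate(candidates)
    ]
    scored.sort(reverse=True)
    return [i for m, i in scored[:top_k] if m > 0]


# Hypothetical predictions competing for a single ground-truth object.
candidates = [
    {"in_box": True, "p": 0.9, "iou": 0.8},
    {"in_box": True, "p": 0.6, "iou": 0.9},
    {"in_box": True, "p": 0.7, "iou": 0.5},
    {"in_box": False, "p": 0.95, "iou": 0.1},
]

ALPHA, BETA = 0.5, 6.0  # assumed exponents, shared by both branches
one_to_many = assign(candidates, ALPHA, BETA, top_k=2)  # several positives
one_to_one = assign(candidates, ALPHA, BETA, top_k=1)   # exactly one positive
```

Because both branches use the same exponents, the single one-to-one positive is always the top-ranked one-to-many positive. With different exponents the two heads could disagree on which prediction is best.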

The benefits of this strategy are substantial. YOLOv10-S is 1.8 times faster than RT-DETR-R18 with similar AP on COCO. It has 2.8 times fewer parameters and FLOPs. YOLOv10-B shows a 46% reduction in latency and 25% fewer parameters compared to YOLOv9-C, maintaining equivalent performance.

| Model | Speed Improvement | Parameter Reduction |
|---|---|---|
| YOLOv10-S vs RT-DETR-R18 | 1.8x faster | 2.8x fewer |
| YOLOv10-B vs YOLOv9-C | 46% less latency | 25% fewer |

This approach removes the need for NMS in post-processing, reducing computational overhead. The consistent dual assignments strategy improves both efficiency and performance, setting a new standard in real-time object detection.

Algorithms and Techniques in YOLOv10

YOLOv10 introduces groundbreaking techniques, enhancing efficiency and model design. Let's explore the core YOLOv10 methods that elevate it in real-time object detection.

Lightweight Classification Head

The lightweight classification head trims the cost of the detection head. It uses depthwise separable convolutions in place of standard convolutions, which sharply reduces computation, so YOLOv10 can process images quickly without compromising accuracy.
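A quick parameter count shows why depthwise separable convolutions are cheaper. This is a back-of-the-envelope sketch: the 256-channel width and the helper names are assumptions for illustration, not the layer sizes of any YOLOv10 variant.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weight count of a k x k convolution without bias."""
    return (c_in // groups) * c_out * k * k


def standard_conv_params(c_in, c_out, k=3):
    return conv_params(c_in, c_out, k)


def depthwise_separable_params(c_in, c_out, k=3):
    depthwise = conv_params(c_in, c_in, k, groups=c_in)  # one k x k filter per channel
    pointwise = conv_params(c_in, c_out, 1)              # 1 x 1 conv mixes channels
    return depthwise + pointwise


channels = 256  # assumed width
standard = standard_conv_params(channels, channels)
separable = depthwise_separable_params(channels, channels)
ratio = standard / separable
```

At this width a standard 3×3 layer needs 589,824 weights, while the depthwise separable version needs 67,840, roughly 8.7 times fewer.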

Spatial-Channel Decoupled Downsampling

YOLOv10's spatial-channel decoupled downsampling separates the two jobs that a strided convolution normally does at once. A pointwise (1×1) convolution first adjusts the channel count, and a depthwise convolution then reduces the spatial resolution. Splitting the steps lowers computation and parameter count while preserving detail during downsampling, which helps YOLOv10 outperform its predecessors in processing speed.
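The saving can be estimated by counting weights and multiply-accumulate operations (MACs) for one downsampling step. The sketch assumes a step that halves the resolution and doubles the channels. The 80×80×128 input and the function names are hypothetical.

```python
def coupled_downsample(h, w, c):
    """One 3x3 stride-2 convolution mapping c channels to 2c channels."""
    params = 9 * c * (2 * c)
    macs = (h // 2) * (w // 2) * (2 * c) * 9 * c
    return params, macs


def decoupled_downsample(h, w, c):
    """1x1 conv (c -> 2c) at full resolution, then a 3x3 stride-2 depthwise conv."""
    pw_params = c * (2 * c)
    pw_macs = h * w * c * (2 * c)
    dw_params = 9 * (2 * c)                       # one 3x3 filter per channel
    dw_macs = (h // 2) * (w // 2) * (2 * c) * 9
    return pw_params + dw_params, pw_macs + dw_macs


h, w, c = 80, 80, 128  # assumed feature-map size
coupled_params, coupled_macs = coupled_downsample(h, w, c)
decoupled_params, decoupled_macs = decoupled_downsample(h, w, c)
```

For this input the weight count drops from 294,912 to 35,072, and the MACs drop from about 472 million to about 213 million.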

Rank-Guided Block Design

The rank-guided block design is central to YOLOv10's efficiency optimization. It uses the intrinsic rank of each stage to identify where the network is redundant, then swaps the blocks in those stages for a more compact design, so that capacity is spent only where it improves accuracy.
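The allocation can be pictured as a greedy loop: visit stages from the most redundant (lowest rank) upward, try a compact block in each, and stop at the first accuracy drop. The sketch below follows that idea. The stage names, rank values, AP numbers, and the `evaluate` callback are all hypothetical stand-ins for real training and validation runs.

```python
def rank_guided_allocation(stage_ranks, evaluate, baseline_ap):
    """Greedily pick low-rank stages to replace with a compact block.

    stage_ranks: stage name -> rank estimate (lower means more redundant).
    evaluate: callable taking a frozenset of replaced stages, returning AP.
    """
    replaced = []
    for stage in sorted(stage_ranks, key=stage_ranks.get):
        trial = replaced + [stage]
        if evaluate(frozenset(trial)) < baseline_ap:
            break  # accuracy dropped, so keep the previous allocation
        replaced = trial
    return replaced


# Hypothetical rank estimates and a fake evaluator standing in for validation.
stage_ranks = {"stage1": 0.9, "stage2": 0.7, "stage3": 0.4, "stage4": 0.2}
ap_penalty = {"stage1": 0.5, "stage2": 0.3, "stage3": 0.0, "stage4": 0.0}


def fake_evaluate(replaced_stages):
    return 46.0 - sum(ap_penalty[s] for s in replaced_stages)


compact_stages = rank_guided_allocation(stage_ranks, fake_evaluate, baseline_ap=46.0)
```

In this toy setup the two deepest stages take the compact block, and the search halts at `stage2` because replacing it would cost accuracy.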

Large-Kernel Convolution Implementation

YOLOv10 selectively employs large-kernel convolutions in deeper network stages. This strategic implementation boosts the model's ability to detect complex features while maintaining inference efficiency. It exemplifies YOLOv10's balanced approach to model architecture.

| Technique | Purpose | Impact |
|---|---|---|
| Lightweight Classification Head | Reduce computational demand | Faster inference times |
| Spatial-Channel Decoupled Downsampling | Efficient image processing | Quicker image analysis |
| Rank-Guided Block Design | Optimize network structure | Improved overall performance |
| Large-Kernel Convolution | Enhance feature capture | Better detection of complex objects |

Together, these techniques produce a model that excels in speed, accuracy, and efficiency. The outcome is a robust tool for real-time object detection that surpasses its predecessors in both speed and accuracy.

Efficiency-Driven Model Design in YOLOv10

YOLOv10 is a leader in real-time detection, focusing on model efficiency and computational optimization. This version offers notable improvements in performance while cutting down on resource use.

The YOLOv10 series ranges from YOLOv10-N to YOLOv10-X, with each model tailored to a different compute budget. These models strike a balance between speed and precision. The table below lists five of the six variants; YOLOv10-B is not shown.

| Model | Parameters (M) | FLOPs (G) | mAP val 50-95 (%) | Latency (ms) |
|---|---|---|---|---|
| YOLOv10-N | 2.3 | 6.7 | 39.5 | 1.84 |
| YOLOv10-S | 7.2 | 21.6 | 46.8 | 2.49 |
| YOLOv10-M | 15.4 | 59.1 | 51.3 | 4.74 |
| YOLOv10-L | 24.4 | 120.3 | 53.4 | 7.28 |
| YOLOv10-X | 29.5 | 160.4 | 54.4 | 10.70 |

YOLOv10's design focuses on efficiency without sacrificing accuracy. For example, YOLOv10-S is 1.8 times faster than RT-DETR-R18 with similar accuracy on COCO. It uses 2.8 times fewer parameters and FLOPs. This efficiency makes YOLOv10 perfect for real-time detection in environments with limited resources.

The model's architecture includes lightweight classification heads, spatial-channel decoupled downsampling, and rank-guided block designs. These features enhance YOLOv10's parameter efficiency. It outperforms previous models in computation-accuracy trade-offs across various scales.

YOLOv10's design shows that powerful object detection doesn't always need more computational resources. By balancing speed and precision, YOLOv10 sets a new benchmark for real-time detection in various applications.
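One practical way to use the benchmark table in this section is to pick the most accurate variant that fits a latency budget. The helper below is a minimal sketch using the table's numbers. `pick_variant` is a hypothetical name, and the reported latencies should be treated as indicative, since real figures depend on the target hardware.

```python
# (parameters in M, FLOPs in G, mAP in %, latency in ms), copied from the table.
YOLOV10_VARIANTS = {
    "YOLOv10-N": (2.3, 6.7, 39.5, 1.84),
    "YOLOv10-S": (7.2, 21.6, 46.8, 2.49),
    "YOLOv10-M": (15.4, 59.1, 51.3, 4.74),
    "YOLOv10-L": (24.4, 120.3, 53.4, 7.28),
    "YOLOv10-X": (29.5, 160.4, 54.4, 10.70),
}


def pick_variant(max_latency_ms):
    """Return the highest-mAP variant within the latency budget, or None."""
    fits = {
        name: stats
        for name, stats in YOLOV10_VARIANTS.items()
        if stats[3] <= max_latency_ms
    }
    if not fits:
        return None
    return max(fits, key=lambda name: fits[name][2])


choice = pick_variant(5.0)  # selects "YOLOv10-M" with the table's numbers
```

A 5 ms budget selects YOLOv10-M, while a budget below 1.84 ms returns `None` because even the smallest listed variant exceeds it.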

Accuracy-Driven Model Enhancements

YOLOv10 introduces major advancements in object detection, significantly enhancing accuracy while preserving swift processing speeds. Its design is centered on refining feature extraction and integrating sophisticated attention mechanisms.

Partial Self-Attention Module

The Partial Self-Attention (PSA) module stands out in YOLOv10, leveraging a self-attention mechanism to improve global representation efficiently. This module selectively focuses on object relationships within images, enhancing the model's ability to capture complex interactions.
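The "partial" idea can be shown with a toy example: split each token's channels in two, run self-attention on one half only, and pass the other half through untouched. This is a deliberately simplified sketch. It assumes identity query/key/value projections and a single head, and it omits the convolutions and feed-forward layer that a real PSA block would include.

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product attention with identity projections."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        logits = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(logits)
        out.append([sum(wt * v[j] for wt, v in zip(weights, tokens)) for j in range(d)])
    return out


def partial_self_attention(tokens):
    """Attend over the second half of the channels; pass the first half through."""
    half = len(tokens[0]) // 2
    attended = self_attention([t[half:] for t in tokens])
    # Residual connection on the attended half, then concatenate both halves.
    return [
        t[:half] + [x + a for x, a in zip(t[half:], att)]
        for t, att in zip(tokens, attended)
    ]


# Three hypothetical tokens (spatial positions) with four channels each.
tokens = [[1.0, 0.0, 0.5, 0.2], [0.0, 1.0, 0.1, 0.9], [0.5, 0.5, 0.3, 0.3]]
mixed = partial_self_attention(tokens)
```

Only half the channels enter the attention computation, which is where the efficiency comes from, while the attended half still gains global context from every position.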

Optimized Feature Extraction Techniques

YOLOv10's performance is bolstered by cutting-edge feature extraction methods. It employs spatial-channel decoupled downsampling, a technique that minimizes computational overhead while retaining critical image details. This method enables YOLOv10 to process images rapidly without compromising on detection precision.

The model's efficiency is clearly reflected in its performance metrics:

- YOLOv10-S gains 1.8% Average Precision (AP) from these accuracy-driven changes, with only a 0.18 ms latency increase

- YOLOv10-M achieves 51.3% AP at a latency of 4.74ms

- YOLOv10-X leads with 54.4% AP, showcasing unparalleled accuracy

These advancements highlight YOLOv10's capacity to harmonize speed with precision, making it ideal for real-time applications requiring high detection accuracy. The synergy between enhanced feature extraction and attention mechanisms propels YOLOv10 to excel in object detection tasks.

Performance Benchmarks and Comparisons

Benchmark results show how far YOLOv10 moves the balance between speed and accuracy. This section explores how YOLOv10 compares to its predecessors and other leading models.

YOLOv10 vs. Previous YOLO Versions

YOLOv10 surpasses earlier YOLO versions in several respects. It achieves higher average precision (AP) than YOLOv8 while using fewer parameters and running at lower latency. Across the S, M, L, and X sizes, YOLOv10 models show AP gains of 0.3% to 1.4%, with 36% to 57% fewer parameters and 37% to 65% lower latency.

| Model | AP Improvement | Parameter Reduction | Latency Reduction |
|---|---|---|---|
| YOLOv10-S | 1.4% | 36% | 65% |
| YOLOv10-M | 0.5% | 41% | 50% |
| YOLOv10-L | 0.3% | 44% | 41% |
| YOLOv10-X | 0.5% | 57% | 37% |

Comparison with State-of-the-Art Models

YOLOv10 distinguishes itself from other leading models. YOLOv10-S is notably faster than RT-DETR-R18 on COCO, achieving similar AP with fewer parameters and FLOPs. YOLOv10-B exhibits a 46% latency reduction and 25% fewer parameters than YOLOv9-C, while maintaining performance.

Analysis of Speed-Accuracy Trade-offs

The speed-accuracy trade-offs in YOLOv10 are striking. Its NMS-free training approach contributes to competitive performance with low inference latency. Large-kernel convolutions and partial self-attention modules enhance global representation learning, improving object detection accuracy without compromising speed.

YOLOv10's design choices, including consistent dual assignments and a one-to-one head trained alongside the original one-to-many head, contribute to its strong balance of computational efficiency and detection accuracy. These advancements position YOLOv10 as a leading choice for real-time object detection across diverse applications.

Applications and Use Cases for YOLOv10

YOLOv10, the latest in the YOLO series, introduces substantial advancements in real-time object detection. Its enhanced efficiency and performance make it ideal for a broad spectrum of computer vision tasks. The model's capability to run on edge devices unlocks new possibilities for real-time object detection applications across various industries.

In autonomous vehicles, YOLOv10 stands out for its exceptional obstacle detection, improving safety and navigation. The healthcare sector benefits from its swift analysis of medical images, while retail employs it for inventory management and tracking customer behavior. Surveillance systems see enhanced accuracy in identifying potential security threats. Robotics applications utilize YOLOv10's speed for more effective interaction with their environment.

YOLOv10's variants (N, S, M, B, L, X) cater to diverse needs, offering improvements in Average Precision while using fewer parameters. For example, YOLOv10-S shows a 1.4% AP improvement with 36% fewer parameters and 65% lower latency than YOLOv8-S. This versatility makes YOLOv10 well suited to edge computing scenarios, where resources are scarce but real-time performance is essential.

The model's efficiency-driven design, featuring a lightweight classification head and rank-guided block design, addresses previous inefficiencies. This strategy not only boosts performance but also broadens YOLOv10's applicability. It's now suitable for a wide range of applications, from mobile devices to industrial systems, cementing its role as a versatile tool in computer vision.

FAQ

What is YOLOv10?

YOLOv10 is the latest in the YOLO family, known for its real-time object detection capabilities. It introduces several groundbreaking techniques. These include NMS-free training, consistent dual assignments, and holistic model design. This results in state-of-the-art performance with reduced computational overhead.

How does YOLOv10 differ from previous YOLO versions?

YOLOv10 builds on the success of YOLOv5 and YOLOv8, offering significant improvements in accuracy and efficiency. It features NMS-free training, spatial-channel decoupled downsampling, and large-kernel convolutions. These advancements make it ideal for edge deployment scenarios.

What is NMS-free training, and how does it benefit YOLOv10?

Traditional NMS post-processing adds latency to inference. YOLOv10 removes it through NMS-free training: a one-to-many head and a one-to-one head are trained together, and only the one-to-one head is used at inference. Each object then yields a single confident prediction, so no NMS pass is needed to remove duplicates. This reduces inference time without sacrificing accuracy, which is especially valuable for edge deployment.

What is the consistent dual assignments strategy in YOLOv10?

YOLOv10 uses a consistent dual assignments strategy, combining one-to-many and one-to-one label assignments. An additional one-to-one head is added to the architecture, identical in structure and optimization to the one-to-many branch. This allows the model to benefit from rich supervision during training while enabling efficient, end-to-end inference.

What are some of the key algorithms and techniques implemented in YOLOv10?

YOLOv10 incorporates several innovative algorithms and techniques. These include a lightweight classification head using depthwise separable convolutions, spatial-channel decoupled downsampling, rank-guided block design, and selective implementation of large-kernel convolutions. These enhancements boost model capability without affecting inference efficiency.

How does YOLOv10 balance computational efficiency and detection accuracy?

YOLOv10's efficiency-driven design reduces parameters and GFLOPs significantly. Accuracy-driven enhancements, such as the Partial Self-Attention (PSA) module and optimized feature extraction techniques, boost Average Precision (AP) with minimal latency increases. This balanced approach makes YOLOv10 suitable for real-time applications and resource-constrained environments.

How does YOLOv10 perform compared to other state-of-the-art models?

YOLOv10 outperforms previous YOLO versions and other models in speed-accuracy trade-offs. For instance, YOLOv10-S and X are faster than RT-DETR-R18 and R101, respectively, with similar performance. YOLOv10-B reduces latency by 46% compared to YOLOv9-C while maintaining performance. YOLOv10-L and X surpass YOLOv8-L and X with fewer parameters.

What are some potential applications and use cases for YOLOv10?

YOLOv10's improved performance and efficiency make it suitable for various real-time object detection applications. These include autonomous vehicles, surveillance systems, augmented reality, robotics, mobile applications, IoT implementations, and embedded systems. It excels in real-time detection with limited computational resources.
