Curating Alignment Test Sets: Evaluating Whether Models Follow Instructions

Alignment test sets are essential for verifying that models understand and execute tasks as intended. Well-designed instructions and evaluations are a cornerstone of reliable AI systems. As we explore why alignment test sets matter, we'll see how they improve both the instructions given to AI models and the evaluation of NLP models.

Key Takeaways

- Alignment test sets ensure AI models perform tasks as intended.

- These test sets play a critical role in writing instructions for AI models and in evaluating how well the models follow them.

- Careful test set curation leads to reliable AI systems.

- Advancements in AI metrics and models are closely tied to robust test sets.

Understanding Alignment Test Sets and Their Importance

Alignment test sets evaluate the performance of AI systems to ensure that they meet the goals and standards set by developers. This kind of testing is needed in industries ranging from automotive to healthcare, where model errors can have wide-reaching consequences.

Role in AI Model Evaluation

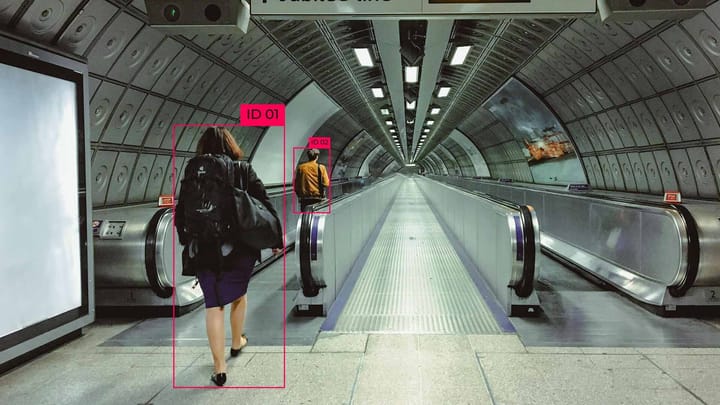

Tests help verify that models can understand and perform tasks correctly. An analogy from another field: modern wheel alignment machines use internal computers and high-resolution cameras to take precise measurements, and AI models must meet similarly exacting standards to be considered reliable and accurate.

Alignment tests also help improve the accuracy of AI systems. When developers apply strict testing protocols, they can be more confident that their models will work properly in real-world conditions. Industry leaders such as Keymakr demonstrate the kind of high-quality, accurate work that continuously improves machine learning methods for AI models.

Diversity of Instructions

A test set that covers a wide range of tasks can model many different real-world situations, which is necessary for judging how a model will behave across actual applications.

Diversity also helps identify weaknesses or biases in the model, because comparing its performance under different conditions reveals where it falls short.
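A minimal Python sketch of how diversity surfaces weaknesses: it breaks evaluation results down by instruction category instead of reporting one overall score. The record format (a `category` label and a boolean `passed` flag per item), the category names, and the 0.7 threshold are hypothetical choices for illustration.

```python
from collections import defaultdict

# Hypothetical evaluation records: each test item has an instruction
# category and whether the model's output passed the check.
results = [
    {"category": "summarization", "passed": True},
    {"category": "summarization", "passed": True},
    {"category": "classification", "passed": True},
    {"category": "classification", "passed": False},
    {"category": "multi-step", "passed": False},
    {"category": "multi-step", "passed": False},
]

def accuracy_by_category(records):
    """Return the pass rate for each instruction category."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for r in records:
        totals[r["category"]] += 1
        passes[r["category"]] += int(r["passed"])
    return {cat: passes[cat] / totals[cat] for cat in totals}

def weak_categories(records, threshold=0.7):
    """List categories whose pass rate falls below the (hypothetical) threshold."""
    scores = accuracy_by_category(records)
    return sorted(cat for cat, acc in scores.items() if acc < threshold)

overall = sum(r["passed"] for r in results) / len(results)
print(f"overall: {overall:.2f}")
for cat, acc in accuracy_by_category(results).items():
    print(f"{cat}: {acc:.2f}")
print("weak:", weak_categories(results))
```

With this toy data the overall pass rate is 0.50, which hides that the model passes every summarization item but fails every multi-step one; only the per-category view makes that gap visible.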

Clarity and Precision

Clear, precise instructions leave less room for misinterpretation. When an instruction can be read only one way, a failure reflects the model's ability rather than the wording of the prompt, which makes the test results more accurate.
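One cheap way to catch unclear drafts before they enter a test set is a keyword check for words that often signal a missing criterion. The Python sketch below assumes a hypothetical `VAGUE_TERMS` list; a keyword match is only a warning sign (it will also flag harmless phrases such as "as well as"), and a human still has to judge whether the instruction is clear.

```python
import re

# Hypothetical list of words that often signal an instruction
# without a checkable criterion.
VAGUE_TERMS = ["accurately", "properly", "appropriately", "well", "good", "nice"]

def vague_terms(instruction):
    """Return the vague terms found in an instruction (whole-word, case-insensitive)."""
    found = []
    for term in VAGUE_TERMS:
        if re.search(rf"\b{re.escape(term)}\b", instruction, flags=re.IGNORECASE):
            found.append(term)
    return found

drafts = [
    "Classify the images accurately.",
    "Classify images based on animal species.",
    "Write a good summary.",
    "Summarize the article in at most three sentences.",
]

for d in drafts:
    flagged = vague_terms(d)
    status = f"revise (vague: {', '.join(flagged)})" if flagged else "ok"
    print(f"{status}: {d}")
```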

Establishing Clear Objectives

To create effective test suites, it is essential to set clear goals for which specific aspects of the model need to be evaluated.

Knowing what results you want to measure lets you choose the type and complexity of the instructions, which makes the resulting scores more accurate.

- Identify key performance metrics to evaluate.

- Understand the instructional goals and outcomes.

- Define a structured approach for assessment.
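The three steps above can be sketched as a small data model in which every test item must declare the objective it serves, and each objective states its metric and how many items it needs. The `Objective` and `TestCase` classes, their fields, and the example checks are hypothetical, not a standard format.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Objective:
    name: str        # which aspect of the model is being evaluated
    metric: str      # how it will be scored (free-text label here)
    min_cases: int   # how many test items the objective needs

@dataclass
class TestCase:
    objective: str                 # name of the Objective this item serves
    instruction: str               # prompt given to the model
    check: Callable[[str], bool]   # True if the output satisfies the instruction

objectives = [
    Objective("format_compliance", "pass rate", min_cases=2),
    Objective("length_constraint", "pass rate", min_cases=2),
]

cases = [
    TestCase("format_compliance", "Answer with a single word.",
             lambda out: len(out.split()) == 1),
    TestCase("format_compliance", "Reply in all lowercase.",
             lambda out: out == out.lower()),
    TestCase("length_constraint", "Summarize in at most 20 words.",
             lambda out: len(out.split()) <= 20),
]

def coverage_gaps(objectives, cases):
    """Return objectives that have fewer test cases than planned."""
    counts = {o.name: 0 for o in objectives}
    for c in cases:
        if c.objective not in counts:
            raise ValueError(f"test case targets undefined objective {c.objective!r}")
        counts[c.objective] += 1
    return {o.name: counts[o.name] for o in objectives if counts[o.name] < o.min_cases}

print(coverage_gaps(objectives, cases))
```

Here the length objective is flagged because only one of its two planned items exists. Raising an error on an undefined objective keeps stray items from drifting into the suite without a stated goal.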

Incorporating Feedback Mechanisms

Effective feedback mechanisms are another critical practice. They support continuous monitoring and improvement of AI model performance: collecting data on model responses, analyzing it for patterns, and refining both the model and the test sets based on what the analysis shows.

This iterative process improves the accuracy and reliability of alignment evaluation.

- Collect and analyze model performance data.

- Identify error patterns and areas needing improvement.

- Iteratively refine test sets based on feedback.
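A minimal sketch of the analysis step in that loop, assuming each failure has already been tagged with an error type by an automated checker or a reviewer. The log format, the tags, and the fixed number of new items per pattern are hypothetical; in practice the follow-up is writing targeted test items, not just counting.

```python
from collections import Counter

# Hypothetical failure log: each entry records which test item failed
# and the error tag assigned to it.
failures = [
    {"item": "t01", "error": "ignored_length_limit"},
    {"item": "t07", "error": "wrong_format"},
    {"item": "t09", "error": "ignored_length_limit"},
    {"item": "t12", "error": "ignored_length_limit"},
    {"item": "t15", "error": "wrong_format"},
    {"item": "t20", "error": "hallucinated_fact"},
]

def error_patterns(log, top_n=2):
    """Count error tags and return the most frequent ones."""
    return Counter(entry["error"] for entry in log).most_common(top_n)

def refinement_plan(log, top_n=2, new_items_per_pattern=5):
    """Propose how many new test items to write for each frequent error pattern."""
    return {tag: new_items_per_pattern for tag, _ in error_patterns(log, top_n)}

print(error_patterns(failures))
print(refinement_plan(failures))
```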

Ambiguity in Instructions

When instructions are unclear or open to interpretation, AI models may misinterpret them and produce incorrect outputs.

For example, in machine learning, a test instruction like "Classify the images accurately" is ambiguous because it does not define the classification criteria. One model might prioritize color, while another might focus on object shapes. If the instructions were more specific, such as "Classify images based on animal species," models would have a clear guideline, leading to more consistent and reliable evaluations.
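The precise version of the instruction can be checked mechanically, because it fixes both the criterion and the set of valid answers. In the Python sketch below (the label set, item format, and `score` function are hypothetical), an answer based on color rather than species is reported as invalid instead of being silently counted as a wrong species.

```python
# Hypothetical label set that the precise instruction spells out.
SPECIES_LABELS = {"cat", "dog", "horse", "bird"}

precise_item = {
    "instruction": "Classify the image by animal species. "
                   "Answer with exactly one of: cat, dog, horse, bird.",
    "allowed": SPECIES_LABELS,
    "expected": "dog",
}

def score(item, model_output):
    """Return 'invalid' if the answer is outside the allowed labels,
    otherwise 'correct' or 'incorrect'."""
    answer = model_output.strip().lower()
    if answer not in item["allowed"]:
        return "invalid"
    return "correct" if answer == item["expected"] else "incorrect"

print(score(precise_item, "Dog"))    # correct
print(score(precise_item, "cat"))    # incorrect
print(score(precise_item, "brown"))  # invalid: answered by color, not species
```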

Bias in Dataset Composition

One of the main problems is bias in the dataset, which can significantly distort the results of an AI model. If the dataset is not diverse and representative, the model may make inaccurate predictions in the real world. To address such hidden biases, techniques such as latent adversarial training and mechanistic interpretability can help identify and eliminate underlying data issues.
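A first, simple check for composition bias is to audit how test items are distributed across an attribute that matters for the use case. The Python sketch below assumes a hypothetical `language` field and a 10% minimum share; it only catches visible imbalances and does not replace the techniques mentioned above for uncovering hidden biases.

```python
from collections import Counter

def representation_report(items, key, min_share):
    """Return each group's share of the test set and the groups below min_share.

    items: list of dicts describing test items
    key: the attribute to audit (e.g. language or domain)
    min_share: smallest acceptable fraction for any group (a project choice)
    """
    counts = Counter(item[key] for item in items)
    total = sum(counts.values())
    shares = {group: n / total for group, n in counts.items()}
    under = sorted(g for g, s in shares.items() if s < min_share)
    return shares, under

# Hypothetical test set skewed toward English prompts.
test_items = (
    [{"language": "en"}] * 16
    + [{"language": "es"}] * 3
    + [{"language": "uk"}] * 1
)

shares, under = representation_report(test_items, key="language", min_share=0.10)
print(shares)  # {'en': 0.8, 'es': 0.15, 'uk': 0.05}
print(under)   # ['uk']
```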

Balancing Automation and Human Insight

Finding the right balance between automation and manual review is essential to achieve the best results. Automated systems such as deep reinforcement learning (deep RL) can handle complex visual tasks, and incorporating human preference feedback significantly improves their effectiveness by introducing context and flexibility. A balanced approach that combines automated evaluation with additional safety measures is a sound strategy.

Automation is well suited to processing large datasets and repetitive tasks, but manual review is indispensable for detecting subtle errors and understanding context.
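One common way to combine the two is confidence-based routing: items the automated checker scores decisively are handled automatically, and the uncertain middle goes to human reviewers. The Python sketch below assumes each item carries a hypothetical `auto_score` in [0, 1]; the 0.3 and 0.8 thresholds are illustrative and would need tuning.

```python
def route(items, low=0.3, high=0.8):
    """Split automatically scored items into auto-accepted, auto-rejected,
    and a queue for human review.

    Scores between the two thresholds are treated as too uncertain
    to trust without a human look.
    """
    accepted, rejected, human_queue = [], [], []
    for item in items:
        s = item["auto_score"]
        if s >= high:
            accepted.append(item["id"])
        elif s <= low:
            rejected.append(item["id"])
        else:
            human_queue.append(item["id"])
    return accepted, rejected, human_queue

scored = [
    {"id": "a", "auto_score": 0.95},
    {"id": "b", "auto_score": 0.10},
    {"id": "c", "auto_score": 0.55},
    {"id": "d", "auto_score": 0.81},
    {"id": "e", "auto_score": 0.40},
]

accepted, rejected, human_queue = route(scored)
print(accepted)     # ['a', 'd']
print(rejected)     # ['b']
print(human_queue)  # ['c', 'e']
```

One possible refinement is to also send a small random sample of automatically accepted items to reviewers, to check that the thresholds can still be trusted.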

Qualitative Assessments

Qualitative evaluations of AI focus on aspects that are difficult to quantify. They include human review and contextual assessment.

- Tolerance to Errors: How well the system copes with data errors and imperfect records is hard to capture in a single number, which is why qualitative evaluation matters here.

- Empirical Evaluations: Testing algorithms under real-world conditions, not just on clean data, shows how well they adapt.

- Hybrid Approaches: Combining several methods to evaluate the same text highlights the value of using multiple qualitative metrics.
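Error tolerance can be partly probed automatically by perturbing instructions and checking whether the model's answer stays the same; human reviewers then judge the cases where it changes. This Python sketch uses adjacent-letter swaps as the noise model and a hypothetical `keyword_model` stand-in for a real model; both are assumptions for illustration.

```python
import random

def add_typos(text, rate, seed=0):
    """Return a copy of text where letters are swapped with their right
    neighbor with probability `rate` (a simple, assumed noise model)."""
    rng = random.Random(seed)
    chars = list(text)
    for i in range(len(chars) - 1):
        if chars[i].isalpha() and chars[i + 1].isalpha() and rng.random() < rate:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)

def consistency(model, instruction, n_variants=5, rate=0.1):
    """Fraction of noisy variants for which the model gives the same answer
    as it does for the clean instruction."""
    reference = model(instruction)
    same = sum(
        model(add_typos(instruction, rate, seed=k)) == reference
        for k in range(n_variants)
    )
    return same / n_variants

def keyword_model(instruction):
    # Hypothetical stand-in for a real model: it answers correctly only
    # if the exact word "capital" survives the noise.
    return "Paris" if "capital" in instruction else "I don't know"

prompt = "What is the capital of France?"
print(consistency(keyword_model, prompt, n_variants=20, rate=0.15))
```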

A balanced mix of quantitative and qualitative evaluation is key to thoroughly vetting alignment test sets. This dual strategy accounts for both numerical performance and contextual nuance, and both matter for reliable evaluation of AI models.
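A minimal sketch of that dual strategy: report an automated pass rate and a mean human rating side by side, and list the items where the two disagree. The record format, the 1-5 rubric, and treating a rating of 4 or more as "good" are hypothetical choices.

```python
from statistics import mean

# Hypothetical per-item results: an automated pass/fail check plus a
# human rating on a 1-5 rubric for the same output.
evaluations = [
    {"id": "q1", "auto_pass": True,  "human_rating": 5},
    {"id": "q2", "auto_pass": True,  "human_rating": 2},
    {"id": "q3", "auto_pass": False, "human_rating": 1},
    {"id": "q4", "auto_pass": False, "human_rating": 4},
    {"id": "q5", "auto_pass": True,  "human_rating": 4},
]

def combined_report(evals, good_rating=4):
    """Report both views side by side and list items where they disagree."""
    pass_rate = sum(e["auto_pass"] for e in evals) / len(evals)
    avg_rating = mean(e["human_rating"] for e in evals)
    disagreements = [
        e["id"] for e in evals
        if e["auto_pass"] != (e["human_rating"] >= good_rating)
    ]
    return {"pass_rate": pass_rate, "mean_rating": avg_rating,
            "disagreements": disagreements}

print(combined_report(evaluations))
```

With this data, q2 passes the automated check but a reviewer rates it poorly, and q4 is the reverse. Disagreements like these are worth a closer look, since either the model output or the automated check itself may be at fault.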

The Impact of Advancements in AI

Recent advances in AI are changing how we process and analyze data, especially in areas such as molecular biology. New methods for studying biological sequences provide more accurate results, allowing us to understand the structure of proteins better.

New approaches include step-by-step validations and combining different methods to improve the accuracy of the analysis. As biotechnology evolves rapidly, processing methods need to be updated.

Ethical Considerations for Alignment

The development of AI brings both benefits and ethical challenges. Ensuring the ethical use of AI in alignment testing is an important task. This includes combating bias, protecting user privacy, and maintaining transparency about how AI systems perform.

For example, improving multiple sequence alignment (MSA) methods requires careful handling of errors and biases in protein function predictions, which calls for an ethical framework governing AI use in these areas to ensure fairness and accuracy. Another example of the ethical use of AI is Reinforcement Learning from AI Feedback (RLAIF), which reduces dependence on medical experts and improves dialog models in healthcare.

Balancing technical progress with ethical principles is key to AI development that is both advanced and responsible, and it builds trust in these systems.

Conclusion: The Path Forward in Alignment Testing

Effective alignment testing is vital for AI progress. It depends on diverse, clear, and precise instructions and requires a balance between automation and human insight. Each of these elements is critical for ensuring that AI models accurately follow instructions.

FAQ

What are alignment test sets?

Alignment test sets are datasets designed to check if AI models can follow instructions accurately. They are essential for verifying that models perform tasks as intended.

Why are alignment test sets important in AI model evaluation?

Alignment test sets are vital in AI model evaluation. They help developers gauge a model's ability to understand and execute tasks. This ensures the reliability of AI systems.

What makes a practical alignment test set?

A practical alignment test set includes diverse instructions and clear commands. This makes AI evaluations robust and reliable, so the results accurately reflect the model's capabilities.

How should one design alignment test sets?

Designing alignment test sets requires setting clear objectives and determining what to assess. Incorporating feedback mechanisms is also key to continually enhancing AI model performance.

What are some common challenges in creating alignment test sets?

Challenges include ambiguity in instructions, which can confuse AI models. Biases in datasets can also affect the model's fairness and objectivity.

What role does human review play in alignment evaluation?

Human review is indispensable in alignment evaluation. It offers subjective analysis and adds context that automated processes might overlook. It ensures a balance between automation and human insight.

What technologies and tools are available for test set creation?

Developers can use AI-driven solutions and open-source frameworks to create and manage alignment test sets efficiently.

Are there any successful examples of alignment testing?

Yes, research institutions and industry leaders have showcased successful alignment testing. These case studies provide valuable insights into best practices and lessons learned.

What metrics are used to evaluate alignment test sets?

Both quantitative metrics and qualitative assessments are used to evaluate models on alignment test sets; together they give a thorough and fair picture of AI model performance.

What future advancements are expected in alignment test set development?

Future advancements may include new AI technologies that influence test set design. There will also be a focus on ethical considerations for responsible AI deployment.
