Curriculum Labeling: Structuring Data to Teach Models in Stages

This approach is changing the way AI models are trained. It offers structured methods to improve the accuracy of AI models.

Curriculum labeling is a semi-supervised learning method that structures data annotations to optimize AI training results. Unlike traditional pseudo-labeling, which keeps fine-tuning the same model, curriculum labeling reinitializes the model parameters before each self-training cycle. This reduces concept drift, improves model performance, and speeds up training.

Key Takeaways

- Curriculum labeling improves AI training with structured data annotations.

- Curriculum labeling reinitializes model parameters before each self-training cycle to avoid concept drift and improve accuracy.

- Introducing data in stages, from simple to complex, shortens training time and improves model convergence.

What is pseudo-labeling?

Pseudo-labeling is a semi-supervised learning technique in which a model trained on labeled data assigns labels to unlabeled data based on its own predictions.

Understanding the Basics of Pseudo-Labeling

- Training on labeled data. The AI model is first trained on a small dataset with the correct labels.

- Predicting labels. The AI model applies its knowledge to a new unlabeled dataset and predicts its labels.

- Appending to the training set. The predicted labels are added to the initial labeled dataset for further training.

- Iterating the process. The prediction and appending steps are repeated, and the AI model's predictions gradually improve.
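The four steps above can be sketched as a short loop. This is a minimal illustration, not the study's implementation. It makes three assumptions:

- It uses a toy nearest-centroid classifier over 2D points.
- It relabels every unlabeled point in each round, with no confidence filtering.
- The helper names `train_centroids`, `predict`, and `pseudo_label` are hypothetical.

```python
import math

def train_centroids(points, labels):
    """Fit a nearest-centroid classifier: the mean feature vector of each class."""
    sums, counts = {}, {}
    for x, y in zip(points, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}

def predict(centroids, x):
    """Return (label, confidence); confidence is a softmax over negative distances."""
    dists = {y: math.dist(x, c) for y, c in centroids.items()}
    weights = {y: math.exp(-d) for y, d in dists.items()}
    label = min(dists, key=dists.get)
    return label, weights[label] / sum(weights.values())

def pseudo_label(labeled_x, labeled_y, unlabeled_x, rounds=3):
    """Plain pseudo-labeling: every unlabeled point gets the current model's prediction."""
    train_x, train_y = list(labeled_x), list(labeled_y)
    for _ in range(rounds):
        model = train_centroids(train_x, train_y)           # train on the current labels
        preds = [predict(model, x)[0] for x in unlabeled_x]  # predict labels for unlabeled data
        train_x = list(labeled_x) + list(unlabeled_x)        # append the pseudo-labeled data
        train_y = list(labeled_y) + preds
    return train_centroids(train_x, train_y)                 # model after the last round

labeled_x = [(0.0, 0.0), (5.0, 5.0)]
labeled_y = ["a", "b"]
unlabeled_x = [(0.5, 0.2), (4.6, 5.1), (1.0, 0.8), (5.5, 4.2)]
model = pseudo_label(labeled_x, labeled_y, unlabeled_x)
print(predict(model, (0.3, 0.3))[0])  # → a
```

Every prediction is appended regardless of confidence, so an early mistake carries into the next round. A confidence score like the one `predict` returns is the kind of signal that threshold-based selection uses to decide which pseudo-labels to admit.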

A study by the University of Virginia has shown the benefits of pseudo-labeling across various datasets. Experiments on CIFAR-10 and SVHN have shown that pseudo-labels improve model accuracy, especially when combined with techniques like data augmentation.

The Structure of Curriculum Labeling

The data is divided into levels of complexity for gradual learning, from simple examples to more complex ones.

The basic level contains simple examples. The medium level contains more complex cases with some uncertainty. The high level contains atypical or complex data. Before each new training cycle, the model parameters are reinitialized rather than carried over. This prevents the model from memorizing its earlier mistakes and keeps the learning process stable.

At each self-training cycle, the model is trained on an updated data sample and adjusts its parameters accordingly. The effectiveness of the labeling is then checked on test samples.

Algorithmic Details and Percentile Thresholding

The algorithm uses a percentile-based thresholding method to include unlabeled data gradually. A percentile is a statistical measure: the p-th percentile of a set of values is the value below which p% of those values fall. In each cycle, only the unlabeled samples whose prediction confidence lies above the current percentile threshold receive pseudo-labels. The threshold is lowered from cycle to cycle, so more samples are admitted over time. This supports the diversity and complexity of data required for practical classification tasks. The algorithm ensures that each training cycle builds on the previous one, which avoids concept drift and keeps learning stable.
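A minimal sketch of percentile thresholding follows. It rests on these assumptions:

- Each unlabeled sample's difficulty is measured by the model's prediction confidence.
- The cut-off drops by 20 percentile points per cycle (80, 60, 40, 20, 0), so the training set admits the top 20%, 40%, and so on, up to 100% of the unlabeled data.
- The function names are hypothetical.
- `percentile` uses linear interpolation, the same default as NumPy's `np.percentile`.

```python
def percentile(values, q):
    """Linear-interpolation percentile for q in [0, 100]."""
    s = sorted(values)
    if not s:
        raise ValueError("percentile of an empty sequence")
    pos = (len(s) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)

def curriculum_thresholds(step=20):
    """Percentile cut-offs for successive cycles, e.g. [80, 60, 40, 20, 0] for step=20."""
    return list(range(100 - step, -1, -step))

def select_by_percentile(confidences, q):
    """Indices of unlabeled samples whose confidence is at or above the q-th percentile."""
    t = percentile(confidences, q)
    return [i for i, c in enumerate(confidences) if c >= t]

confidences = [0.9, 0.1, 0.5, 0.7, 0.3]
for q in curriculum_thresholds():
    print(q, select_by_percentile(confidences, q))
```

The threshold is recomputed from the current confidence distribution in each cycle. As a result, the share of admitted samples depends on the schedule, not on how high the model's raw scores happen to be.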

Loss Function and Regularized Empirical Risk Minimization

The loss function measures how well an AI model makes predictions by quantifying how far the predicted values deviate from the actual values. In curriculum labeling, the training objective is formulated as regularized empirical risk minimization (ERM). ERM trains a model by minimizing its average loss over the training data set, and the regularization term penalizes overly complex models. In each cycle, the training set combines the labeled data with the pseudo-labeled samples admitted so far. The objective therefore keeps the curriculum structure while the labels are updated, letting training focus on harder examples in later cycles. Regularized ERM helps produce models that generalize well to new data.
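For reference, here is a generic form of regularized ERM over a training set that mixes labeled and pseudo-labeled samples. The notation is assumed for illustration:

- L is the labeled set.
- P_t is the set of pseudo-labeled samples admitted by cycle t.
- ℓ is a per-sample loss such as cross-entropy.
- Ω is a regularizer such as the squared L2 norm of the weights.
- λ ≥ 0 is the regularizer's weight.

The exact weighting used in the original study may differ.

```latex
\theta_t = \arg\min_{\theta}\;
  \frac{1}{|\mathcal{L}| + |\mathcal{P}_t|}
  \sum_{(x,\,y) \in \mathcal{L} \cup \mathcal{P}_t} \ell\big(f_\theta(x),\, y\big)
  \;+\; \lambda\, \Omega(\theta)
```

Setting λ = 0 recovers plain ERM. As P_t grows across cycles, the same objective is minimized over a larger and harder training set.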

Experimental Results and Evaluations

Experiments that drew unlabeled data from classes unrelated to the labeled ones showed only minimal performance degradation. The test error rate stayed within 1% of the in-distribution results. This highlights the method's practicality for real-world applications, where unlabeled data can vary significantly.

Data augmentation also improved model performance. Random cropping and color variation raised test accuracy on CIFAR-10 by 1.2%, demonstrating their importance in curriculum labeling systems.
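The two augmentations mentioned above can be sketched in plain Python. This is an illustrative version, not the study's pipeline. It assumes:

- images are stored as nested H x W x C lists with values in [0, 1];
- the crop uses 4 pixels of padding;
- per-channel intensity scaling stands in for color variation.

The helper names and parameter values are hypothetical.

```python
import random

def random_crop(image, pad=4, rng=random):
    """Zero-pad an H x W x C image by `pad` pixels per side, then crop a random H x W window."""
    h, w, c = len(image), len(image[0]), len(image[0][0])
    padded = [[[0.0] * c for _ in range(w + 2 * pad)] for _ in range(h + 2 * pad)]
    for i in range(h):
        for j in range(w):
            padded[i + pad][j + pad] = list(image[i][j])
    top, left = rng.randint(0, 2 * pad), rng.randint(0, 2 * pad)
    return [row[left:left + w] for row in padded[top:top + h]]

def color_jitter(image, max_delta=0.2, rng=random):
    """Scale each channel by a random factor in [1 - max_delta, 1 + max_delta], clipped to [0, 1]."""
    c = len(image[0][0])
    factors = [rng.uniform(1 - max_delta, 1 + max_delta) for _ in range(c)]
    return [[[min(1.0, max(0.0, px[k] * factors[k])) for k in range(c)] for px in row]
            for row in image]

def augment(image, rng=random):
    """Apply a random crop followed by color jitter, producing a new training view."""
    return color_jitter(random_crop(image, rng=rng), rng=rng)

rng = random.Random(0)
img = [[[0.5, 0.5, 0.5] for _ in range(8)] for _ in range(8)]
view = augment(img, rng=rng)
```

Each call produces a slightly different view of the same image with the same shape. This is what lets augmentation increase the effective diversity of the training set.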

Adaptive thresholding improved semi-supervised learning (SSL) results by including unlabeled data dynamically, which kept the training process balanced.

In-Depth Ablation Study

The study includes an ablation that compares curriculum labeling with traditional pseudo-labeling methods to show what makes the method effective. Ablation is a research method that evaluates the impact of individual components of an AI model or algorithm by removing or changing them. It reveals which parts of the system matter most for correct operation.

Traditional pseudo-labeling relies on fixed thresholds to select high-confidence samples. However, these static approaches can limit the performance of an AI model. The effect is visible on datasets such as CIFAR-10 and SVHN, where selecting pseudo-labels dynamically leads to better generalization.
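The difference between a fixed confidence threshold and a dynamic, rank-based selection can be shown with two made-up confidence distributions. One comes from an early, uncertain model and the other from an overconfident model. The 0.95 threshold, the 40% fraction, and the function names are illustrative assumptions, not values from the study.

```python
def fixed_threshold_select(confidences, threshold=0.95):
    """Static rule: keep samples whose confidence clears a fixed cut-off."""
    return [i for i, c in enumerate(confidences) if c >= threshold]

def top_fraction_select(confidences, fraction):
    """Dynamic rule: keep the `fraction` most confident samples, whatever their absolute scores."""
    k = round(len(confidences) * fraction)
    ranked = sorted(range(len(confidences)), key=lambda i: confidences[i], reverse=True)
    return sorted(ranked[:k])

early = [0.60, 0.72, 0.81, 0.55, 0.90]           # uncertain model early in training
overconfident = [0.99, 0.97, 0.96, 0.98, 0.995]  # model that is confident everywhere

print(fixed_threshold_select(early), top_fraction_select(early, 0.4))                  # → [] [2, 4]
print(fixed_threshold_select(overconfident), top_fraction_select(overconfident, 0.4))  # → [0, 1, 2, 3, 4] [0, 4]
```

The fixed threshold admits nothing from the uncertain model and everything from the overconfident one. The rank-based rule always admits the same share of the most confident samples.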

Model Reinitialization Compared to Finetuning Approaches

Traditional finetuning continues training from the weights of the previous cycle. Curriculum labeling instead reinitializes the model parameters, so each training cycle starts from scratch. This stabilizes the training process and improves accuracy on held-out evaluation datasets.

This shows the advantages of curriculum labeling over conventional methods: it offers a robust and efficient approach to semi-supervised learning. See the complete study for the technical details and experimental results.
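The reinitialization-versus-finetuning comparison described in this section can be sketched with a toy self-training loop. This is a minimal illustration under stated assumptions, not the authors' code:

- It uses a hypothetical pure-Python logistic regression in place of a deep network.
- It pseudo-labels the top 20%, 40%, ..., 100% most confident unlabeled samples over successive cycles.
- A hypothetical `reinit` flag switches between the two strategies. With `reinit=True`, each cycle starts from fresh random weights; with `reinit=False`, it continues from the previous cycle's weights.

```python
import math
import random

def init_params(dim, seed):
    """Draw fresh small random weights and a zero bias."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 0.01) for _ in range(dim)], 0.0

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def prob(params, x):
    """Predicted probability of class 1 for feature vector x."""
    w, b = params
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train(params, xs, ys, epochs=200, lr=0.1):
    """Full-batch gradient descent on the mean logistic loss, starting from `params`."""
    w, b = list(params[0]), params[1]
    n = len(xs)
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for x, y in zip(xs, ys):
            err = prob((w, b), x) - y
            for k, xk in enumerate(x):
                gw[k] += err * xk / n
            gb += err / n
        w = [wk - lr * g for wk, g in zip(w, gw)]
        b -= lr * gb
    return w, b

def curriculum_labeling(lx, ly, ux, step=20, reinit=True, seed=0):
    """Self-training with a percentile curriculum; `reinit` toggles reinitialization vs finetuning."""
    dim = len(lx[0])
    params = train(init_params(dim, seed), lx, ly)  # cycle 0: labeled data only
    for cycle, q in enumerate(range(100 - step, -1, -step), start=1):
        probs = [prob(params, x) for x in ux]
        conf = [max(p, 1.0 - p) for p in probs]             # confidence of the predicted class
        cut = sorted(conf)[int((len(conf) - 1) * q / 100)]  # simple lower-index percentile
        chosen = [i for i, c in enumerate(conf) if c >= cut]
        xs = lx + [ux[i] for i in chosen]
        ys = ly + [1 if probs[i] >= 0.5 else 0 for i in chosen]
        # Reinitialization: fresh weights each cycle. Finetuning: keep the last weights.
        start = init_params(dim, seed + cycle) if reinit else params
        params = train(start, xs, ys)
    return params

lx, ly = [(-2.0,), (2.0,)], [0, 1]
ux = [(-1.5,), (-0.5,), (0.5,), (1.5,), (-3.0,), (3.0,)]
reinit_model = curriculum_labeling(lx, ly, ux, reinit=True)
finetune_model = curriculum_labeling(lx, ly, ux, reinit=False)
```

This toy problem is too easy to show the accuracy gap the study reports. The sketch only shows where the two strategies diverge in the training loop: the choice of starting weights for each cycle.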

Curriculum Labeling in Modern Semi-Supervised AI Training

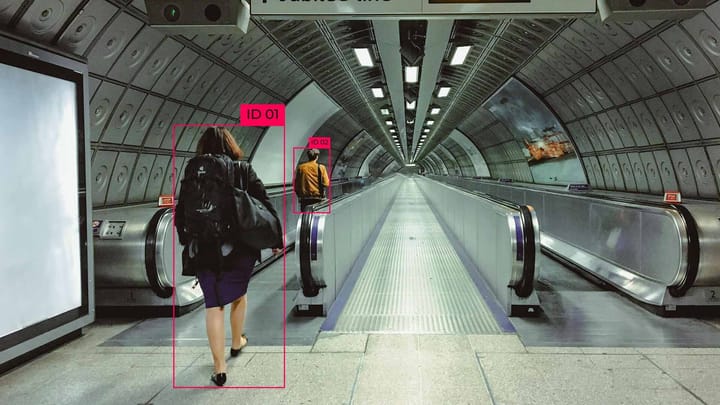

Curriculum labeling is used in image classification tasks, where it offers two main benefits:

- It improves accuracy and reduces training time.

- It produces better classification results from structured data annotations, as demonstrated on test datasets.

Evaluation and Real-World Scenarios

The evaluations show that curriculum labeling is robust when processing diverse and complex datasets. Real-world applications confirm that it improves results in semi-supervised learning (SSL).

For an example of how curriculum labeling is applied and its impact on AI training, see the project on GitHub.

Summary

Curriculum labeling is a semi-supervised learning approach that improves the efficiency and consistency of AI models. This method ensures stable learning processes through iterative retraining and adaptive thresholding.

Experimental evaluations and ablation studies show the advantage of curriculum labeling over traditional pseudo-labeling methods. Its strong results on benchmark datasets such as CIFAR-10 and SVHN point to its practical value in real-world scenarios.

FAQ

What is curriculum labeling, and how does it enhance model training?

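Curriculum labeling is a semi-supervised learning method that structures data annotations to optimize AI training results. It admits pseudo-labeled data in stages, from the most confident predictions to the least confident. It also reinitializes the model before each self-training cycle, which reduces concept drift and improves accuracy.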

How does pseudo-labeling work in semi-supervised learning?

Pseudo-labeling assigns labels to unlabeled data based on the predictions of an AI model. The pseudo-labeled data is then added to the training set, which helps the model generalize better.

How is SSL applied in computer vision tasks?

SSL methods like pseudo-labeling are widely used in computer vision to reduce reliance on labeled data. They enable models to learn from unlabeled data through techniques like consistency training and regularization.

What factors influence image classification performance in SSL?

The quality of pseudo-labels, data augmentation techniques, and the chosen model architecture influence performance. Additionally, the size and diversity of the unlabeled dataset play a significant role.

How does data augmentation impact AI model training?

Data augmentation increases dataset diversity, helping AI models generalize better.
