Data Annotation Types Used for Autonomous Vehicles’ AI

There are two kinds of AI that companies use in autonomous vehicles. One works inside the cabin to monitor and assist any human passengers. The other is what most people think of, and really want to see, in a self-driving car: an AI that can drive without a human driver. That second kind is the focus of this article. Specifically, we look at what kinds of data and data annotation are needed to train it.

The data, in this case, is a large collection of videos and images of everything the AI might encounter while driving. This includes other vehicles, road obstacles, signs and signals, and pedestrians of all sizes in all kinds of situations, including children in strollers and people in wheelchairs. Done right, data annotation makes the difference between an AI that drives safely and one that runs over children during tests.

In general, the more varied and accurately labeled data the final dataset contains, the better. Data annotation for self-driving cars requires several tools and techniques to turn raw footage into a useful dataset for the machine learning model. It is always a large, highly detail-oriented job. Our data annotation outsourcing services can handle it all for you. That way, you can focus on building the affordable, street-legal, safe self-driving car we all want.

AI programmers need detailed knowledge of the kinds of data annotation available and what each is good for in this context. Driving requires processing a lot of information quickly and accurately, then reacting as fast as possible. Many of the relevant details are small, yet they can change the meaning of a situation entirely. For example, a child running into the street ahead of the vehicle demands a different reaction than a child running along the sidewalk.

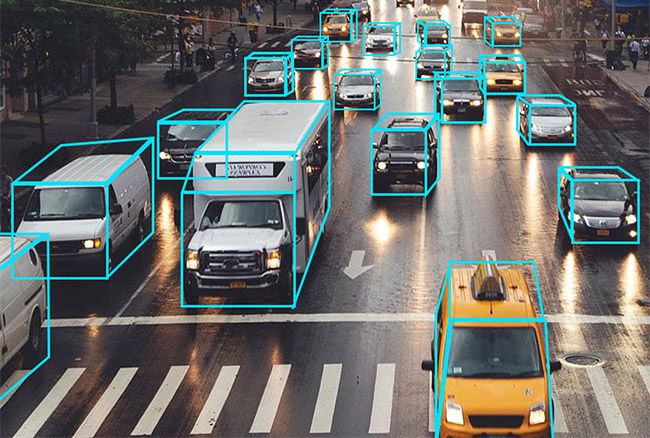

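2D bounding boxes and rotated boxes are the simplest kinds of data annotation and tend to cost the least. A bounding box records where an object is in the frame (localization) and what it is (identification). Common objects labeled with bounding boxes include street signs, traffic lights, parking spaces, pedestrians, and other vehicles. A rotated box adds an angle, so it can fit snugly around objects that sit at a slant in the image, such as an angled parking space.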
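
To make the two box types concrete, here is a minimal Python sketch. The `BoxLabel` record, its field names, and the helper functions are hypothetical illustrations, not the schema of any particular annotation tool (COCO, for instance, stores boxes as `[x, y, width, height]`). It shows an axis-aligned box, the intersection-over-union (IoU) score often used to check how closely two annotators' boxes agree, and how a rotated box can be described by a center, a size, and an angle.

```python
import math
from dataclasses import dataclass


@dataclass
class BoxLabel:
    """Axis-aligned 2D box in pixel coordinates (hypothetical schema)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: BoxLabel, b: BoxLabel) -> float:
    """Intersection over union of two boxes: 1.0 means identical, 0.0 means no overlap."""
    overlap_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    overlap_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = overlap_w * overlap_h
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def rotated_box_corners(cx: float, cy: float, w: float, h: float,
                        angle_deg: float) -> list[tuple[float, float]]:
    """Corners of a rotated box described by its center, width, height, and angle."""
    t = math.radians(angle_deg)
    c, s = math.cos(t), math.sin(t)
    corners = []
    for dx, dy in ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)):
        # Rotate the offset from the center, then translate back to the center.
        corners.append((cx + dx * c - dy * s, cy + dx * s + dy * c))
    return corners


if __name__ == "__main__":
    a = BoxLabel("pedestrian", 100, 50, 140, 150)  # annotator A
    b = BoxLabel("pedestrian", 110, 60, 150, 160)  # annotator B
    print(round(iou(a, b), 3))  # 0.509
```

Here the two annotators' pedestrian boxes overlap with an IoU of about 0.51. A team might send pairs below some agreed threshold back for review, though the threshold itself is a project decision.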

Because 2D bounding boxes lack depth, they are not always the right tool. 3D cuboid annotation adds that depth information. Based on the camera angle and the kind of object identified, annotators can accurately estimate where the object's hidden edges are. That yields a good estimate of the object's dimensions, which is important for judging its distance and its position relative to other objects. This makes cuboid annotation vital for training a self-driving car's AI.
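
A 3D cuboid label is commonly stored as a center point, three dimensions, and a heading angle. The sketch below assumes a vehicle-centered frame in meters with x pointing forward, y to the left, and z up, and rotation only about the vertical axis (yaw). Those conventions, the `Cuboid` class, and its methods are illustrative assumptions, since datasets differ on axes and units. It shows how one annotated cuboid yields the object's eight corners, its size, and its distance from the vehicle.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """Hypothetical 3D cuboid label in an assumed ego-vehicle frame (meters)."""
    label: str
    cx: float      # center, distance forward of the vehicle
    cy: float      # center, distance to the left of the vehicle
    cz: float      # center height
    length: float  # extent along the object's heading
    width: float
    height: float
    yaw: float     # heading in radians, rotation about the vertical axis

    def corners(self) -> list[tuple[float, float, float]]:
        """Eight corners: rotate box-local offsets by yaw, then shift to the center."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        pts = []
        for dx in (-self.length / 2, self.length / 2):
            for dy in (-self.width / 2, self.width / 2):
                for dz in (-self.height / 2, self.height / 2):
                    pts.append((self.cx + dx * c - dy * s,
                                self.cy + dx * s + dy * c,
                                self.cz + dz))
        return pts

    def ground_distance(self) -> float:
        """Horizontal distance from the vehicle's origin to the cuboid's center."""
        return math.hypot(self.cx, self.cy)

    def volume(self) -> float:
        return self.length * self.width * self.height


if __name__ == "__main__":
    car = Cuboid("car", 12.0, 5.0, 0.8, 4.5, 1.8, 1.5, math.radians(30))
    print(car.ground_distance())  # 13.0
    print(len(car.corners()))     # 8
```

The distance here is measured to the center for simplicity; a planner would more likely care about the nearest corner or face, which the `corners()` output makes straightforward to compute.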

Kinds of Video Labeling for Autonomous Cars

- 2D Bounding Box Annotation

- 3D Cuboid Annotation

- Polygon Annotation

- Semantic Segmentation

- Lane Annotation

- Lines and Splines Annotation

More Annotation Types and Their Uses

Because we don't live in a world made up of voxels like Minecraft, cuboids cannot capture the true shape of most objects, so we use polygon annotation for greater detail and precision. Polygons trace an object's actual outline, which gives precise object localization. However, this increased accuracy comes at a price: polygons take more time and resources than boxes or cuboids. The road is full of irregularly shaped objects moving at varying speeds, so the extra precision is well worth it.
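
A polygon label is simply an ordered list of vertices traced around the object's outline. The minimal sketch below (function names are illustrative, not from any specific tool) uses the shoelace formula to compute the polygon's area and compares it with the area of the tightest axis-aligned box around the same vertices, which shows how much background a bounding box would sweep in. It also includes an even-odd ray-casting test for whether a pixel falls inside the outline. The triangle is a made-up stand-in for a warning sign.

```python
def polygon_area(pts: list[tuple[float, float]]) -> float:
    """Area enclosed by an ordered list of vertices (shoelace formula)."""
    total = 0.0
    for i in range(len(pts)):
        x1, y1 = pts[i]
        x2, y2 = pts[(i + 1) % len(pts)]  # wrap around to close the outline
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def bbox_area(pts: list[tuple[float, float]]) -> float:
    """Area of the tightest axis-aligned box around the same vertices."""
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


def contains(pts: list[tuple[float, float]], x: float, y: float) -> bool:
    """Even-odd ray casting: count edge crossings of a ray going right from (x, y)."""
    inside = False
    for i in range(len(pts)):
        x1, y1 = pts[i]
        x2, y2 = pts[(i + 1) % len(pts)]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


if __name__ == "__main__":
    sign = [(0, 0), (10, 0), (5, 8)]  # made-up triangular sign outline, in pixels
    print(polygon_area(sign) / bbox_area(sign))  # 0.5: half the box is background
    print(contains(sign, 5, 2), contains(sign, 1, 7))  # True False
```

For this triangle, a bounding box would label twice as many pixels as the object actually covers; the point (1, 7) sits inside the box but outside the sign.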

Semantic segmentation takes even more labor and computational resources to produce, because it classifies and labels every pixel in the image. Every part of the scene is assigned to a category; nothing is ignored or left out. As a result, semantic segmentation demands the highest degree of detail and accuracy. It also does the most to help an AI understand and navigate the physical world around it using computer vision.
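
In semantic segmentation, the label is a mask the same size as the image, holding one class ID per pixel. The sketch below keeps the mask as nested Python lists to stay dependency-free (real pipelines typically use arrays or image files), and the class list is hypothetical. It checks that every pixel carries a known class, counts pixels per class, and computes per-class intersection over union (IoU), a standard way to score a model's predicted mask against the annotated one.

```python
from collections import Counter

# Hypothetical class IDs, for illustration only.
CLASSES = {0: "road", 1: "sidewalk", 2: "vehicle", 3: "pedestrian", 4: "sky"}


def is_fully_labeled(mask: list[list[int]], classes: dict[int, str] = CLASSES) -> bool:
    """True if every pixel holds a known class ID, i.e. nothing is left unlabeled."""
    return all(v in classes for row in mask for v in row)


def pixel_counts(mask: list[list[int]]) -> Counter:
    """Number of pixels assigned to each class ID."""
    return Counter(v for row in mask for v in row)


def per_class_iou(truth: list[list[int]], pred: list[list[int]],
                  classes: dict[int, str] = CLASSES) -> dict[str, float]:
    """IoU per class between an annotated mask and a predicted mask of the same shape.
    Classes absent from both masks are skipped."""
    scores = {}
    for cid, name in classes.items():
        inter = union = 0
        for t_row, p_row in zip(truth, pred):
            for t, p in zip(t_row, p_row):
                if t == cid and p == cid:
                    inter += 1
                if t == cid or p == cid:
                    union += 1
        if union:
            scores[name] = inter / union
    return scores


if __name__ == "__main__":
    truth = [[4, 4, 4, 4], [2, 2, 0, 0], [0, 0, 0, 3]]  # tiny 3x4 annotated mask
    pred = [[4, 4, 4, 4], [2, 0, 0, 0], [0, 0, 0, 3]]   # model missed one vehicle pixel
    print(is_fully_labeled(truth))      # True
    print(per_class_iou(truth, pred))   # vehicle scores 0.5: one of its two pixels was missed
```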

No discussion of video labeling for autonomous cars would be complete without lane annotation. Lane annotation is crucial for vehicle safety and compliance with road rules: to avoid collisions and avoid cutting off other drivers, the AI must recognize designated lanes and stay in them. Training a self-driving car's AI without lane annotation data would therefore be a serious mistake.
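
One way to picture a lane annotation is as a lane record with left and right boundary polylines, each tagged with its marking type. The sketch below is a hypothetical structure, not any dataset's format: coordinates are assumed to be in meters on a flat ground plane, and `may_cross` encodes a deliberately simplified rule (dashed may be crossed, solid may not), whereas real traffic rules vary by jurisdiction. It measures how far a point, such as the vehicle's position, is from each boundary.

```python
import math
from dataclasses import dataclass
from enum import Enum


class Marking(Enum):
    SOLID = "solid"
    DASHED = "dashed"


@dataclass
class LaneBoundary:
    points: list[tuple[float, float]]  # ordered along the direction of travel, meters
    marking: Marking


@dataclass
class Lane:
    lane_id: str
    left: LaneBoundary
    right: LaneBoundary


def _dist_to_segment(p, a, b) -> float:
    """Shortest distance from point p to the line segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp the projection to its endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def distance_to_boundary(p, boundary: LaneBoundary) -> float:
    pts = boundary.points
    return min(_dist_to_segment(p, pts[i], pts[i + 1]) for i in range(len(pts) - 1))


def boundary_distances(lane: Lane, p) -> tuple[float, float]:
    """(distance to left boundary, distance to right boundary) for point p."""
    return distance_to_boundary(p, lane.left), distance_to_boundary(p, lane.right)


def may_cross(lane: Lane, side: str) -> bool:
    """Simplified, hypothetical rule: only dashed markings may be crossed."""
    boundary = lane.left if side == "left" else lane.right
    return boundary.marking is Marking.DASHED


if __name__ == "__main__":
    lane = Lane("lane-1",
                left=LaneBoundary([(0, 3.5), (50, 3.5)], Marking.DASHED),
                right=LaneBoundary([(0, 0), (50, 0)], Marking.SOLID))
    print(boundary_distances(lane, (10, 1.0)))  # (2.5, 1.0)
    print(may_cross(lane, "left"), may_cross(lane, "right"))  # True False
```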

Lines and splines annotation, sometimes called polyline annotation, is closely related to lane annotation. It is primarily useful for teaching an AI to recognize boundaries and lanes. As the name suggests, annotators simply draw lines along the lanes and boundaries the AI must recognize; splines let those lines follow curves smoothly. This allows the AI to learn the basics of staying in its lane and off the sidewalk.
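
In practice, an annotator clicks a handful of points along a lane line and the tool turns them into a smooth curve. Which spline a given tool uses varies; the sketch below assumes a uniform Catmull-Rom spline, one common choice because the curve passes through every clicked point. The function name and the `samples_per_segment` parameter are illustrative.

```python
def catmull_rom(points: list[tuple[float, float]],
                samples_per_segment: int = 10) -> list[tuple[float, ...]]:
    """Densify clicked control points into a smooth curve through every point
    (uniform Catmull-Rom spline)."""
    if len(points) < 2:
        return [tuple(float(v) for v in p) for p in points]
    # Repeat the end points so the curve starts and ends exactly at the clicks.
    pts = [points[0], *points, points[-1]]
    curve = []
    for i in range(1, len(pts) - 2):
        p0, p1, p2, p3 = pts[i - 1], pts[i], pts[i + 1], pts[i + 2]
        for k in range(samples_per_segment):
            t = k / samples_per_segment
            # Evaluate the cubic per coordinate; t = 0 gives p1, t = 1 would give p2.
            curve.append(tuple(
                0.5 * (2 * b + (c - a) * t
                       + (2 * a - 5 * b + 4 * c - d) * t ** 2
                       + (3 * b - a - 3 * c + d) * t ** 3)
                for a, b, c, d in zip(p0, p1, p2, p3)))
    curve.append(tuple(float(v) for v in points[-1]))
    return curve


if __name__ == "__main__":
    clicks = [(0, 0), (10, 1), (20, 3), (30, 6)]  # made-up clicks along a curving lane line
    curve = catmull_rom(clicks, samples_per_segment=5)
    print(len(curve))  # 16
```

Three segments sampled five times each, plus the final click, give 16 points. The dense curve is what a model would be trained to reproduce, while the annotator only had to place four points.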

A poorly programmed AI is the death of a self-driving vehicle project. It can also be the death of pedestrians and other drivers. To avoid this, AI designers must ensure that their project has robust, detailed data annotation of the right types.
