Embedding-Based Annotation: Leveraging Vector Spaces for Automatic Labeling

The basic idea behind embedding-based annotation is to represent data (e.g., text, images, or other complex objects) as vectors in a continuous, multidimensional vector space. These vectors capture the semantic meaning or relevant characteristics of the data, and the space is structured so that similar data points lie closer together. This spatial relationship allows machine learning models to infer labels for new data from its proximity to known examples.

In the context of automatic labeling, the embedding-based approach compares the similarity between the embedding of an unlabeled data point and a set of embeddings associated with predefined categories or labels. If an unlabeled document, for example, has a vector representation close to the vector of a particular label, the system can automatically assign that label to the document.
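The comparison described above can be sketched in a few lines. This is a minimal illustration, not a production implementation: the three-dimensional vectors are toy values chosen by hand, and the function names and the `min_similarity` threshold are assumptions introduced here. Real embeddings would come from a trained model and have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)

def assign_label(doc_vec, label_vecs, min_similarity=0.5):
    """Return (label, score) for the closest label, or (None, score) if too far."""
    best_label, best_score = None, -1.0
    for label, vec in label_vecs.items():
        score = cosine_similarity(doc_vec, vec)
        if score > best_score:
            best_label, best_score = label, score
    if best_score < min_similarity:
        return None, best_score  # leave the item unlabeled
    return best_label, best_score

# Toy vectors (assumption); real ones would be produced by an embedding model.
label_vecs = {
    "sports": [0.9, 0.1, 0.0],
    "finance": [0.1, 0.9, 0.1],
}
doc_vec = [0.8, 0.2, 0.1]
label, score = assign_label(doc_vec, label_vecs)
print(label, round(score, 3))
```

Returning `None` below the threshold keeps distant items unlabeled instead of forcing them into the nearest category, which is usually the safer default for automatic annotation.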

Key Takeaways

- Embedding-based annotation offers precise and efficient automatic labeling by utilizing vector spaces.

- Transitioning from traditional methods to embedding-based techniques enhances model adaptability and performance.

- This article combines theoretical knowledge with practical coding examples for a holistic understanding.

Introduction to Embedding-Based Annotation

Embedding-based annotation is a machine learning and natural language processing (NLP) technique that uses vector spaces to label or annotate unstructured data automatically. Traditionally, data annotation has been time-consuming, requiring human effort to assign meaningful labels to large data sets. This can be particularly challenging in text classification, image tagging, and sentiment analysis, where large amounts of data must be processed quickly and accurately.

The basic idea behind embedding-based annotation is to represent data, such as words, sentences, or images, as vectors in a high-dimensional vector space. These vector representations, known as embeddings, capture the semantic meaning or central characteristics of the data in a way that exposes the underlying relationships between different pieces of data. Because similar data points sit close to each other in this vector space, embeddings allow labels to be assigned automatically based on proximity to existing labeled examples.
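One common way to turn "proximity to existing labeled examples" into a label is a k-nearest-neighbor vote. The sketch below assumes Euclidean distance, `k=3`, and hand-made two-dimensional vectors; none of these choices come from a specific library, and cosine distance would work equally well here.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_label(query, labeled_examples, k=3):
    """Majority label among the k labeled embeddings nearest to `query`.

    `labeled_examples` is a list of (vector, label) pairs.
    """
    nearest = sorted(labeled_examples, key=lambda ex: euclidean(query, ex[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Toy labeled embeddings (assumption), forming two well-separated groups.
labeled_examples = [
    ([0.0, 0.1], "negative"),
    ([0.1, 0.0], "negative"),
    ([0.2, 0.2], "negative"),
    ([1.0, 0.9], "positive"),
    ([0.9, 1.0], "positive"),
    ([0.8, 0.8], "positive"),
]
print(knn_label([0.85, 0.9], labeled_examples))
```

An odd `k` avoids ties when there are two classes. With more classes, a tie-breaking rule such as preferring the closest neighbor would be needed.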

In NLP, for example, models such as Word2Vec and GloVe produce a single static embedding per word, while newer transformer-based models such as BERT produce embeddings that reflect the context in which a word or sentence appears. These embeddings can then be used to annotate new, unannotated data by measuring the similarity between the embeddings of the unannotated data and those of the pre-labeled categories.

Understanding the Role of Embeddings

In traditional machine learning, data is often represented in sparse or discrete formats, such as one-hot encoding for words or raw pixel values for images. However, these representations do not capture the inherent relationships between data points, such as similarities between words or common features shared by images. Embeddings, on the other hand, represent data in a way that reflects these relationships.
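The difference is easy to see numerically. In the sketch below, one-hot vectors for any two distinct words have a cosine similarity of exactly zero, while dense vectors can express degrees of similarity. The dense values are hand-picked for illustration and are not learned from data.

```python
import math

def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

vocab = ["cat", "dog", "car"]

def one_hot(word):
    """Vector with a single 1 at the word's vocabulary index."""
    return [1.0 if w == word else 0.0 for w in vocab]

# Hand-picked dense vectors (assumption), standing in for learned embeddings.
dense = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

one_hot_cat_dog = cosine(one_hot("cat"), one_hot("dog"))  # distinct one-hot vectors share no dimension
dense_cat_dog = cosine(dense["cat"], dense["dog"])
dense_cat_car = cosine(dense["cat"], dense["car"])
print(one_hot_cat_dog, dense_cat_dog > dense_cat_car)
```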

Embeddings are learned so that distances between vectors reflect meaningful relationships. In Word2Vec, for example, the model learns embeddings by predicting surrounding words (context) based on a given word (target), resulting in a representation that reflects the usage and meaning of a word in different contexts. This process allows embeddings to capture a wide range of linguistic features, including syntax, semantics, and even more abstract relationships, such as analogy.
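The target-to-context setup described above corresponds to Word2Vec's skip-gram variant. The sketch below only shows how the (target, context) training pairs are generated from a sentence. The function name and window size are illustrative assumptions, and the neural network that would be trained on these pairs is omitted.

```python
def skipgram_pairs(tokens, window=2):
    """Return (target, context) pairs for every word within `window` positions."""
    pairs = []
    for i, target in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs

tokens = "the cat sat on the mat".split()
pairs = skipgram_pairs(tokens, window=1)
print(pairs[:3])  # [('the', 'cat'), ('cat', 'the'), ('cat', 'sat')]
```

Words that appear in similar contexts generate similar sets of pairs, which is why training on them pushes their vectors toward each other.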

Embeddings are not limited to words. They can also represent sentences, paragraphs, or entire documents, capturing more complex structures and contexts. For images or other data types, embeddings are learned using convolutional neural networks (CNNs) or other deep learning models, creating vector representations that capture visual characteristics such as shapes, colors, and textures.

Best Practices for Embedding-Based Labeling

- Label refinement and iterative feedback. Although embedding-based annotation is automated, it should not run entirely without oversight. Regularly reviewing and refining the labels generated by the model is essential. For example, outliers or ambiguous data points may be misclassified by automatic annotation. Incorporating human feedback in the form of active learning or periodic manual checks can raise accuracy and catch misclassifications. The model can also be retrained on newly annotated data, which improves its predictions iteratively.

- Deal with class imbalance. Class imbalance can be problematic with embedding-based labeling when specific categories or labels are underrepresented in the data. The model may not annotate the underrepresented categories effectively if the training data behind the embeddings is not balanced across classes. Techniques such as over-sampling underrepresented classes, under-sampling overrepresented classes, or using weighted loss functions during training can help mitigate this problem and lead to more balanced labeling.

- Regular evaluation and monitoring of the model. Consistent assessment of the labeling process is critical to maintaining accuracy over time. This includes tracking performance metrics such as precision, recall, and F1 score to assess the quality of the labels generated by the embedding model. It is essential to monitor how the model performs on new or changing data, especially when the distribution of the data changes, which can affect embedding performance. Periodic re-evaluation helps ensure the system remains robust when new data is added.

- Eliminate noise in the data. Data quality is a crucial factor for embedding-based labeling. Noisy or inconsistent data (e.g., spelling errors, outdated terminology, or irrelevant features) can confuse the model and lead to incorrect labels. Preprocessing steps, such as text normalization, object recognition, or filtering out irrelevant data, help reduce noise and ensure that the generated embeddings reflect the essential characteristics of the data.
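Two of the practices above lend themselves to a short sketch: routing ambiguous items to a human reviewer, and scoring automatic labels against a human-checked sample. The function names, the `min_margin` threshold, and the sample labels are assumptions made for illustration, not part of any particular tool.

```python
def needs_review(similarities, min_margin=0.1):
    """Flag an item when its top two label similarities are too close to call.

    `similarities` maps label -> similarity score for one item.
    """
    ranked = sorted(similarities.values(), reverse=True)
    if len(ranked) < 2:
        return False
    return (ranked[0] - ranked[1]) < min_margin

def precision_recall_f1(true_labels, predicted_labels, label):
    """Precision, recall, and F1 for one label, treated as the positive class."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# An ambiguous item goes to the review queue; a clear-cut one does not.
print(needs_review({"sports": 0.62, "finance": 0.58}))
print(needs_review({"sports": 0.91, "finance": 0.20}))

# Human-verified labels versus automatic labels for a small audit sample.
human = ["sports", "sports", "finance", "finance", "sports"]
auto = ["sports", "finance", "finance", "finance", "sports"]
print(precision_recall_f1(human, auto, "sports"))
```

Tracking these per-label scores on a fresh audit sample at regular intervals is one simple way to notice when a shift in the data starts to degrade label quality.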

Integrating Regressors and Classifiers for Enhanced Annotation

- Using regression to estimate confidence. In some annotation tasks, it is not enough to assign a label or category; you also want to quantify the confidence or certainty associated with that label. Regression models can be used alongside classifiers to derive confidence scores or probability distributions over labels.

- Hybrid models for multitasking. In some scenarios, tasks may involve both categorical and continuous outcomes. A hybrid model combining classification and regression can be trained to predict multiple related outcomes simultaneously.

- Refine labels with continuous predictions. Sometimes, the labels must be refined or adjusted based on additional information. For example, in a supervised document-categorization task, a classifier may provide an initial label. A regressor can then refine that label by adding continuous predictions, such as the probability of a document belonging to a category or its relevance score for a particular task.

- Dynamic adaptation with feedback. In annotation tasks, models can adapt based on feedback, and this feedback often takes both categorical and continuous forms. For example, a model can be continuously trained on newly labeled data. The classifier can update its category predictions, while the regressor can adjust the confidence or other continuous characteristics associated with those predictions.

- Improving scalability with multiple label sources. Sometimes, annotation tasks involve multiple label sources. For example, a video may be annotated with several categories (action, genre, mood) and continuous features (e.g., scene duration or average mood score). By combining regressors and classifiers, the model can scale more efficiently to handle different labels.
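A minimal sketch of such a hybrid setup is shown below: one shared embedding feeds a classification head, which yields a label and a probability, and a linear regression head, which yields a continuous score. The regression weights, bias, and softmax temperature are hypothetical values chosen for illustration; in practice they would be fitted to labeled data.

```python
import math

def softmax(scores, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def annotate(embedding, label_vecs, reg_weights, reg_bias, temperature=0.1):
    """Return a categorical label, its probability, and a continuous score."""
    labels = list(label_vecs)
    # Classification head: dot-product similarity to each label vector.
    sims = [sum(x * w for x, w in zip(embedding, label_vecs[name])) for name in labels]
    probs = softmax(sims, temperature)
    best = max(range(len(labels)), key=probs.__getitem__)
    # Regression head: a linear model on the same embedding.
    score = sum(x * w for x, w in zip(embedding, reg_weights)) + reg_bias
    return {"label": labels[best], "confidence": probs[best], "relevance": score}

label_vecs = {"action": [1.0, 0.0], "drama": [0.0, 1.0]}
# Hypothetical regression parameters (assumption), not fitted to any data.
result = annotate([0.7, 0.3], label_vecs, reg_weights=[0.5, 0.2], reg_bias=0.1)
print(result)
```

Sharing the embedding between both heads means each new item is embedded once, and every additional categorical or continuous output only adds a small head on top.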

Optimizing Model Performance with Embeddings

Optimizing model performance through embeddings is a key approach in modern machine learning, particularly in natural language processing (NLP), computer vision, and other areas that work with high-dimensional data representations. Good embeddings help models pick up the underlying patterns and relationships in the data, improving their ability to make predictions, classify items, or cluster data points. Embedding optimization therefore focuses on improving the quality of these vector representations so that they capture complex data characteristics more faithfully.

One of the main ways to optimize embeddings is to choose the right technique for the task at hand. For text, models such as Word2Vec, GloVe, or BERT generate embeddings of words or sentences. Similarly, for images, convolutional neural networks (CNNs) or vision-language models such as CLIP can generate embeddings that capture visual features such as shapes, colors, and textures. Choosing an embedding model suited to the data type is essential to optimizing performance.

Another critical aspect of optimizing embedding-based models is efficiently handling high-dimensional spaces. Embedding models typically represent data points in multidimensional vector spaces, where each dimension encodes a learned feature of the data. However, the "curse of dimensionality" can arise in very high-dimensional spaces, leading to inefficiency and overfitting. To mitigate this, dimensionality reduction techniques such as Principal Component Analysis (PCA) can reduce the number of dimensions while retaining as much information as possible, and t-SNE can do the same for visualization in two or three dimensions. In addition, techniques such as autoencoders or Siamese networks can help create more compact and efficient embeddings that still capture essential relationships and patterns in the data.
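To make the PCA step concrete, the sketch below finds the single direction of greatest variance in a handful of toy vectors and projects them onto it. It uses power iteration on the covariance matrix so that it runs with only the standard library. This is an illustrative simplification with invented data, and a library implementation such as scikit-learn's `PCA` would normally be used instead.

```python
import math

def center(rows):
    """Subtract the per-dimension mean from every row."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[r[j] - means[j] for j in range(d)] for r in rows]

def covariance(centered):
    """Sample covariance matrix of already-centered rows."""
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]

def top_component(cov, iterations=200):
    """Approximate the leading eigenvector of `cov` by power iteration."""
    d = len(cov)
    v = [1.0 / math.sqrt(d)] * d  # fixed starting direction for reproducibility
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break  # the data has no variance along the current direction
        v = [x / norm for x in w]
    return v

def project(centered, component):
    """One-dimensional coordinate of each row along `component`."""
    return [sum(x * c for x, c in zip(row, component)) for row in centered]

# Toy 3-D "embeddings" (assumption) whose first two dimensions rise together.
embeddings = [[1.0, 1.1, 0.0], [2.0, 1.9, 0.1], [3.0, 3.2, 0.0], [4.0, 3.8, 0.1]]
centered = center(embeddings)
component = top_component(covariance(centered))
reduced = project(centered, component)
print([round(x, 2) for x in component])
print([round(x, 2) for x in reduced])
```

Here three dimensions collapse to one with little loss, because almost all of the variance lies along a single direction. Real embeddings usually need many more components to retain most of their information.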

Regularization techniques, such as L2 regularization or dropout, help prevent overfitting by controlling the complexity of the model and encouraging it to generalize better. In the context of embeddings, regularization can encourage the model to learn more robust representations that do not rely too heavily on noisy or irrelevant features in the data. For example, embedding layers can be regularized to avoid learning trivial or redundant representations, thereby improving their ability to generalize to unseen data.

Combining Embeddings with CLIP for Image Annotation

Combining embeddings with CLIP (Contrastive Language-Image Pre-training) for image annotation is a powerful approach that exploits the synergy between visual and textual data to improve the image annotation process. CLIP, developed by OpenAI, is a model that represents images and text in a common embedding space, allowing it to support various tasks that require both modalities, such as image classification, captioning, and search.

CLIP is trained to align images and text descriptions in a shared vector space, where each image and its corresponding text (e.g., a caption) are projected into similar regions of space. This dual learning allows CLIP to link textual and visual representations.

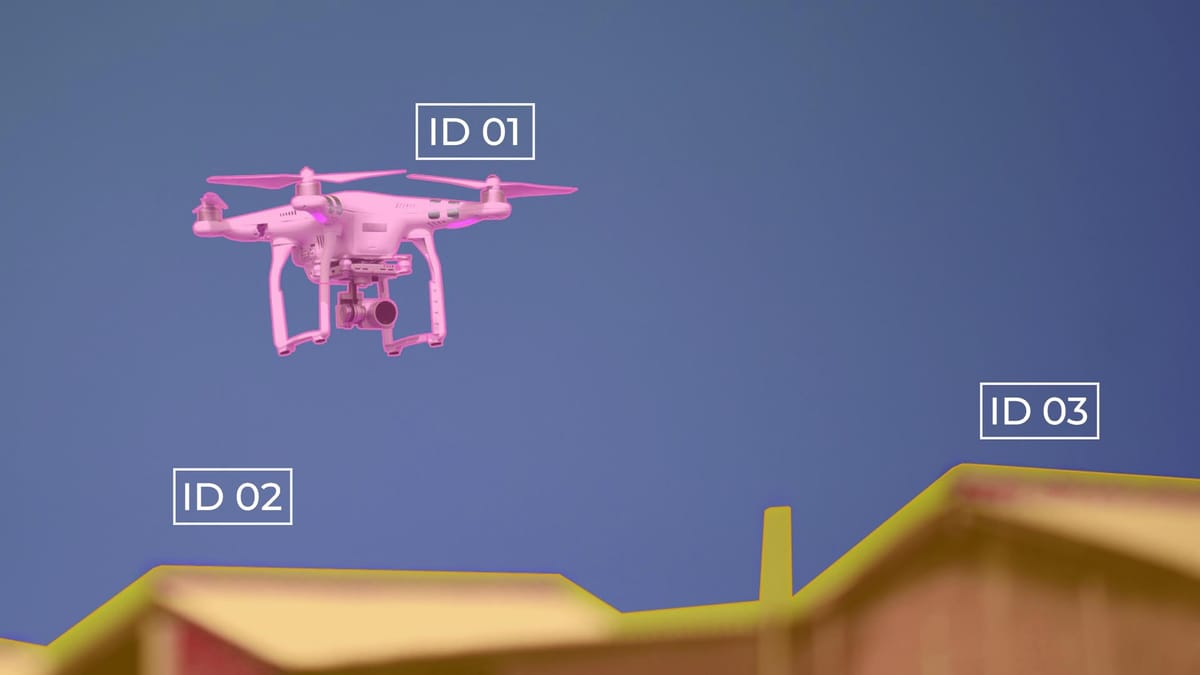

In the context of image annotation, CLIP can automatically assign appropriate tags or captions to an image by matching it with descriptive text. The model generates embeddings for both the picture and the candidate text descriptions, and the most relevant annotations are selected based on their proximity in the shared embedding space.
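The selection step can be sketched without loading a model. The code below assumes the image and the candidate tag prompts have already been embedded, and it uses short stand-in vectors where a real pipeline would use the outputs of CLIP's image and text encoders. The `logit_scale` of 100 is an assumed scaling factor, and the function names are illustrative.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)

def rank_tags(image_embedding, text_embeddings, logit_scale=100.0, top_k=2):
    """Rank candidate tags for one image by softmax over scaled cosine similarity.

    `text_embeddings` maps tag text -> embedding in the same space as the image.
    """
    image = l2_normalize(image_embedding)
    tags = list(text_embeddings)
    logits = []
    for tag in tags:
        text = l2_normalize(text_embeddings[tag])
        logits.append(logit_scale * sum(a * b for a, b in zip(image, text)))
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(logit - peak) for logit in logits]
    total = sum(exps)
    scored = sorted(zip(tags, (e / total for e in exps)),
                    key=lambda item: item[1], reverse=True)
    return scored[:top_k]

# Stand-in vectors (assumption); real ones would come from CLIP's encoders.
image_embedding = [0.9, 0.1, 0.2]
text_embeddings = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a cat": [0.2, 0.9, 0.1],
    "a photo of a car": [0.1, 0.1, 0.9],
}
top = rank_tags(image_embedding, text_embeddings)
print(top[0][0])
```

Because the tags are plain text prompts, the label set can be changed at any time by embedding new prompts, with no retraining of the image model.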

One key advantage of combining embeddings with CLIP is that the model can generate embeddings for both images and text, allowing for more detailed and dynamic annotations. By representing images and text in the same embedding space, CLIP supports tasks such as image captioning, keyword highlighting, and visual question answering (VQA), because textual queries and visual content can be compared directly in that shared space.

Summary

Embedding-based annotation harnesses the power of vector spaces to automate the process of labeling data. Embedding models capture the underlying relationships and semantic meanings between data elements by representing data points (e.g., words, images, or sentences) in continuous, multidimensional vector spaces. This method provides automatic labeling by measuring the proximity of data points in the vector space, which facilitates the assignment of appropriate annotations based on patterns found in the data. Whether the data is text, images, or other forms of data, embedding-based annotation simplifies and speeds up the labeling process by using machine learning models that can understand complex data relationships.

The essence of embedding-based annotation is the ability to transform data into embeddings that can be compared in a shared space. These embeddings are often generated using pre-trained models, such as Word2Vec for text or CLIP for images, that have learned to map data into spaces that preserve semantic relationships.

FAQ

What is embedding-based labeling?

Embedding-based labeling is a technique that automates the labeling process using vector representations (embeddings) of data.

How do embeddings improve model performance?

Embeddings capture complex relationships and patterns in data, providing richer representations than traditional methods.

Are embeddings suitable for real-time applications?

Yes, embeddings can be used in real-time applications. However, the choice of embedding method and careful model optimization are crucial to ensure low latency and efficient processing.

How is quality assurance maintained in automatic labeling processes?

Quality assurance relies on validation methods such as cross-validation and confusion matrix analysis, together with techniques for detecting and correcting errors. Metrics such as precision and accuracy measure how effective the labeling system is.

What are some best practices for implementing embedding-based labeling systems?

Best practices include following data preparation guidelines and using model evaluation criteria. Implementing consistent maintenance protocols is essential.
