History of YOLO: From YOLOv1 to YOLOv10

The YOLO (You Only Look Once) family has transformed computer vision since its debut in 2016. Joseph Redmon and his team introduced this groundbreaking framework, altering the real-time processing landscape in artificial intelligence.

YOLO's inception started with YOLOv1, employing convolutional neural networks for swift object detection in images. This model's real-time processing and high accuracy distinguished it from earlier two-stage detectors.

As YOLO progressed, each iteration introduced notable enhancements. YOLOv2 and YOLOv3 enhanced the architecture, and subsequent versions like YOLOv4 and YOLOv5 further elevated performance. The latest versions, including YOLOv10, continue to boost efficiency and precision in detecting objects.

Key Takeaways

- YOLO revolutionized real-time object detection in computer vision

- The framework evolved from YOLOv1 to YOLOv10, each version improving performance

- YOLO uses convolutional neural networks for efficient image processing

- Recent versions like YOLOv10 focus on reducing computational costs

- YOLO's development has significantly impacted various real-world applications

Introduction to YOLO: Revolutionizing Object Detection

YOLO, short for You Only Look Once, has revolutionized the field of computer vision with its groundbreaking approach to object detection. This framework has significantly altered how machines interpret and analyze visual data. It has opened up numerous opportunities in image recognition and deep learning applications.

The birth of real-time object detection

YOLO introduced a single-stage detector approach, simultaneously handling object identification and classification in one network pass. This innovation enabled faster processing and smaller model sizes. It made the framework ideal for real-time applications and edge devices with limited computing power.

YOLO's impact on computer vision

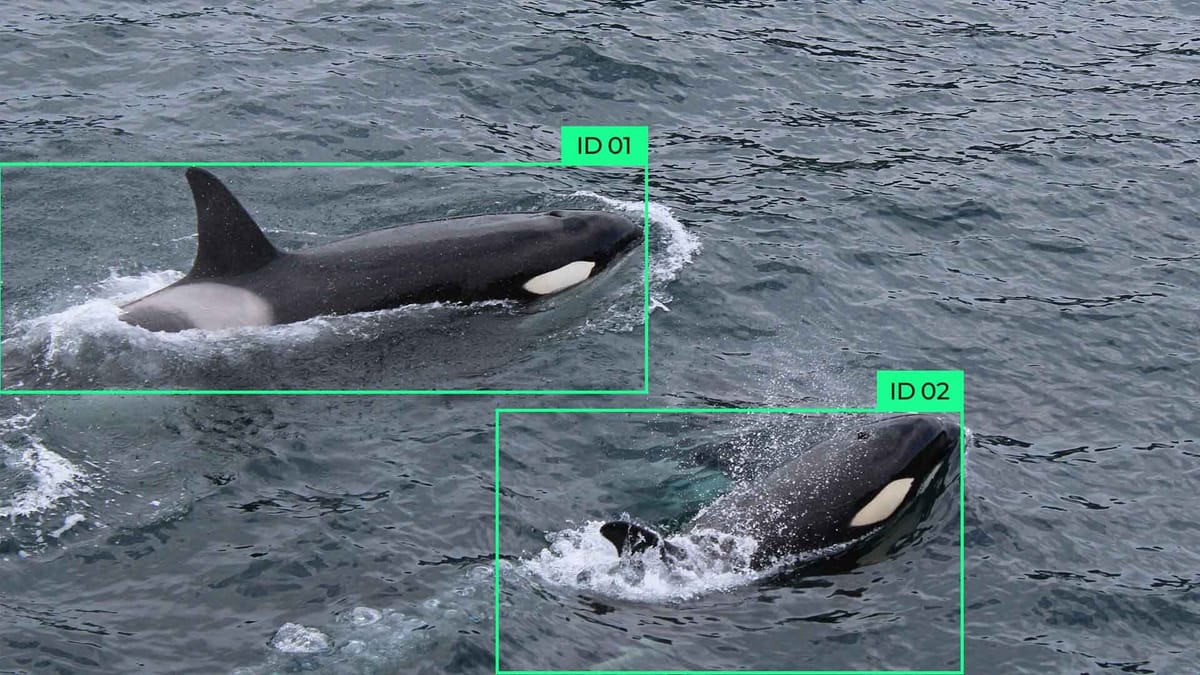

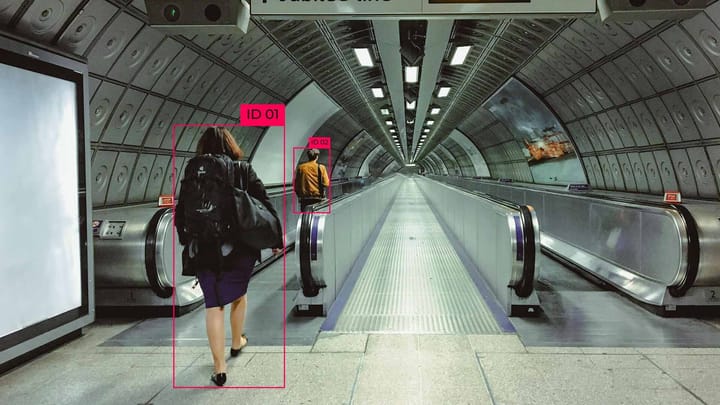

The YOLO framework has transformed computer vision by enabling rapid and accurate object detection. Its speed and precision are ideal for various real-time applications, including:

- Vehicle detection in autonomous driving

- Animal identification in wildlife conservation

- Safety monitoring in industrial settings

- Retail inventory management

Key features of the YOLO framework

YOLO's success is attributed to its unique approach and continuous enhancements. Key features include:

- Grid-based prediction system

- Single network pass for detection and classification

- Real-time processing capabilities

- Adaptability to various computational requirements

The evolution of YOLO from version 1 to 10 has consistently improved performance and expanded capabilities in object detection. For instance, YOLOv10 showcases remarkable efficiency with up to 57% fewer parameters while improving Average Precision by 1.4% compared to previous models.

As YOLO continues to evolve, it remains at the forefront of computer vision technology. It drives innovation in deep learning and image recognition across diverse industries and applications.

YOLOv1: The Groundbreaking Beginning

In 2015, Joseph Redmon and his team introduced YOLOv1, a revolutionary approach to object detection. This groundbreaking model transformed the landscape of computer vision by treating detection as a regression problem. YOLOv1's unique architecture combined bounding boxes prediction and class probability estimation in a single network, paving the way for real-time object detection.

YOLOv1's innovative design divided input images into an S × S grid. Each grid cell predicted B bounding boxes along with confidence scores. This approach significantly reduced background errors compared to region proposal-based methods. The model's architecture consisted of 24 convolutional layers followed by two fully connected layers, enabling efficient processing.

Operating at an impressive 45 frames per second, YOLOv1 outpaced real-time processing requirements. A faster variant, aptly named Fast YOLO, could handle up to 155 frames per second, albeit with a slight trade-off in accuracy. This speed made YOLOv1 particularly suitable for integration with popular computer vision libraries like OpenCV and TensorFlow.

"YOLOv1 showcased remarkable generalizability when tested on artwork, surpassing other detection methods like DPM and R-CNN."

The introduction of YOLOv1 marked a significant milestone in object detection, setting the stage for future iterations and advancements in the field. Its ability to process images in real-time while maintaining accuracy made it a game-changer for various applications, from autonomous vehicles to quality control in manufacturing.

YOLOv2 and YOLOv3: Refining the Architecture

The YOLO framework experienced notable enhancements with its second and third iterations. These versions refined the architecture, boosting real-time processing and object detection accuracy.

YOLOv2: Introducing Anchor Boxes and Darknet-19

YOLOv2 introduced anchor boxes, a significant upgrade that improved the detection of objects with diverse sizes and shapes. The Darknet-19 architecture, a convolutional neural network with 19 layers, enhanced the model's feature extraction capabilities.

YOLOv3: Multi-scale Predictions and Darknet-53

YOLOv3 elevated object detection to new levels. It employed multi-scale predictions, enabling the model to detect objects at various sizes more effectively. The Darknet-53 architecture, with its 53 convolutional layers, further amplified the model's feature learning capacity.

Performance Improvements over YOLOv1

Both YOLOv2 and YOLOv3 surpassed their predecessor in performance:

- Improved accuracy in detecting smaller objects

- Enhanced real-time processing speeds

- Better handling of multiple objects in a single image

These advancements made YOLO more versatile and applicable across various industries, from autonomous vehicles to wildlife monitoring.

The evolution from YOLOv1 to YOLOv3 marked a significant leap in object detection technology. Each iteration introduced new features and improvements, cementing YOLO's leading position in computer vision.

The Transition: From Redmon to Community-Driven Development

In 2020, a pivotal shift marked the YOLO project. Joseph Redmon, the original architect, departed from computer vision research. This move paved the way for community-driven development, ushering in a new chapter for YOLO.

This transition catalyzed rapid progress in deep learning and image recognition. Multiple teams delved into various facets of the YOLO framework. Some refined hyperparameters, while others ventured into neural architecture search.

The community-driven ethos led to a proliferation of accessible articles and a plethora of improvements to YOLO. For instance, YOLOv5 emerged on June 10, mere weeks post-YOLOv4. This version introduced a paradigm shift, migrating from Darknet to PyTorch for enhanced model development.

- Integration of CSP (Cross-Stage Partial) modules

- FP16 precision for faster inference

- Enhanced data augmentation techniques

- Novel bounding box anchor generation

However, this community-driven approach also ignited debates. Queries surfaced regarding the authenticity of new versions and the veracity of their performance claims. This controversy underscored the complexities of open-source development within cutting-edge AI technologies.

| Version | Release Date | Key Features |

|---|---|---|

| YOLOv4 | April 23, 2020 | 50 FPS on Tesla P100 |

| YOLOv5 | June 10, 2020 | 140 FPS on Tesla P100, 27MB model size |

The era of transition underscored the potential of community engagement in AI innovation. It also emphasized the importance of transparency and peer review within the dynamic realm of deep learning research.

YOLOv4 and YOLOv5: Pushing the Boundaries

The YOLO framework is evolving, with YOLOv4 and YOLOv5 leading the charge in object detection and computer vision. These versions have made substantial strides in performance, accessibility, and real-world applications.

YOLOv4: Optimizing hyperparameters and loss functions

YOLOv4 has honed in on optimizing network hyperparameters and introduced an IOU-based loss function. This version has enhanced the YOLO framework's image processing capabilities by dividing images into grids and predicting for each cell. This results in better accuracy in detecting objects, particularly smaller ones.

YOLOv5: Improved anchor finding and accessibility

YOLOv5 has made significant strides with its improved anchor finding algorithms and a focus on accessibility. It offers various variants to suit different computational needs, from nano for limited resources to extra-large for high accuracy. YOLOv5 has achieved notable results, boasting a 37.4 mAP on the COCO dataset and a 6.4ms inference time on a V100 GPU.

Comparing YOLOv4 and YOLOv5 performance

Both versions have significantly boosted YOLO's performance, but YOLOv5 is particularly notable for its faster training and easier deployment. It leverages advanced data augmentation techniques like AutoAugment and Mosaic Augmentation, enhancing generalization and small object detection. YOLOv5 also offers export options to ONNX, CoreML, and TensorRT for seamless deployment across various platforms.

| Feature | YOLOv4 | YOLOv5 |

|---|---|---|

| Focus | Hyperparameter optimization | Accessibility and versatility |

| Key Improvement | IOU-based loss function | Improved anchor finding |

| Variants | Limited | Multiple (n, s, m, l, x) |

| Deployment | Standard | Enhanced export options |

YOLOv4 and YOLOv5 mark major advancements in the YOLO framework, offering enhanced object detection capabilities and computer vision performance. These advancements are setting the stage for more efficient and accurate applications in areas such as self-driving cars, video surveillance, and drone object detection.

YOLOR and YOLOX: Expanding YOLO's Capabilities

In 2021, YOLOR and YOLOX emerged, extending YOLO's capabilities in object detection. These models introduced new approaches to boost accuracy and versatility in computer vision tasks.

YOLOR, developed by the YOLOv4 team, focused on multi-task learning. It combined classification, detection, and pose estimation into one network. This integration led to more efficient processing of complex visual data, enhancing YOLO-based systems' performance.

YOLOX, developed by Megvii Technology, took a unique path. It reintroduced an anchor-free process, making the detection of bounding boxes simpler. This change made YOLOX more adaptable to various object sizes and shapes.

Both models marked significant advancements in the YOLO framework. They enabled more efficient real-time object detection, essential for applications like autonomous driving and surveillance. The innovations in YOLOR and YOLOX inspired further research, leading to versions like YOLOv7 and beyond.

These developments highlighted the versatility of YOLO architecture. By broadening its capabilities, YOLOR and YOLOX made YOLO more versatile for diverse computer vision scenarios. They also paved the way for future enhancements, including integration with deep learning frameworks like TensorFlow, further boosting YOLO's accessibility and performance.

YOLOv1 introduced a groundbreaking grid-based method, dividing images into cells for simultaneous bounding box and class prediction. This was followed by subsequent versions that brought about substantial enhancements:

- YOLOv2: Anchor boxes for enhanced object localization

- YOLOv3: Multi-scale predictions and an improved backbone

- YOLOv4: Optimized hyperparameters and loss functions

- YOLOv5: Improved anchor finding and accessibility

Contributions from Research Teams

The YOLO journey has been a collaborative effort. Following Joseph Redmon's initial work, various teams have made significant contributions:

- Ultralytics: Developed YOLOv5 and YOLOv8

- Baidu: Introduced PP-YOLO

- Deci AI: Created YOLO-NAS

Impact on Real-World Applications

YOLO's evolution has profoundly impacted numerous industries. In agriculture, it's transforming crop monitoring, disease detection, and yield estimation. The manufacturing sector benefits from automated quality inspection, overcoming human limitations in bias and fatigue.

| Application | Impact |

|---|---|

| Autonomous Vehicles | Real-time obstacle detection |

| Agriculture | Crop health monitoring |

| Manufacturing | Quality control automation |

As YOLO continues to evolve, its applications in object detection are expected to expand, driving innovation across various sectors.

Yolov6 to Yolov10

Each version of YOLO comes with improvements and advancements, taking the algorithm to new heights. In this article, we will delve into the journey from YOLOv6 to YOLOv10, highlighting the key features and changes along the way.

However, it had some limitations, such as struggles with detecting small objects accurately.

To overcome these challenges, subsequent versions like YOLOv6 incorporated enhancements and refinements. YOLOv6 made significant progress by utilizing advancements in frameworks like OpenCV and TensorFlow. It introduced improvements in detecting smaller objects, achieving better precision without sacrificing speed.

Moving forward, YOLOv7 introduced more advanced techniques such as anchor boxes and feature pyramid networks, which further enhanced the model's ability to detect objects at various scales.

The latest version in this evolving series is YOLOv10. With each iteration, YOLO evolves to deliver higher accuracy, faster inference times, and improved object detection capabilities. YOLOv10 continues to build on the strengths of previous versions and pushes the limits of real-time object detection even further.

We have a separate overview of YOLOv10 and a brief run through the latest versions that you can find here.

YOLO's Applications

YOLO's real-time processing has transformed computer vision across industries. In agriculture, YOLO variants have revolutionized crop monitoring and livestock management. The CucumberDet algorithm, a YOLO variant, has boosted detection accuracy by 6.8%. It now achieves a mean Average Precision of 92.5% with 38.39 million parameters. This model processes at 23.6 frames per second, making it perfect for real-time agricultural tasks.

YOLO's deep learning framework extends beyond farming to factory security, traffic monitoring, and workplace safety. In photovoltaic (PV) manufacturing, YOLO variants are key for quality control, supporting sustainable energy solutions. They outperform two-stage models like R-CNNs in inspecting manufacturing quality and detecting defects.

YOLO's applications also include autonomous vehicles, sports analysis, medical fields, and wildlife conservation. Its evolution from YOLOv1 to YOLOv8 over nine years has enhanced performance and broadened its use. By using YOLO and its variants, industries can improve efficiency and quality while meeting the growing need for advanced object detection.

FAQ

What is YOLO, and how did it revolutionize object detection?

YOLO, or You Only Look Once, is a groundbreaking computer vision model introduced in 2016. It transformed object detection by integrating bounding box drawing and class label identification within a single network. This approach was faster and more efficient than earlier methods, marking a significant leap in the field.

What are the key features of the YOLO framework?

YOLO's innovation lies in its single-stage detector architecture. It simultaneously handles object identification and classification during a single network pass. This design enables YOLO models to be faster and smaller, facilitating rapid training and deployment on resource-constrained devices.

How did YOLOv1 set the foundation for future YOLO iterations?

YOLOv1, launched in 2015, laid the groundwork for the YOLO framework. It introduced a novel architecture that viewed detection as a regression task. This approach combined bounding box prediction and class probability estimation into a unified network, revolutionizing real-time object detection.

What were the key improvements in YOLOv2 and YOLOv3?

YOLOv2 enhanced the model with anchor boxes, BatchNorm, and the Darknet-19 architecture. YOLOv3 built upon these advancements with multi-scale predictions and the Darknet-53 architecture. These improvements significantly boosted YOLO's performance, especially in detecting smaller objects.

How did the transition from Redmon's leadership to community-driven development impact YOLO's evolution?

After Joseph Redmon ceased his computer vision research in 2020, the YOLO project transitioned to community leadership. This shift led to more accessible development resources and a variety of approaches to enhance the framework. The community-driven phase has since accelerated advancements and introduced diverse YOLO architectures.

What were the key contributions of YOLOv4 and YOLOv5?

YOLOv4 focused on refining network hyperparameters and introducing an IOU-based loss function. YOLOv5, developed by Ultralytics, brought further improvements with an enhanced anchor finding algorithm and a focus on accessibility. These versions offer faster training and simpler deployment options.

How did YOLOR and YOLOX expand YOLO's capabilities?

YOLOR explored multi-task learning for classification, detection, and pose estimation. YOLOX reintroduced an anchor-free approach. These innovations have broadened YOLO's scope, enabling it to tackle new challenges and improve accuracy across various tasks.

What are some key milestones and contributions from various research teams in YOLO's evolution?

Major milestones include the introduction of anchor boxes in YOLOv2 and multi-scale predictions in YOLOv3. The shift to community-driven development post-YOLOv3 has been pivotal. Teams like Ultralytics, Baidu, and Deci AI have made significant contributions, including YOLOv5, YOLOv8, PP-YOLO, and YOLO-NAS.

How has YOLO impacted real-world applications beyond object detection?

YOLO variants have revolutionized fields like agriculture, enhancing crop monitoring and livestock management. They've also made significant impacts in factory security, traffic monitoring, wildfire detection, and workplace safety, leveraging their real-time object detection capabilities.

Comments ()