Red-Teaming Abstract: Competitive Testing Data Selection

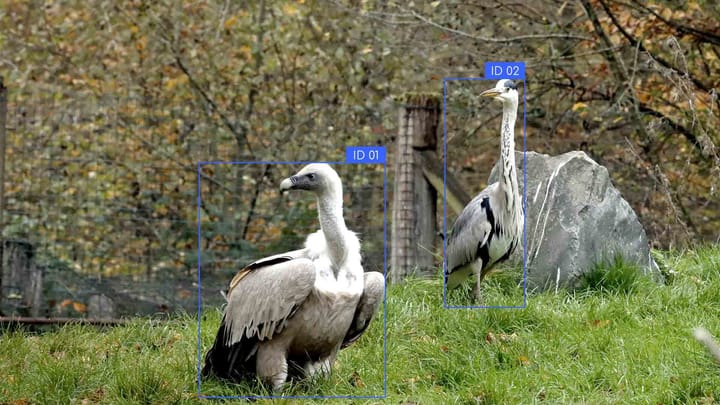

A competitive testing abstract is a systematic stress testing of systems against real-world threats.

Modern language models face risks of misinformation leaks and malicious actions by fraudsters. Therefore, a Red-Teaming abstract is needed, where teams simulate attackers to reveal vulnerabilities in an AI model.

Leading companies like Microsoft and IBM