Psychology, physics, and geometry help engineers make robots more intelligent

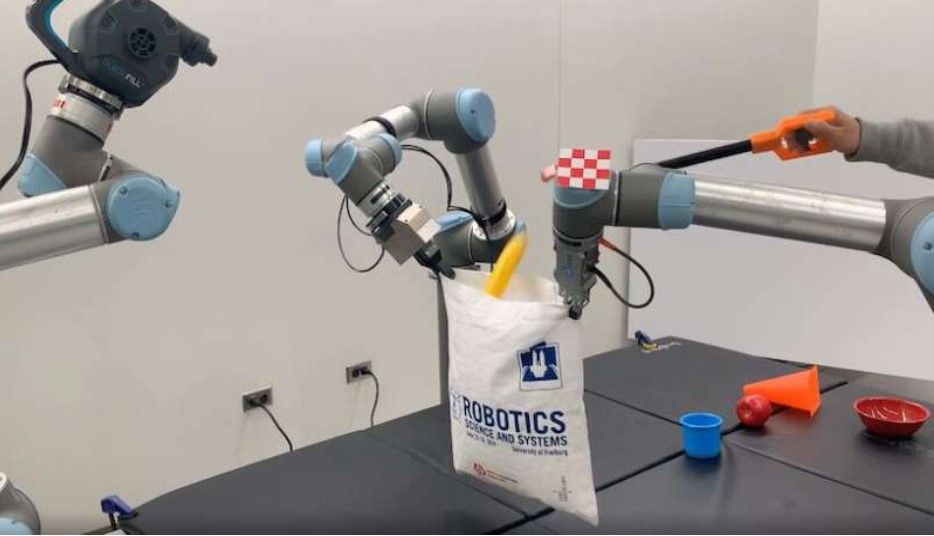

DextAIRity, a self-supervised learning framework developed by Columbia engineers, learns to perform a target task effectively through a sequence of grasping or air-based blowing actions. The system uses a closed-loop formulation that continuously adjusts its blowing direction based on visual feedback.

Robots are all around us, from drones that film videos in the sky to robots that serve food in restaurants and defuse bombs during emergencies. Existing robots are steadily getting better at simple tasks, but handling more complex requests will require further advances in both mobility and intelligence.

Researchers at Columbia Engineering and Toyota Research Institute are drawing on psychology, physics, and geometry to develop algorithms that help robots adapt to their surroundings and learn to function independently. This work is essential if robots are to address new challenges, such as those associated with an aging society, and to provide better support, particularly to the elderly and people with disabilities.

Teaching robots about occlusion and object permanence

Object permanence, a well-known concept in psychology which holds that an object's existence is separate from its visibility at any given moment, has long been a challenge in computer vision. Robots need it to understand our dynamic, ever-changing world. Yet most computer vision applications ignore occlusions and tend to lose track of objects that become temporarily hidden from view.

Carl Vondrick, an associate professor of computer science and recipient of the Toyota Research Institute Young Faculty Award, said that some of the hardest problems facing artificial intelligence are the easiest for humans. Consider toddlers playing peek-a-boo, who learn that their parents do not disappear when they cover their faces. Computers, by contrast, lose track of where an object went once it is blocked or hidden from view.

To overcome this problem, Vondrick taught neural networks the basic physical concepts that come naturally to both children and adults. His team built a machine that watches many videos to learn physical concepts, much as a child learns physics by observing events in their surroundings. In this approach, the computer is trained to predict the future appearance of a scene. When trained to solve this task across many examples, the machine automatically develops an internal model of how objects move in typical environments. When a soda can disappears from sight inside a refrigerator, for instance, the machine learns that the can still exists and that it will reappear once the door is opened again.

Basile Van Hoorick, a Ph.D. student who worked with Vondrick to develop a framework that can recognize occlusions as they occur, said that although he had worked with images and videos before, getting neural networks to work with 3-D information was surprisingly challenging. Unlike humans, computers do not naturally understand the three-dimensionality of our world. The project not only converted camera data seamlessly into 3-D, but also reconstructed the entire configuration of the scene beyond what is visible.

As a result of this work, home robots might be able to perceive a much wider range of objects. In an indoor environment, something is always hidden from view, so a robot must be able to interpret its surroundings intelligently. A soda can placed inside a refrigerator is one example. It is easy to see how vision applications would improve if robots could use memory and object-permanence reasoning to keep track of both objects and people as they move around the house.

Challenging the rigid-body assumption

To function correctly, robots are typically programmed with a set of assumptions. One of these is the rigid-body assumption: objects are taken to be solid and to keep their shape. This simplifies a great deal of work, because roboticists need to consider only the robot's motion, not the physics of the object with which the robot is interacting.

Columbia's Artificial Intelligence and Robotics (CAIR) Lab, directed by Assistant Professor of Computer Science Shuran Song, has been taking a new approach to robotic movement. Song is primarily interested in deformable, non-rigid objects, which can be folded, bent, or otherwise changed in shape.

Song, who is also a Toyota Research Institute Young Faculty awardee, describes the purpose of her research as investigating how humans intuitively perform activities. Rather than trying to account for every possible parameter, her team developed an algorithm that allows the robot to learn from experience, which makes it more generalizable and reduces the training data it requires. Getting a robot to hit a target with a rope required the group to rethink how such an action is performed. People do not reason about the trajectory of the rope; they tend to focus on hitting the object first, then adjust their movements accordingly. Song noted that this new perspective was essential to solving this difficult problem in robotics.

The algorithm they developed, Iterative Residual Policy (IRP), earned her team the Best Paper Award at the Robotics: Science and Systems conference (RSS 2022). IRP is trained on inaccurate simulation data to handle repeatable tasks with complex dynamics. In robot experiments, the algorithm achieved sub-inch accuracy with unfamiliar ropes, demonstrating its ability to generalize.

Previously, a robot had to perform the task 100 to 1,000 times to achieve this level of precision, according to Cheng Chi, a third-year Ph.D. student who worked with Song on IRP. With IRP, the system can complete the task within ten tries, which is about the same performance as a human.
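The "try, observe the miss, adjust" idea described above can be illustrated with a toy sketch. This is not the IRP algorithm itself: the one-dimensional task, the function `true_landing`, the constant `MODEL_SLOPE`, and all numeric values are hypothetical, chosen only to show how an inaccurate model can still guide corrections when each real attempt is observed.

```python
# Toy illustration only: a 1-D "hit the target" task with an inaccurate model.
# All names and numbers here are assumptions made for this sketch.

def true_landing(action: float) -> float:
    """Stand-in for the real world: where the rope tip lands for an action.
    The robot does not know this function; it can only observe its output."""
    return 1.3 * action + 0.2

# The robot's inaccurate model believes landing changes 1:1 with the action.
MODEL_SLOPE = 1.0

def iterate_to_target(target: float, tolerance: float = 0.01, max_trials: int = 10):
    """Repeat: act, observe the miss, correct the action using the model."""
    action = 0.0
    for trial in range(1, max_trials + 1):
        landed = true_landing(action)   # perform one real attempt
        error = target - landed         # observed miss distance
        if abs(error) < tolerance or trial == max_trials:
            return action, error, trial
        # The model is wrong about the real slope, but it points in the
        # right direction, so repeated corrections still shrink the error.
        action += error / MODEL_SLOPE
    raise ValueError("max_trials must be at least 1")

action, error, trials = iterate_to_target(target=2.0)
print(f"action={action:.3f}, miss={error:.4f}, trials={trials}")
```

In this sketch the loop converges within the ten-trial budget even though the model's slope is off by 30 percent, because each correction is based on the observed miss rather than on the model's absolute prediction.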

Despite these advances, the researchers' robot still had limitations when it came to flinging. Effective as the flinging motion is, it is limited by the speed of the robot arm, which means that large items cannot be handled. Fast flinging motions can also be dangerous around people.

Song's team therefore developed a new method of manipulating deformable objects that uses actively blown air. They attached an air pump to their robot, enabling it to quickly unfold a large piece of cloth or open a plastic bag. Through a series of grasping and blowing actions, DextAIRity, their self-supervised learning framework, learns to perform a target task efficiently. A closed-loop algorithm uses visual feedback to continuously adjust the direction in which the system blows.

Zhenjia Xu, a fourth-year Ph.D. student working with Song in the CAIR Lab, described one of the interesting strategies the system developed for the task of opening a plastic bag. The system learned the skill by itself, without annotations or explicit instruction.

What can be done to make home robots more useful?

Currently, robots can maneuver through a structured environment with clearly defined areas and accomplish one task at a time. A useful home robot should be able to perform a variety of tasks, work in an unstructured environment, such as a living room with toys scattered around, and handle a variety of scenarios. These robots must also know how to identify a task and its subtasks, and the order in which they must be completed. They will also need to know what to do if they fail at a job and how to adapt their next steps to achieve their goals.

Dr. Eric Krotkov, advisor to the University Research Program, said that Carl Vondrick and Shuran Song have made significant contributions to Toyota Research Institute's mission through their research. TRI's research in robotics and beyond focuses on developing the capabilities and tools needed to address socioeconomic challenges such as an aging society, labor shortages, and sustainable manufacturing. Giving robots the capability to understand occluded objects and handle deformable objects will allow them to improve the quality of life for all.

Vondrick and Song intend to collaborate, combining their respective expertise in computer vision and robotics to create robots that assist people in the home. Teaching machines to understand household objects such as clothes, food, and boxes could enable robots to assist people with disabilities and enhance the quality of everyday life. To make these applications possible, the team intends to increase the number of objects and physical concepts that robots are capable of learning.

Source: Columbia University School of Engineering and Applied Science
