Putting it to the Test: Segment Anything vs. Traditional Segmentation Tools

The SA-1B dataset is huge: about 11 million images and roughly 1.1 billion masks. It was built to train the Segment Anything Model (SAM), and this scale is a large part of why SAM has set a new bar in image segmentation.

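Image segmentation has evolved a lot in computer vision. It divides an image into regions and assigns each pixel a label, so that pixels belonging to the same object or surface are grouped together. Modern methods have made segmentation a core step whenever an application needs precise, pixel-level information about what an image contains.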

SAM is a recent model that combines an image encoder, a prompt encoder, and a lightweight mask decoder to produce detailed masks. It often outperforms older methods, and comparing Segment Anything with traditional techniques shows a clear advantage for modern, promptable models.

Key Takeaways

- The Segment Anything Model (SAM) was trained on the SA-1B dataset, a vast collection of about 11 million images and roughly 1.1 billion masks.

- SAM is designed to generalize to new images and objects without extra training (zero-shot transfer), which sets it apart from most earlier models.

- SAM's code and model weights are released under the Apache 2.0 license, and the SA-1B dataset is available for research under its own license, opening both up for research and further development.

- Even for ambiguous prompts, SAM quickly produces several valid candidate masks, something older methods could not do well.

- SAM's zero-shot accuracy is often competitive with, and sometimes better than, prior fully supervised results, making it a strong choice for detailed image analysis.

Introduction to Image Segmentation

In computer vision, image segmentation breaks an image into parts by grouping pixels that are alike. This helps locate objects and understand what an image contains. Comparing the resulting segments, for example against a reference outline, lets us make imaging pipelines more accurate and efficient.

Definition and Importance

Image segmentation is a key step in image analysis: it divides an image into meaningful parts. It underpins many tasks, from reading medical scans to enhancing virtual reality. Understanding how well segments match the objects they represent helps us build better models.

Historical Background

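Image segmentation has changed a great deal over the past 50 years. Early methods such as region growing started the journey. Today, deep networks such as CNNs produce highly detailed segments, and generative models such as GANs support the field, for example by creating synthetic training images. The result is more accurate image analysis than ever before.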

The Segment Anything Model (SAM) is an important step in this progress. It can segment objects it never saw during training, which lets us tackle segmentation problems that were out of reach only a few years ago.

What is Segment Anything Model (SAM)?

The Segment Anything Model (SAM) is an advanced tool for cutting out objects in images. It was trained on a huge set of masks produced mostly automatically by a model-in-the-loop "data engine", so it can find objects and patterns in new pictures without task-specific training or extra labels.

Overview of SAM

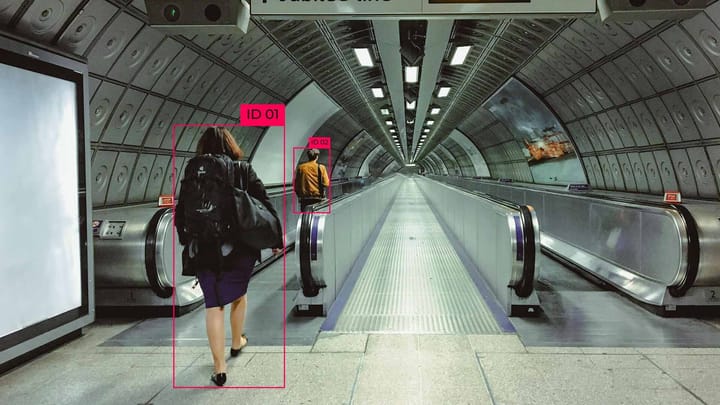

SAM can isolate objects in photos from different kinds of prompts, such as clicked points or drawn boxes, which makes it useful for many tasks and users. It can also handle ambiguous scenes and, in its automatic mode, generate masks for everything in a picture.
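In automatic ("segment everything") mode, SAM prompts itself with a regular grid of points and predicts masks for each of them. The sketch below builds such a grid. It follows our understanding of how the reference `segment_anything` code places points (one at the centre of each grid cell, 32 per side by default in `SamAutomaticMaskGenerator`), but the function names here are our own and hypothetical.

```python
def build_point_grid(points_per_side):
    """Evenly spaced (x, y) points in normalized [0, 1] coordinates.

    Each point sits at the centre of a cell in a points_per_side x points_per_side
    grid; points are ordered row by row (y outer, x inner).
    """
    offset = 1.0 / (2 * points_per_side)
    step = (1.0 - 2 * offset) / (points_per_side - 1) if points_per_side > 1 else 0.0
    coords = [offset + i * step for i in range(points_per_side)]
    return [(x, y) for y in coords for x in coords]


def scale_to_image(points, width, height):
    """Convert normalized points to pixel coordinates for a width x height image."""
    return [(x * width, y * height) for x, y in points]
```

With 32 points per side this yields 1,024 point prompts per image, which are then scaled to pixel coordinates before being fed to the model.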

How SAM Works

Given a prompt, SAM quickly produces masks for objects in a photo. It learned from a huge dataset of millions of photos and over a billion masks, which is why it works well on new images without task-specific instructions or retraining.
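One practical detail of how a prompt becomes a mask: SAM's image encoder expects a fixed input size, and the reference code (as we understand its `ResizeLongestSide` transform) resizes each image so its longest side is 1024 pixels, pads the rest, and rescales prompt coordinates the same way. A minimal sketch of that arithmetic, with hypothetical helper names:

```python
def preprocess_shape(old_h, old_w, long_side=1024):
    """Scale (h, w) so the longer side equals long_side, rounding to the nearest pixel."""
    scale = long_side / max(old_h, old_w)
    return int(old_h * scale + 0.5), int(old_w * scale + 0.5)


def transform_point(x, y, old_h, old_w, long_side=1024):
    """Map a prompt point from original image pixels into the resized image."""
    new_h, new_w = preprocess_shape(old_h, old_w, long_side)
    return x * new_w / old_w, y * new_h / old_h
```

For a 640x480 photo, the resized image is 1024x768, so a click at (320, 240) lands at (512, 384) in the encoder's input.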

Innovations and Advantages

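SAM differs from older tools because it is built around promptable, foundation-model ideas. Meta AI has released the model and code openly to encourage progress. Once an image has been encoded, the lightweight prompt encoder and mask decoder are fast enough to run in a web browser (about 50 ms per prompt, according to the SAM paper), but the heavy image-encoding step takes more time and compute.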

SAM is not the best choice for everything, though. Specialized interactive segmentation models, trained specifically for click-based input, can outperform it on some datasets and point settings. This shows how the field keeps finding new and better approaches.

Traditional Segmentation Tools

Since the early 1970s, traditional segmentation tools have been crucial in image processing. Although they may seem basic now, modern techniques grew from their foundations. They include thresholding, region-based segmentation, edge detection, and clustering-based segmentation, and each groups pixels and defines segments in its own way.

Thresholding

Thresholding is simple but often highly effective. It assigns each pixel to the foreground or the background depending on whether its intensity is above or below a chosen threshold. It works well for separating the main content of an image from its background, and its output is a binary image.
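The threshold itself has to come from somewhere. One classic automatic choice, not named in the text above, is Otsu's method: try every 8-bit threshold and keep the one that maximizes the variance between the two resulting classes. A minimal pure-Python sketch for a grayscale image stored as a list of rows:

```python
def otsu_threshold(image):
    """Return the 8-bit threshold t that maximizes between-class variance (Otsu).

    Pixels <= t form one class and pixels > t the other.
    """
    hist = [0] * 256
    for row in image:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * count for i, count in enumerate(hist))

    weight_bg, sum_bg = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        weight_bg += hist[t]
        if weight_bg == 0:
            continue  # no background pixels yet
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break  # every pixel is already background
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        # Proportional to the between-class variance (constant factor dropped).
        between_var = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def threshold(image, t):
    """Binary mask: 1 where the pixel is brighter than t, else 0."""
    return [[1 if p > t else 0 for p in row] for row in image]
```

For production use, libraries such as scikit-image (`skimage.filters.threshold_otsu`) and OpenCV provide optimized versions.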

Region-Based Segmentation

Region-based segmentation groups neighboring pixels that share similar characteristics, judged by statistical criteria or measures of pixel similarity. Common techniques include region growing and region splitting/merging. They produce clear segment maps by considering how pixels are connected and how homogeneous each region is.
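Region growing can be sketched in a few lines: start from a seed pixel and flood outward to 4-connected neighbours whose intensity is close to the seed's. The fixed tolerance relative to the seed is a simplifying assumption; real implementations often compare against the running region mean instead.

```python
from collections import deque


def region_grow(image, seed, tol):
    """Grow a region from seed=(row, col), adding 4-connected neighbours whose
    intensity is within tol of the seed pixel's intensity. Returns a 0/1 mask."""
    h, w = len(image), len(image[0])
    r0, c0 = seed
    ref = image[r0][c0]
    mask = [[0] * w for _ in range(h)]
    mask[r0][c0] = 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < h and 0 <= nc < w and not mask[nr][nc]
                    and abs(image[nr][nc] - ref) <= tol):
                mask[nr][nc] = 1
                queue.append((nr, nc))
    return mask
```

Seeding in the dark top-left corner of a small image grows a region over the neighbouring dark pixels and stops at the bright ones.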

Edge Detection

Edge detection finds borders within an image by locating sharp changes in pixel intensity. The Canny edge detector is a well-known algorithm for this. Edges mark where objects start and stop, which makes them key for defining object outlines for detailed analysis.
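The first stage of the Canny detector computes intensity gradients, commonly with Sobel kernels. The sketch below shows only that stage plus a plain threshold; Canny adds Gaussian smoothing, non-maximum suppression, and hysteresis thresholding on top, all of which are omitted here.

```python
import math

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_magnitude(image):
    """Gradient magnitude at each interior pixel; border pixels are left at 0."""
    h, w = len(image), len(image[0])
    mag = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    p = image[r + i - 1][c + j - 1]
                    gx += SOBEL_X[i][j] * p
                    gy += SOBEL_Y[i][j] * p
            mag[r][c] = math.hypot(gx, gy)
    return mag


def edge_map(image, thresh):
    """Mark pixels whose gradient magnitude exceeds thresh as edges (1)."""
    return [[1 if m > thresh else 0 for m in row] for row in sobel_magnitude(image)]
```

On an image with a vertical step from 0 to 100, the two columns on either side of the step get a magnitude of 400 and everything else stays at 0.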

Clustering-Based Segmentation

Clustering-based segmentation, using algorithms such as K-means, groups pixels by attributes such as color or texture without needing a hand-picked threshold. Clustering copes better than simple thresholding with complicated patterns and varied data, and it paved the way for more advanced segmentation methods.
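As an illustration, here is K-means applied to grayscale intensities. The deterministic initialization (evenly spaced values from the sorted data) is our simplification; library implementations such as scikit-learn's `KMeans` use k-means++ seeding by default. For color images, the same loop works on RGB tuples with Euclidean distance.

```python
def kmeans_1d(values, k, max_iter=100):
    """Cluster scalar pixel intensities into k groups; returns (labels, centers).

    Centers start at evenly spaced positions in the sorted data.
    """
    if k < 2:
        raise ValueError("k must be at least 2")
    ordered = sorted(values)
    n = len(ordered)
    centers = [float(ordered[i * (n - 1) // (k - 1)]) for i in range(k)]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each value goes to its nearest center.
        new_labels = [min(range(k), key=lambda j: abs(v - centers[j])) for v in values]
        # Update step: each center moves to the mean of its members.
        for j in range(k):
            members = [v for v, lab in zip(values, new_labels) if lab == j]
            if members:
                centers[j] = sum(members) / len(members)
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
    return labels, centers
```

Clustering the intensities 10, 12, 11, 200, 205, 198, 100, 102 into three groups separates dark, mid-gray, and bright pixels, with centers at 11, 101, and 201.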

| Technique | Key Feature | Applications |
|---|---|---|
| Thresholding | Pixel intensity value separation | Binary image segmentation |
| Region-Based Segmentation | Grouping similar properties | Medical imaging, object detection |
| Edge Detection | Boundary identification | Contour detection, shape analysis |
| Clustering-Based Segmentation | Adaptive pixel grouping | Complex pattern recognition |

Comparative Analysis: Segment Anything vs. Traditional Segmentation Tools

When we compare the Segment Anything Model (SAM) with traditional tools, the differences are large. SAM uses modern machine learning to produce accurate masks with little human input, which makes it stand out in precision, efficiency, and scalability.

Precision and Accuracy

Compared with older approaches, SAM is very precise and works across many kinds of images. Thanks to training at scale, it quickly creates detailed segmentation masks, something traditional tools struggle to do without a lot of manual tuning. The comparison shows SAM staying precise even on complex images.
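Precision claims like these are usually measured with intersection over union (IoU) between a predicted mask and a reference mask; the SAM paper reports mean IoU (mIoU) for its single-point evaluations. A minimal sketch, treating two empty masks as a perfect match (a convention we chose):

```python
def mask_iou(pred, target):
    """Intersection over union of two same-sized binary masks (lists of 0/1 rows)."""
    inter = union = 0
    for prow, trow in zip(pred, target):
        for p, t in zip(prow, trow):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    return inter / union if union else 1.0


def mean_iou(pairs):
    """Average IoU over (prediction, reference) pairs, i.e. mIoU."""
    scores = [mask_iou(p, t) for p, t in pairs]
    return sum(scores) / len(scores)
```

Two masks that share 2 pixels out of 4 covered in total score 0.5; averaging that with a perfect match gives an mIoU of 0.75.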

Efficiency and Speed

SAM is also fast and efficient. Traditional tools often need a lot of setup and manual tuning, whereas SAM produces masks almost immediately once the image embedding has been computed. This speed makes it practical to try many prompts and compare segments interactively.
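The speed comes from SAM's split design: a heavy image encoder runs once per image, and a lightweight decoder turns each prompt into masks using the cached embedding. The `segment_anything` package exposes this as `SamPredictor.set_image` followed by repeated `predict` calls. The class below is a hypothetical, framework-free sketch of the same caching pattern; `encode` and `decode` are stand-ins for the real networks.

```python
class CachedSegmenter:
    """Run an expensive image encoder once per image, then answer many prompts
    with a cheap decoder. encode/decode are hypothetical callables supplied by the caller."""

    def __init__(self, encode, decode):
        self._encode = encode
        self._decode = decode
        self._image_key = None
        self._embedding = None

    def set_image(self, key, image):
        # Re-encode only when a different image is supplied.
        if key != self._image_key:
            self._embedding = self._encode(image)
            self._image_key = key

    def predict(self, prompt):
        if self._embedding is None:
            raise RuntimeError("call set_image() before predict()")
        return self._decode(self._embedding, prompt)
```

Because only `decode` runs per click, an interactive tool stays responsive after the first, slower encoding step.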

Scalability

SAM is built to scale to large datasets. It can take on new tasks without retraining each time, whereas traditional tools often need hand-tuned parameters, and supervised models need extra training, for every new job. In this comparison, SAM handles large workloads better while keeping its performance across many situations.

Advantages of Segment Anything Model

The Segment Anything Model (SAM) outperforms older approaches in many cases. It changes how we work with image data, especially when we need both accuracy and adaptability.

Data Efficiency

SAM is very data-efficient for its users. It does not need a large labeled dataset for each new task; instead, it relies on what it learned during large-scale pretraining. This makes it useful in a wide range of fields, such as healthcare and autonomous driving.

Self-Supervised Learning

SAM builds on self-supervised learning: its image encoder is a Vision Transformer pretrained with masked autoencoding (MAE) on unlabeled images, and the full model is then trained on the SA-1B masks. This lets SAM handle new data on its own. It can find and outline objects from prompts such as drawn boxes or selected points, which makes it very versatile.
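To make the prompt types concrete: in the `segment_anything` package, `SamPredictor.predict` accepts `point_coords` (x, y pixel positions), `point_labels` (1 for foreground, 0 for background) and `box` (x0, y0, x1, y1), plus a `multimask_output` flag. The helper below is hypothetical; it only validates and assembles those keyword arguments as plain lists, whereas the real predictor expects NumPy arrays.

```python
def build_prompt(points=(), labels=(), box=None):
    """Assemble keyword arguments in the shape SamPredictor.predict expects.

    points: (x, y) pixel coordinates; labels: 1 = foreground, 0 = background.
    box: (x0, y0, x1, y1) in pixels, or None.
    """
    points, labels = [list(p) for p in points], list(labels)
    if len(points) != len(labels):
        raise ValueError("each point needs exactly one label")
    if any(lab not in (0, 1) for lab in labels):
        raise ValueError("labels must be 1 (foreground) or 0 (background)")
    if box is not None:
        x0, y0, x1, y1 = box
        if x1 <= x0 or y1 <= y0:
            raise ValueError("box must be (x0, y0, x1, y1) with x1 > x0 and y1 > y0")
    return {
        "point_coords": points or None,
        "point_labels": labels or None,
        "box": list(box) if box is not None else None,
        # A single point is ambiguous, so ask for several candidate masks.
        "multimask_output": len(points) == 1 and box is None,
    }
```

Following the repository's advice as we understand it, a lone click requests several candidate masks, while richer prompts (several points, or a box) request a single mask.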

Handling Complex Scenes

SAM is excellent at dealing with complicated images. Where other tools struggle with cluttered or ambiguous pictures, SAM can find and mark many different objects across varied images. This makes it useful for tasks such as analyzing satellite imagery or moderating social media content.
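In cluttered scenes, SAM's automatic mode proposes many candidate masks and keeps only confident, stable ones. The sketch below shows two such filters as we understand `SamAutomaticMaskGenerator` to apply them: a threshold on the model's predicted IoU, and a stability score, the IoU between the masks obtained by thresholding the mask logits slightly above and slightly below the cutoff. The default values (0.88, 0.95, offset 1.0) are what we recall from the reference code and should be treated as assumptions; the real generator also removes duplicates with box non-maximum suppression, which is not shown.

```python
def stability_score(logits, mask_threshold=0.0, offset=1.0):
    """IoU between the masks obtained by thresholding flattened logits at
    mask_threshold + offset and mask_threshold - offset.

    The high-threshold mask is a subset of the low one, so IoU = |high| / |low|.
    """
    high = sum(1 for v in logits if v > mask_threshold + offset)
    low = sum(1 for v in logits if v > mask_threshold - offset)
    return high / low if low else 0.0


def filter_candidates(candidates, pred_iou_thresh=0.88, stability_thresh=0.95):
    """Keep (logits, predicted_iou) candidates that pass both quality checks."""
    return [
        (logits, iou)
        for logits, iou in candidates
        if iou >= pred_iou_thresh and stability_score(logits) >= stability_thresh
    ]
```

A mask whose pixels sit near the decision boundary changes shape when the cutoff moves, so its stability score drops and it is discarded even if the model's own IoU estimate is high.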

SAM also borrows ideas from natural language processing: like large language models, it is a promptable foundation model built on Transformer architectures. Because the expensive image embedding is computed once, SAM can return a mask for each new prompt almost instantly, which makes it useful in many different areas.

| Feature | SAM | Traditional Models |
|---|---|---|
| Data Requirement | Minimal for new tasks, thanks to large-scale pretraining | Extensive labeled datasets or manual tuning needed |
| Adaptability | High, with zero-shot transfer | Limited adaptability |
| Handling Complexity | Handles complex scenes, with multiple masks for ambiguous prompts | Struggles with intricate scenarios |
| Real-Time Interaction | Near-instant masks once the image is encoded | Delayed response due to manual processing |

SAM's flexible, promptable design makes it efficient across many tasks, so we need fewer task-specific models. By simplifying the workflow this way, SAM is becoming a leading choice for modern image segmentation.

Challenges with Traditional Segmentation Tools

Traditional segmentation tools paved the way for new advances, yet they face problems with accuracy, efficiency, and cost. They rely on methods such as region growing, thresholding, and clustering.

Annotation Complexity

Annotation is a major challenge, especially for supervised methods. Training a deep learning model well requires many detailed annotations with precise outlines, and creating them is hard and time-consuming. Even with assistive tools, the work remains complex.
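Pixel-precise annotations are usually stored compactly rather than as raw pixel grids. SA-1B, for example, distributes its masks in COCO's run-length encoding (RLE), in a compressed string form. The sketch below shows the simpler uncompressed variant: run lengths over the mask read column by column, starting with a run of zeros. The helper names are ours; for real data, `pycocotools.mask.encode` and `decode` handle the compressed format.

```python
def rle_encode(mask):
    """Uncompressed COCO-style RLE: run lengths over the mask read column by
    column (column-major), always starting with a run of 0s (possibly empty)."""
    h, w = len(mask), len(mask[0])
    counts, current, run = [], 0, 0
    for c in range(w):
        for r in range(h):
            v = 1 if mask[r][c] else 0
            if v == current:
                run += 1
            else:
                counts.append(run)
                current, run = v, 1
    counts.append(run)
    return {"size": [h, w], "counts": counts}


def rle_decode(rle):
    """Rebuild the binary mask from an uncompressed RLE dict."""
    h, w = rle["size"]
    flat, value = [], 0
    for n in rle["counts"]:
        flat.extend([value] * n)
        value = 1 - value
    return [[flat[c * h + r] for c in range(w)] for r in range(h)]
```

A large, mostly uniform object mask collapses into a handful of run lengths, which is why this format suits datasets with over a billion masks.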

Human Error

Manual segmentation is prone to human error. Different annotators, or the same annotator on different days, may draw boundaries inconsistently, and these errors carry into the final segmented images. Newer models such as SAM can reduce such errors by producing consistent masks automatically.

Cost and Time-Consuming

Traditional workflows can be costly and time-consuming because they require large datasets with detailed annotations. Newer models need less manual work, so they are more efficient and require less human effort.

Segment Anything Comparison: A Detailed Look

The Segment Anything Model (SAM) is changing computer vision through its cutting-edge features. It was developed by Meta AI's FAIR (Fundamental AI Research) lab. SAM stands out because it works on many different kinds of images with minimal setup. It builds on a decade of deep learning progress in vision, including CNN backbones such as ResNet and VGG and generative models such as GANs, but introduces fresh ideas of its own: a Vision Transformer image encoder and a promptable mask decoder.

Applications Across Various Domains

SAM is useful across many fields. In healthcare, it can help clinicians delineate key anatomical structures accurately with less manual work. In autonomous driving, segmentation of this kind can help a vehicle "see" the road, detect obstacles, and move safely.

Implementation in Real-World Scenarios

In practice, SAM's simple interactions, such as clicking points or drawing boxes, are very handy. Meta released SAM under the Apache 2.0 license, making it easy for others to build on, and useful tools have followed. For instance:

- The Roboflow Inference server can serve SAM alongside models such as YOLOv5, which smooths the workflow for developers.

- The supervision package has supported SAM directly since version 0.5.0, which helps with many common tasks.

Future Prospects

Looking ahead, SAM's future looks bright. It is part of a fast-growing field of AI tools, and related models such as FastSAM show a push toward faster image understanding. SAM makes manual image annotation much quicker and cheaper, which makes it important for future projects. Development continues, so we can expect better segmentation methods over time.

Deep Learning-Powered Segmentation Tools

Today, tools like SAM and DinoV2 are changing the way we handle images. These tools use deep learning to make work easier in many fields, and they mark a big step forward for artificial intelligence.

Recent Progress and Developments

The Segment Anything Model (SAM) is a major leap in technology. Developed by Meta's Fundamental AI Research (FAIR) lab, SAM lets users interact with images: you can click points, draw a box, or supply a rough mask to quickly get a segmentation of the object you mean.

Tools like this find and outline objects using deep neural networks. Convolutional neural networks (CNNs) long dominated this work and also power image recognition, while SAM's own image encoder is a Vision Transformer. Generative Adversarial Networks (GANs), introduced in 2014, generate realistic synthetic images, which helps in areas such as medicine by providing extra training data.

Transfer learning from pretrained backbones such as ResNet and EfficientNet speeds up development: because these models have already learned from large image collections, they reach good accuracy with less task-specific data and training.

Applications in Different Industries

These tools are changing several industries. In medicine, tools like SAM make medical images easier to analyze, supporting diagnosis and treatment planning. In the automotive industry, they help vehicles perceive their surroundings, improving safety and navigation.

They are also used in agriculture to monitor crops, in retail to manage inventory, and in security to watch over sites. This breadth shows how important they have become.

Combining advanced segmentation with deep learning is bringing big changes. Tools like SAM are very good at separating the parts of an image, and they streamline work by reducing manual effort, which is why they are popular in many areas.

| Tool | Key Features | Applications |
|---|---|---|
| SAM | Interactive prompts, real-time mask prediction, multiple valid masks | Healthcare, Automotive, Agriculture |
| DinoV2 | Self-supervised, general-purpose visual features for downstream tasks (classification, segmentation, depth estimation) | Retail, Security, Entertainment |

As these tools mature, it's important to understand where each works best.

Foundation Models: SAM vs. DinoV2

Image segmentation has taken big steps forward with foundation models such as the Segment Anything Model (SAM) and DinoV2. Both rely on large-scale pretraining: DinoV2 is trained entirely with self-supervised learning, while SAM pairs a self-supervised (MAE) pretrained image encoder with training on the SA-1B masks.

Key Features

Developed by Meta AI Research in 2023, SAM and DinoV2 are both leading foundation models. SAM handles scenes with many overlapping objects, while DinoV2 provides general-purpose visual features that deliver strong results on specific downstream tasks with little labeled data. SAM suits a wide range of domains, such as medical imaging and autonomous driving; DinoV2 is best suited to particular tasks.

Performance Metrics

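SAM handles a wide range of images with little human help. In the SAM paper's zero-shot instance segmentation experiments, SAM scored somewhat below a fully supervised Mask R-CNN-style detector (ViTDet) on standard mask metrics, yet human raters judged SAM's masks to be of higher quality. So although the numbers don't always show an advantage, SAM delivers strong masks quickly.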

Use Cases

SAM and DinoV2 serve different needs. SAM handles complex images accurately, which makes it key for jobs that need precise masks. DinoV2, designed for strong performance with little labeled data, works best as a feature backbone for specific tasks.

| Model | Year Introduced | Developed By | Key Features | Use Cases |
|---|---|---|---|---|
| SAM | 2023 | Meta AI (FAIR) | Versatility, handling of complex scenes | Medical imaging, autonomous driving |
| DinoV2 | 2023 | Meta AI (FAIR) | Strong performance with little labeled data, reusable features | Image classification, segmentation, depth estimation |

Integration of SAM in Data Labeling Tools

Integrating SAM into labeling tools such as Kili is a big step forward for data annotation. Instead of drawing polygons or bounding boxes by hand, annotators can let SAM detect and outline object shapes precisely.
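The gap between boxes and outlines is easy to quantify: a bounding box always includes background pixels, while a mask covers only the object. The hypothetical helpers below derive a tight box (inclusive pixel coordinates) from a binary mask and report how much of that box the object actually fills.

```python
def mask_to_box(mask):
    """Tight (x0, y0, x1, y1) box around the 1-pixels of a binary mask
    (inclusive pixel coordinates), or None if the mask is empty."""
    ys = [r for r, row in enumerate(mask) for v in row if v]
    xs = [c for row in mask for c, v in enumerate(row) if v]
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)


def box_fill_ratio(mask):
    """Fraction of the tight bounding box that the object actually covers."""
    box = mask_to_box(mask)
    if box is None:
        return 0.0
    x0, y0, x1, y1 = box
    area = sum(sum(1 for v in row if v) for row in mask)
    return area / ((x1 - x0 + 1) * (y1 - y0 + 1))
```

For a plus-shaped object, the mask covers 5 of the box's 9 pixels, so almost half of the box would wrongly be labeled as object.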

SAM-Kili Integration

Label Studio, an open-source data labeling tool, also integrates SAM for smarter labeling. The integration was built by a community member, Shivansh Sharma, to make labeling more accurate and faster. Label Studio can now support tasks such as refining labels, understanding video content, and extracting captions for objects.

Benefits and Efficiency

Combining SAM with Label Studio improves how accurately objects are outlined and classified. A big plus is the ability to place points on an object to guide precise outlining: with SAM's help, selecting exactly the right parts of an object is easy, which speeds up labeling considerably.

In the future, the integration is expected to let annotators remove unwanted parts of an object from a mask, making annotations even sharper. (SAM itself already accepts background points, labeled 0, that tell it which regions to exclude.) This combination of advanced models and easy-to-use tools benefits anyone working with data, improving both the speed and the quality of object labeling.

| Feature | Traditional Tools | SAM Integration |
|---|---|---|
| Annotation Complexity | High | Lower due to automated processes |
| Human Error | Prone | Minimized with SAM's precision |
| Cost and Time | Expensive and Time-Consuming | More Efficient and Cost-Effective |

Seeing how SAM works with tools like Kili shows big gains in how we tag images. Combining cutting-edge models with easy-to-use tools raises the quality and consistency of labeling large image collections.

Traditional image labeling has its flaws, but SAM-powered labeling tools address many of them, making data annotation smarter and more accurate overall.

Conclusion

The Segment Anything Model (SAM) is a game-changer for image segmentation. It comes from Meta's FAIR (Fundamental AI Research) lab, and its promptable design borrows ideas from natural language processing. SAM interprets prompts well and segments objects accurately: you can mark objects by clicking points, drawing boxes, or supplying a rough mask.

One strength of SAM is how it handles ambiguity. For an ambiguous prompt, it can return several valid masks (for example, a shirt or the whole person wearing it) where other tools struggle. Its design also lets it take on new tasks without being retrained from scratch.
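When multimask output is enabled, `SamPredictor.predict` returns three candidate masks together with a predicted quality score for each. A common way to pick one is to take the highest score, as sketched below; the helper names are our own, and the masks can be any objects (strings stand in for them in the example).

```python
def rank_masks(masks, scores):
    """Pair each candidate mask with its predicted quality score, best first."""
    if len(masks) != len(scores):
        raise ValueError("need one score per mask")
    # Sort on the score only, so masks never need to be comparable.
    return sorted(zip(scores, masks), key=lambda pair: pair[0], reverse=True)


def best_mask(masks, scores):
    """Return the candidate with the highest predicted score."""
    return rank_masks(masks, scores)[0][1]
```

Keeping the full ranking instead of only the top mask lets an annotation tool offer the alternatives (part, sub-part, whole object) for the user to choose from.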

SAM was developed alongside a "data engine" that used the model itself to help annotate images, overcoming the shortage of segmentation data. SAM delivers better precision, faster work, and easy scaling. With quick image analysis and the ability to generalize to new images, it stands out among segmentation tools. Its models and the SA-1B dataset are openly available, which means more powerful and efficient image tools are on the way.

FAQ

What is image segmentation and why is it important?

Image segmentation breaks pictures into parts, which helps computers see details. It is key for locating and distinguishing objects in medicine, autonomous driving, and more, because these tasks need to know both what is in an image and where it is.

What are traditional image segmentation tools and how do they work?

Traditional methods split images into regions by thresholding intensities, comparing neighboring pixels (region growing), detecting edges, or clustering similar pixels. These methods launched computer-based image understanding and remain useful for specialized jobs today.

What is the Segment Anything Model (SAM)?

The Segment Anything Model (SAM) is a promptable segmentation model from Meta AI. It was trained on the SA-1B dataset of about 11 million licensed images and roughly 1.1 billion masks, most of them generated automatically. SAM handles images with many different objects and lighting conditions well.

How does SAM differ from traditional segmentation tools?

Compared with traditional tools, SAM is more accurate, faster, and needs only a simple prompt from a person. Traditional tools require a lot of detailed manual work, which takes time and introduces errors. Because SAM needs much less help, it is faster on difficult images.

What are the advantages of using SAM for image segmentation?

SAM brings many benefits: it is data-efficient, requires less manual work, and handles difficult images well. These strengths make it a top choice for many image tasks.

What challenges do traditional segmentation tools face?

Traditional tools struggle with precise labeling and with avoiding mistakes, which often requires many annotators and a large budget for suitable data. SAM has made these problems much less pressing.

What are the applications of SAM across various domains?

SAM makes a big difference in many areas. In medicine, it supports detailed examination of images; in autonomous driving, it helps make sense of crowded scenes. Its accuracy is changing these industries.

How do recent deep learning-powered segmentation tools compare to traditional methods?

Deep learning tools such as SAM perform much better than earlier methods: they are more precise, more efficient, and can handle more kinds of tasks. This is a big win for fields that need exact and fast image analysis, such as healthcare and automotive technology.

How do SAM and DinoV2 compare in terms of features and performance metrics?

SAM and DinoV2 are both strong models but serve different purposes. SAM excels at segmenting complicated scenes, while DinoV2 provides general-purpose visual features that perform well on specific downstream tasks. Each has its place.

What are the benefits of integrating SAM with data labeling platforms like Kili?

Combining SAM with Kili improves labeling where clear labels are essential. SAM's automatic mask proposals, combined with human review in Kili, produce better and more precise results.
