Real-Time Object Detection with YOLOv10

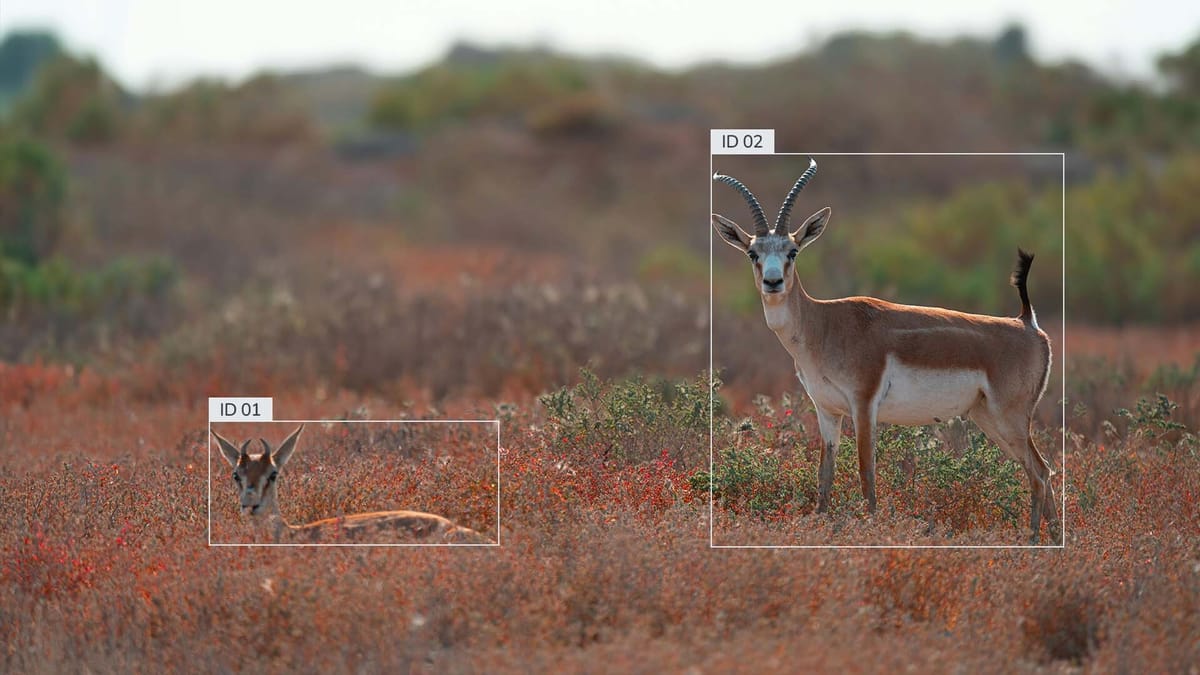

YOLOv10 is a real-time object detection model that improves on earlier YOLO versions in both speed and accuracy. It pairs NMS-free training with an efficiency-focused architecture, reducing inference latency while keeping precision competitive.

YOLOv10 is available on GitHub, where it had 8.8k stars and 780 forks at the time of writing. It is licensed under AGPL-3.0 and open to developers and researchers. The model comes in six sizes, from Nano to Extra Large, to suit different application requirements.

YOLOv10 addresses two limitations of earlier YOLO models: reliance on post-processing and inefficiencies in the model architecture. It processes images quickly, with the smallest variant handling a frame in roughly 1 millisecond. This makes it well suited to real-time video processing on edge devices.

YOLOv10 outshines its predecessors and rivals in several aspects. For example, YOLOv10-S is 1.8 times faster than RT-DETR-R18 while maintaining similar accuracy. YOLOv10-B reduces latency by 46% and cuts down on parameters by 25% compared to YOLOv9-C, yet performs equally well.

Key Takeaways

- YOLOv10 offers six model sizes for different application needs

- Processes images at up to 1000 fps with the smallest model, enabling real-time detection

- Outperforms previous YOLO versions in speed and efficiency

- Supports various export formats for flexible deployment

- Ideal for applications in autonomous vehicles, security, and industrial automation

Introduction to YOLOv10 and Its Significance in Object Detection

YOLOv10 represents a major advancement in image recognition and object tracking. This version of the YOLO algorithm significantly enhances real-time object detection capabilities. It showcases the rapid evolution of this technology.

The evolution of YOLO algorithms

YOLO algorithms have transformed video analytics since their debut. Each iteration has built upon the previous one, culminating in YOLOv10. This version offers substantial efficiency improvements, highlighting the field's swift advancements.

Key features and improvements in YOLOv10

YOLOv10 stands out for its NMS-free training, which removes the need for non-maximum suppression (NMS) as a post-processing step at inference time. Skipping that step reduces end-to-end inference latency.

| Comparison | AP Improvement | Parameter Reduction | Latency Reduction |
|---|---|---|---|
| YOLOv10-S (vs YOLOv8-S) | 1.4% | 36% | 65% |
| YOLOv10-L (vs Gold-YOLO-L) | 1.4% | 68% | 32% |

Impact on real-time object detection applications

YOLOv10's advancements significantly impact real-time object detection. Its enhanced efficiency and accuracy are perfect for applications in autonomous vehicles, surveillance, and industrial automation. This model's versatility makes it highly useful in various sectors, demonstrating its broad application in video analytics.

YOLOv10's leading performance in real-time object detection offers efficient solutions across industries. Its ability to balance accuracy with computational costs marks a significant shift in image recognition technology.

Understanding the Architecture of YOLOv10

YOLOv10 keeps the familiar backbone, neck, and head layout of earlier YOLO detectors, and tunes each part for efficiency.

Backbone: Enhanced CSPNet for Feature Extraction

At the heart of YOLOv10 is an enhanced CSPNet backbone. This component is adept at extracting essential features from images, laying a strong foundation for precise object detection. The CSPNet's improved design facilitates more efficient processing of visual data. This results in reduced computational overhead while maintaining high accuracy.

Neck: PAN Layers for Multiscale Feature Fusion

YOLOv10's neck incorporates Path Aggregation Network (PAN) layers. These layers seamlessly fuse features across different scales. This enables the model to detect objects of various sizes with precision. Such a multiscale approach significantly boosts the model's capability to handle complex scenes and diverse object types.

Head: Lightweight Classification for Efficient Inference

The model's head features a lightweight classification design. This design balances accuracy with computational efficiency. YOLOv10 can perform rapid inference without sacrificing detection quality. This makes it perfect for real-time applications.

| Component | Function | Key Improvement |
|---|---|---|
| Backbone (CSPNet) | Feature Extraction | Enhanced efficiency in processing visual data |
| Neck (PAN Layers) | Multiscale Feature Fusion | Improved detection across various object sizes |
| Head (Lightweight) | Classification | Rapid inference with maintained accuracy |

YOLOv10's architecture combines these components seamlessly, creating a powerful yet efficient object detection model. Its design focuses on balancing computational resources with detection accuracy. This makes it a top choice for advanced AI applications in real-time scenarios.

Real-Time Object Detection YOLOv10: Performance Metrics and Benchmarks

YOLOv10 revolutionizes real-time systems in object detection, setting new benchmarks. Its performance metrics show marked improvements over earlier versions and rivals.

The YOLOv10-S variant leads in speed and efficiency. It outpaces RT-DETR-R18 by 1.8 times in processing images, yet matches its accuracy. This efficiency is achieved with a significant reduction in model size, featuring 2.8 times fewer parameters and FLOPs.

| Model | Speed Comparison | Parameter Reduction |
|---|---|---|
| YOLOv10-S vs RT-DETR-R18 | 1.8× faster | 2.8× smaller |
| YOLOv10-B vs YOLOv9-C | 46% less latency | 25% fewer parameters |
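
The table mixes two ways of stating a speed gain: a speedup factor ("1.8× faster") and a percentage latency reduction ("46% less latency"). The short Python sketch below converts between the two so the rows can be compared on the same footing. The function names are illustrative and not part of any YOLOv10 tooling.

```python
def speedup_from_latency_reduction(reduction: float) -> float:
    """Convert a fractional latency reduction (0.46 means 46% less) to a speedup factor."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    # If latency drops to (1 - reduction) of the original, throughput rises by the inverse.
    return 1.0 / (1.0 - reduction)


def latency_reduction_from_speedup(speedup: float) -> float:
    """Convert a speedup factor (1.8 means 1.8x faster) to a fractional latency reduction."""
    if speedup <= 0.0:
        raise ValueError("speedup must be positive")
    return 1.0 - 1.0 / speedup


if __name__ == "__main__":
    # YOLOv10-B vs YOLOv9-C: 46% less latency, expressed as a speedup factor.
    print(f"{speedup_from_latency_reduction(0.46):.2f}x faster")
    # YOLOv10-S vs RT-DETR-R18: 1.8x faster, expressed as a latency reduction.
    print(f"{latency_reduction_from_speedup(1.8):.1%} less latency")
```

Read this way, the two comparisons describe gains of a similar size: 46% less latency is about a 1.85× speedup, and a 1.8× speedup is about 44% less latency.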

YOLOv10 is also parameter-efficient at the larger scales. YOLOv10-L and YOLOv10-X surpass YOLOv8-L and YOLOv8-X by 0.3 AP and 0.5 AP respectively, while using up to 2.3 times fewer parameters. This efficiency is vital for real-time object detection applications.

YOLOv10's prowess extends beyond mere speed. It introduces groundbreaking features like NMS-free training and consistent dual assignments, boosting efficiency and accuracy. These innovations position YOLOv10 as a pivotal solution for applications demanding rapid and accurate object detection.

NMS-Free Training: A Game-Changer in Object Detection

YOLOv10 introduces a groundbreaking method for object detection through NMS-free training. This breakthrough significantly advances deep learning and neural networks. It changes the landscape of real-time object detection.

Consistent Dual Assignments for Efficient Inference

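The method trains with consistent dual assignments. A one-to-many branch assigns several predictions to each object and provides rich supervision, while a one-to-one branch assigns exactly one. Because both branches rank predictions with the same matching metric, the one-to-one branch learns to output a single, high-quality bounding box per object, and it is the only branch used at inference.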
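
To make the idea concrete, here is a minimal Python sketch of the two assignments for a single ground-truth box. It scores each prediction with a matching metric of the form `p^alpha * IoU^beta`, then takes the top `k` predictions for the one-to-many branch and the top 1 for the one-to-one branch. The values `alpha=0.5` and `beta=6.0`, the omission of the spatial prior term, and all function names are assumptions made for illustration. This is not the training code from the YOLOv10 repository.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_score(cls_prob, pred_box, gt_box, alpha=0.5, beta=6.0):
    """Matching metric p^alpha * IoU^beta (alpha and beta are assumed values)."""
    return (cls_prob ** alpha) * (iou(pred_box, gt_box) ** beta)


def assign(preds, gt_box, k):
    """Return indices of the k predictions that best match gt_box."""
    scores = [match_score(p, box, gt_box) for p, box in preds]
    ranked = sorted(range(len(preds)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


# Toy example: (class probability, predicted box) pairs for one object.
gt = (10, 10, 50, 50)
preds = [
    (0.9, (12, 12, 48, 52)),  # confident, slightly off
    (0.6, (10, 10, 50, 50)),  # less confident, perfect overlap
    (0.8, (30, 30, 70, 70)),  # confident, poor overlap
    (0.3, (0, 0, 20, 20)),    # weak on both counts
]

one_to_many = assign(preds, gt, k=3)  # rich supervision during training
one_to_one = assign(preds, gt, k=1)   # the single box kept at inference
```

Because both branches use the same metric, the prediction chosen by the one-to-one branch is always the best-ranked sample of the one-to-many branch, which is what keeps their supervision consistent.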

Eliminating Non-Maximum Suppression

YOLOv10 eliminates the need for non-maximum suppression (NMS) post-processing. Because the model already outputs one box per object, the duplicate-filtering step is no longer needed, which shortens the inference pipeline and lowers end-to-end latency.
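
For context, the sketch below shows the classic greedy NMS step that earlier detectors run after the network and that YOLOv10 can skip. It is a plain-Python illustration with an assumed IoU threshold of 0.5, not code taken from any YOLO implementation.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(detections, iou_threshold=0.5):
    """Greedy NMS over (score, box) pairs; returns the detections that survive."""
    remaining = sorted(detections, key=lambda d: d[0], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)  # highest-scoring box still in play
        kept.append(best)
        # Drop every remaining box that overlaps the kept one too much.
        remaining = [d for d in remaining if iou(best[1], d[1]) < iou_threshold]
    return kept


detections = [
    (0.9, (10, 10, 50, 50)),
    (0.8, (12, 12, 52, 52)),      # near-duplicate of the first box
    (0.7, (100, 100, 140, 140)),  # a separate object
]
kept = nms(detections)
```

The loop is sequential and its cost grows with the number of candidate boxes, which is why removing it helps end-to-end latency.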

Impact on Inference Latency and Computational Cost

Ablation results show the effect of NMS-free training and of the accompanying model design changes:

- NMS-free training reduces the end-to-end latency of YOLOv10-S by 4.63 ms while keeping Average Precision (AP) at 44.3%.

- The efficiency-driven model design removes 11.8 million parameters and 20.8 GFLOPs from YOLOv10-M, lowering latency by 0.65 ms.

- The accuracy-driven design increases AP by 1.8 for YOLOv10-S and 0.7 for YOLOv10-M with minimal latency increase.

These improvements highlight YOLOv10's efficiency in neural network operations. It stands out for applications needing swift and precise object detection.

| Model | AP | Latency (ms) |
|---|---|---|
| YOLOv10-S | 46.3 | 2.49 |
| YOLOv10-M | 51.1 | 4.74 |
| YOLOv10-L | 53.2 | 7.28 |
| YOLOv10-X | 54.4 | 10.70 |

YOLOv10's NMS-free training is a major leap in object detection. It offers better speed and accuracy. This innovation opens up more efficient deep learning applications across various fields.

Holistic Efficiency-Driven Design in YOLOv10

YOLOv10 introduces a groundbreaking approach to object detection, enhancing real-time image analysis capabilities. This model, at the forefront of computer vision, redefines efficiency in object detection.

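The YOLOv10 architecture is distinguished by its optimized components. One is spatial-channel decoupled downsampling: instead of a single strided convolution that changes resolution and channel count at once, a pointwise convolution first adjusts the channels and a depthwise convolution then reduces the spatial resolution. This significantly reduces computational cost without compromising accuracy.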
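
A quick parameter count shows where the savings come from. The sketch below compares a standard 3x3 stride-2 convolution that doubles the channels with a decoupled version made of a 1x1 pointwise convolution followed by a 3x3 depthwise convolution. The layer layout and the choice of 64 input channels are assumptions for illustration, and bias terms and normalization layers are ignored.

```python
def standard_downsample_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights in one dense k x k convolution that changes channels and resolution together."""
    return k * k * c_in * c_out


def decoupled_downsample_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights in a 1x1 pointwise conv (channels) plus a k x k depthwise conv (resolution)."""
    pointwise = c_in * c_out   # 1x1 conv mixes channels only
    depthwise = k * k * c_out  # one k x k filter per output channel
    return pointwise + depthwise


if __name__ == "__main__":
    c = 64  # illustrative channel count
    dense = standard_downsample_params(c, 2 * c)
    split = decoupled_downsample_params(c, 2 * c)
    print(f"standard: {dense} weights, decoupled: {split} weights, "
          f"ratio: {dense / split:.1f}x")
```

With `C` input channels and `2C` output channels, the dense layer needs `18C^2` weights while the decoupled pair needs `2C^2 + 18C`, so the gap widens as the network gets wider.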

Another pivotal element is the rank-guided block design. It identifies the stages of the network with the most redundancy and replaces their blocks with a compact inverted block design. The result is a more efficient network that sheds redundant computation while preserving performance.

Consider these remarkable statistics:

- YOLOv10 surpasses YOLOv9 by 15% and YOLOv8 by 25% in inference speed

- It achieves a mean average precision of 45.6%, outperforming YOLOv9's 43.2% and YOLOv8's 41.5%

- YOLOv10-S is 1.8 times faster than RT-DETR-R18 with similar accuracy

These figures underscore the efficacy of YOLOv10's efficiency-driven design. It not only excels in speed but also maintains high accuracy, significantly reducing computational load. This makes it a transformative tool for various applications in computer vision.

Advanced Features: Large-Kernel Convolutions and Partial Self-Attention

YOLOv10 elevates artificial intelligence and deep learning with its advanced features. This version introduces cutting-edge techniques for better real-time object detection. These innovations significantly boost the model's performance.

Selective Application of Large-Kernel Convolutions

YOLOv10 applies large-kernel convolutions selectively, only in the deep stages of the network. These 7x7 depthwise convolutions enlarge the receptive field, which helps the model use broader spatial context. During training, structural reparameterization adds a parallel 3x3 depthwise branch that eases optimization; the branch is merged into the 7x7 kernel afterwards, so inference pays no extra cost.
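
Structural reparameterization works because convolution is linear: a small kernel can be zero-padded to the size of the large one and the two added together. The pure-Python sketch below checks this for a single channel, using a 7x7 kernel and a parallel 3x3 kernel. It is a simplified illustration under stated assumptions: real implementations also fold normalization layers into the merged kernel, which is omitted here, and the function names are made up for the example.

```python
import random


def conv2d_same(image, kernel):
    """Single-channel 2D cross-correlation with zero padding (output size equals input size)."""
    k, pad = len(kernel), len(kernel) // 2
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    yy, xx = y + i - pad, x + j - pad
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += image[yy][xx] * kernel[i][j]
            out[y][x] = acc
    return out


def pad_kernel(kernel, size):
    """Centre a small odd-sized kernel inside a size x size kernel of zeros."""
    k = len(kernel)
    off = (size - k) // 2
    padded = [[0.0] * size for _ in range(size)]
    for i in range(k):
        for j in range(k):
            padded[i + off][j + off] = kernel[i][j]
    return padded


def merge_kernels(k_large, k_small):
    """Fold the small branch into the large kernel by element-wise addition."""
    size = len(k_large)
    k_small_padded = pad_kernel(k_small, size)
    return [[k_large[i][j] + k_small_padded[i][j] for j in range(size)]
            for i in range(size)]


def demo(seed=0):
    """Return the largest difference between two-branch and merged-kernel outputs."""
    rng = random.Random(seed)
    rand = lambda n: [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
    image, k7, k3 = rand(8), rand(7), rand(3)
    out7, out3 = conv2d_same(image, k7), conv2d_same(image, k3)
    two_branch = [[a + b for a, b in zip(r7, r3)] for r7, r3 in zip(out7, out3)]
    merged = conv2d_same(image, merge_kernels(k7, k3))
    return max(abs(a - b) for ra, rb in zip(two_branch, merged) for a, b in zip(ra, rb))


if __name__ == "__main__":
    print(f"max difference: {demo():.2e}")
```

The two outputs agree up to floating-point rounding, so the extra branch can be used during training and dropped at deployment.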

Partial Self-Attention for Efficient Global Feature Modeling

Partial Self-Attention (PSA) brings global feature modeling to YOLOv10 at low cost. PSA splits the feature channels into two parts, applies self-attention to only one part, and then fuses the two parts back together. This lets the model capture long-range dependencies with little additional computational cost.

Optimized Placement and Dimension Reduction Strategies

YOLOv10 uses smart strategies to place its advanced features in the network. By strategically positioning large-kernel convolutions and PSA modules, the model balances performance with efficiency. Dimension reduction techniques are applied to reduce overhead while enhancing global feature modeling benefits.

| Feature | Benefit | Implementation |
|---|---|---|
| Large-Kernel Convolutions | Broader spatial context | Selective application in deep stages |
| Partial Self-Attention | Efficient global feature modeling | Feature partitioning and selective processing |
| Optimized Placement | Balance of performance and efficiency | Strategic positioning within network architecture |

These advanced features significantly enhance YOLOv10's performance. For example, YOLOv10-S is 1.8 times faster than RT-DETR-R18 on COCO, with 2.8 times fewer parameters and FLOPs. This highlights the power of deep learning in real-time object detection.

YOLOv10 Model Scales: Choosing the Right Version for Your Needs

YOLOv10 provides a variety of model sizes tailored for diverse real-time systems and object tracking demands. It ranges from lightweight options for mobile devices to robust versions for demanding tasks. This flexibility ensures YOLOv10 meets your specific needs.

Available Model Sizes

YOLOv10 offers six scales, each tailored for distinct use cases:

| Model Size | Parameters (millions) | Ideal Use Case |
|---|---|---|
| Nano (n) | 2.3 | Edge devices, mobile apps |
| Small (s) | 7.2 | Low-power embedded systems |
| Medium (m) | 15.4 | Balanced performance for most applications |
| Big (b) | 19.1 | High-accuracy requirements |
| Large (l) | 24.4 | Complex scene analysis |
| Extra Large (x) | 29.5 | Research and top-tier performance |

Performance Across Scales

Inference speed varies widely across the scales. The Nano model can process images at up to 1000 frames per second, which makes it suitable for real-time video analysis on edge devices. Larger models trade speed for accuracy, with reported processing times from 13.4 ms to 16.7 ms per image at 384x640 resolution. Speed figures depend heavily on hardware and runtime, so compare numbers only when they were measured under the same setup.
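
Latency per image and frames per second are two views of the same quantity, assuming frames are processed one at a time. The small Python helper below converts the per-image latencies quoted in this article into throughput. The function name is illustrative.

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second when each frame takes latency_ms, processed one at a time."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


if __name__ == "__main__":
    # Latencies in milliseconds as quoted in this article.
    quoted = {"1 ms claim": 1.0, "YOLOv10-S": 2.49, "YOLOv10-X": 10.70, "slowest run": 16.7}
    for name, latency in quoted.items():
        print(f"{name}: {latency} ms -> {fps_from_latency_ms(latency):.0f} fps")
```

A 30 fps video stream leaves about 33 ms per frame, so every latency quoted above fits a real-time budget before other pipeline costs such as decoding and drawing are added.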

Choosing Your Model

When selecting a YOLOv10 model, start from your latency and accuracy requirements. For mobile apps and edge devices, the Nano or Small models are the better fit. Medium and Big models strike a balance between speed and accuracy for general object tracking. Large and Extra Large scales are best for complex real-time systems where accuracy matters most and more compute is available.
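
One simple way to turn this advice into a rule is to pick the most accurate model that still fits a latency budget. The Python sketch below does that with the AP and latency figures quoted earlier in this article for the S, M, L, and X scales. The helper name is hypothetical, and latency on your own hardware will differ, so treat the numbers as placeholders to replace with your own measurements.

```python
# (name, AP, latency in ms) as quoted in this article for four of the six scales.
MODELS = [
    ("YOLOv10-S", 46.3, 2.49),
    ("YOLOv10-M", 51.1, 4.74),
    ("YOLOv10-L", 53.2, 7.28),
    ("YOLOv10-X", 54.4, 10.70),
]


def pick_model(latency_budget_ms: float):
    """Return the name of the highest-AP model within the budget, or None if none fits."""
    candidates = [m for m in MODELS if m[2] <= latency_budget_ms]
    if not candidates:
        return None
    return max(candidates, key=lambda m: m[1])[0]


if __name__ == "__main__":
    for budget in (2.0, 5.0, 8.0, 33.3):
        print(f"budget {budget} ms -> {pick_model(budget)}")
```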

Implementing YOLOv10: A Step-by-Step Guide

Setting up YOLOv10 for computer vision tasks is straightforward. If you work in a Google Colab notebook, first verify that a GPU is available and mount Google Drive. Then install the YOLOv10 and Supervision libraries, and download pre-trained weights for your chosen model scale. YOLOv10 offers six variants, from Nano to Extra Large, each balancing speed and accuracy differently.
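
As a rough sketch of these steps in Python, the example below assumes the `ultralytics` package is used to load YOLOv10 (installed with `pip install ultralytics`), that PyTorch is available, and that the pre-trained weight files follow the `yolov10<scale>.pt` naming. Those choices are assumptions, since the article does not name a specific package, and the helper functions `weights_name` and `detect` are hypothetical. Mounting Google Drive and the Supervision library are left out to keep the example short.

```python
SCALES = ("n", "s", "m", "b", "l", "x")  # Nano, Small, Medium, Big, Large, Extra Large


def weights_name(scale: str) -> str:
    """Build the assumed weight file name for a model scale, e.g. 's' -> 'yolov10s.pt'."""
    if scale not in SCALES:
        raise ValueError(f"unknown scale {scale!r}, expected one of {SCALES}")
    return f"yolov10{scale}.pt"


def detect(image_path: str, scale: str = "n", conf: float = 0.25):
    """Run one image through a pre-trained model and return (label, score, box) tuples."""
    # Imported lazily so the helper above works without the heavy dependencies.
    import torch
    from ultralytics import YOLO

    device = 0 if torch.cuda.is_available() else "cpu"  # step 1: check for a GPU
    model = YOLO(weights_name(scale))                   # fetches the weights on first use
    result = model.predict(image_path, conf=conf, device=device)[0]

    boxes = result.boxes
    return [
        (result.names[int(cls_id)], score, xyxy)
        for cls_id, score, xyxy in zip(
            boxes.cls.tolist(), boxes.conf.tolist(), boxes.xyxy.tolist()
        )
    ]


# Example usage (needs the packages above and an image on disk):
#   for label, score, box in detect("street.jpg", scale="s"):
#       print(label, round(score, 2), box)
```

Starting with the Nano scale keeps the first run fast. Switching to a larger scale only requires changing the `scale` argument.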

For instance, YOLOv10-S boasts 7.2M parameters and 2.49ms latency, achieving 46.3% APval. It's 1.8 times faster than RT-DETR-R18 on COCO, with 2.8 times fewer parameters. The larger YOLOv10-X, with 29.5M parameters, reaches 54.4% APval but takes 10.70ms per inference.

Nano suits resource-constrained devices, while Extra Large excels in high-accuracy scenarios. After setup, you're ready to start detecting objects in real time.

Whichever scale you choose, the model relies on the same architectural features:

- Enhanced CSPNet backbone

- Path Aggregation Network layers

- Lightweight classification heads

- Spatial-channel decoupled downsampling

These features contribute to YOLOv10's impressive performance, making it a top choice for real-time object detection in various computer vision applications.

Real-World Applications and Use Cases of YOLOv10

YOLOv10 is transforming real-time systems across industries. Its advanced features are changing the game in video analytics and object detection. Let's look at some key applications where YOLOv10 is having a significant impact.

Autonomous Vehicles and Traffic Management

In the automotive sector, YOLOv10 boosts safety and efficiency. It offers real-time object detection, aiding autonomous vehicles in navigating complex environments. Traffic management systems also benefit from YOLOv10's quick monitoring of road conditions and hazard identification.

Security and Surveillance Systems

YOLOv10 is revolutionizing security with its high-speed, precise threat detection. Surveillance cameras with YOLOv10 can spot suspicious activities in real-time, enabling swift responses. This technology is essential in video analytics for large-scale security systems.

Industrial Quality Control and Automation

In manufacturing, YOLOv10 enhances quality control. It detects defects swiftly, boosting production efficiency. Its precision in processing visual data makes it crucial for automation tasks.

| YOLOv10 Variant | Parameters (M) | FLOPs (B) | AP (%) | Forward latency (ms) |
|---|---|---|---|---|
| YOLOv10-S | 7.2 | 21.6 | 46.3-46.8 | 2.39 |
| YOLOv10-M | 15.4 | 59.1 | 51.1-51.3 | 4.63 |
| YOLOv10-X | 29.5 | 160.4 | 54.4 | 10.60 |

YOLOv10's enhanced performance and reduced latency make it perfect for real-time systems across industries. Its quick and accurate processing of visual data opens up new possibilities in video analytics and object detection applications.

Conclusion: The Future of Real-Time Object Detection with YOLOv10

YOLOv10 represents a significant advancement in real-time object detection, expanding the frontiers of artificial intelligence and deep learning. This model has achieved a 46% reduction in latency and a 25% decrease in parameters over YOLOv9-C, while preserving exceptional performance.

The YOLOv10 family, from Nano to Extra Large, compares favorably with other leading detectors. For example, YOLOv10-N reaches 38.5 Average Precision with just 2.3 million parameters. Compared with the corresponding YOLOv8 models, YOLOv10 variants improve Average Precision by up to 1.4% while using up to 57% fewer parameters and up to 38% fewer calculations.

YOLOv10's efficiency is particularly evident in practical applications. Its superior performance in complex scenarios, facilitated by PSA modules, makes it perfect for autonomous vehicles, surveillance, and industrial automation. This model offers latency reductions of up to 70% over previous versions, poised to transform real-time object detection across diverse sectors.

As artificial intelligence and deep learning progress, YOLOv10 leads the way, setting the stage for more advanced and efficient object detection systems. Its exceptional balance of computational efficiency and accuracy marks it as a pivotal innovation in the field. This positions YOLOv10 for groundbreaking advancements in the future of real-time object detection.

FAQ

What is YOLOv10?

YOLOv10 is a recent version in the YOLO series, aimed at improving real-time object detection. It introduces NMS-free training and an efficiency-driven model design to raise accuracy while lowering inference cost.

What are the key features of YOLOv10?

YOLOv10 boasts several key features. These include consistent dual assignments for NMS-free training and a model designed for efficiency and accuracy. It also features large-kernel convolutions, partial self-attention (PSA), and spatial-channel decoupled downsampling.

How does YOLOv10 perform compared to previous versions and other models?

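YOLOv10 outperforms its predecessors and rivals in speed at comparable accuracy across several benchmarks. For example, YOLOv10-S is 1.8 times faster than RT-DETR-R18 with similar accuracy, and YOLOv10-B has 46% lower latency and 25% fewer parameters than YOLOv9-C while performing equally well.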

What is NMS-free training, and how does it benefit YOLOv10?

NMS-free training removes the need for non-maximum suppression post-processing during inference. This method uses one-to-many and one-to-one assignments for efficient processing. It cuts down on latency and computational costs while enhancing accuracy.

How does YOLOv10's holistic efficiency-driven design enhance its performance?

YOLOv10's design focuses on optimizing efficiency and accuracy across all components. It employs spatial-channel decoupled downsampling and rank-guided block design. These strategies help eliminate unnecessary information, improving efficiency without compromising on accuracy.

What are the different model scales available for YOLOv10?

YOLOv10 comes in six model scales: Nano, Small, Medium, Big, Large, and Extra Large. These options allow users to select the ideal model for their needs, balancing performance with computational resources.

What are some real-world applications of YOLOv10?

YOLOv10 finds applications in autonomous vehicles and traffic management, security and surveillance, and industrial quality control and automation. Its real-time object detection and tracking capabilities are crucial in these fields.
