Red-Teaming Abstract: Competitive Testing Data Selection

A competitive testing abstract is a systematic stress testing of systems against real-world threats.

Modern language models face risks of misinformation leaks and malicious actions by fraudsters. Therefore, a Red-Teaming abstract is needed, where teams simulate attackers to reveal vulnerabilities in an AI model.

Leading companies like Microsoft and IBM use this method to uncover hidden risks in how AI models process language or respond to deceptive data.

This article will examine strategies for creating reliable datasets and methodologies used in structures such as open-source collaborative projects.

Key Takeaways

- Competitive annotation identifies weaknesses in AI systems.

- Language models require specific testing for bias in results.

- Cross-industry collaboration helps identify more threats.

- Structured data management reduces the risk of information leakage in AI applications.

Understanding Red-Teaming in AI and Cybersecurity

The military first used simulated threats to test physical defenses. Today’s teams apply similar tactics to digital landscapes. Where soldiers used to breach fortifications, experts now create deceptive clues to test language models for bias or incorrect results.

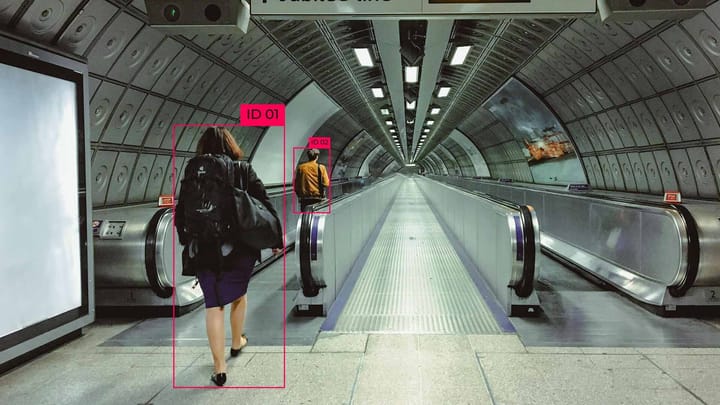

In machine learning and artificial intelligence (AI), Red-Teaming refers to testing AI models for vulnerabilities, bias, or potential for manipulation. This includes:

- Finding weaknesses in AI decision-making.

- Assessing resilience to attacks such as data manipulation.

- Testing the ethics and security of the AI model.

Old Rules, New Tools

Red-teaming Data: Collection and Curation

Red-Teaming uses various sources during data collection, such as real-world attack scenarios, synthetic data, historical incidents, and adversarial data for simulating potential vulnerabilities and attacks. Sources include event logs, network traffic, malicious code samples, and anomalous behavior patterns. Therefore, the data must be diverse and representative to model real-world threats.

Curating data means filtering, normalizing, and categorizing it for use in testing. This includes removing noise, highlighting important patterns, and labeling vulnerabilities and attack techniques. Ethical and confidential data are key considerations when working with data, helping to avoid unintentional security breaches or information leaks.

Planning a Red Team Exercise

First, you need to define clear goals and metrics for success:

- Level of inconsistency in the AI model’s response.

- Quantify the level of detection of new AI attack models.

- Improve the level of benchmarking in testing cycles.

Then you need to create a multidisciplinary team:

- AI ethicists predict upcoming threats.

- Social engineers recreate manipulation tactics in the real world.

- Security architects map the system’s interaction points.

Continuous testing systems have significant advantages over one-off audits. Organizations that conduct quarterly exercises are better at responding to threats than companies that conduct annual reviews.

Performing competitive testing processes

- Planning and reconnaissance. Defining testing objectives and collecting data about the system, architecture, algorithms, and attack points.

- Choosing the right methodologies. Applying social engineering techniques, data manipulation, or automated attacks. Specific attacks on AI models include data poisoning, membership inference attacks, or input manipulation (adversarial attacks).

- Executing the attack. Replaying attack scenarios to identify weaknesses. Simulating attacker actions in a controlled environment and analyzing the response of the AI system.

- Assessing the vulnerability level of the AI system and the impact of attacks on functionality and security. Creating a report based on these indicators with recommendations for strengthening protection.

- Retesting to fix vulnerabilities and create protections according to the problems found.

Well-planned Red-Teaming processes allow organizations to identify vulnerabilities to real-world attacks and strengthen the cybersecurity of AI models before deploying them in a real-world environment.

Using Red Team Insights to Improve the System

Red Team Insights is a set of analytical conclusions and recommendations from competitive system testing. This data eliminates vulnerabilities and improves the security and resilience of the AI system to threats.

Analyzing the results after Red-Teaming, the system’s weaknesses, threats, and the possible consequences of attacks are determined.

Developing recommendations based on the results include authentication mechanisms, access control, and optimization of machine learning algorithms. These methods increase resistance to the influence of attackers and update the security policy and team training.

After changes, the AI system is retested to verify the effectiveness of the implemented measures. This assesses how much the risk has been reduced and whether new vulnerabilities have emerged.

Red Team Insights helps create adaptive AI systems that respond to threats and prevent possible attacks, making them resilient to threats in the real environment.

Summary

Now, defenses need to be constantly improved to stay relevant. This is done through structured collaboration and adaptive testing systems. The shift from network-centric to AI-model-centric testing shows progress in digital security.

Continuous training of company teams reduces threat response time, and cross-functional teams detect more risks than isolated groups.

Military strategies have shaped modern AI defense protocols. These methods help detect threats in language models, from scenario-based attack modeling to dynamic behavior analysis, before the model is used in the real world.

Leading tools and the EU Law on Artificial Intelligence raise industry standards.

FAQ

How is AI red teaming different from traditional cybersecurity testing?

AI Red Teaming identifies vulnerabilities in AI systems, attacks on machine learning models, and data manipulation. Traditional testing protects IT infrastructure, networks, and systems from hacks and unauthorized access.

What makes a red teaming exercise effective for large language models (LLMs)?

Realistic attacks that simulate malicious use and systematic analysis of AI model vulnerabilities.

How do you balance attack simulation with ethical constraints?

You need to define the testing boundaries to avoid violating data privacy or security. You must also obtain prior consent from stakeholders and operate within ethical regulations.

What metrics confirm the return on investment of red teaming for AI systems?

Metrics include reducing the number of vulnerabilities and assessing the effectiveness of AI models by detecting and correcting errors at early stages.

Can automated tools replace human red teams in AI security?

Automated tools can accelerate vulnerability detection and threat analysis. Human experience and intuition assess complex situations and understand nuances.

Comments ()