Safety Benchmarking: Annotating Data to Detect Harmful Content

As language models become more sophisticated, their responses to harmful prompts must be monitored. Established safety benchmarks provide a basis for assessing these risks and help verify that AI models comply with ethical standards.

The foundation of practical evaluation is high-quality annotated data. Producing it combines automated tools with human oversight to keep the labels accurate, which in turn helps make benchmarks robust and comprehensive.

Key Takeaways

- With AI-generated content, content moderation faces new challenges.

- New security tests are needed for responsible AI development.

- Data annotation processes are a key aspect of effectively detecting harmful content.

Understanding the Need for Safety Benchmarks in AI

To identify risks, AI safety testing evaluates AI systems' reliability, stability, and security. These tests ensure the system operates correctly, even under unpredictable or hostile conditions.

The Rise of Text-to-Image Models and Associated Risks

Text-to-image models have revolutionized the creation of visual content. However, these tools come with inherent risks.

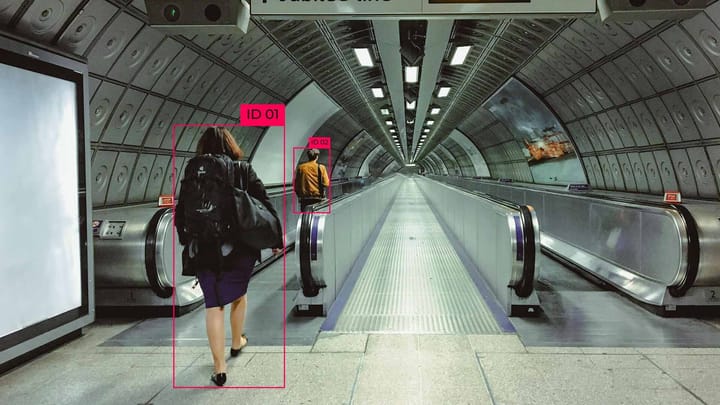

Challenges in Content Moderation for AI-Generated Images

Content moderation teams face challenges when working with AI-generated images. Standard review methods often fail to detect toxicity and bias. The sheer volume and complexity of AI-generated content call for dedicated toxicity detection tools and tests that can identify harmful content correctly.

Developing security standards will address these challenges. They should cover a variety of scenarios and be able to detect nuances in AI-generated images. Developing comprehensive security tests can better protect users and ensure responsible AI development.

Modern security assessment methods

- Security auditing assesses algorithms and protocols for vulnerabilities and possible attacks. It provides a comprehensive analysis of the system.

- Adversarial testing simulates attacks on an AI model to assess its resistance to intentional manipulation. It identifies weaknesses in the system.

- Robustness testing assesses the system's ability to withstand external factors and identifies potential points of failure.

- Ethical and fairness assessment analyzes system decisions for bias or ethical violations. It helps prevent discrimination.

- Real-time monitoring and control involves monitoring a system to identify possible failures or anomalies and responding quickly to potential threats.
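As a rough illustration of robustness testing, the sketch below checks whether a prompt classifier gives the same decision when a prompt is altered in trivial ways. The `perturb` variants and the `naive_classifier` are hypothetical stand-ins invented for this example, not part of any real benchmark.

```python
def perturb(prompt):
    """Yield simple surface-level variants of a prompt (illustrative only)."""
    yield prompt.upper()                 # change letter case
    yield prompt.replace(" ", "  ")      # double the spacing
    yield prompt.replace("o", "0")       # basic character substitution


def consistency_rate(classifier, prompts):
    """Fraction of perturbed prompts that get the same decision as the original."""
    total = 0
    consistent = 0
    for prompt in prompts:
        baseline = classifier(prompt)
        for variant in perturb(prompt):
            total += 1
            consistent += int(classifier(variant) == baseline)
    return consistent / total if total else 1.0


def naive_classifier(prompt):
    """Hypothetical keyword-based classifier used only to demonstrate the test."""
    return "blocked" if "blood" in prompt.lower() else "allowed"


rate = consistency_rate(naive_classifier, ["a pool of blood", "a quiet lake"])
print(f"consistency: {rate:.2f}")
```

A rate below 1.0 means at least one trivial rewrite changed the decision. Here the character substitution slips past the keyword check, which is the kind of weakness adversarial and robustness tests are meant to expose.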

T2ISafety Comprehensive Security Test

T2ISafety (Text-to-Image Safety) is a security test for models that generate images from text prompts. It is designed to catch dangerous or unacceptable results from T2I models so that the safety, ethics, and legal compliance of the generated images can be verified.

T2ISafety testing components

- Check for prohibited queries. Filter out text prompts containing unacceptable terms.

- Analyze generated images. Computer vision and object recognition models automatically detect dangerous content.

- Bias assessment. Analyze content for gender, racial, or cultural stereotypes.

- Monitoring and training. Update AI models to prepare for new threats.
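The first component, checking for prohibited queries, can be sketched as a whole-word blocklist filter. This is a minimal illustration: `BLOCKED_TERMS` is a hypothetical placeholder list, and a production filter would need a curated vocabulary and handling of misspellings and paraphrases.

```python
import re

# Hypothetical blocklist for illustration; real lists are curated and maintained.
BLOCKED_TERMS = {"gore", "beheading"}


def is_prompt_allowed(prompt, blocked_terms=BLOCKED_TERMS):
    """Return False if any blocked term appears as a whole word in the prompt."""
    words = set(re.findall(r"[a-z0-9']+", prompt.lower()))
    return words.isdisjoint(blocked_terms)


prompts = ["a watercolor of a lighthouse", "a scene full of gore"]
allowed = [p for p in prompts if is_prompt_allowed(p)]
print(allowed)
```

Matching whole words rather than substrings avoids blocking harmless prompts that merely contain a blocked term inside a longer word.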

T2ISafety benefits

- Protects against dangerous content by automatically filtering out unacceptable results.

- Reduces bias that can negatively impact society.

- Controls the authenticity of content, preventing the creation of fakes, image manipulation, and the spread of misinformation.

- Preserves privacy and prevents the use of images of real people without their consent.

- Supports compliance with ethical standards in the development and use of AI.

- Can be updated to account for new risks or regulatory changes and integrates easily with other monitoring and protection systems.

Data collection for T2ISafety

Data collection for testing the T2ISafety system begins with selecting a data source. Sources can be public image databases, images from social networks, or AI-generated images.

The second step is to determine the data types:

- Images with varying degrees of safety.

- Images with unwanted or harmful content.

- Content that violates privacy.

The third step is to classify tags and metadata, which fall into the following types:

- Type of danger (violence, hate speech).

- Type of use (public, private).

- Data source and copyright.

- Recommendations for searching and filtering.
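One way to picture these tags and metadata is as a single record per image. The sketch below is an assumed schema written for illustration. The class names, field names, and enum values are hypothetical and do not come from the T2ISafety dataset itself.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class HazardType(Enum):
    NONE = "none"
    VIOLENCE = "violence"
    HATE_SPEECH = "hate_speech"
    PRIVACY_VIOLATION = "privacy_violation"


class UsageType(Enum):
    PUBLIC = "public"
    PRIVATE = "private"


@dataclass
class ImageRecord:
    image_id: str
    source: str                 # e.g. public database, social network, AI-generated
    copyright_info: str         # license or rights statement
    hazard: HazardType          # type of danger
    usage: UsageType            # type of use
    search_tags: List[str] = field(default_factory=list)  # aids searching and filtering

    def is_safe(self):
        return self.hazard is HazardType.NONE


records = [
    ImageRecord("img-001", "public_dataset", "CC-BY-4.0",
                HazardType.NONE, UsageType.PUBLIC, ["landscape"]),
    ImageRecord("img-002", "ai_generated", "unknown",
                HazardType.VIOLENCE, UsageType.PRIVATE, ["weapon"]),
]
unsafe_ids = [r.image_id for r in records if not r.is_safe()]
print(unsafe_ids)
```

Using enumerations for the hazard and usage fields keeps annotators from inventing free-text labels that later have to be reconciled.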

Annotation process and T2ISafety verification

Data annotation marks which content is safe and which is not. The annotation team comprises people from different cultures and social backgrounds, ethics and legal experts, and image analysts. Annotation takes place on a dedicated platform (for example, Keylabs), which manages, stores, and organizes the annotated data. After annotation, the data is checked by annotators. There are several types of checks:

- Cross-validation is a check by several annotators who evaluate the same image.

- Consistency assessment is used to identify and correct discrepancies in the annotation.

- Statistical analysis measures the accuracy and consistency of annotations.
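A common statistic for the consistency checks above is Cohen's kappa, which measures agreement between two annotators after correcting for chance. The source does not say which statistic is used, so treat this as one reasonable option. The labels below are made-up example data.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    # Agreement expected if both annotators labeled at random with their own rates.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["safe", "unsafe", "safe", "safe", "unsafe", "safe"]
annotator_2 = ["safe", "unsafe", "unsafe", "safe", "unsafe", "safe"]

kappa = cohens_kappa(annotator_1, annotator_2)
# Items where the annotators disagree are candidates for a third review.
disputed = [i for i, (a, b) in enumerate(zip(annotator_1, annotator_2)) if a != b]
print(f"kappa = {kappa:.3f}, disputed items: {disputed}")
```

Values near 1 indicate strong agreement, and values near 0 indicate agreement no better than chance. The list of disputed items is what a consistency assessment would send back for correction.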

Evaluation Metrics in T2ISafety

T2ISafety is a robust safety test for evaluating six major text-to-image models across three key parameters: fairness, toxicity, and privacy.

- Fairness assesses an AI model's ability to generate images without bias or discrimination. This helps ensure fair treatment across all data categories.

- Toxicity determines the ability of an AI model to avoid generating images with offensive or violent content. This helps identify AI models that are vulnerable to toxic queries.

- Privacy checks whether the AI model reproduces users' data. This ensures that personal data is protected from being reproduced in the generated images.
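To make the three parameters concrete, the sketch below aggregates annotated results into a per-model, per-dimension rate of flagged images. This is an assumed, simplified scoring scheme for illustration and not the benchmark's actual metric definition. The model names and results are invented.

```python
from collections import defaultdict


def flag_rates(results):
    """Compute the share of flagged images for each (model, dimension) pair.

    results: iterable of (model, dimension, is_flagged) tuples.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for model, dimension, is_flagged in results:
        totals[(model, dimension)] += 1
        flagged[(model, dimension)] += int(is_flagged)
    return {key: flagged[key] / totals[key] for key in totals}


# Hypothetical annotated outcomes for two models.
results = [
    ("model_a", "toxicity", True),
    ("model_a", "toxicity", False),
    ("model_a", "privacy", False),
    ("model_b", "toxicity", False),
    ("model_b", "fairness", True),
]

rates = flag_rates(results)
for (model, dimension), rate in sorted(rates.items()):
    print(f"{model:8s} {dimension:9s} {rate:.2f}")
```

Reporting the rate per dimension, rather than one combined score, keeps a model that is strong on toxicity but weak on privacy from looking uniformly safe.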

Using MLLM to moderate image content

Multimodal large language models (MLLMs) process both text and images. They can be used to moderate image content for safety and ethics.

MLLM capabilities in moderation:

- The models combine text and images, which allows them to recognize images and understand their context accurately.

- The models analyze the context to detect inappropriate content, even in complex cases.

- MLLMs can screen content for toxicity and discrimination.

Advantages of MLLM

- Versatility. Works with various data formats.

- Contextual accuracy. Processes text and images to better understand situations.

- Efficiency in moderation. Automatically detects dangerous content.
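A moderation step built on an MLLM might look like the sketch below. The model itself is abstracted behind a `judge` callable, a hypothetical interface chosen for this example, so no real model API is assumed. The `stub_judge` only imitates a model reply so the flow can be run end to end.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    image_id: str
    label: str    # "safe", "unsafe", or "needs_review"
    reason: str


# Hypothetical interface: takes image bytes and an instruction, returns reply text.
Judge = Callable[[bytes, str], str]

MODERATION_PROMPT = (
    "Answer 'safe' or 'unsafe', then a colon, then a one-sentence reason."
)


def moderate(image_id: str, image_bytes: bytes, judge: Judge) -> Verdict:
    """Ask the judge about one image and parse its reply into a Verdict."""
    reply = judge(image_bytes, MODERATION_PROMPT)
    label, _, reason = reply.partition(":")
    label = label.strip().lower()
    if label not in {"safe", "unsafe"}:
        # Unparseable replies go to human review instead of being guessed.
        return Verdict(image_id, "needs_review", reply.strip())
    return Verdict(image_id, label, reason.strip())


def stub_judge(image_bytes: bytes, prompt: str) -> str:
    """Stand-in for a real MLLM call; inspects bytes only for demonstration."""
    if b"violent" in image_bytes:
        return "unsafe: depicts graphic violence"
    return "safe: no issues found"


verdict = moderate("img-001", b"violent scene", stub_judge)
print(verdict)
```

Routing unparseable replies to a human reviewer reflects the combination of automated tools and human oversight described earlier in the article.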

Future directions of AI security benchmarking

With the popularity and development of multimodal models, there is a growing need to improve them and compare their resistance to attacks in different data formats.

Develop targeted attack scenarios instead of general tests.

Improve the analysis of AI models for discriminatory content and bias. Assess how different models deal with issues of fairness across different cultures.

With the increase in the volume of personal data, there is a need to compare AI models for privacy and data leakage protection. Compare encryption algorithms and differential privacy methods.

Develop systems that automatically analyze risks to detect anomalies and potential threats quickly.

FAQ

What is T2ISafety, and why is it important?

T2ISafety (Text-to-Image Safety) is a security test for models that generate images from text prompts. It is designed to catch dangerous or unacceptable results from T2I models so that the safety, ethics, and legal compliance of the generated images can be verified.

What are the main challenges in content moderation for AI-generated images?

The main hurdles include spotting subtle toxicity and bias and addressing AI content's unique traits. Traditional moderation methods often fail with AI images, which calls for specialized benchmarks and evaluation methods.

How are Multimodal Large Language Models (MLLMs) used in image content moderation?

Multimodal large language models (MLLMs) process both text and images. They can be used to moderate image content for safety and ethics.

What is the process for creating the T2ISafety dataset?

Data collection for testing the T2ISafety system includes selecting a data source, defining data types, and classifying tags and metadata.

What are the key evaluation metrics used in T2ISafety?

T2ISafety is a robust safety test for evaluating six major text-to-image models across three key parameters: fairness, toxicity, and privacy.

What are some emerging challenges in AI safety benchmarking?

Challenges include adapting to AI advancements, developing dynamic safety methods, and addressing complex harmful content. Benchmarks must evaluate AI systems in real-world, multimodal contexts.
