Selective Subset Labeling: Building Targeted Benchmarks Within Large Corpora

Selective subset labeling is a method of creating benchmarks in large data sets.

Companies can optimize machine learning models by focusing on subsets of data. This method ensures accuracy and reduces the time and resources required to annotate data.

Key Takeaways

- Selective subset labeling is a method for optimizing machine learning models.

- Targeted data annotation ensures accuracy and reduces processing time.

- High-quality data labeling is the basis for reliable model performance.

- Active learning techniques ensure high-quality test generation.

Data Labeling Process

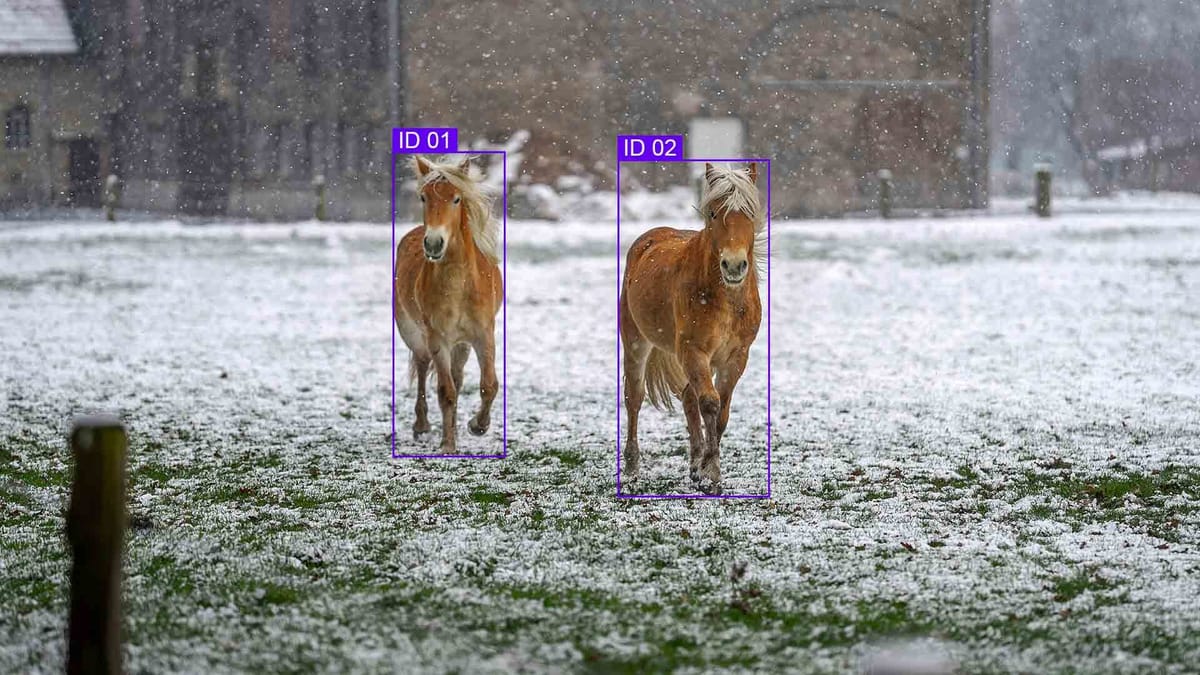

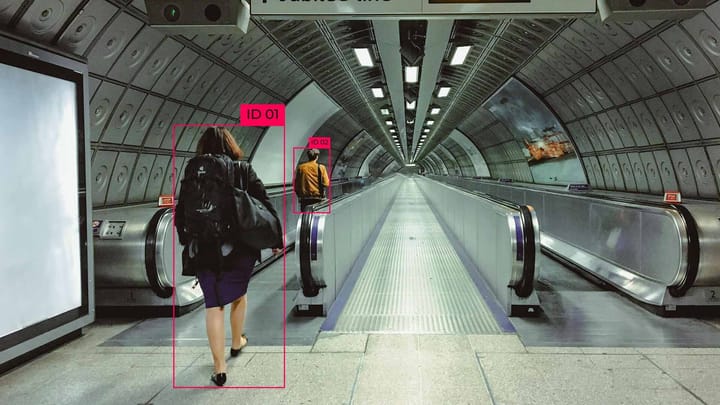

Data labeling is the process of data processing in which information is labeled with labels (tags) to train an artificial intelligence model to recognize objects, categories, or features. Data slices are subsets of the dataset that are analyzed in isolation to test model performance in specific, often extreme, conditions.

This process consists of:

- Collecting raw data from various sources.

- Cleaning data from noise and duplication for processing.

- Selecting the type of labeling depending on the task (classification, segmentation, object recognition, etc.).

- Manual or automated annotation adds labels to the data (for example, outlining objects in an image or classifying text).

- Annotated data is verified by experts or automatically.

- Storing and managing data in a structured format for training models.

Types of annotation and their difficulties

Data Impacts Model Accuracy

The quality of annotated data affects the performance of an AI model. Accurate labels allow AI models to better distinguish between objects in image recognition tasks. Similarly, in NLP, clear annotated data improves the quality of text classification.

Quality Metrics and Standards

Standard metrics for image tasks include precision, recall, and intersection over connections (IoU). Consistency between annotators is also important, ensuring consistency and accuracy of the labeled data. Implementing detailed quality assurance processes maintains high standards. Targeted benchmarks are essential for evaluating model performance in specific contexts, ensuring its robustness across diverse situations.

- Precision shows the number of correctly predicted results among all predictions.

- Recall shows the proportion of cases that were correctly recognized.

- Intersection over connections (IoU) shows how accurately the model predicted the boundaries of an object.

Labeling data subsets for benchmarks

A structured annotation process is key to achieving optimal results. Selective subsets are chosen to ensure the benchmark accurately reflects the performance of the model under varied conditions. Here are some tips to achieve this:

- Define clear goals for the benchmarking process to ensure that the subsets align with the machine learning goals.

- Choose diverse data samples to avoid bias and improve the generalizability of the AI model.

- Implement quality control and refine the annotation process to maintain consistency and accuracy.

These strategies will help you control the annotation process to produce high-quality results.

Data Labeling Approaches

Choosing the right approach to data labeling can optimize your machine-learning workflows.

- In-house labeling precisely controls the uniformity of annotated data. This method is suitable for working with sensitive data that requires expert expertise. However, it is time-consuming and resource-intensive, especially for large datasets.

- Outsourcing tasks saves time and reduces costs. This method is suitable for quickly scaling annotations.

- A hybrid model that combines in-house expertise with external or crowdsourced support can provide a balance of cost, time, and quality.

Each approach has its advantages, and the choice depends on the needs and resources of the project.

Selecting the Labeling Platform

Open source platforms are suitable for small projects due to their customization capabilities but require technical expertise. Commercial platforms provide automation, integration, and scalability that is suitable for large and complex tasks.

Building and Managing Labeling Workforce

To manage a team effectively, roles and responsibilities need to be clearly defined.

- Project managers oversee the workflow.

- Subject Matter Experts (SMEs) provide expertise in a specific area.

- Annotators perform data annotation tasks.

- Quality Assurance (QA) professionals review annotations.

Qualified annotators and their ongoing training are key to a successful project. Hiring qualified annotators is crucial. Regular reviews and feedback help maintain high standards.

A platform like Keymakr has a professional team and many tools. This ensures correct and accurate results.

Integrating advanced methods into data labeling

Synthetic labeling using GAN, VAE, and AR models produces high-quality training data. This method is suitable for projects with limited examples. Synthetic data helps train AI models to recognize rare diseases in medical imaging.

GANs and VAEs generate different types of synthetic data, which allows the datasets to be adapted to specific tasks.

Data programming for weak supervision effectively reduces the need for manual labeling. This method is useful in projects with weak supervision where accurate labels are a challenge.

In NLP tasks, labels can be generated on pre-trained models. This approach speeds up the data annotation process and improves the data's consistency and accuracy.

Integrating synthetic labeling and data programming helps to avoid data scarcity and improve the accuracy of predictions.

Future Trends and Innovations in Data Labeling

One of the innovations is the increase in active learning platforms. These systems annotate data on the most informative examples, which reduces the workload.

Synthetic data generation uses GANs and VAEs to create high-quality training examples. This approach is needed for projects with small amounts of data.

Focus on the dataset's quality, not the model's complexity. This trend shows the importance of accurate labels and diverse data representation.

FAQ

What are the strategies for labeling subsets of data?

Strategies include defining clear annotation guidelines, using active learning techniques, and implementing quality control measures.

How does labeling impact machine learning models?

Accurate data annotations ensure that the learning algorithm can generalize and make reliable predictions.

What are the benefits of using automated labeling tools?

Automated tools can speed up the labeling process, reduce costs, and improve consistency.

How can you ensure consistency in data annotation across a team?

Consistency can be achieved through annotation guidelines, training, and annotators' agreement metrics.

What tools are recommended for data labeling projects?

Open source platform tools as well as commercial solutions. The choice depends on the complexity of the project and requirements.

What are the emerging trends to watch for in data labeling?

The adoption of synthetic data, active learning techniques, and the shift to data-driven AI approaches.

Comments ()