Speed and Efficiency of YOLOv10

YOLOv10, the latest in the YOLO series, brings groundbreaking advancements. It eliminates non-maximum suppression (NMS) and optimizes model components, which improves performance while reducing computational overhead. It addresses key limitations of previous versions and expands the possibilities in object detection.

With state-of-the-art accuracy-latency trade-offs across multiple model scales, YOLOv10 caters to a wide range of applications, including autonomous vehicles, robotics, and surveillance systems. Its enhanced speed and efficiency make it a game-changer in real-time object detection.

Key Takeaways

- The smallest variant, YOLOv10-N, runs at 1.84 ms per image, roughly 540 frames per second

- Eliminates non-maximum suppression for improved efficiency

- Offers superior accuracy-latency trade-offs across various scales

- Addresses limitations of previous YOLO versions

- Suitable for diverse applications from autonomous driving to surveillance

Introduction to YOLOv10

YOLOv10 represents a major advancement in the evolution of YOLO, pushing the limits of object detection. Introduced in May 2024, it significantly improves real-time performance in computer vision tasks.

Evolution of YOLO Series

The YOLO series has continuously transformed object detection, and YOLOv10 leads this evolution with higher mean Average Precision (mAP) scores than its predecessors. On the MS COCO dataset, it surpasses YOLOv9, YOLOv8, and YOLOv7, showing notable gains in Average Precision (AP).

Key Advancements in YOLOv10

YOLOv10 introduces several groundbreaking features:

- Consistent dual assignments for NMS-free training

- Lightweight classification head to reduce computational redundancy

- Spatial-channel decoupled downsampling for optimized feature extraction

- Large-kernel convolutions for a larger receptive field and more context

- Effective partial self-attention module for improved accuracy

Impact on Real-Time Object Detection

YOLOv10's advancements significantly impact real-time object detection, delivering strong accuracy and low end-to-end latency across model scales. Compared to YOLOv8, YOLOv10 achieves higher mAP with fewer parameters and computations.

| Model | Parameters | FLOPs | APval 50-95 (%) | Latency (ms) |
|---|---|---|---|---|
| YOLOv10-N | 2.3M | 6.7G | 38.5 | 1.84 |
| YOLOv10-S | 7.2M | 21.6G | 46.3 | 2.49 |
| YOLOv10-M | 15.4M | 59.1G | 51.1 | 4.74 |
| YOLOv10-X | 29.5M | 160.4G | 54.4 | 10.70 |

YOLOv10's efficiency is clear in its performance metrics. For example, YOLOv10-S is 1.8 times faster than RT-DETR-R18 with similar AP on COCO, having 2.8 times fewer parameters and FLOPs. These enhancements make YOLOv10 a pivotal innovation for real-time object detection applications.

YOLOv10 Architecture Overview

The YOLOv10 architecture marks a major advancement in object detection network design, with new features that boost both efficiency and precision. It builds on its predecessors while offering significant improvements.

At its heart, YOLOv10 features a refined CSPNet backbone for extracting features. It also includes a PAN neck, which excels in fusing features across multiple scales. The model's dual-head design is unique, combining a One-to-Many Head and a One-to-One Head.

During training, the One-to-Many Head assigns multiple predictions to each object, which provides rich supervision and improves accuracy. The One-to-One Head, trained jointly with it, assigns a single prediction per object and is the only head used at inference. Because each object already yields one prediction, Non-Maximum Suppression (NMS) is no longer needed, which greatly reduces latency.
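To make the inference-time difference concrete, here is a minimal sketch of decoding outputs from a one-to-one head: since each object is expected to produce a single prediction, post-processing reduces to keeping the highest-scoring candidates above a confidence threshold, with no overlap-based suppression. The function name, the `conf_thres` and `max_det` parameters, and the array layout are illustrative assumptions, not YOLOv10's actual code; for simplicity it keeps only the best class per candidate.

```python
import numpy as np

def nms_free_decode(boxes, scores, conf_thres=0.25, max_det=300):
    """Select final detections from one-to-one head outputs without NMS.

    boxes:  (N, 4) array of candidate boxes (x1, y1, x2, y2)
    scores: (N, C) array of per-class confidences
    Returns (boxes, scores, class_ids) for at most max_det detections.
    """
    class_ids = scores.argmax(axis=1)      # best class for each candidate
    best = scores.max(axis=1)              # and its confidence
    order = np.argsort(-best)[:max_det]    # top-k candidates by confidence
    order = order[best[order] >= conf_thres]  # threshold only; no suppression step
    return boxes[order], best[order], class_ids[order]

# Usage: three candidates, two classes.
boxes = np.array([[0, 0, 10, 10], [50, 50, 80, 90], [5, 5, 12, 12]], dtype=float)
scores = np.array([[0.9, 0.1], [0.2, 0.7], [0.05, 0.1]])
b, s, c = nms_free_decode(boxes, scores)
print(s, c)  # keeps candidates 0 and 1; candidate 2 (best score 0.1) is below the threshold
```

The cost of this step is a sort and a comparison, independent of how many predictions overlap, which is the property that lets NMS-free models keep latency predictable.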

YOLOv10's lightweight classification head uses two 3×3 depthwise separable convolutions followed by a 1×1 convolution, minimizing computational overhead while maintaining high performance. The model also decouples spatial downsampling from channel transformation: a pointwise (1×1) convolution adjusts the channel count, and a depthwise convolution then reduces the spatial resolution.
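A rough parameter count shows why depthwise separable convolutions shrink the classification head. The sketch below ignores biases and normalization layers and assumes a hypothetical 256-channel input and 80 classes (the COCO class count); it compares a conventional head of two standard 3×3 convolutions plus a 1×1 classifier against the lightweight layout described above.

```python
def conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out

def dw_separable_params(c_in, c_out, k):
    """Depthwise k x k conv (one filter per channel) followed by a 1x1 pointwise conv."""
    return k * k * c_in + c_in * c_out

def standard_head(c, num_classes):
    # two standard 3x3 convs, then a 1x1 classifier
    return 2 * conv_params(c, c, 3) + conv_params(c, num_classes, 1)

def lightweight_head(c, num_classes):
    # two 3x3 depthwise separable convs, then the same 1x1 classifier
    return 2 * dw_separable_params(c, c, 3) + conv_params(c, num_classes, 1)

C, NC = 256, 80  # hypothetical channel width; 80 = number of COCO classes
print(standard_head(C, NC))     # 1200128
print(lightweight_head(C, NC))  # 156160
```

Under these assumptions the lightweight head needs roughly 7.7 times fewer weights, and most of the saving comes from replacing the dense 3×3 kernels.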

Another significant innovation in YOLOv10's design is the rank-guided block design. It identifies redundant stages and replaces their blocks with a compact inverted block (CIB) structure, improving efficiency without compromising accuracy. Together with its other changes, this helps YOLOv10 surpass its predecessors, achieving a mean average precision (mAP) of 45.6% versus 43.2% for YOLOv9 and 41.5% for YOLOv8.
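Redundancy here is judged by how many independent directions a stage's weights actually use. A minimal sketch of such a measure is a numerical rank computed with an SVD; the threshold of half the largest singular value is an assumption for this example, and applying it to a single convolution kernel per stage is likewise illustrative. A low rank relative to the layer's width suggests the stage is a candidate for a more compact block.

```python
import numpy as np

def numerical_rank(weight, rel_thresh=0.5):
    """Count singular values above rel_thresh * largest singular value.

    weight: conv kernel of shape (c_out, c_in, k, k), flattened to a 2-D matrix.
    rel_thresh=0.5 is an assumed threshold for this sketch.
    """
    mat = weight.reshape(weight.shape[0], -1)
    s = np.linalg.svd(mat, compute_uv=False)  # singular values, descending
    return int((s > rel_thresh * s[0]).sum())

rng = np.random.default_rng(0)
# A random (full-rank) kernel vs. one built from only 4 independent filters.
full = rng.standard_normal((64, 32, 3, 3))
low = (rng.standard_normal((64, 4)) @ rng.standard_normal((4, 32 * 9))).reshape(64, 32, 3, 3)
print(numerical_rank(full), numerical_rank(low))  # the second value is at most 4
```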

These architectural enhancements lead to tangible real-world benefits. YOLOv10 shows a 15% increase in inference speed over YOLOv9 and a 25% improvement over YOLOv8. This combination of speed and accuracy makes YOLOv10 a leading solution for real-time object detection tasks.

Efficiency of YOLOv10

YOLOv10 introduces groundbreaking advancements in computational optimization and resource utilization. This version marks a significant leap in efficiency for the YOLO series and sets new standards for real-time object detection.

Computational Efficiency Improvements

YOLOv10's computational efficiency is evident in its streamlined architecture. Its lightweight classification head uses depthwise separable convolutions, which significantly reduces the computational load and allows faster processing without compromising accuracy.

Resource Usage Optimization

YOLOv10 optimizes resource usage in two further ways. Spatial-channel decoupled downsampling minimizes information loss while keeping computational costs low, and the rank-guided block design avoids spending parameters on redundant stages.

Speed Enhancements over Previous Versions

The speed enhancements in YOLOv10 are substantial. Compared to YOLOv8 models of the same scale, YOLOv10 variants reduce latency by 37% to 70%, which makes them well suited to applications requiring real-time object detection.

To put these improvements into perspective, let's look at some comparative data:

- YOLOv10-S is 1.8 times faster than RT-DETR-R18 with similar accuracy

- YOLOv10-B has 46% less latency and 25% fewer parameters compared to YOLOv9-C while maintaining performance

- YOLOv10 variants improve AP by up to 1.4 points over their YOLOv8 counterparts
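Speedup factors and percentage latency reductions express the same change in different units, which is easy to conflate in comparisons such as "1.8 times faster" versus "46% less latency". The conversion is reduction = 1 - 1/speedup, sketched below with figures quoted in this article.

```python
def latency_reduction(speedup):
    """Fraction of latency removed when a model runs `speedup` times faster."""
    return 1.0 - 1.0 / speedup

def speedup_from_reduction(reduction):
    """Speedup factor implied by a fractional latency reduction."""
    return 1.0 / (1.0 - reduction)

print(f"{latency_reduction(1.8):.1%}")         # 44.4%
print(f"{speedup_from_reduction(0.46):.2f}x")  # 1.85x
print(f"{speedup_from_reduction(0.37):.2f}x")  # 1.59x
print(f"{speedup_from_reduction(0.70):.2f}x")  # 3.33x
```

So a 1.8x speedup corresponds to about 44% less latency, and latency reductions of 37% to 70% correspond to speedups of roughly 1.6x to 3.3x.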

These enhancements in YOLOv10 efficiency, computational optimization, and resource utilization make it a game-changer in the field of object detection. Its ability to deliver high accuracy with reduced computational requirements opens up new possibilities for applications in agriculture and beyond. It pushes the boundaries of what's possible in real-time image analysis.

NMS-Free Training: A Game-Changing Approach

YOLOv10 introduces a revolutionary NMS-free training approach that transforms object detection. This innovative method significantly reduces object detection latency while maintaining high accuracy levels.

Consistent Dual Assignments

The core of YOLOv10's NMS-free training lies in its consistent dual assignments. This technique combines one-to-many and one-to-one strategies during the training process. By aligning supervision between both strategies, YOLOv10 enhances prediction quality and simplifies deployment.
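The consistent matching metric can be sketched as follows. It scores each candidate prediction as m = s · p^α · IoU^β, where s is a spatial prior (whether the anchor point lies inside the object), p is the classification score for the ground-truth class, and IoU measures box overlap. Treat the exact form, the defaults α = 0.5 and β = 6, and the top-k value of 5 as assumptions for illustration. Using the same α and β for both branches means the single one-to-one positive is also the best-ranked one-to-many positive.

```python
import numpy as np

def matching_metric(spatial_prior, cls_score, iou, alpha=0.5, beta=6.0):
    """m = s * p**alpha * IoU**beta for each candidate prediction.

    spatial_prior: 1 if the anchor point lies inside the ground-truth box, else 0
    cls_score:     predicted probability of the ground-truth class
    iou:           IoU between predicted and ground-truth boxes
    """
    return spatial_prior * cls_score**alpha * iou**beta

rng = np.random.default_rng(1)
n = 20  # candidate predictions for a single ground-truth object
s = (rng.random(n) > 0.3).astype(float)
p = rng.random(n)
iou = rng.random(n)

m = matching_metric(s, p, iou)
one_to_many = np.argsort(-m)[:5]  # top-k positives (k=5 chosen for illustration)
one_to_one = int(np.argmax(m))    # single positive for the one-to-one head

# Same alpha/beta for both branches: the one-to-one pick is the top one-to-many pick,
# so both heads are supervised toward the same best prediction.
print(one_to_one, one_to_many)
```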

Elimination of Non-Maximum Suppression

YOLOv10's dual label assignments and consistent matching metric remove the need for Non-Maximum Suppression (NMS) during inference. Earlier YOLO versions relied on NMS to prune duplicate boxes after the network's forward pass; dropping this post-processing step streamlines the detection pipeline and simplifies end-to-end deployment.
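For contrast, the sketch below is a textbook greedy NMS, the kind of sequential post-processing that NMS-based YOLO pipelines run after the network and that a one-to-one head makes unnecessary. It is a generic illustration, not code from any YOLO release. The loop runs once per kept box, which is one reason NMS adds latency that grows with the number of candidates.

```python
import numpy as np

def iou(box, others):
    """IoU between one box and an array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], others[:, 0])
    y1 = np.maximum(box[1], others[:, 1])
    x2 = np.minimum(box[2], others[:, 2])
    y2 = np.minimum(box[3], others[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (others[:, 2] - others[:, 0]) * (others[:, 3] - others[:, 1])
    return inter / (area + areas - inter)

def greedy_nms(boxes, scores, iou_thres=0.5):
    """Keep the best box, drop boxes overlapping it above iou_thres, repeat."""
    order = np.argsort(-scores)
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        order = rest[iou(boxes[best], boxes[rest]) <= iou_thres]
    return keep

boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [50, 50, 60, 60]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(greedy_nms(boxes, scores))  # [0, 2]: box 1 overlaps box 0 with IoU of about 0.68
```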

Impact on Inference Latency

The NMS-free training approach has a profound impact on inference latency. The smallest variant, YOLOv10-N, has an end-to-end latency of 1.84 ms per image, roughly 540 frames per second. This represents a significant improvement in real-time processing capabilities.

| Model | Latency reduction vs. YOLOv8 | AP improvement vs. YOLOv8 (points) |
|---|---|---|
| YOLOv10-N | 70% | +1.2 |
| YOLOv10-S | 65% | +1.4 |
| YOLOv10-X | 37% | +0.5 |

These improvements in latency and accuracy make YOLOv10 a top choice for real-time object detection tasks. The NMS-free training approach not only enhances performance but also simplifies the overall detection process. This makes it easier to implement and deploy in various applications.

Holistic Model Design Strategy

YOLOv10's model design follows a holistic strategy that balances efficiency and accuracy to improve real-time object detection performance. Its architecture includes several innovative features to achieve this balance.

The core of YOLOv10's strategy is its lightweight classification head. It employs two depthwise separable convolutions with a 3×3 kernel size. This design leads to significant computational efficiency gains without compromising detection accuracy.

Another key feature is the spatial-channel decoupled down-sampling. This technique reduces computational costs while enhancing feature extraction. It's a clever way to improve inference times without compromising on performance.
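The savings from decoupling can be estimated by counting weights and multiply-accumulate operations (MACs). For an H×W×C input, a 3×3 stride-2 convolution that halves the resolution and doubles the channels needs 18C² weights and about (9/2)HWC² MACs. Doing the channel change with a 1×1 convolution first and the downsampling with a 3×3 stride-2 depthwise convolution needs 2C² + 18C weights and 2HWC² + (9/2)HWC MACs. The sketch below evaluates both for a hypothetical 80×80, 128-channel feature map, ignoring biases and normalization.

```python
def standard_downsample(h, w, c):
    """3x3 stride-2 conv mapping (h, w, c) -> (h/2, w/2, 2c). Returns (params, MACs)."""
    params = 3 * 3 * c * 2 * c
    macs = (h // 2) * (w // 2) * params
    return params, macs

def decoupled_downsample(h, w, c):
    """1x1 conv c -> 2c at full resolution, then a 3x3 stride-2 depthwise conv."""
    pw_params = c * 2 * c      # pointwise: channel transformation
    dw_params = 3 * 3 * 2 * c  # depthwise: one 3x3 filter per channel
    macs = h * w * pw_params + (h // 2) * (w // 2) * dw_params
    return pw_params + dw_params, macs

# Hypothetical stage: 80x80 feature map with 128 channels.
print(standard_downsample(80, 80, 128))   # (294912, 471859200)
print(decoupled_downsample(80, 80, 128))  # (35072, 213401600)
```

In this example the decoupled version uses about 8 times fewer weights and roughly 45% of the MACs.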

The YOLOv10 model design also includes a rank-guided block design for efficiency. This approach assesses stage redundancy and optimizes block structure. It's part of what makes YOLOv10 up to 15% faster in inference speed compared to its predecessors.
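The stage-by-stage procedure can be sketched as a greedy loop: visit stages from the lowest rank (most redundant) upward and keep each compact-block replacement only while accuracy holds. The `evaluate` callback, the stage names, and the `tolerance` parameter are hypothetical. In practice each evaluation would mean training and validating a model, and this is not YOLOv10's actual search code.

```python
from typing import Callable, Dict, List

def rank_guided_replacement(
    stage_ranks: Dict[str, int],
    evaluate: Callable[[List[str]], float],
    baseline_ap: float,
    tolerance: float = 0.0,
) -> List[str]:
    """Greedy sketch: swap in compact blocks, most redundant stage first.

    stage_ranks: numerical rank per stage (lower = more redundant).
    evaluate:    hypothetical callback returning the AP of a model in which the
                 given stages use compact blocks.
    Stops at the first replacement that costs more than `tolerance` AP.
    """
    replaced: List[str] = []
    for stage in sorted(stage_ranks, key=stage_ranks.get):
        candidate = replaced + [stage]
        if evaluate(candidate) < baseline_ap - tolerance:
            break
        replaced = candidate
    return replaced

def toy_eval(stages: List[str]) -> float:
    # Toy stand-in: AP drops only once the high-rank stage1 is replaced.
    return 50.0 - (1.0 if "stage1" in stages else 0.0)

ranks = {"stage1": 60, "stage2": 45, "stage3": 20, "stage4": 12}
print(rank_guided_replacement(ranks, toy_eval, baseline_ap=50.0))
# ['stage4', 'stage3', 'stage2']
```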

On the accuracy front, YOLOv10 employs large-kernel convolutions. These expand the receptive field, allowing the model to capture more context. Partial self-attention modules are also incorporated, improving global representation learning with minimal overhead.
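The idea behind partial self-attention can be shown with a simplified sketch: split the channels in two, run self-attention over all spatial positions on one half only, and concatenate the halves again. This is an assumption-laden simplification (single head, random weights standing in for learned ones, no feed-forward layer or 1×1 fusion convolutions), not YOLOv10's PSA module. Because the attention map grows with the square of the number of positions, such a module is cheapest on a low-resolution feature map, and halving the channels further limits the projection cost.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def partial_self_attention(feat, rng):
    """Apply single-head self-attention to half of the channels only.

    feat: (h, w, c) feature map with even c. The first half passes through
    unchanged; random projections stand in for learned weights.
    """
    h, w, c = feat.shape
    half = c // 2
    a, b = feat[..., :half], feat[..., half:]
    tokens = b.reshape(h * w, half)  # one token per spatial position
    wq, wk, wv = (rng.standard_normal((half, half)) / np.sqrt(half) for _ in range(3))
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    attn = softmax(q @ k.T / np.sqrt(half))  # (h*w, h*w): global interactions
    b_out = tokens + attn @ v                # residual connection
    return np.concatenate([a, b_out.reshape(h, w, half)], axis=-1)

rng = np.random.default_rng(0)
x = rng.standard_normal((20, 20, 64))
y = partial_self_attention(x, rng)
print(y.shape)  # (20, 20, 64)
```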

| Feature | Purpose | Impact |
|---|---|---|
| Lightweight classification head | Reduce computational overhead | Improved efficiency |
| Spatial-channel decoupled down-sampling | Efficient downsampling | Enhanced feature extraction |
| Rank-guided block design | Optimize model structure | Increased inference speed |
| Large-kernel convolutions | Expand receptive field | Improved accuracy |
| Partial self-attention modules | Enhance global representation | Better detection performance |

This holistic approach to YOLOv10's model design results in a series of variants (N/S/M/B/L/X) that cater to different needs. From resource-constrained environments to high-performance applications, YOLOv10 offers a solution that maintains an optimal efficiency-accuracy balance.

YOLOv10 Model Variants and Performance

YOLOv10 introduces a variety of model variants, each designed to meet specific needs in object detection. These models cater to different computational resources and accuracy levels. This makes YOLOv10 adaptable for a wide range of applications.

Nano and Small Variants

The nano version, YOLOv10-N, is ideal for environments with limited resources. It has 2.3M parameters and 6.7G FLOPs and reaches 38.5% APval at 1.84 ms latency. YOLOv10-S balances speed and accuracy, with 7.2M parameters and 21.6G FLOPs, achieving 46.3% APval at 2.49 ms latency.

Medium and Balanced Versions

YOLOv10-M is suitable for general use, with 15.4M parameters and 59.1G FLOPs. It reaches 51.1% APval at 4.74ms latency. YOLOv10-B, the balanced version, enhances performance while maintaining efficiency.

Large and Extra-Large Variants

YOLOv10-L aims for higher accuracy with 24.4M parameters and 120.3G FLOPs, achieving 53.2% APval at 7.28ms latency. YOLOv10-X, for maximum performance, has 29.5M parameters and 160.4G FLOPs, reaching 54.4% APval with a 10.70ms latency.

| Model Variant | Parameters | FLOPs | APval | Latency (ms) |
|---|---|---|---|---|
| YOLOv10-S | 7.2M | 21.6G | 46.3% | 2.49 |
| YOLOv10-M | 15.4M | 59.1G | 51.1% | 4.74 |
| YOLOv10-L | 24.4M | 120.3G | 53.2% | 7.28 |
| YOLOv10-X | 29.5M | 160.4G | 54.4% | 10.70 |
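Latency and throughput are reciprocal: with batch size 1 and frames processed one after another, frames per second equals 1000 divided by the latency in milliseconds. The sketch below applies this to the latencies in the variant table; it ignores pre-processing, I/O, and batching, so throughput in a real pipeline will differ.

```python
def fps_from_latency(latency_ms):
    """Frames per second for sequential, batch-size-1 inference."""
    return 1000.0 / latency_ms

# Latencies (ms) copied from the variant table above.
latency_ms = {"YOLOv10-S": 2.49, "YOLOv10-M": 4.74, "YOLOv10-L": 7.28, "YOLOv10-X": 10.70}

for name, ms in latency_ms.items():
    print(f"{name}: {fps_from_latency(ms):.0f} FPS")
# YOLOv10-S: 402 FPS
# YOLOv10-M: 211 FPS
# YOLOv10-L: 137 FPS
# YOLOv10-X: 93 FPS
```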

YOLOv10 model variants show significant advancements over previous versions. Compared with their YOLOv8 counterparts, they improve AP by up to 1.4 points while using 28% to 57% fewer parameters, 23% to 38% fewer FLOPs, and 37% to 70% lower latency.

Benchmarking YOLOv10 Against Other Models

YOLOv10 has set new benchmarks in object detection, surpassing its predecessors and other top models. The YOLOv10 family includes six variants, each designed for specific tasks and computational needs.

Now, let's explore the YOLOv10 benchmarks and compare it with other object detection models:

| Model | APval (%) | Parameters (M) | FLOPs (G) | Latency (ms) |
|---|---|---|---|---|
| YOLOv10-N | 38.5 | 2.3 | 6.7 | 1.84 |
| YOLOv10-S | 46.3 | 7.2 | 21.6 | 2.49 |
| YOLOv10-M | 51.1 | 15.4 | 59.1 | 4.74 |
| YOLOv10-X | 54.4 | 29.5 | 160.4 | 10.70 |

YOLOv10 has made significant strides over both its predecessors and other real-time detectors. For example, YOLOv10-S is 1.8 times faster than RT-DETR-R18 with 2.8 times fewer parameters and FLOPs, and YOLOv10-B reduces latency by 46% compared to YOLOv9-C while maintaining similar performance.

The architectural innovations in YOLOv10 contribute to its superior performance:

- Enhanced Backbone using CSPNet

- PAN layers in the Neck for multiscale feature fusion

- Dual One-to-Many and One-to-One Heads for training and inference

- NMS-Free Training, eliminating the need for non-maximum suppression
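To see how many candidates the multiscale head produces, the sketch below counts grid positions across output scales. It assumes strides of 8, 16, and 32, as in YOLOv8-style anchor-free heads; the list above does not state the strides, so treat them as an assumption. In an NMS-based pipeline every one of these candidates feeds post-processing, whereas the one-to-one head is trained so that each object ends up with a single confident prediction.

```python
def num_predictions(img_size=640, strides=(8, 16, 32)):
    """Count grid positions (one prediction each) across detection scales for a square input."""
    return sum((img_size // s) ** 2 for s in strides)

print(num_predictions())      # 8400  (80*80 + 40*40 + 20*20)
print(num_predictions(1280))  # 33600 (160*160 + 80*80 + 40*40)
```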

These advancements lead to faster image processing without a significant increase in parameters, which makes YOLOv10 well suited to real-time applications. YOLOv8 remains an accurate and robust option, but YOLOv10's speed gives it an edge in time-sensitive scenarios.

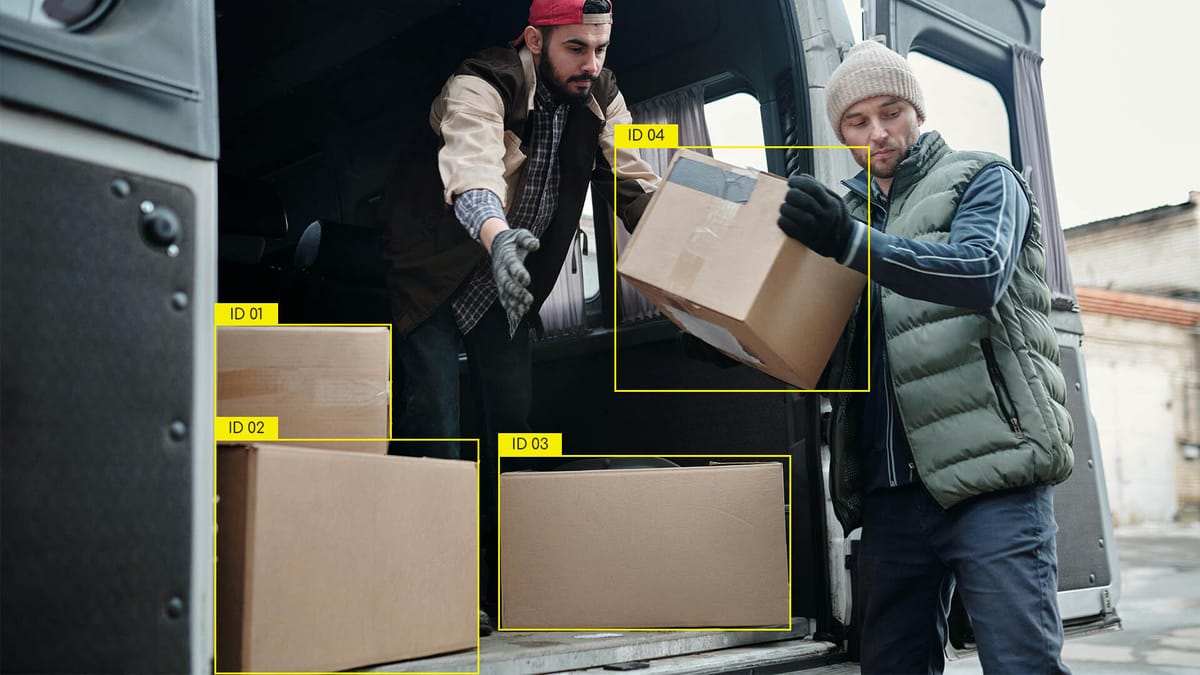

Real-World Applications of YOLOv10

YOLOv10's remarkable speed and efficiency are transforming various industries. Its advanced performance is revolutionizing tasks in autonomous driving, robotics, and security. This technology is changing how we approach complex challenges.

Autonomous Driving

In self-driving cars, YOLOv10 stands out: YOLOv10-S, for example, runs 1.8 times faster than RT-DETR-R18 at similar accuracy. Faster inference means quicker detection of pedestrians, traffic signs, and obstacles, which can contribute to safer roads and smoother rides.

Robotics and Automation

The manufacturing sector sees significant benefits from YOLOv10. Robots equipped with this technology navigate warehouses more efficiently and spot defects accurately. In healthcare, YOLOv10 helps medical staff quickly identify and track essential equipment.

Surveillance and Security

Security systems are greatly enhanced by YOLOv10, which enables seamless tracking of individuals or vehicles across multiple feeds. YOLOv10-B, for instance, has 25% fewer parameters than YOLOv9-C at similar accuracy, so it uses fewer resources without losing vigilance, helping to keep public spaces safer.

FAQ

What makes YOLOv10 stand out in terms of speed and efficiency?

YOLOv10 introduces several innovations that significantly boost its speed and efficiency. It eliminates the need for non-maximum suppression (NMS) during inference, reducing post-processing overhead. Additionally, it optimizes model components such as the lightweight classification head, spatial-channel decoupled down-sampling, and rank-guided block design. This results in up to 70% lower latency and fewer parameters compared to YOLOv8 models of the same scale.

How does YOLOv10 advance the YOLO series for real-time object detection?

YOLOv10 builds upon the success of previous YOLO models with key innovations in architecture and design strategies. It introduces consistent dual assignments for NMS-free training, a holistic efficiency-accuracy driven model design, and enhanced model capabilities like large-kernel convolutions and partial self-attention modules. These advancements significantly improve real-time object detection performance while reducing computational overhead.

Can you explain the architectural components of YOLOv10?

YOLOv10's architecture consists of an enhanced CSPNet backbone for feature extraction, a PAN neck for multiscale feature fusion, and a dual-head design. The One-to-Many Head generates multiple predictions per object during training, while the One-to-One Head produces a single best prediction per object during inference, eliminating the need for NMS.

How does YOLOv10 optimize computational efficiency?

YOLOv10 employs several strategies to enhance computational efficiency. It uses a lightweight classification head with depth-wise separable convolutions, spatial-channel decoupled down-sampling to minimize information loss and computational cost, and rank-guided block design for optimal parameter utilization. These improvements result in significant reductions in latency and parameter count while maintaining or improving accuracy.

What is the impact of consistent dual assignments in YOLOv10?

YOLOv10 implements consistent dual assignments, combining one-to-many and one-to-one strategies during training. This approach eliminates the need for non-maximum suppression during inference, significantly reducing latency. The consistent matching metric aligns supervision between both strategies, enhancing prediction quality and simplifying deployment.

How does YOLOv10's holistic model design strategy balance efficiency and accuracy?

YOLOv10 employs a holistic efficiency-accuracy driven model design strategy. For efficiency, it incorporates a lightweight classification head, spatial-channel decoupled down-sampling, and rank-guided block design. For accuracy, it utilizes large-kernel convolutions to enlarge the receptive field and partial self-attention modules for improved global representation learning with minimal overhead.

What are the different model variants offered by YOLOv10?

YOLOv10 offers six model variants catering to different application needs: Nano (N), Small (S), Medium (M), Balanced (B), Large (L), and Extra-large (X). These variants range from 2.3M to 29.5M parameters and 6.7G to 160.4G FLOPs, providing options for various computational resources and accuracy requirements.

How does YOLOv10 perform compared to other state-of-the-art object detection models?

YOLOv10 outperforms previous YOLO versions and other state-of-the-art models in terms of accuracy and efficiency. For example, YOLOv10-S is 1.8x faster than RT-DETR-R18 with similar AP on the COCO dataset, and YOLOv10-B has 46% less latency and 25% fewer parameters than YOLOv9-C with comparable performance. Across all variants, YOLOv10 demonstrates superior accuracy-latency trade-offs.

What are some potential real-world applications of YOLOv10?

YOLOv10's improved speed and accuracy make it ideal for various real-world applications. In autonomous driving, it enhances pedestrian detection, traffic sign recognition, and obstacle avoidance. For robotics and automation, it enables efficient warehouse navigation, defect detection in manufacturing, and assistance in healthcare settings. In surveillance and security, it provides real-time tracking of individuals or vehicles across multiple camera feeds, enhancing overall system effectiveness.
