Step-by-Step Guide to Training YOLOv10

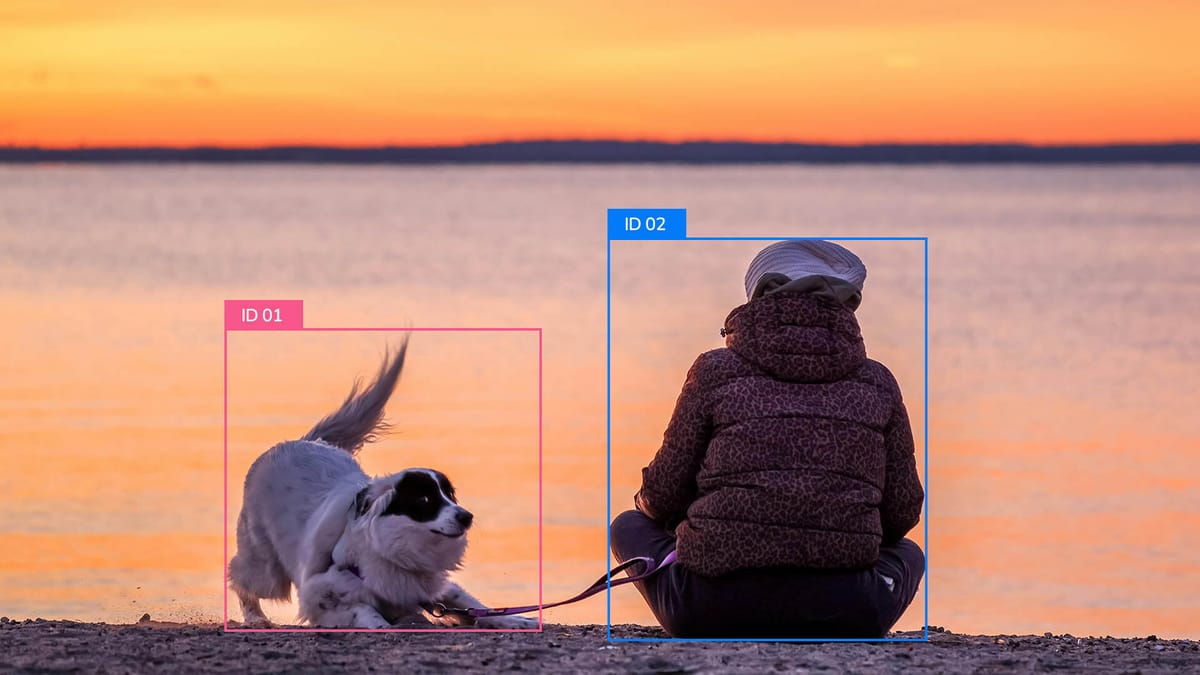

This guide walks through training YOLOv10, a significant advance in real-time object detection. Released on May 23, 2024 by researchers at Tsinghua University, YOLOv10 improves detection performance while reducing computational cost.

YOLOv10 comes in six variants (N, S, M, B, L, and X), each aimed at a different speed and accuracy budget. The lightweight YOLOv10-N has only 2.3 million parameters, while the largest, YOLOv10-X, has 29.5 million. YOLOv10-S is notable for running 1.8 times faster than RT-DETR-R18 at similar accuracy.

This guide will navigate you through the intricacies of leveraging YOLOv10 for your object detection endeavors. You will discover the steps to set up, train, and deploy this cutting-edge model. This will empower you to extend the frontiers of real-time object detection.

Key Takeaways

- YOLOv10 offers six variants for different performance needs

- YOLOv10-S is 1.8x faster than RT-DETR-R18 with similar accuracy

- The model achieves lower latency with fewer parameters

- YOLOv10-B shows 46% less latency than YOLOv9-C

- Improved efficiency in both speed and computational resources

- Eliminates need for non-maximum suppression during inference

- Suitable for various applications including surveillance and traffic monitoring

Introduction to YOLOv10 and Its Advancements

YOLOv10 represents a major advancement in object detection technology. This version of the YOLO series enhances both speed and accuracy, transforming real-time video processing and image recognition. It stands out for its significant improvements.

Brief history of YOLO models

Since their creation, YOLO models have led the field of object detection. These neural networks use a single-shot method, simultaneously detecting and classifying objects. Each version of YOLO has built upon the last, enhancing its capabilities.

Key improvements in YOLOv10

YOLOv10 brings several innovative features:

- NMS-Free training, eliminating the need for Non-Maximum Suppression during inference

- Consistent dual assignments, combining one-to-one and one-to-many matching strategies

- Efficiency-driven model design, reducing computational costs

These enhancements lead to better performance in various metrics:

| Metric | Improvement |
|---|---|
| Average Precision (AP) | Up to 1.4% increase |
| Latency | 37% to 70% reduction |
| Parameters (YOLOv10-L vs YOLOv8-L) | 1.8 times fewer |

Applications in real-time object detection

YOLOv10's advanced features make it perfect for real-time applications:

- Autonomous vehicles

- Surveillance systems

- Robotics

The model excels in real-time video processing, with the smallest variant processing images in just 1 millisecond. This achievement enables new possibilities for edge devices, expanding the use of neural networks in everyday technology.

Setting Up Your Environment for YOLOv10

A working environment is the first requirement for training YOLOv10. This section covers where to run the model, how to obtain pre-trained weights, and how to check that the installation works.

Google Colab is a prime choice for running YOLOv10. It offers free GPU access, making it perfect for training complex AI models. Begin by installing YOLOv10 from source using pip. Then, download pre-trained weights for various YOLOv10 versions (n, s, m, b, x, l) trained on the Microsoft COCO dataset.

For the best YOLOv10 setup, tailor your data.yaml file with details like training datasets, validation sets, class numbers, and class names. This is crucial for customizing YOLOv10 for your specific object detection needs.
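As a rough sketch, the snippet below builds the text of a minimal data.yaml using only the standard library. The helper `build_data_yaml`, the directory names, and the class name `stone` are hypothetical. The keys `path`, `train`, `val`, `nc`, and `names` follow the dataset layout Ultralytics commonly documents, so check them against the version you install.

```python
def build_data_yaml(root: str, class_names: list[str]) -> str:
    """Return the text of a minimal dataset config (hypothetical layout)."""
    lines = [
        f"path: {root}",            # dataset root directory
        "train: images/train",      # training images, relative to path
        "val: images/val",          # validation images, relative to path
        f"nc: {len(class_names)}",  # number of classes
        "names:",
    ]
    # One "index: name" entry per class, in label-index order.
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


yaml_text = build_data_yaml("datasets/kidney_stones", ["stone"])
print(yaml_text)
```

Saving `yaml_text` to a file named data.yaml gives you a config you can point the training command at.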

Once your environment is set, test the model with a sample image. This confirms the model's functionality and prepares you for training your custom YOLOv10 model. You can adjust parameters like epochs, batch size, and plotting options during this step.
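A smoke test along these lines might look like the sketch below. It assumes the `ultralytics` package is installed and that your installed version can load the weight file `yolov10n.pt`; the function name `smoke_test` and the image path `sample.jpg` are placeholders.

```python
def smoke_test(weights: str = "yolov10n.pt", image: str = "sample.jpg"):
    """Run one prediction and return (class_name, confidence) pairs."""
    # Imported lazily so this file still loads before ultralytics is installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    result = model.predict(image, conf=0.25)[0]  # first (and only) image
    return [
        (result.names[int(cls)], float(conf))
        for cls, conf in zip(result.boxes.cls, result.boxes.conf)
    ]
```

Calling `smoke_test()` on an image with familiar objects should return a non-empty list; an empty list or an exception suggests the install or the weights need another look.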

YOLOv10 offers higher accuracy and faster performance compared to previous YOLO models, enhancing object detection capabilities.

With your deep learning environment set up, you're now prepared to leverage YOLOv10's power for top-tier object detection tasks.

Preparing Your Dataset for YOLOv10 Training

Dataset quality largely determines how well a trained YOLOv10 model performs. Let's explore the essential steps for dataset preparation.

Collecting and Organizing Image Data

Begin by collecting a diverse range of images pertinent to your object detection needs. Place these images into folders, distinguishing them into training and validation subsets. It's crucial to include images with varied lighting, angles, and backgrounds for the best results.
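One way to produce the training and validation subsets is a reproducible shuffle and split. The sketch below works on file names only; the 80/20 ratio, the seed, and the function name `split_dataset` are illustrative assumptions, not something YOLOv10 requires.

```python
import random


def split_dataset(image_names: list[str], val_fraction: float = 0.2, seed: int = 0):
    """Shuffle file names reproducibly and split them into train and val lists."""
    names = sorted(image_names)  # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(names)
    n_val = max(1, round(len(names) * val_fraction))
    return names[n_val:], names[:n_val]  # (train, val)


train, val = split_dataset([f"img_{i:03d}.jpg" for i in range(10)])
print(len(train), len(val))  # prints "8 2"
```

After splitting, move or copy each image together with its annotation file so the pairs stay in the same subset.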

Image Annotation

Annotation is a vital part of dataset preparation. Use a tool such as LabelImg or Labelme for this purpose. Mark each object in your images by drawing a bounding box around it and assigning the correct class label. This produces the annotation files that YOLOv10 uses for training.

Converting to YOLO Format

YOLOv10 expects annotations in the YOLO text format. Each image needs a text file with the same base name, containing one line per object: the class index followed by the box center x, center y, width, and height, all normalized to the range 0 to 1 by the image dimensions.
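The conversion itself is simple arithmetic. The sketch below turns one pixel-coordinate box into a YOLO label line; the function name `to_yolo_line` and the corner-based input format are assumptions about what your annotation tool exports.

```python
def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / img_w  # normalized box center, x
    y_center = (y_min + y_max) / 2 / img_h  # normalized box center, y
    width = (x_max - x_min) / img_w         # normalized box width
    height = (y_max - y_min) / img_h        # normalized box height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


print(to_yolo_line(0, (100, 200, 300, 400), img_w=640, img_h=480))
# prints "0 0.312500 0.625000 0.312500 0.416667"
```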

To further enhance your dataset, consider applying augmentation techniques. YOLOv10 training benefits significantly from various augmentations, including flipping, scaling, and rotation. These methods enhance model generalization and performance.

| Augmentation Technique | Probability/Range |
|---|---|
| Left-Right Flip | 50% |
| Up-Down Flip | 20% |
| Scaling | ±10% |
| Translation | ±10% of dimensions |
| Rotation | ±5 degrees |
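The table's settings could be expressed as training keyword arguments, as in the sketch below. The key names (`fliplr`, `flipud`, `scale`, `translate`, `degrees`) are the augmentation hyperparameters Ultralytics exposes for training; the validation helper `check_augmentation` is hypothetical and only guards against obviously invalid values.

```python
# Augmentation settings from the table, expressed as keyword arguments.
AUGMENTATION = {
    "fliplr": 0.5,     # probability of a left-right flip
    "flipud": 0.2,     # probability of an up-down flip
    "scale": 0.1,      # scale gain: +/-10%
    "translate": 0.1,  # translation: +/-10% of image dimensions
    "degrees": 5.0,    # rotation range: +/-5 degrees
}


def check_augmentation(cfg: dict) -> None:
    """Reject values outside the ranges these settings are meant to take."""
    for key in ("fliplr", "flipud"):
        if not 0.0 <= cfg[key] <= 1.0:
            raise ValueError(f"{key} must be a probability in [0, 1]")
    for key in ("scale", "translate", "degrees"):
        if cfg[key] < 0:
            raise ValueError(f"{key} must be non-negative")


check_augmentation(AUGMENTATION)
```

With a loaded model, these could then be passed along as `model.train(data="data.yaml", **AUGMENTATION)`.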

With your dataset prepared and annotations converted, you're now set to proceed with training your YOLOv10 model for sophisticated object detection tasks.

Installing YOLOv10 and Required Dependencies

Setting up YOLOv10 for your computer vision projects is a straightforward process. It begins with creating a virtual environment to manage Python dependencies efficiently. This ensures a clean and isolated workspace for your object detection tasks.

To start the YOLOv10 installation, you'll need to install the Ultralytics package. The recommended method is using pip, the Python package installer. Run this command in your terminal:

    pip install ultralytics

For those who prefer the latest developments, cloning the Ultralytics GitHub repository is an option. This gives you access to the most recent YOLOv10 features and improvements.

After installing the main package, you'll need to set up additional Python dependencies for your computer vision setup. These include torch, torchvision, and onnx. YOLOv10's advancements in object detection require specific versions of these libraries to function optimally.

For CUDA-enabled systems, it's crucial to ensure compatibility between your CUDA version and the installed PyTorch version. This alignment is key for leveraging GPU acceleration in YOLOv10, significantly boosting performance in real-time object detection tasks.
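A quick way to confirm that PyTorch can actually see the GPU is sketched below. It falls back to the CPU when PyTorch is missing or no CUDA device is visible; the function name `pick_device` is a placeholder.

```python
def pick_device() -> str:
    """Return "cuda" when PyTorch can see a GPU, otherwise "cpu"."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch is not installed yet
    return "cuda" if torch.cuda.is_available() else "cpu"


print(pick_device())
```

If this prints "cpu" on a machine with a GPU, comparing `torch.version.cuda` with the CUDA version your driver supports is a sensible next step.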

| YOLOv10 Version | Latency Reduction | Parameter Reduction |
|---|---|---|
| YOLOv10-B | 46% vs YOLOv9-C | 25% vs YOLOv9-C |
| YOLOv10-S | 1.8x faster than RT-DETR-R18 | Fewer parameters and FLOPs |

With these steps completed, your environment is ready for advanced object detection tasks using YOLOv10. The setup process ensures you have all necessary components for a robust computer vision pipeline. This sets the stage for efficient model training and deployment.

YOLOv10 Guide: Configuration and Hyperparameter Tuning

YOLOv10 introduces significant advancements in object detection, offering enhanced efficiency and accuracy. This section covers the essential aspects of configuring and tuning the model for your custom object detection tasks.

Understanding YOLOv10 Architecture

YOLOv10 employs a novel NMS-free training method with dual label assignments. It incorporates lightweight classification heads and spatial-channel decoupled downsampling. The model leverages large-kernel depthwise convolutions and partial self-attention to broaden the receptive field and improve global context understanding.

Optimizing Model Parameters

To achieve peak performance, focus on hyperparameter optimization. Key areas include learning rate, batch size, and epoch count. YOLOv10 models, ranging from N to X, allow you to balance speed and accuracy according to your requirements.

| Model | Parameters | FLOPs | AP (%) | Latency (ms) |
|---|---|---|---|---|
| YOLOv6-3.0-N | 3.21M | 11.10G | 37.5 | 1.21 |
| YOLOv10-N | 2.64M | 7.01G | 38.0 | 0.86 |
| YOLOv8-S | 11.17M | 28.60G | 44.9 | 1.47 |
| YOLOv10-S | 8.73M | 24.24G | 45.1 | 1.26 |
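For the hyperparameters named above (learning rate, batch size, and epoch count), a small grid search is one simple way to organize experiments. The sketch below only enumerates the combinations; the values are illustrative rather than recommended, and the key names `lr0`, `batch`, and `epochs` assume the Ultralytics training arguments.

```python
from itertools import product

# Hypothetical search space; the values are illustrative, not recommendations.
SEARCH_SPACE = {
    "lr0": [0.001, 0.01],  # initial learning rate
    "batch": [8, 16],      # batch size
    "epochs": [100, 200],  # number of epochs
}


def grid(space: dict) -> list[dict]:
    """Expand a search space into one settings dict per combination."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]


candidates = grid(SEARCH_SPACE)
print(len(candidates))  # prints 8
```

Each dict in `candidates` can be unpacked into a training call, and the runs compared on validation mAP.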

Customizing for Specific Detection Tasks

For a specific detection task, tailor the model configuration to your dataset. The Kidney Stone Detection Dataset, with 1300 images, is one example. During training, monitor the mean Average Precision (mAP) at an IoU threshold of 0.50 (mAP50). In that example, the model reached its best performance around epoch 176, with mAP50 peaking at about 0.72.
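Finding the best epoch can be automated by scanning the per-epoch training log. The sketch below assumes a CSV log with an `epoch` column and a `metrics/mAP50(B)` column, which is how Ultralytics typically names it in results.csv; treat the column name as an assumption and adjust it to your log. The sample data is synthetic.

```python
import csv
import io


def best_epoch(csv_text: str, column: str = "metrics/mAP50(B)"):
    """Return (epoch, value) for the row with the highest value in `column`."""
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    # Strip padding, since some log versions pad headers and cells with spaces.
    rows = [{k.strip(): v.strip() for k, v in row.items()} for row in reader]
    best = max(rows, key=lambda r: float(r[column]))
    return int(float(best["epoch"])), float(best[column])


# Synthetic log for illustration only; real logs have many more columns.
sample = "epoch,metrics/mAP50(B)\n1,0.41\n2,0.68\n3,0.72\n4,0.70\n"
print(best_epoch(sample))  # prints (3, 0.72)
```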

Effective model configuration and hyperparameter tuning are vital for leveraging YOLOv10's full potential in your object detection applications.

Training Process: From Start to Finish

You can start YOLOv10 training from the command line interface or through the Ultralytics Python API. Either way, you need to specify three things: the data configuration file, the model configuration, and the initial weights.

Throughout training, monitor loss values and validation metrics closely, since they show whether the model is still improving or has started to overfit. About 100 epochs is a reasonable starting point, but let the validation curves decide: in the kidney stone example above, mAP50 did not peak until around epoch 176.
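Through the Python API, a training run might be started as in the sketch below. It assumes the `ultralytics` package is installed and can load `yolov10n.pt`; the wrapper name `train_yolov10` and its default values are placeholders you should adapt to your dataset and hardware.

```python
def train_yolov10(data_yaml: str = "data.yaml", weights: str = "yolov10n.pt",
                  epochs: int = 100, batch: int = 16, imgsz: int = 640):
    """Fine-tune pretrained weights on a custom dataset and return the results."""
    # Imported lazily so this file still loads before ultralytics is installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=data_yaml, epochs=epochs, batch=batch,
                       imgsz=imgsz, plots=True)  # plots=True saves training curves
```

A smaller `batch` is the usual first adjustment when the GPU runs out of memory.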

The YOLOv10 model significantly enhances performance. It outpaces its predecessors and rivals in efficiency. For example, YOLOv10-S is 1.8 times faster than RT-DETR-R18 with comparable accuracy. YOLOv10-B, on the other hand, reduces latency by 46% and parameters by 25% compared to YOLOv9-C.

| Comparison | AP Improvement | Parameter Reduction | Latency Decrease |
|---|---|---|---|
| YOLOv10 (overall) | 1.2% - 1.4% | 28% - 57% | 37% - 70% |
| YOLOv10-L vs Gold-YOLO-L | 1.4% | 68% | 32% |
| YOLOv10-S vs RT-DETR-R18 | Comparable | Fewer | 1.8x faster |

These advancements in model optimization make YOLOv10 a top choice for real-time applications in various sectors. This includes autonomous vehicles, surveillance, and robotics. As you navigate the deep learning process, remember these improvements to fully exploit YOLOv10's capabilities.

Evaluating Your Trained YOLOv10 Model

After training your YOLOv10 model, it's essential to evaluate its performance. This evaluation is crucial for understanding how well your object detection system functions in real-world settings.

Interpreting Performance Metrics

Performance metrics are vital for assessing your model's effectiveness. The mean Average Precision (mAP) is a key metric used in object detection. It evaluates how accurately your model identifies objects across various classes.

| Model | mAP | Inference Time |
|---|---|---|
| YOLOv10-X | 55.2% | 12.3ms |
| YOLOv8-X | 54.7% | 15.9ms |
| RT-DETR-R101 | 54.8% | 16.0ms |
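To make the mAP metric concrete, the sketch below computes intersection over union (IoU) for two boxes and a simplified, non-interpolated average precision per class, then averages across classes. Benchmark tools such as the COCO evaluator use interpolated precision over several IoU thresholds, so treat this as an illustration of the idea rather than a replacement for them. The detection lists are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(hits, num_ground_truth):
    """Non-interpolated AP; `hits` is sorted by descending confidence."""
    true_positives, total = 0, 0.0
    for rank, hit in enumerate(hits, start=1):
        if hit:
            true_positives += 1
            total += true_positives / rank  # precision at this recall step
    return total / num_ground_truth if num_ground_truth else 0.0


# Hypothetical ranked detections: True marks a detection that matches a
# not-yet-matched ground-truth box with IoU of at least 0.5.
per_class = {
    "car": average_precision([True, False, True], num_ground_truth=2),
    "truck": average_precision([True, True], num_ground_truth=2),
}
mean_ap = sum(per_class.values()) / len(per_class)
print(round(mean_ap, 3))
```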

Analyzing Confusion Matrices

A confusion matrix provides a visual representation of your model's performance across different object classes. It highlights where your model performs well and where it needs improvement. This insight is crucial for identifying areas to focus on.
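The sketch below builds a small confusion matrix from matched (true, predicted) label pairs and derives per-class precision from it. The labels and pairs are hypothetical, and "background" stands for an object the model missed.

```python
from collections import Counter


def confusion_matrix(pairs, classes):
    """Count (true, predicted) label pairs into a nested dict matrix[true][pred]."""
    counts = Counter(pairs)
    return {t: {p: counts[(t, p)] for p in classes} for t in classes}


def precision_per_class(matrix):
    """Precision = correct predictions of a class / all predictions of that class."""
    out = {}
    for cls in matrix:
        predicted = sum(matrix[t][cls] for t in matrix)  # column total
        out[cls] = matrix[cls][cls] / predicted if predicted else 0.0
    return out


# Hypothetical matched labels; "background" marks a missed object.
pairs = [("car", "car"), ("car", "car"), ("car", "truck"),
         ("truck", "truck"), ("truck", "car"), ("truck", "background")]
cm = confusion_matrix(pairs, ["car", "truck", "background"])
print(precision_per_class(cm))
```

Here the truck class has the lower precision, which would make it the first candidate for more training data.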

Fine-tuning Based on Evaluation Results

Use the insights from your evaluation to refine your model. If specific classes show low precision, consider expanding your training data for those categories. Adjusting hyperparameters or data augmentation techniques can also enhance performance.

Model evaluation is an ongoing process. Continually assess and refine your YOLOv10 model to achieve the best results for your object detection tasks.

Deploying Your YOLOv10 Model for Real-World Applications

Deploying your YOLOv10 model for real-world applications is a pivotal step. This tool excels in real-time object detection, offering various models to fit different needs and resources.

When selecting a model for deployment, consider your specific requirements:

- YOLOv10-N (Nano): Ideal for edge devices with limited resources

- YOLOv10-S (Small): Balances speed and accuracy for general applications

- YOLOv10-M (Medium): Suitable for more demanding tasks with moderate resources

- YOLOv10-B (Balanced): A wider version of the medium model for higher accuracy

- YOLOv10-L (Large): Ideal for high-accuracy needs with ample computing power

- YOLOv10-X (Extra-large): Best for tasks requiring maximum precision

Each variant provides distinct performance, allowing tailored optimization for your computer vision applications.

| Model | AP val (%) | Latency (ms) | GFLOPs |
|---|---|---|---|
| YOLOv10-S | 46.3 | 2.49 | 21.6 |
| YOLOv10-M | 51.1 | 4.74 | 59.1 |
| YOLOv10-L | 53.2 | 7.28 | 120.3 |
| YOLOv10-X | 54.4 | 10.70 | 160.4 |
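The table can drive a simple selection rule: take the most accurate variant that fits your latency budget. The sketch below copies the AP and latency figures from the table; the function name `pick_variant` is hypothetical, and the latencies will differ on your hardware, so benchmark before committing.

```python
# (name, AP on the validation set, latency in ms) copied from the table above.
VARIANTS = [
    ("YOLOv10-S", 46.3, 2.49),
    ("YOLOv10-M", 51.1, 4.74),
    ("YOLOv10-L", 53.2, 7.28),
    ("YOLOv10-X", 54.4, 10.70),
]


def pick_variant(max_latency_ms: float):
    """Return the most accurate variant within the latency budget, or None."""
    eligible = [v for v in VARIANTS if v[2] <= max_latency_ms]
    return max(eligible, key=lambda v: v[1])[0] if eligible else None


print(pick_variant(5.0))  # prints "YOLOv10-M"
```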

Consider inference speed and accuracy based on your application's needs when deploying. YOLOv10's elimination of non-maximum suppression reduces latency, enhancing real-time performance in object detection tasks.
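To see what is being removed, the sketch below shows classic greedy non-maximum suppression, the post-processing step that earlier detectors run after inference. It is a generic textbook version, not code from any YOLO release; the threshold and the sample boxes are illustrative.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(detections, iou_threshold=0.5):
    """Keep the highest-scoring boxes and drop any box that overlaps a kept one."""
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) <= iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Two near-duplicate boxes on one object, plus one separate object.
dets = [((0, 0, 10, 10), 0.9), ((1, 1, 10, 10), 0.8), ((20, 20, 30, 30), 0.7)]
print(len(greedy_nms(dets)))  # prints 2
```

Because every candidate is compared against the boxes already kept, the cost grows with the number of detections, which is the latency that an NMS-free design avoids.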

Optimize your model for edge devices or cloud platforms based on your use case. Implement post-processing techniques to refine detection results. Integrate the model into larger systems for practical applications in autonomous driving, surveillance, or industrial automation.
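Two of these steps can be sketched briefly. `filter_detections` is a hypothetical post-processing helper that keeps confident detections from classes you care about, working on plain dicts rather than any library's result type. `export_onnx` assumes the `ultralytics` package and a trained weight file, here given the placeholder name `best.pt`.

```python
def filter_detections(detections, min_conf=0.5, allowed=None):
    """Keep detections above a confidence floor and, optionally, in allowed classes."""
    return [
        d for d in detections
        if d["conf"] >= min_conf and (allowed is None or d["label"] in allowed)
    ]


def export_onnx(weights: str = "best.pt"):
    """Export trained weights to ONNX for deployment (needs ultralytics installed)."""
    from ultralytics import YOLO  # lazy import

    return YOLO(weights).export(format="onnx")


sample = [
    {"label": "car", "conf": 0.91},
    {"label": "person", "conf": 0.42},
    {"label": "truck", "conf": 0.77},
]
print(filter_detections(sample, min_conf=0.5, allowed={"car", "person"}))
# prints [{'label': 'car', 'conf': 0.91}]
```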

YOLOv10 sets a new standard in real-time object detection, offering superior accuracy-latency trade-offs compared to previous models.

By selecting and deploying the right YOLOv10 model, you can significantly enhance your computer vision applications. This leverages state-of-the-art object detection capabilities in real-world scenarios.

Mastering YOLOv10 for Advanced Object Detection

YOLOv10 marks a major advancement in the field of advanced object detection. It leverages the strengths of its predecessors, providing faster and more accurate results essential for real-time use. Through this guide, you've learned how to train and deploy YOLOv10 models for various computer vision tasks.

The path through object detection algorithms has been extraordinary. We've seen progress from HOG and DPM to YOLO-NAS. Each step has expanded the capabilities of computer vision. YOLOv10 excels by blending the speed of single-shot detectors with the accuracy of two-stage methods. This makes it a versatile tool for a wide range of applications.

Looking ahead, the potential of YOLOv10 in addressing small object detection and occlusion is thrilling. Your expertise in this powerful tool places you at the leading edge of advanced object detection. Continue to explore, adapt, and push the boundaries of what YOLOv10 can accomplish in your endeavors.

FAQ

What is YOLOv10?

YOLOv10, unveiled on May 23, 2024, is a cutting-edge real-time object detection model. It was developed by researchers at Tsinghua University. This model marks the latest advancement in the YOLO (You Only Look Once) series. It boasts enhanced speed and accuracy over its predecessors.

How does YOLOv10 compare to previous YOLO models?

YOLOv10 outperforms earlier YOLO models in terms of speed and accuracy, despite having fewer parameters. For instance, YOLOv10-S is significantly faster than RT-DETR-R18, achieving similar Average Precision (AP) on the COCO dataset. It also has fewer parameters and FLOPs than its predecessors. Compared to YOLOv9-C, YOLOv10-B offers 46% less latency and 25% fewer parameters for equivalent performance.

What are the applications of YOLOv10?

YOLOv10 excels in real-time object detection across various fields. Its applications include autonomous vehicles, surveillance systems, robotics, and other computer vision tasks. These tasks require fast and precise object detection.

How do I set up the environment for YOLOv10?

Setting up for YOLOv10 involves installing the model from source using pip. You also need to download model weights for different versions (n, s, m, b, x, l) trained on the Microsoft COCO dataset. Finally, test the model installation with a sample image to ensure functionality.

How do I prepare my dataset for YOLOv10 training?

Preparing your dataset for YOLOv10 training requires collecting and organizing image data. Use annotation tools like LabelImg or Labelme to create annotations. Then, convert these annotations to the YOLOv8 PyTorch TXT format, which YOLOv10 uses. Alternatively, use existing datasets from sources like Roboflow Universe, Kaggle, COCO, or Pascal VOC.

How do I install YOLOv10 and its dependencies?

Installing YOLOv10 involves using pip or cloning the repository. Create a virtual environment to manage dependencies. Install required packages, including torch, torchvision, onnx, pycocotools, and others listed in the requirements.txt file. Ensure your system's CUDA version is compatible for GPU acceleration.

How do I configure and tune YOLOv10 for optimal performance?

To optimize YOLOv10, understand its architecture and configure it using a YAML file that specifies dataset paths and classes. Adjust hyperparameters like learning rate, batch size, and number of epochs. Customize the model for specific detection tasks by modifying the number of classes and adjusting the network architecture if necessary.

How do I train YOLOv10 on my dataset?

Training YOLOv10 involves starting with the command line interface or the Ultralytics API. Specify the data configuration file, model configuration, weights, and output name. Monitor the training process, including loss values and performance metrics. Adjust training parameters as needed based on the model's progress.

How do I evaluate the trained YOLOv10 model?

Evaluating the trained YOLOv10 model requires analyzing performance metrics like mean Average Precision (mAP). Examine the confusion matrix to understand the model's strengths and weaknesses in detecting different classes. Analyze training graphs to assess the model's learning progress. Use these insights to fine-tune the model, adjusting hyperparameters or augmenting the dataset if necessary to improve performance.

How do I deploy the trained YOLOv10 model in real-world applications?

Deploying the trained YOLOv10 model requires optimizing it for inference speed and accuracy based on your application's needs. Consider deploying on edge devices or cloud platforms depending on your use case. Implement post-processing techniques to refine detection results. Integrate the model into larger systems or applications for practical use in fields such as autonomous driving, surveillance, or industrial automation.
