Synthetic data can enhance machine learning.

Teaching a machine to recognize human actions has many potential applications, such as automatically detecting workers who fall on a construction site or enabling a smart home robot to interpret a user's gestures.

To do this, researchers train machine-learning models on vast datasets of video clips that show humans performing actions. However, gathering and labeling millions or billions of videos is costly and labor intensive, and the clips often contain sensitive information, such as people's faces or license plate numbers. Using these videos may also violate copyright or data protection laws. And all of this assumes that the video data are publicly available in the first place: many datasets are owned by companies and are not free to use.

Therefore, researchers are turning to synthetic datasets. Rather than being recorded in the real world, these clips are generated by a computer using 3D models of scenes, objects, and humans, which avoids the potential copyright issues and ethical concerns associated with real data.

However, are synthetic data as good as real data? How well does a model trained with these data perform when it is asked to classify real human actions? Researchers at MIT, the MIT-IBM Watson AI Lab, and Boston University set out to answer this question. They trained machine-learning models on a synthetic dataset of 150,000 video clips that captured a wide range of human actions. They then showed the models six datasets of real-world videos to test how well they could recognize actions in those clips.

In videos with fewer background objects, synthetically trained models performed better than models trained on real data.

This work could help researchers use synthetic datasets in a way that improves models' accuracy on real-world tasks. It could also help scientists identify which machine-learning applications are best suited to training with synthetic data, thereby mitigating some of the ethical, privacy, and copyright concerns associated with real data.

"Our research seeks to replace real data pretraining with synthetic data pretraining as the ultimate objective. Once you have created a synthetic action, you can generate unlimited images or videos by altering the pose, the lighting, and so on. That is the beauty of synthetic data," says Rogerio Feris, principal scientist and manager at the MIT-IBM Watson AI Lab and co-author of a paper detailing this research.

The paper's lead author is Yo-whan Kim '22, a member of the MIT Schwarzman College of Computing. Co-authors include Aude Oliva, director of strategic industry engagement at MIT and a senior researcher in the Computer Science and Artificial Intelligence Laboratory (CSAIL), and seven others. The research will be presented at the Conference on Neural Information Processing Systems.

Creating a synthetic dataset

To begin, the researchers built a new dataset from three publicly available datasets of synthetic video clips that captured human actions. The new dataset, called Synthetic Action Pre-Training and Transfer (SynAPT), contains 150 action categories with 1,000 video clips per category.

They selected as many action categories as possible, limited by the availability of clips that contained clean video data.

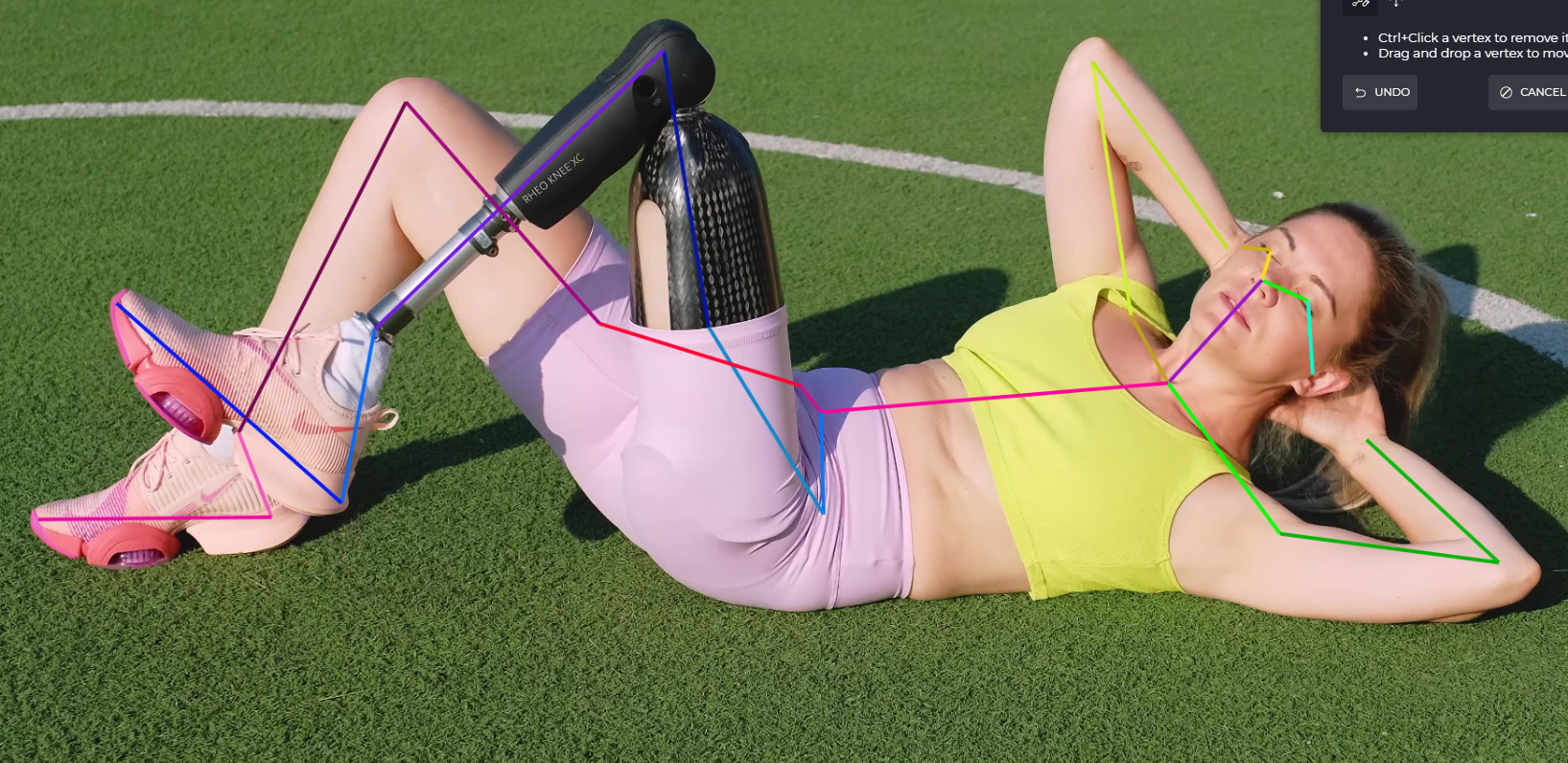

Once the dataset was prepared, the researchers used it to pretrain three machine-learning models to recognize the actions. Pretraining involves training a model on one task to give it a head start when learning other tasks. The pretrained model can reuse the parameters it has already learned to help it learn a new task with a new dataset faster and more effectively. The technique is inspired by the way people learn: we reuse old knowledge when we learn something new.
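The pretrain-then-transfer idea can be illustrated with a minimal sketch. It is not the researchers' method: the tiny logistic model, the hand-made `synthetic_data` and `real_data` points, and all function names are hypothetical stand-ins chosen only to show how parameters learned on one dataset become the starting point for another.

```python
import math

def predict(weights, features):
    """Probability of the positive class under a logistic model."""
    z = weights[0] + sum(w * x for w, x in zip(weights[1:], features))
    return 1.0 / (1.0 + math.exp(-z))

def mean_loss(weights, data):
    """Average cross-entropy loss over (features, label) pairs."""
    total = 0.0
    for features, label in data:
        p = predict(weights, features)
        total -= label * math.log(p) + (1 - label) * math.log(1.0 - p)
    return total / len(data)

def train(initial_weights, data, steps, lr=0.5):
    """Full-batch gradient descent; returns new weights, input is not mutated."""
    weights = list(initial_weights)
    for _ in range(steps):
        grad = [0.0] * len(weights)
        for features, label in data:
            error = predict(weights, features) - label
            grad[0] += error  # bias term
            for i, x in enumerate(features):
                grad[i + 1] += error * x
        weights = [w - lr * g / len(data) for w, g in zip(weights, grad)]
    return weights

# Hypothetical toy data: a larger "synthetic" set and a tiny "real" set
# that share the same underlying pattern (assumption for illustration).
synthetic_data = [
    ((0.9, 0.8), 1), ((0.7, 0.2), 1), ((0.1, 0.6), 1),
    ((-0.8, -0.5), 0), ((-0.3, -0.9), 0), ((-0.6, -0.1), 0),
]
real_data = [((0.5, 0.4), 1), ((-0.4, -0.6), 0)]

scratch = [0.0, 0.0, 0.0]

# Pretrain on the synthetic set, then fine-tune briefly on the real set,
# starting from the pretrained parameters instead of from zeros.
pretrained = train(scratch, synthetic_data, steps=200)
finetuned = train(pretrained, real_data, steps=5)

# Baseline: the same short training budget on real data, but from scratch.
from_scratch = train(scratch, real_data, steps=5)

print("fine-tuned loss on real data:  ", mean_loss(finetuned, real_data))
print("from-scratch loss on real data:", mean_loss(from_scratch, real_data))
```

On this toy data, the fine-tuned model should end with a lower loss on the "real" samples than the model trained from scratch for the same five steps, because it starts from parameters that already encode a related pattern. Real video models are far larger, but the reuse of learned parameters follows the same principle.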

The researchers tested the pretrained models on six datasets of real video clips, each capturing classes of actions that differed from those in the training data.

On four of the six datasets, the synthetically trained models outperformed models trained with real video clips. Accuracy was highest on datasets whose video clips have low scene-object bias.

Low scene-object bias means that the model cannot recognize an action by looking at the background or other objects in the scene; it must focus on the action itself. For instance, if the model is asked to classify diving poses in video clips of people diving into a swimming pool, it cannot identify a pose by looking at the water or the tiles on the wall. It must focus on the person's motion and position to classify the action.

"The temporal dynamics of the actions are more important in videos with low scene-object bias, and synthetic data appear to capture that well," Feris says.

According to Kim, "A high scene-object bias may actually act as an obstacle since the model could misclassify an action based on an object rather than the actual action itself."

Optimizing performance

According to co-author Rameswar Panda, a research staff member at the MIT-IBM Watson AI Lab, future research will include more action classes as well as additional synthetic video platforms, creating a catalog of models that have been pretrained using synthetic data.

"We are trying to develop models that perform as well as or even better than previous models in the literature, but without the biases and security concerns associated with them," he says.

The researchers also want to combine their work with research that seeks to generate more accurate and realistic synthetic videos, which could boost the models' performance, says SouYoung Jin, a co-author and CSAIL postdoc. She is also interested in exploring how models might learn differently when they are trained with synthetic data.

"Synthetic datasets are used to prevent privacy issues and contextual or social bias, but what does the model actually learn? Does it provide unbiased results?" she asks.

Having demonstrated this potential use for synthetic videos, the researchers hope that others will build upon their work.

"The cost of obtaining well-annotated synthetic data is lower than that of annotated videos. However, we currently do not have a dataset with the scale to compete with the largest annotated video datasets. It is our hope that we will motivate efforts in this direction by discussing the various costs and concerns associated with real videos and demonstrating the effectiveness of synthetic data," adds co-author Samarth Mishra, a graduate student at Boston University.

Src: MIT Computer Science & Artificial Intelligence Lab
