Taking on new learning challenges

Various connected devices, such as internet-of-things (IoT) devices and sensors in automobiles, rely on microcontrollers, miniature computers that can execute simple commands. Microcontrollers with limited memory and no operating system, however, make it difficult to train artificial intelligence models on edge devices that are independent of central computing resources due to their limited memory and lack of operating system.

Training a machine-learning model on an intelligent edge device allows it to make better predictions due to its ability to adapt to new data. A smart keyboard, for instance, could learn continuously from the user's writing by training a model. Due to a large amount of memory required for the training process, it is typically done in a data center using powerful computers. In this case, user data must be sent to a central server, which is more costly and raises privacy concerns.

To overcome this issue, researchers at MIT and the MIT-IBM Watson AI Lab created a novel approach that permits on-device training with less than a quarter megabyte of RAM. Most microcontrollers' memory capacity (1,024 kilobytes) is 256 kilobytes. Other training systems designed for linked devices can use more than 500 megabytes of memory, significantly improving over the 256 kilobytes available on most microcontrollers.

By developing intelligent algorithms and frameworks, the researchers reduced the computation required to train a model, making it faster and more memory efficient. Their technique can train a machine-learning model on a microcontroller in minutes.

Additionally, this technique preserves privacy by maintaining data on the device, which is particularly useful in medical applications where sensitive data is involved. In addition, this technique may also facilitate the customization of a model according to the user's needs. In addition, the framework maintains or increases the model's accuracy in comparison to alternative training methodologies.

The research paves the path for IoT devices to not only execute inference but also continually update AI models to newly gathered data, opening the way for on-device learning that lasts a lifetime.Using low-power edge devices makes deep learning more accessible and possible.

Lightweights

A neural network is one of the most common types of machine-learning models. They are loosely modeled after the human brain and contain layers of interconnected neurons, or nodes, that process data to accomplish a task, such as recognizing people in photographs. For the model to learn the task, it must first be trained, showing millions of examples. By learning, the model increases or decreases the strength of the connections between neurons, which are referred to as weights.

Each round of learning can result in hundreds of updates to the model, which is why the intermediate activations must be stored during each iteration. A neural network's middle layer is responsible for activation. To train a model, memory is required since there may be millions of weights and activations.

The training process was made more efficient and memory-efficient by utilizing two algorithms. First, a sparse update uses an algorithm that determines which weights are most important to update at every training session. When the accuracy dips below a set threshold, the algorithm stops freezing the weights one at a time. It is unnecessary to store the activations associated with the frozen weights since the remaining weights are updated.

The entire model must be updated since there are many activations. Therefore, people tend to upgrade only the last layer, which reduces the accuracy. For this method, those important weights are selectively updated to ensure accuracy.

The second solution involves simplifying the weights, typically 32 bits, and quantifying them. A quantization process reduces memory requirements for training and inference by rounding the weights to eight bits. Applying a model to a dataset is necessary to generate a prediction. Using quantization-aware scaling (QAS), the weight-to-gradient ratio is adjusted to avoid any loss of accuracy caused by quantized training.

The researchers developed a tiny training engine system to run these algorithmic innovations on a simple microcontroller without an operating system. With the help of this system, the training process is reorganized so that more work is completed before the model is deployed on the edge device during the compilation process.

An effective speedup

Using their optimization, a machine-learning model can be trained on a microcontroller with only 157 kilobytes of memory, while other techniques aimed at lightweight training require between 300 and 600 megabytes.

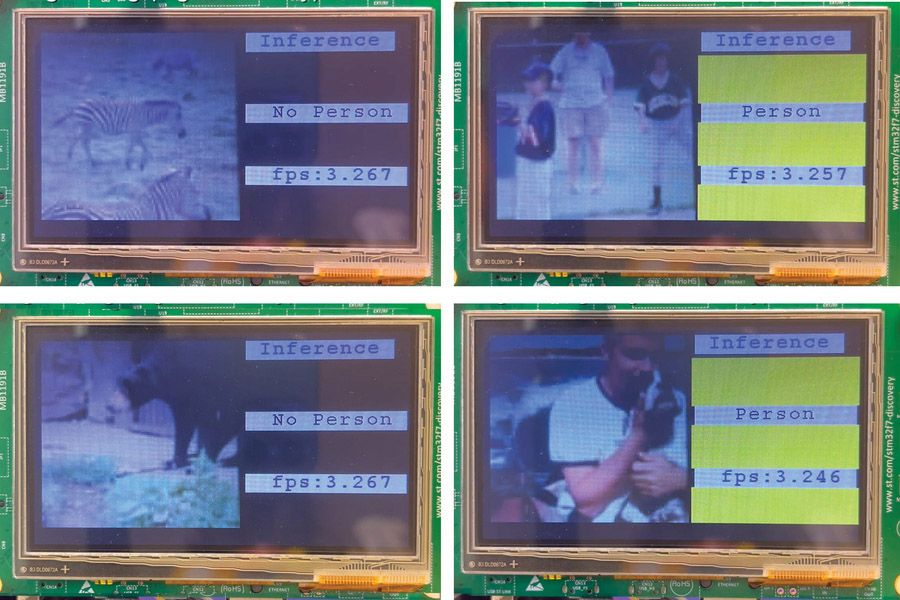

The researchers tested the framework by training a computer vision model to detect people in images. It completed the task successfully after only 10 minutes of training. Compared with other methods, their method could train a model more than 20 times faster.

The researchers intend to apply these techniques to language models and different types of data, including time series data, now that they have demonstrated the effectiveness of these techniques for computer vision models. However, they would like to use what they have learned to reduce the size of larger models without sacrificing accuracy, which would contribute to reducing the carbon footprint associated with training large-scale machine-learning models.

An open challenge is adapting and training AI models for devices, particularly embedded controllers. This research from MIT has successfully demonstrated the capabilities and opened up new possibilities for privacy-preserving device personalization in real-time. This publication presents innovations with a broader application and stimulates new research in systems-algorithm co-design.

Source: MIT News

Comments ()