Understanding The Importance Of Data Annotation

Data annotation is a crucial step in machine learning and artificial intelligence (AI) development. It involves labeling and categorizing data sets to train algorithms to recognize patterns and make predictions. However, the process of data annotation can be tedious and time-consuming, and errors can lead to inaccurate results. That's why it's essential to have an efficient and accurate data annotation process.

In this article, we will explore three ways to increase efficiency in data annotation. We will discuss the importance of quality control measures, the role of human annotation in the process, and the benefits of utilizing AI and machine learning. We will also provide tips on how to streamline the annotation workflow and train and empower annotation teams for success.

By implementing these strategies, you can improve the accuracy and speed of your data annotation process, leading to more reliable results and better AI and machine learning models. Let's dive in and redefine the data annotation process for increased efficiency.

Data annotation is a crucial step in machine learning (ML) projects, as it involves labeling data with specific characteristics that allow algorithms to process and recognize patterns. Poor data quality can significantly impact the accuracy and effectiveness of ML models, which is why data annotation plays a vital role in improving data quality for successful project outcomes.

One effective way to improve data quality through annotation is by ensuring clear communication with annotators regarding the desired characteristics for labeling. By providing training and detailed guidelines, annotators can better understand what criteria to consider when classifying data and ensure accurate labeling. Additionally, breaking down the annotation task into smaller stages with time for reflection and correction allows for errors to be identified and corrected early on in the process.

Another way to increase efficiency in data annotation is by developing simplified frameworks specifically designed for optimizing results. These frameworks provide more enhanced automation capabilities that can help accelerate the overall process while still maintaining label accuracy.

Finally, optimizing efficiency within certain domains requires domain-specific expertise from experienced professionals who are knowledgeable about specific industry requirements. This approach helps tailor efficient solutions specifically designed for unique requirements within different industrial sectors.

In conclusion, high-quality annotated datasets play a critical role in ensuring effective machine learning model development. Implementing effective communication strategies between annotators and project leaders while utilizing optimized frameworks tailored to unique industries ensures an efficient yet accurate data annotation process that alters every aspect of ML projects' lifecycle positively.

Common Challenges In The Data Annotation Process

Data annotation is an essential step in many machine learning projects, but it can be a complex and time-consuming process for small teams. One of the biggest challenges is the cost of data annotation, which can quickly add up if you have a large dataset. To increase efficiency and reduce costs, consider using semi-supervised or active learning methods to minimize the amount of fully annotated data required.

Speed is another important factor in data annotation. The faster you can label your data, the quicker you can start training and testing your models. However, speed should not come at the expense of quality. It's crucial to ensure that labels are accurate and precise to avoid bias or errors in your models.

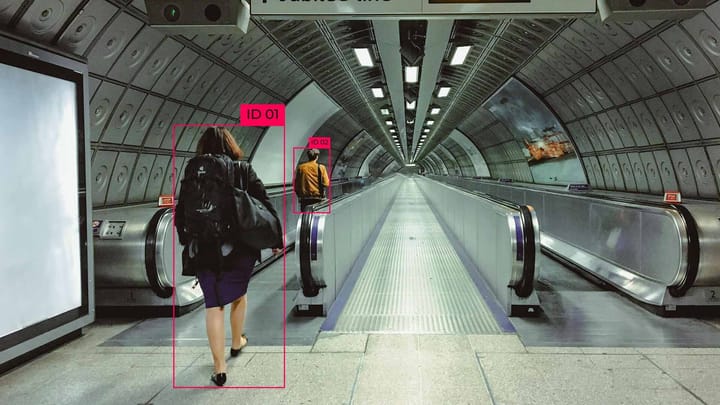

One common challenge when dealing with video data is annotating multiple objects within one frame accurately. This task requires skilled annotators who may require additional training or compensation compared to other types of annotations. A possible solution here could be using video object-tracking algorithms that simplify this task for human annotators.

In conclusion, while data labeling may take up a significant part of your project's time, it's essential for ensuring high-quality results from machine learning models. By leveraging efficient tools like active learning and tracking algorithms, small teams facing budget constraints can optimize their resources without compromising on accuracy and precision while labeling datasets. Regular review cycles with trained individuals are necessary to maintain label consistency over time by detecting patterns when debugging errors found during the annotation process—thereby redefining standardized annotation processes overall for Machine Learning success stories being told every day across every industry with ease—with third-party support services available online specializing in providing effective result-driven solutions guaranteed!

Utilizing AI And Machine Learning For Data Annotation

One of the biggest challenges facing deep learning projects is low accuracy in data annotation. Despite its importance, data annotation can be extremely time-consuming and prone to human error. However, recent advancements in AI and machine learning are revolutionizing the data annotation process and increasing efficiency. Here are three ways AI and machine learning can improve data annotation:

1) Automating Annotation with Machine Learning: With machine learning models, it's possible to automate the process of labeling large datasets without human intervention. This significantly reduces the time required for labeling while also improving accuracy.

2) Using AI-Assisted Annotation: Instead of fully relying on automation, an AI-assisted approach combines manual work with artificial intelligence. In this system, humans teach a model how to label data correctly via examples or scenarios provided by that algorithm.

3) Video Annotation for Object Recognition: Video has become a significant component of modern computing, which makes video annotation a priority in training computer vision models accurately. Supervised image alignment tools can help automatically annotate your videos by using specialized algorithms that target specific objects or features.

Through these applications, we could see advanced methods used to define what information is needed most from an image or object during training processes; greatly assisting developers as they configure programming elements inside deep neural networks/models/scripts associated with detecting patterns & classifying images based upon those very patterns - automatically determining what marks positive or negative labels would be assigned within inputs it receives next time around when fed into such software systems!

Implementing Quality Control Measures For Accurate Annotation

To ensure accurate data annotation, it is important to implement quality control measures. These measures help to identify and correct errors at each stage of the data annotation process, ensuring high-quality results.

One way to implement quality control measures is through inter-annotator agreement (IAA). This involves comparing the annotations of two annotators to measure the level of agreement and identify discrepancies. By doing this, you can identify areas where annotators may require additional training or support.

Another method for controlling data quality is self-agreement. Annotators can assess their own performance by comparing the annotations they produce at different points in time. This allows them to review their work and detect any inconsistencies or errors that may have been missed during initial annotation.

Finally, building efficient quality-control tools is essential to ensuring quick checks and corrections. Data repair involves identifying errors in the dataset and determining the best way to remediate them effectively. Quality controls also ensure accurate input into a table, record or control.

By implementing these three methods for increasing efficiency in annotation processes, we can ensure high-quality results across all stages of a project. The use of gold sets, consensus and auditing are other ways that are helpful when creating high-quality training datasets so as to achieve accuracy and consistency prior to starting a machine learning project.

The Role Of Human Annotation In The Process

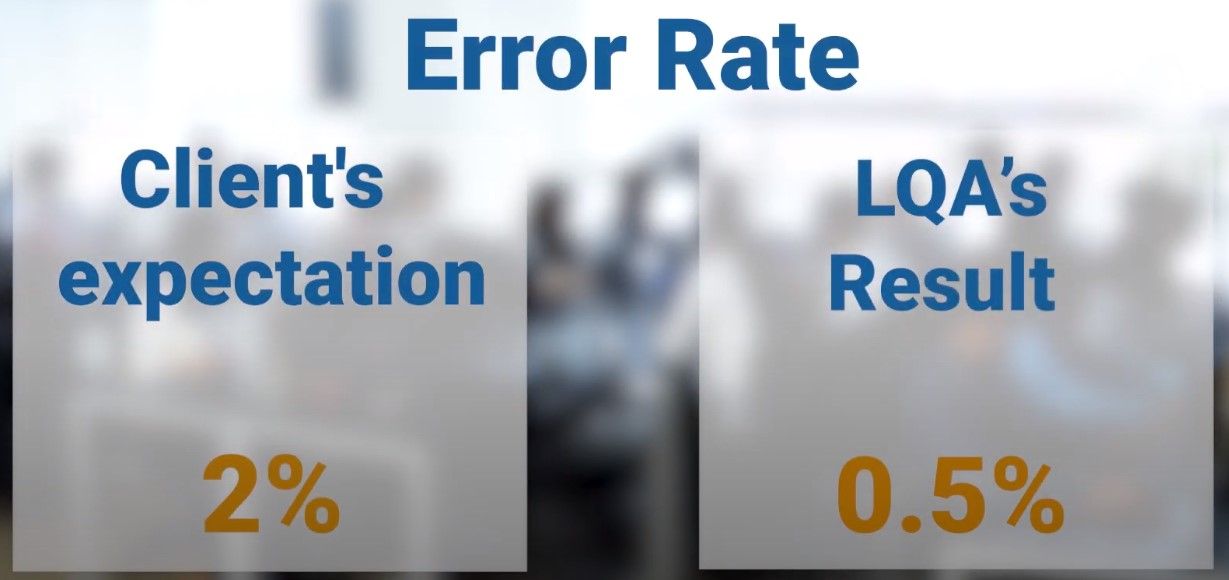

Human annotation plays a critical role in modern machine learning processes. According to Bloomberg, human annotation at scale has been their go-to strategy for years. In a workshop held for experienced annotators, collaborations showed up to 80% savings in annotation costs. This not only highlights the cost-effectiveness of human annotation but also its efficiency and accuracy when compared to automated tools.

High-quality human annotations are necessary for creating effective machine learning-driven stream processing systems. It is essential to define the scope and parameters of an annotation project before undertaking it to achieve specific goals better. Additionally, the iterative deep learning strategy is becoming increasingly popular among data analysts as it saves on human labor and accelerates the training process.

On top of these factors, there are additional capabilities that set humans apart from machines. Humans have unique cognitive abilities such as abstract reasoning, complex problem-solving skills, creativity, and social intelligence that enable them to understand contexts much better than machines can. These factors give humans an upper hand when performing critical tasks like semantic segmentation or natural language processing.

While automated tools may improve efficiency in handling big data sets quickly; they cannot entirely replace humans' cognitive abilities in complex scenarios requiring context understanding or creativity yet. Human annotations deliver reliable results with lesser errors even though it may be more time-consuming; thus making reliable predictions over processed information reasonably achievable—redefining the data-annotation process by embracing parallel cooperation between both forms of analysis could result in higher production rates without compromising reliability over affordability and scalability concerns needed by modern industries today.

Streamlining The Annotation Workflow For Increased Efficiency

One of the best ways to increase efficiency in the data annotation process is by streamlining the workflow. This can be done through a variety of means, such as utilizing cloud collaboration, automating repetitive tasks through data science pipelines, and implementing predictive tooling with sophisticated features.

Customized reporting is also crucial when it comes to streamlining the annotation workflow. By focusing on quality and throughput metrics, businesses can identify areas for improvement and make cyclical changes to continually optimize their processes.

To further optimize your workflow, start by stating your desired outcomes clearly and reconfirming that each step in the process is necessary. Simplify or optimize tasks where possible to reduce completion time and automate repetitive tasks wherever you can.

Analyzing your current workflow is also key to maximizing efficiency. Make use of a business process management (BPM) tool to improve consistency across multiple workflows and business groups.

By streamlining the data annotation process, businesses can remove obstacles and take advantage of profit opportunities more quickly. With greater automation and a focus on efficiency improvements over time, those in charge of data annotation workflows should achieve increasingly accurate results with less manual input over time – all while avoiding costly errors along the way.

Training And Empowering Annotation Teams For Success

Workflow automation plays a vital role in successful data annotation. It enables teams to focus on complex aspects of the process instead of wasting time on repetitive and monotonous tasks. Consistency and accuracy in annotation are crucial for creating high-quality training data for machine learning models, and automation can help achieve these goals.

Keymakr is one such tool that allows for pre-annotation by machine models, streamlining the manual auditing process, saving time and effort. Large teams need to annotate data at unprecedented scales for effective searches and extraction of network data, but siloed activity negatively impacts overall efficiency. Process mapping visually communicates how a process works and helps identify bottlenecks hindering optimization opportunities.

It is essential to train annotation teams to handle these sophisticated tools effectively. Video annotation is an important method used to train computer vision systems that form the backbone of AI applications today. Developing in-house tech solutions may not be a scalable solution due to operational challenges; outsourcing annotation services can prove helpful here.

By leveraging automated workflows, investing in upskilling annotation staff members, adopting video-centric approaches where possible, organizations stand better chances at redefining their data annotations processes towards high-quality outputs faster than competition - driving better business outcomes over the long-term horizon.

Measuring The Success Of Your Annotation Process

When it comes to measuring the success of your data annotation process, there are several factors to consider. The measures for BDA (Big Data Analytics) processes are typically categorized into efficiency, flexibility, effectiveness, and performance. This means you should be looking at how quickly you can annotate the data, how easily changes can be made, how accurate the annotations are in meeting your objectives and how well your system performs overall.

To ensure accuracy and consistency while creating high-quality training data sets for machine learning models, there are standard methods that can be used such as gold sets, consensus techniques or audits. These measures enable you to more effectively gauge the quality of your data annotations.

In addition to these quality measures, workflows also play a key role in facilitating success with data annotations. Workflows guide the journey of raw or unstructured data from collection all the way through to final delivery. By optimizing this journey - ensuring all tasks are interconnected and efficient - it becomes possible to improve annotation efficiency for deep learning algorithms by providing larger amounts of annotated datasets.

Overall, measuring the success of your annotation process requires a robust approach with multiple metrics taken into account. By focusing on both quality control measures and optimizing workflows as needed for achieving cost-effective results while improving performance on increasingly complex tasks that leverage deep learning technology increasingly found across various sectors today which rely heavily on the accuracy of collected datasets over time.

Comments ()